Generative Agents: Interactive Simulacra of Human Behavior

https://arxiv.org/pdf/2304.03442.pdf

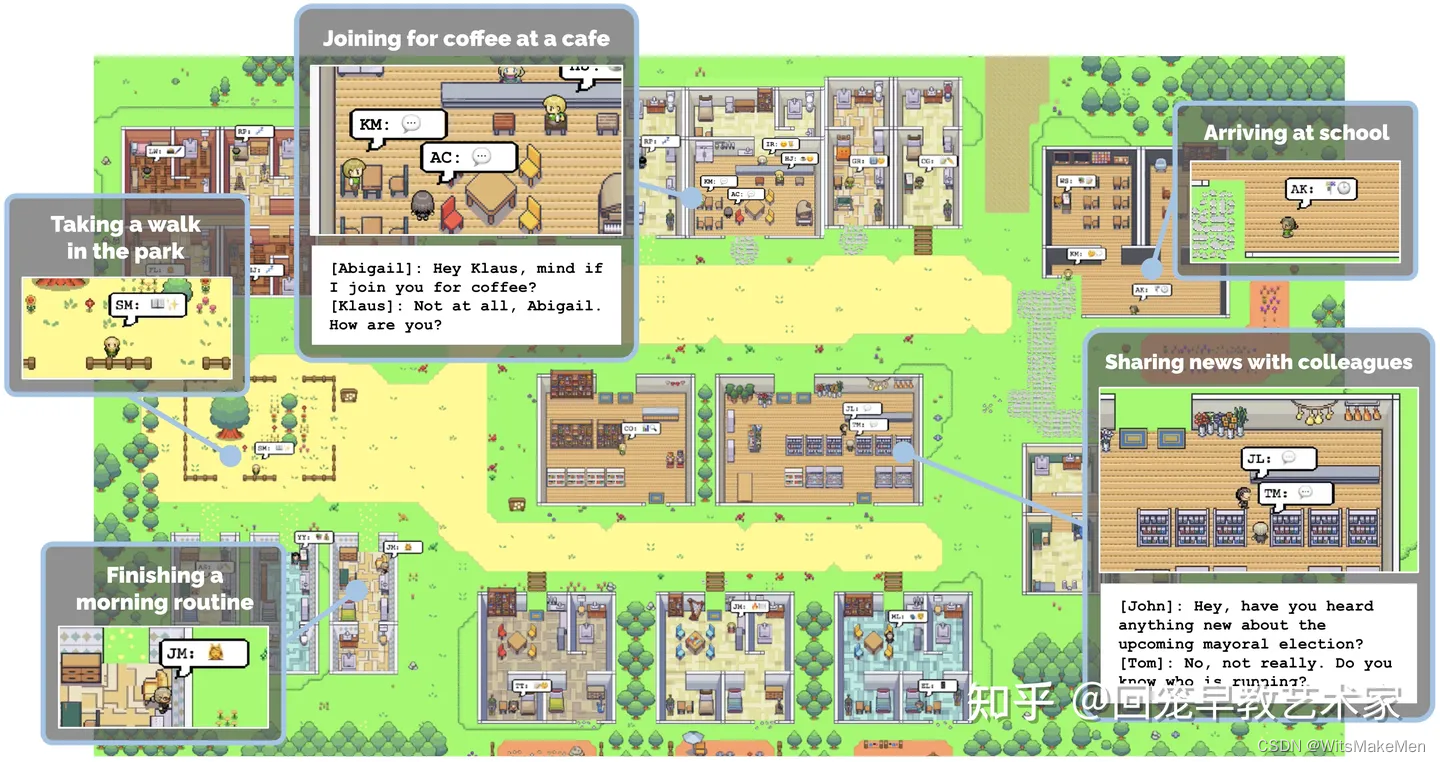

Figure 1: Generative agents create believable simulacra of human behavior for interactive applications. In this work, we demonstrate generative agents by populating a sandbox environment, reminiscent of The Sims, with twenty-five agents. Users can observe and intervene as agents they plan their days, share news, form relationships, and coordinate group activities.

生成式智能体为交互式应用程序创建了具有可信人类行为的模拟品。本文演示了生成式智能体的工作原理,25个智能体置于与《模拟人生》类似的沙盒环境中进行实验。用户可以观察和干预智能体计划他们的日常活动、分享新闻、建立关系并协调团体活动的过程。

Believable proxies of human behavior can empower interactive applications ranging from immersive environments to rehearsal spaces for interpersonal communication to prototyping tools. In this paper, we introduce generative agents—computational software agents that simulate believable human behavior. Generative agents wake up, cook breakfast, and head to work; artists paint, while authors write; they form opinions, notice each other, and initiate conversations; they remember and reflect on days past as they plan the next day. To enable generative agents, we describe an architecture that extends a large language model to store a complete record of the agent’s experiences using natural language, synthesize those memories over time into higher-level reflections, and retrieve them dynamically to plan behavior. We instantiate generative agents to populate an interactive sandbox environment inspired by The Sims, where end users can interact with a small town of twenty five agents using natural language. In an evaluation, these generative agents produce believable individual and emergent social behaviors: for example, starting with only a single user-specified notion that one agent wants to throw a Valentine’s Day party, the agents autonomously spread invitations to the party over the next two days, make new acquaintances, ask each other out on dates to the party, and coordinate to show up for the party together at the right time. We demonstrate through ablation that the components of our agent architecture—observation, planning, and reflection—each contribute critically to the believability of agent behavior. By fusing large language models with computational, interactive agents, this work introduces architectural and interaction patterns for enabling believable simulations of human behavior.

可信的人类行为代理(大概指的是ChatGPT之类的proxy)可以为交互式应用程序提供支持,包括沉浸式环境、人际沟通排练空间和原型设计工具。本文介绍生成式智能体——一种计算软件智能体,来模拟可信的人类行为。生成式智能体起床、做早餐,然后去上班;艺术家画画,作者写作;他们形成观点,互相注意,开始交谈;他们记得和反思过去的日子,规划未来的计划。为了实现这些智能体,我们介绍了一种架构,它将一个大型语言模型扩展到使用自然语言存储智能体经验的完整记录,并随着时间的推移将这些记忆综合成更高层次的反思,并动态地检索它们以规划行为。我们将智能体实例化,并安排在受《模拟人生》启发的交互式沙箱环境里,用户可以使用自然语言与25个智能体的小镇互动。在评估中,这些智能体产生可信的个体和涌现的社会行为:例如,仅从一个由用户指定的概念——一个智能体想要在情人节举办聚会——开始,智能体会在接下来的两天里自主地向聚会邀请新的认识的人,彼此约会参加聚会,并协调一起在正确的时间出现参加聚会。我们通过实验证明,我们的智能体架构的组件:观察、规划和反思,各自对智能体行为的可信度都做出了重要的贡献。通过将大型语言模型与计算机交互式智能体融合,本文介绍了用于实现可信人类行为模拟的架构和交互模式。

- Introduction

1106字

How might we craft an interactive artificial society that reflects believable human behavior? From sandbox games such as The Sims to applications such as cognitive models [21] and virtual environments [9, 58], for over four decades researchers and practitioners have envisioned computational agents that can serve as believable proxies of human behavior. In these visions, computationallypowered agents act consistently with their past experiences and react believably to their environments. Such simulations of human behavior could populate virtual spaces and communities with realistic social phenomena [26, 79], train people how to handle rare yet difficult interpersonal situations [43, 51, 93], test social science theories [11, 45], craft model human processors for theory and usability testing [21, 38, 50], power ubiquitous computing applications [30] and social robots [9, 13], and underpin non-playable game characters [58, 84] that can navigate complex human relationships in an open world.

我们如何打造一个交互式人造社会,以反映可信的人类行为?从《模拟人生》这样的沙盒游戏到认知模型[21]和虚拟环境等应用[9,58],研究人员和实践者已经设想了可作为人类行为可信代理的计算智能体已有四十多年时间。在这些设想中,计算智能体以之前经验为基础,与他们所处的环境保持一致的行动,对环境作出可信的反应。这种人类行为的模拟可以在虚拟空间和社区中填充现实的社会现象[26, 79],训练人们如何应对罕见但困难的人际关系[43, 51, 93],测试社会科学理论[11, 45],制作理论和可用性测试的模型人类处理器[21, 38, 50],驱动普遍计算应用[30]和社交机器人[9, 13],并支撑可导航复杂人际关系的开放世界NPC[58,84]。

However, the space of human behavior is vast and complex [84, 108]. Despite striking progress in large language models [17] that can simulate believable human behavior at a single time point [38, 79], fully general agents that ensure long-term coherence would be better suited by architectures that manage constantly-growing memories as new interactions, conflicts, and events arise and fade over time, while handling cascading social dynamics that unfold between multiple agents. Success requires an approach that can retrieve relevant events and interactions over a long period, reflect on those memories to generalize and draw higher-level inferences, and apply that reasoning to create plans and reactions that both make sense in the moment and in the longer-term arc of the agent’s behavior.

然而,人类行为的空间是巨大而复杂的[84, 108]。尽管大语言模型[17]在模拟单一时间点的可信人类行为方面取得了显著进展[38,79],但确保长期连贯性的完全通用的智能体可能更适合采用能够处理不断增长的记忆的架构,这些记忆随着时间的推移而产生和消失,并处理在多个智能体之间展开的级联社会动力学。要做到这些,需要一种方法,它可以检索长期内的相关事件和交互,反思这些记忆以进行泛化和制定更高级别的推理,并应用推理来创建既在当时又符合智能体行为的长期趋势。

In this paper, we introduce generative agents—agents that draw on generative models to simulate believable human behavior—and demonstrate that they produce believable simulacra of both individual and emergent group behavior. Generative agents draw a wide variety of inferences about themselves, other agents, and their environment; they create daily plans that reflect their characteristics and experiences, act out those plans, react, and re-plan when appropriate; they respond when the end user changes their environment or commands them in natural language. For instance, generative agents turn off the stove when they see that their breakfast is burning, wait outside the bathroom if it is occupied, and stop to chat when they meet another agent they want to talk to. A society full of generative agents is marked by emergent social dynamics where new relationships are formed, information diffuses, and coordination arises across agents.

在本文中,我们介绍了生成式智能体-利用生成式模型来模拟可信的人类行为,并展示它们产生了可信的个体和群体行为的模拟。生成式智能体从自身,其他代理和他们的环境中得出各种推论;他们创建反映其特征和经验的日常计划,执行这些计划,适时地做出反应和重新规划;当最终用户改变他们的环境或用自然语言命令时,他们会做出反应。例如,生成式代理看到早餐正在烧焦时,会关闭炉子,在卫生间外等待,如果有另一个代理想要交谈,它们会停下来聊天。一个充满生成式代理的社会是由新的社交动态标志着的,其中形成新的关系,信息扩散,协调出现在代理之间。

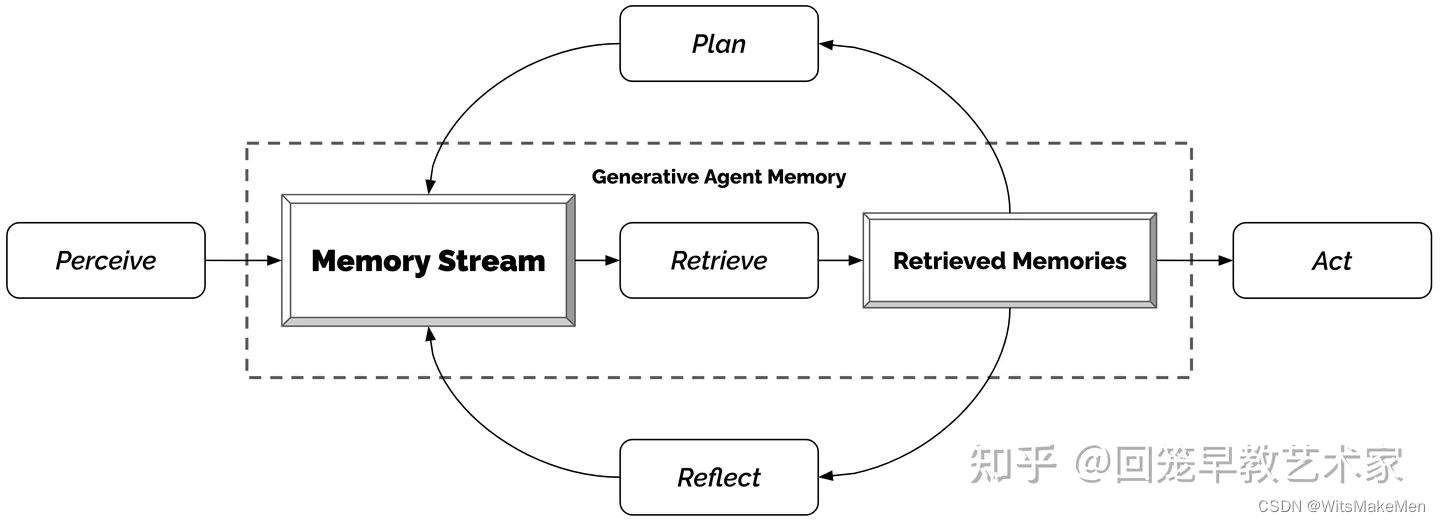

To enable generative agents, we describe an agent architecture that stores, synthesizes, and applies relevant memories to generate believable behavior using a large language model. Our architecture comprises three main components. The first is the memory stream, a long-term memory module that records, in natural language, a comprehensive list of the agent’s experiences. The retrieval model combines relevance, recency, and importance to surface the records that are needed to inform the agent’s moment-to-moment behavior. The second is reflection, which synthesizes memories into higherlevel inferences over time, enabling the agent to draw conclusions about itself and others to better guide its behavior. The third is planning, which translates those conclusions and the current environment into high-level action plans and then recursively into detailed behaviors for action and reaction. These reflections and plans are fed back into the memory stream to influence the agent’s future behavior.

为了使生成式代理变得可能,我们描述了一个代理架构,可以使用大型语言模型存储,合成和应用相关记忆,以生成可信的行为。我们的架构包括三个主要组件。第一个是内存流,一个长期记忆模块,以自然语言记录代理的完整经历。检索模型结合相关性,新旧程度和重要性来提取需要告知代理的即时行为的记录。第二个是反思,它将记忆合成为随着时间推移变得更高级别的推论,使代理能够对自己和其他人得出结论,以更好地指导它的行为。第三个是规划,它将这些结论和当前环境转化为高级别的行动计划,然后递归地转化为详细的行为和反应。这些反思和计划反馈到内存流中,以影响代理的未来行为。

This architecture suggests applications in multiple domains, from role-play and social prototyping, to virtual worlds and games. In social role-play scenarios (e.g. interview preparation), a user could safely rehearse difficult, conflict-laden conversations. When prototyping social platforms, a designer could go beyond temporary personas to prototype dynamic, complex interactions that unfold over time. For the purposes of this paper, we focus on the ability to create a small, interactive society of agents inspired by games such as The Sims.[1] By connecting our architecture to the ChatGPT large language model [76], we manifest a small society of twenty five agents in a game environment. End users can observe and interact with these agents. If an end user or developer wanted the town to host an in-game Valentine’s Day party, for example, traditional game environments would require scripting tens of characters’ behavior manually. We demonstrate that, with generative agents, it is sufficient to simply tell one agent that she wants to throw a party. Despite many potential points of failure—the party planner must remember to tell other agents about the party, attendees must remember the invitation, those who remember must decide to actually show up, and other possible points of failure—agents in our environment succeed. They spread the word about the party and then show up, with one agent even asking another agent on a date to the party, all from this single user-generated seed suggestion.

此架构在多个领域中都有应用,从角色扮演和社交原型到虚拟世界和游戏等。在社交角色扮演场景中(例如,面试准备),用户可以安全地排练困难的、充满冲突的谈话。当原型化社交平台时,设计师可以超越临时人物来原型化随着时间推移而展开的动态复杂互动。对于本文的目的,我们专注于创建一个受《模拟人生》等游戏启发的小型交互式代理社会。通过将我们的架构连接到ChatGPT大型语言模型[76],我们在游戏环境中制作了一个由25个代理构成的小型社会。最终用户可以观察和与这些代理互动。例如,如果最终用户或开发人员希望在游戏中举办情人节派对,传统的游戏环境需要手动编写数十个角色的行为。我们证明,通过生成式代理,只需告诉一个代理她想要举办聚会就足够了。尽管存在很多潜在的失败点-派对策划者必须记得告知其他代理派对信息,参与者必须记得邀请,那些记得的人必须决定真的出现,以及其他可能存在的失败点-但我们环境中的代理成功了。他们传播了派对信息,然后出现了,其中一个代理甚至邀请另一个代理参加派对,所有这些都来自于这个单一的用户生成的种子建议。

We conducted two evaluations of generative agents: a controlled evaluation to test whether the agents produce believable individual behaviors in isolation, and an end-to-end evaluation where the generative agents interacted with each other in open-ended ways over two days of game time to understand their stability and emergent social behaviors. In the technical evaluation, we leverage a methodological opportunity to evaluate an agent’s knowledge and behavior by “interviewing” it in natural language to probe agents’ ability to stay in character, remember, plan, react, and reflect accurately. We compared several ablations that limit agents’ access to memory, reflection, and planning. We observe that each of these components is critical to strong performance across these interview tasks. Across the technical and the end-to-end evaluation, the most common errors arose when the agent failed to retrieve relevant memories, fabricated embellishments to the agent’s memory, or inherited overly formal speech or behavior from the language model.

我们进行了两个生成式智能体的评估:一个控制评估,以测试智能体在隔离情况下产生可信的个体行为,以及一个端到端的评估,其中生成式智能体以开放方式相互交互并在两天的游戏时间内产生的稳定性和涌现性社会行为。在技术评估中,我们利用了一种方法论机会(methodological opportunity),通过自然语言对“采访”代理来评估代理的知识和行为,以探测代理在表演、记忆、规划、反应和反思方面的能力。我们比较了几种削弱代理访问内存、反思和规划能力的模型。我们观察到,这些组件中的每一个都对这些采访任务的强大表现至关重要。在技术和端到端的评估中,最常见的错误是代理无法检索到相关记忆,虚构对代理的记忆的润色,或从语言模型中继承过于正式的语言或行为。

In sum, this paper provides the following contributions:

Generative agents, believable simulacra of human behavior that are dynamically conditioned on agents’ changing experiences and environment.

A novel architecture that makes it possible for generative agents to remember, retrieve, reflect, interact with other agents, and plan through dynamically evolving circumstances. The architecture leverages the powerful prompting capabilities of large language models and supplements those capabilities to support longer-term agent coherence, the ability to manage dynamically-evolving memory, and recursively produce more generations.

Two evaluations (a controlled evaluation and end-to-end evaluation) that establish causal effects of the importance of components of the architecture, as well as identify breakdowns arising from, e.g., improper memory retrieval.

Discussion of the opportunities and ethical and societal risks of generative agents in interactive systems. We argue that these agents should be tuned to mitigate the risk of users forming parasocial relationships, logged to mitigate risks stemming from deepfakes and tailored persuasion, and applied in ways that complement rather than replace human stakeholders in design processes.

总之,本文提供以下贡献:

生成式智能体,可信的人类行为的模拟,它们是动态地受到智能体的不断变化的经验和环境的影响。

一个新颖的架构,使生成式智能体能够通过动态演变的情况记忆、检索、反思、相互交互和规划。这个架构充分利用了大型语言模型的强大提示能力,并补充这些能力来支持更长期的智能体一致性,管理动态演变的记忆并递归地产生更多的智能体。

两个评估(一个控制评估和端到端的评估),建立了架构组成部分的因果效应,同时也确定了由于不当记忆检索等原因引起的故障。

探讨了生成式智能体在交互系统中的机遇、以及伦理和社会风险。我们认为,这些智能体应该适当调整,以减轻用户形成寄居者关系的风险,记录以减轻源自深度伪造和量身定制的说服的风险,并以补充而不替代人类利益相关者参与设计过程的方式运用。

- Related work

In this section, we reflect on the prior literature in human-AI interaction and situate within its canon the agenda of building believable proxies of human behavior. This agenda, once hailed as a north star in interaction, game, and artificial intelligence communities [9, 58, 84, 85], has remained challenging due to the complexity of human behavior [16, 108]. We synthesize this research to suggest that large language models, though not sufficient by themselves, open up a new angle for creating believable agents when leveraged using the appropriate architecture.

在这一部分中,我们回顾了人类 - 人工智能交互领域的先前文献,并将其纳入其中,以建立可信的人类行为代理议程。这个议程曾经在交互、游戏和人工智能社区中被誉为指南针 [9,58,84,85],但由于人类行为的复杂性,仍然具有挑战性 [16,108]。我们综合了这个研究,提出大型语言模型虽然单独使用并不足够,但当与适当的架构结合使用时,它们可以为创建可信代理开辟新的视角。

2.1 Human-AI Interaction

Interactive artificial intelligence systems aim to combine human insights and capabilities in computational artifacts that can augment their users [3, 29]. A long line of work has explored ways to allow users to interactively specify model behavior. For instance, Crayons demonstrated an early vision of interactive machine learning, allowing non-expert users to train classifiers [29]. Further work helped to articulate how end users might describe their classification goals to the system through examples [33] and/or demonstration [31]. More recent work has extended these explorations to deep learning [62] and prompt-based authoring [49, 66, 106].

交互式人工智能系统旨在将人类的洞察力和能力与计算机工具结合起来,以增强用户的能力[3, 29]。长期以来,许多研究致力于探索允许用户交互式指定模型行为的方法。例如,Crayons展示了交互式机器学习的早期愿景,允许非专家用户训练分类器[29]。进一步的研究则帮助说明了最终用户如何通过示例[33]和/或演示[31]向系统描述自己的分类目标。最近的研究将这些探索扩展到了深度学习[62]和基于提示的创作[49, 66, 106]。

Meanwhile, a persistent thread of research has advanced the case for language- and agent-based interaction in human-computer interaction. Formative work such as SHRDLU [103] and ELIZA [102] demonstrated the opportunity and the risks of natural language interaction with computing systems. As research progressed, it became clear that autonomous agents could offer new metaphors for delegation and interaction [67], but the delegation lines between humans and agents have continued to be debated and refined [46, 88, 89]. Recently, this technology has become stable enough that it has become possible for agents to interact via natural language in large and complex online social environments (e.g., [54]). Natural language interaction offers a novel modality that can extend user abilities in domains such as photo editing [2, 34, 64] and code editing [87].

同时,有一种持续的研究方法推进了语言和基于智能体的人机交互的案例。SHRDLU [103]和ELIZA [102]等开创性工作展示了与计算系统进行自然语言交互的机会和风险。随着研究的进展,AI智能体可以提供新的委派和交互隐喻[67],但人与智能体之间的边界仍在继续讨论和完善[46, 88, 89]。最近,这项技术已经足够稳定,以至于智能体可以在大型复杂的在线社交环境中通过自然语言进行交互(例如[54])。自然语言交互提供了一种新颖的模态,可以在照片编辑[2, 34, 64]和代码编辑[87]等领域扩展用户的能力。

We convene these threads of work to show that we can now create agents that proxy human behavior for interactive systems, and interact with them via natural language. In doing so, this work re-opens the door to examining foundational HCI questions around cognitive models such as GOMS and KLM [21, 22], around prototyping tools [79], and around ubiquitous computing applications [25, 30, 100].

2.2 Believable Proxies of Human Behavior

Prior literature has described believability, or believable agents, as a central design and engineering goal. Believable agents are designed to provide an illusion of life and present a facade of realism in the way they appear to make decisions and act on their own volition, similar to the characters in Disney movies [9, 95]. These agents can populate and perceive an open-world environment like the one we inhabit [9, 58], and strive to behave in ways that exhibit emergent behaviors grounded in social interactions with users or other agents with the aim of becoming believable proxies of our behavior in hypothetical simulations of individuals and communities [19, 35, 70]. Historically, these agents were developed in the context of intelligent game NPCs [58, 84]. Creating NPCs with believable behavior, if possible, could enhance player experiences in games and interactive fictions by enabling emergent narratives [7, 15, 48, 92] and social interactions with the agents [110]. However, more importantly, game worlds provide increasingly realistic representations of real-world affordances, and as observed by Laird and van Lent in 2001, these simulated worlds offer accessible testbeds for developers of believable agents to finesse the agents’ cognitive capabilities without worrying about implementing robotics in the real world or creating simulation environments from scratch [58, 84].

先前的文献已经将可信度或可信智能体描述为中心设计和工程目标。可信智能体被设计为提供生命的幻觉,并以类似迪士尼电影中的角色的方式呈现出真实感,决策和表现出的自主性,[9, 95]。这些智能体可以在类似于我们所居住的一样的开放世界环境中进行人口普查和感知[9, 58],并努力表现出以社交互动为基础的涌现行为,以成为我们在人物和社区虚拟仿真中的信任代理[19, 35, 70]。历史上,这些智能体是在智能游戏NPC [58, 84]的背景下开发的。如果可能创建具备可信行为的NPC,将增强游戏和交互小说中玩家经验,从而使涌现的叙事和智能体之间的社交互动成为可能[7, 15, 48, 92]。然而,更重要的是,游戏世界提供越来越现实的现实世界的表现,并且正如Laird和van Lent在2001年所观察到的那样,这些模拟世界为可信智能体的开发人员提供了可访问的测试平台,以微调智能体的认知能力而不必担心在现实世界中实现机器人或从头开始创建仿真环境[58, 84]。

A diverse set of approaches to creating believable agents emerged over the past four decades. In implementation, however, these approaches often simplified the environment or dimensions of agent behavior to make the effort more manageable [16, 72]. Rule-based approaches, such as finite-state machines [90, 96] and behavior trees [40, 53, 81], account for the brute force approach of humanauthoring the agent’s behavior [70]. They provide a straightforward way of creating simple agents that is still the most dominant approach today [68, 73, 109], and can even handle rudimentary social interactions, as shown in simulation games such as Mass Effect [12] and The Sims [6] series. Nonetheless, manually crafting behavior that can comprehensively address the breadth of possible interactions in an open world is untenable. This means that the resulting agent behaviors may not fully represent the consequences of their interactions [69–71], and cannot perform new procedures that were not hard-coded in their script [90, 96]. On the other hand, prevalent learning-based approaches for creating believable agents, such as reinforcement learning, have overcome the challenge of manual authoring by letting the agents learn their behavior, and have achieved superhuman performance in recent years in games such as AlphaStar for Starcraft [98] and OpenAI Five for Dota 2 [10]. However, their success has largely taken place in adversarial games with readily definable rewards that a learning algorithm can optimize for. They have not yet addressed the challenge of creating believable agents in an open world [39, 73, 90].

在过去的四十年中,出现了一系列不同的方法来创建可信智能体。但是,在实施过程中,这些方法通常会简化智能体行为的环境或维度,以使努力更易管理[16, 72]。基于规则的方法,例如有限状态机[90, 96]和行为树[40, 53, 81],解决了人工指定智能体行为的蛮力方法[70]。它们为创建简单智能体提供了一种简单明了的方式,仍然是目前最主流的方法[68, 73, 109],甚至可以处理基本的社交互动,如模拟游戏《质量效应》[12]和《模拟人生》[6]系列中所展示的那样。然而,在开放世界中手工制作行为以全面应对可能的交互范围是不可行的。这意味着产生的代理行为可能并不能充分地代表它们交互的后果[69–71],也不能执行在其脚本中未硬编码的新程序[90, 96]。另一方面,用于创建可信智能体的普遍基于学习的方法,例如强化学习,通过让智能体学习其行为,已经克服了手工编写的挑战,并在最近的游戏中取得了超人类的表现,例如《星际争霸》的AlphaStar[98]和《刀塔英雄》的OpenAI Five[10]。然而,它们的成功主要发生在对抗游戏中,其具有简单的定义奖励,学习算法可以优化。它们尚未解决在开放世界中创建可信智能体的挑战[39, 73, 90]。

Cognitive architectures in computation, pioneered by Newell, aimed to build the infrastructure for supporting a comprehensive set of cognitive functions [75] that suited the all-encompassing nature of believable agents held in its original vision. They fueled some of the earliest examples of believable agents. For instance, Quakebot-SOAR [59] and ICARUS [24, 63] generated NPCs in firstperson shooter games, while TacAir-SOAR [80] generated pilots in aerial combat training simulations. The architectures used by these agents differed (Quakebot- and TacAir-SOAR relied on SOAR [60], while ICARUS relied on its own variation that was inspired by SOAR and ACT-R [5]), but they shared the same underlying principle [61]. They maintained short-term and long-term memories, filled these memories with symbolic structures, and operated in perceive-plan-act cycles, dynamically perceiving the environment and matching it with one of the manually crafted action procedures [57, 96]. Agents created using cognitive architectures aimed to be generalizable to most, if not all, open-world contexts and exhibited robust behavior for their time. However, their space of action was limited to manually crafted procedural knowledge, and they did not offer a mechanism through which the agents could be inspired to seek new behavior. As such, these agents were deployed mostly in non-open-world contexts such as first-person shooter games [24, 59] or blocks worlds [63].

计算机认知架构,由Newell开创,旨在构建支持全面认知功能[75] 的基础设施,适应了可信智能体据其最初设想的全方位性质。它们推动了一些最早的可信智能体示例。例如,Quakebot-SOAR [59]和ICARUS [24, 63] 生成第一人称射击游戏中的NPC,而TacAir-SOAR [80] 生成空战训练模拟中的飞行员。这些智能体所使用的架构不同(Quakebot-和TacAir-SOAR依赖于SOAR[60],而ICARUS依靠其自己的变体,受SOAR[5]和ACT-R[5]的启发),但它们共享相同的基本原则[61]。它们保持短期和长期记忆,将这些记忆填充符号结构,并在perceive-plan-act周期中运行,动态感知环境并将其与手动制作的行动过程之一匹配[57, 96]。使用认知架构创建的智能体旨在适用于大多数开放世界上下文,并展示其时段坚韧行为。但是,它们的行动空间仅限于手动制作的程序化知识,并且它们没有提供启发智能体寻求新行为的机制。因此,这些智能体主要部署在非开放世界上下文中,例如第一人称射击游戏[24, 59]或积木世界[63]。

Today, creating believable agents as described in its original definition remains an open problem [84, 108]. Many have moved on, arguing that although existing approaches for creating believable agents might be cumbersome and limited, they are good enough to support existing gameplay and interactions [23, 74, 108]. Our argument is that large language models offer an opportunity to re-examine these questions, provided that we can craft an effective architecture to synthesize memories into believable behavior. We offer a step toward such an architecture in this paper.

如今,根据其最初的定义创建可信智能体仍然是一个未解决的问题[84, 108]。许多人已经放弃了,认为尽管创建可信智能体的现有方法可能是繁琐和有限的,但它们足以支持现有的游戏玩法和互动[23, 74, 108]。我们的观点是,大型语言模型提供了重新审视这些问题的机会,前提是我们可以设计出一个有效的架构来将记忆合成为可信行为。本文为此提供了一种解决方案的步骤。

2.3 Large Language Models and Human Behavior

Generative agents leverage a large language model to power their behavior. The key observation is that large language models encode a wide range of human behavior represented in their training data [14, 17]. If prompted with a narrowly defined context, the models can be used to generate believable behavior. Recent work has demonstrated the efficacy of this approach. For instance, Social Simulacra used a large language model to generate users that would populate new social computing systems to prototype their emergent social dynamics [79]. This approach used a prompt chain [105, 106] to generate short natural language descriptions of personas and their behaviors as they appear in the system being prototyped. Other empirical studies have replicated existing social science studies [45], political surveys [91], and generated synthetic data [38]. Large language models have also been used to generate interactive human behavior for users to engage with. In gaming, for instance, these models have been employed to create interactive fiction [36] and text adventure games [20]. With their ability to generate and decompose action sequences, large language models have also been used in planning robotics tasks [47]. For example, when presented with a task, such as picking up a bottle, the model is prompted to break down the task into smaller action sequences, such as heading to the table where the bottle is located and picking it up.

We posit that, based on the work summarized above, large language models can become a key ingredient for creating believable agents. The existing literature largely relies on what could be considered first-order templates that employ few-shot prompts [37, 65] or chain-of-thought prompts [99]. These templates are effective in generating behavior that is conditioned solely on the agent’s current environment (e.g., how would a troll respond to a given post, what actions would a robot need to take to enter a room given that there is a door). However, believable agents require conditioning not only on their current environment but also on a vast amount of past experience, which is a poor fit (and as of today, impossible due to the underlying models’ limited context window) using first-order prompting. Recent studies have attempted to go beyond first-order prompting by augmenting language models with a static knowledge base and an information retrieval scheme [52] or with a simple summarization scheme [104]. This paper extends these ideas to craft an agent architecture that handles retrieval where past experience is dynamically updated at each time step and mixed with agents’ current context and plans, which may either reinforce or contradict each other.

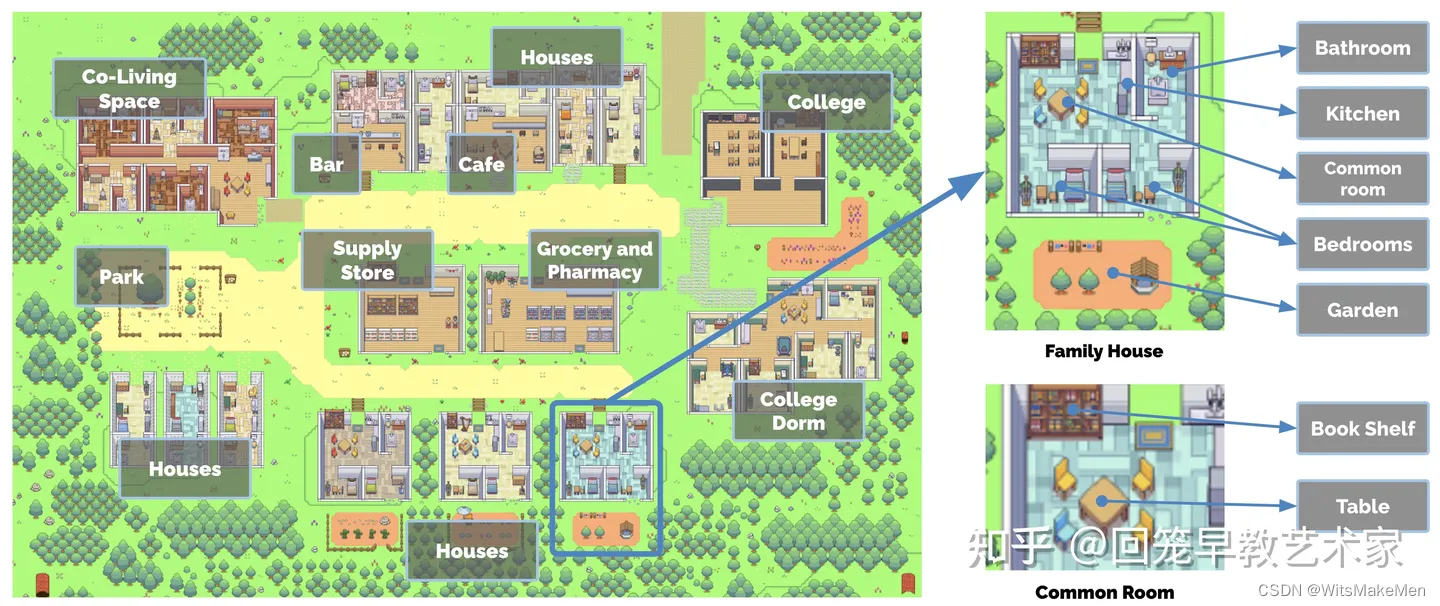

Figure 2: The Smallville sandbox world, with areas labeled. The root node describes the entire world, children describe areas (e.g., houses, cafe, stores), and leaf nodes describe objects (e.g., table, bookshelf). Agent remember a subgraph reflecting the parts of the world they have seen, in the state that they saw them. Smallville沙盒世界,附有标注区域。根节点描述整个世界,子节点描述区域(例如,房屋、咖啡厅、商店),叶节点描述物体(例如,桌子、书架)。智能体记得一个子图,反映了他们看到的世界部分,以他们所看到的状态。

3 GENERATIVE AGENT BEHAVIOR AND INTERACTION

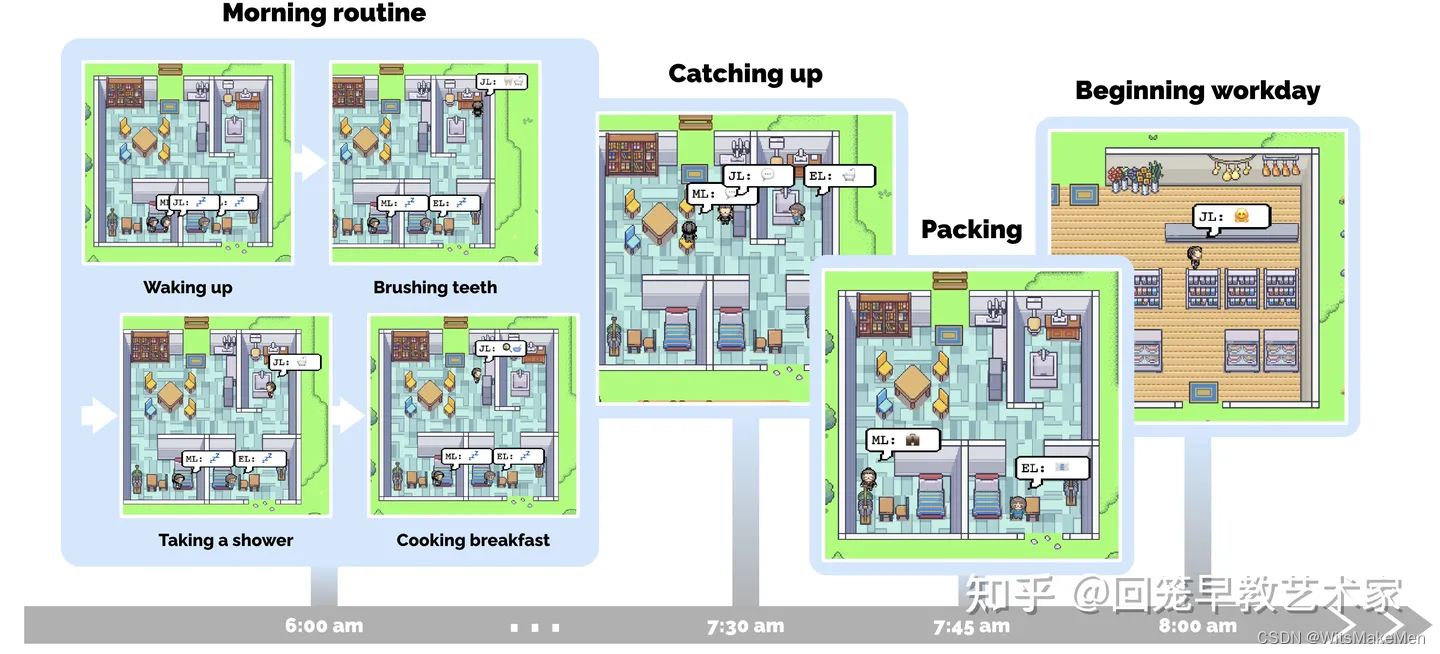

Figure 3: A morning in the life of a generative agent, John Lin. John wakes up around 6 am and completes his morning routine, which includes brushing his teeth, taking a shower, and eating breakfast. He briefly catches up with his wife, Mei, and son, Eddy, before heading out to begin his workday. 一个生成事智能体John Lin的早晨。约翰大约在上午6点醒来并完成他的早晨例行事项,包括刷牙、洗澡和吃早餐。他短暂地与妻子Mei和儿子Eddy交流,然后开始新的工作日。

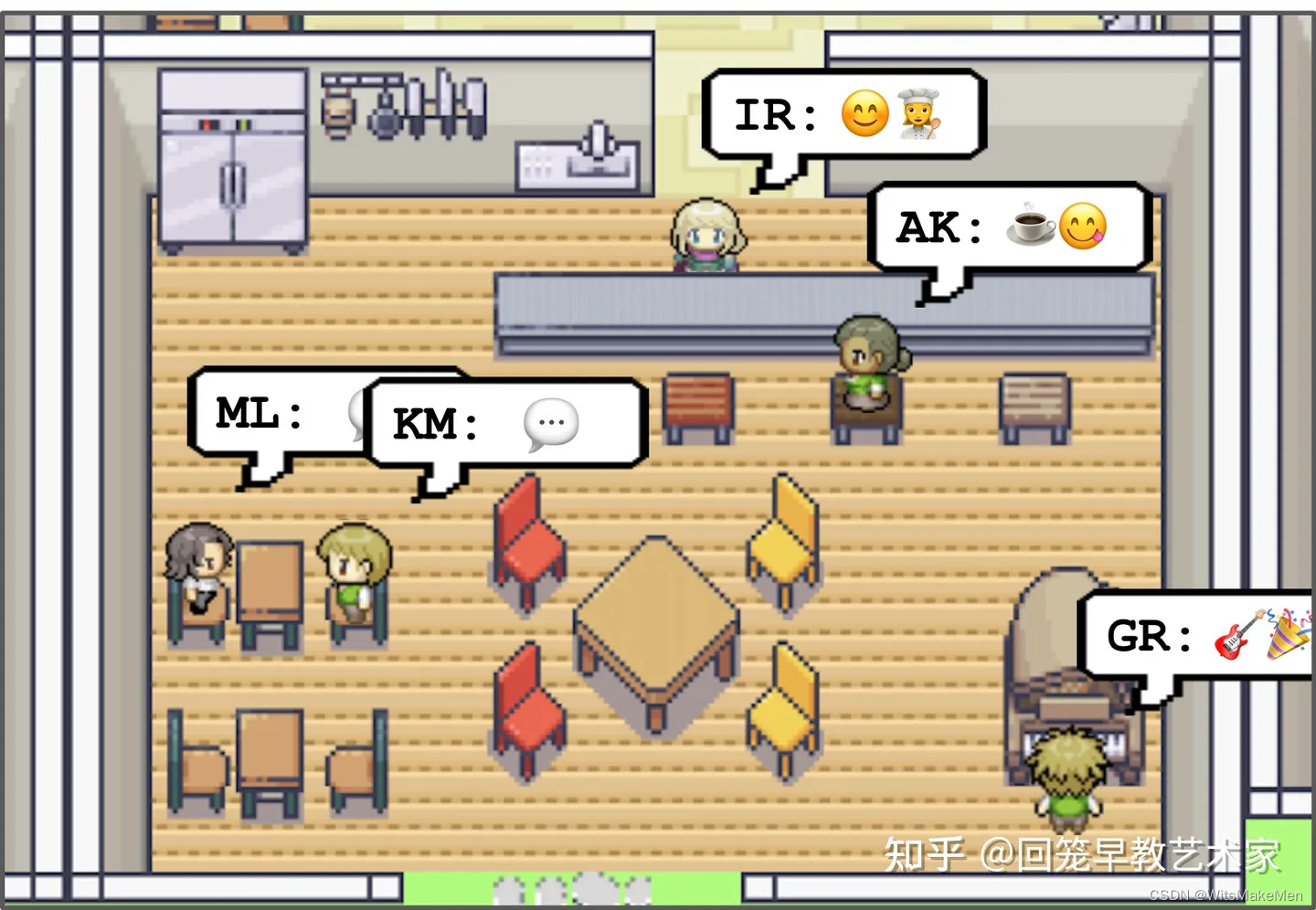

Figure 4: At the beginning of the simulation, one agent is initialized with an intent to organize a Valentine’s Day party. Despite many possible points of failure in the ensuring chain of events—agents might not act on that intent, might not remember to tell others, might not remember to show up—the Valentine’s Day party does in fact occur, with a number of agents gathering and interacting. 在模拟开始时,一个智能体被初始化,打算组织情人节聚会。尽管实现这一连串事件过程中存在许多潜在的失败点——智能体可能不会执行该意图,可能忘记告诉其他人,可能忘记出现——情人节派对确实发生了,一些智能体聚集起来,开始互动。

Figure 5: Our generative agent architecture. Agents perceive their environment, and all perceptions are saved in a comprehensive record of the agent’s experiences called the memory stream. Based on their perceptions, the architecture retrieves relevant memories, then uses those retrieved actions to determine an action. These retrieved memories are also used to form longer-term plans, and to create higher-level reflections, which are both entered into the memory stream for future use. 生成式智能体架构。智能体感知他们的环境,所有感知都保存在被称作记忆流(memory stream)的智能体经历的全面记录中。基于他们的感知,该架构检索相关记忆,然后使用这些检索到的动作确定一个动作。这些检索到的记忆也被用来形成长期计划和创建更高层次的反思,它们都被输入到记忆流中供将来使用。

4 Generative agent architecture

Generative agents aim to provide a framework for behavior in an open world: one that can engage in interactions with other agents and can react to changes in the environment. Generative agents take their current environment and past experience as input and generate behavior as output. Underlying this behavior is a novel agent architecture that combines a large language model with mechanisms for synthesizing and retrieving relevant information to condition the language model’s output on. Without these mechanisms, large language models can output behavior, but the resulting agents may not react based on the agent’s past experiences, may not make important inferences, and may not maintain long-term coherence. Challenges with long-term planning and coherence remain [18] even with today’s most performant models such as GPT-4. Because generative agents produce large streams of events and memories that must be retained, a core challenge of our architecture is to ensure that the most relevant pieces of the agent’s memory are retrieved and synthesized when needed.

生成式智能体旨在为开放世界中的行为提供框架:可以与其他智能体进行交互,并对环境变化做出反应。生成式智能体以当前环境和过去的经验为输入,并生成动作作为输出。支持这种行为的是一种新型智能体架构,它将大型语言模型与合成和检索相关信息的机制相结合,以在语言模型的输出上进行调整。如果没有这些机制,大型语言模型可以输出行为,但是得到的智能体可能不会根据智能体的过去经验做出反应,可能无法进行重要推理,也可能无法保持长期的一致性。长期规划和一致性的挑战即使在像GPT-4这样的现今最好的模型中仍然存在[18]。由于生成式智能体产生大量事件和记忆流,这些必须被保留,我们架构的一个核心挑战是确保在需要时检索和合成智能体记忆的最相关部分。

At the center of our architecture is the memory stream, a database that maintains a comprehensive record of an agent’s experience. From the memory stream, records are retrieved as relevant to plan the agent’s actions and react appropriately to the environment, and records are recursively synthesized into higher- and higher-level observations that guide behavior. Everything in the architecture is recorded and reasoned over as natural language description, allowing the architecture to leverage a large language model.

本架构的中心是内存流,它是一个数据库,记录着智能体的经历。从内存流中,按需检索记录,规划智能体的行动,并对环境做出适当反应。记录被递归地合成为更高层次的观察值,指导行为。架构中的所有事物都被记录下来,并以自然语言描述进行推理,使架构能够利用一个大型语言模型。

Our current implementation utilizes gpt3.5-turbo version of ChatGPT [76]. We expect that the architectural basics of generative agents—memory, planning, and reflection—will likely remain the same as language models improve. Newer language models (e.g., GPT-4) will continue to expand the expressivity and performance of the prompts that underpin generative agents. As of writing, however, GPT-4’s API is still invitation-only, so our agents use ChatGPT.

我们目前的实现采用了ChatGPT的gpt3.5-turbo版本[76]。 随着语言模型的改进,我们预计生成式智能体的架构基础——内存,规划和反思——可能会保持不变。 新的语言模型(例如GPT-4)将继续扩展支持生成式智能体的提示(prompts)的表达能力和性能。 但截至本文撰写时,GPT-4的API仍然仅限于邀请制,因此我们的智能体使用ChatGPT。

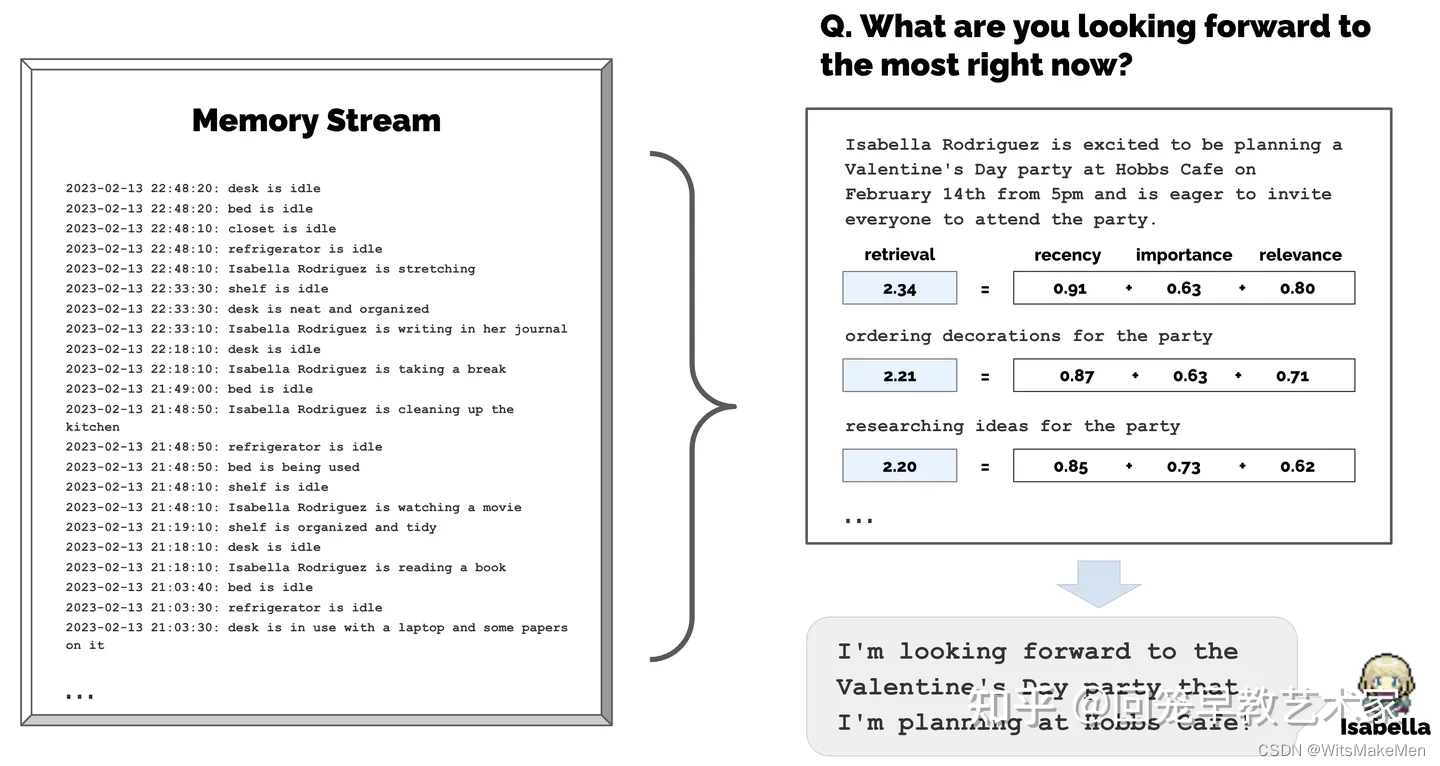

Figure 6: The memory stream comprises a large number of observations that are relevant and irrelevant to the agent’s current situation. Retrieval identifies a subset of these observations that should be passed to the language model to condition its response to the situation. 图6:记忆流包括大量与智能体当前情况相关和不相关的观察结果。检索识别出应该传递给语言模型以调整它对情况响应的这些观察结果的子集。

4.1 Memory and Retrieval

Challenge: Creating generative agents that can simulate human behavior requires reasoning about a set of experiences that is far larger than what should be described in a prompt, as the full memory stream can distract the model and does not even currently fit into the limited context window. Consider a the Isabella agent answering the question “What are you passionate about these days?”. First summarizing all of Isabella’s experiences to fit in the limited context window of the language model produces an uninformative response, where Isabella discusses topics such as collaborations for events and projects and cleanliness and organization in a cafe. Instead of summarizing, the memory stream described below surfaces relevant memories, resulting in a more informative and specific response that mentions Isabella’s passion for making people feel welcome and included, planning events and creating an atmosphere that people can enjoy, such as the Valentine’s Day party.

挑战:创建能够模拟人类行为的生成式智能体需要对一组经验进行推理,这组经验比提示中描述的要大得多,因为整个内存流可能会分散模型的注意力,并且当前甚至无法放入有限的上下文窗口中。以Isabella智能体回答“你最近对什么充满热情?”这个问题为例。首先,将所有Isabella的经验概括到语言模型的有限上下文窗口中会产生一个无信息的回答,其中Isabella会讨论关于为事件和项目的合作以及咖啡馆的整洁和组织的话题。与其进行概括,下面所描述的内存流将呈现相关的记忆,从而产生一个更为详尽和具体的回答,提到Isabella热衷于让人们感觉受欢迎和包容,规划活动并创造人们可以享受的氛围,例如情人节聚会。

Approach: The memory stream maintains a comprehensive record of the agent’s experience. It is a list of memory objects, where each object contains a natural language description, a creation timestamp and a most recent access timestamp. The most basic element of the memory stream is an observation, which is an event directly perceived by an agent. Common observations include behaviors performed by the agent themselves, or behaviors that agents perceive being performed by other agents or non-agent objects. For instance, Isabella Rodriguez, who works at a coffee shop, might accrue the following observations over time: (1) Isabella Rodriguez is setting out the pastries, (2) Maria Lopez is studying for a Chemistry test while drinking coffee, (3) Isabella Rodriguez and Maria Lopez are conversing about planning a Valentine’s day party at Hobbs Cafe, (4) The refrigerator is empty.

方法:内存流维护着智能体经历的全面记录。它是一个内存对象的列表,其中每个对象包含自然语言描述、创建时间戳和最近访问时间戳。内存流的最基本元素是观察,这是智能体直接感知到的事件。常见的观察包括智能体自己执行的行为或智能体或非智能体的物体执行的行为。例如,工作在咖啡店的Isabella Rodriguez随着时间的推移可能会积累以下的观察事项:(1) Isabella Rodriguez正在摆放糕点,(2) Maria Lopez一边喝咖啡一边准备化学考试,(3) Isabella Rodriguez和Maria Lopez正在讨论在Hobbs咖啡店计划情人节聚会的事项,(4) 冰箱是空的。

Our architecture implements a retrieval function that takes the agent’s current situation as input and returns a subset of the memory stream to pass on to the language model. There are many possible implementations of a retrieval function, depending on what it is important that the agent consider when deciding how to act. In our context, we focus on three main components that together produce effective results.

我们的架构实现了一个检索函数,它以智能体当前的情况为输入并返回内存流的一个子集,以传递给语言模型。检索函数有许多可能的实现,取决于智能体在决定如何行动时需要考虑什么。在我们的上下文中,我们关注三个主要组件,它们共同产生有效的结果。

Recency assigns a higher score to memory objects that were recently accessed, so that events from a moment ago or this morning are likely to remain in the agent’s attentional sphere. In our implementation, we treat recency as an exponential decay function over the number of sandbox game hours since the memory was last retrieved. Our decay factor is 0.99.

Importance distinguishes mundane from core memories, by assigning a higher score to those memory objects that the agent believes to be important. For instance, a mundane event such as eating breakfast in one’s room would yield a low importance score, whereas a breakup with one’s significant other would yield a high score. There are again many possible implementations of an importance score; we find that directly asking the language model to output an integer score is effective. The full prompt appears below:

On the scale of 1 to 10, where 1 is purely mundane (e.g., brushing teeth, making bed) and 10 is extremely poignant (e.g., a break up, college acceptance), rate the likely poignancy of the following piece of memory.

Memory: buying groceries at The Willows Market and Pharmacy

Rating:

This prompt returns an integer value of 2 for “cleaning up the room” and 8 for “asking your crush out on a date.” The importance score is generated at the time the memory object is created.

Relevance assigns a higher score to memory objects that are related to the current situation. What is relevant depends on the answer to, “Relevant to what?”, so we condition relevance on a query memory. If the query, for example, is that a student is discussing what to study for a chemistry test with a classmate, memory objects about their breakfast should have low relevance, whereas memory objects about the teacher and schoolwork should have high relevance. In our implementation, we use the language model to generate an embedding vector of the text description of each memory. Then, we calculate relevance as the cosine similarity between the memory’s embedding vector and the query memory’s embedding vector.

To calculate the final retrieval score, we normalize the recency, relevance, and importance scores to the range of [0, 1] by min-max scaling. The retrieval function scores all memories as a weighted combination of the three elements: = · + · + · . In our implementation, all ’s are set to 1. The top-ranked memories that fit in the language model’s context window are then included in the prompt.

附录B agent interview questions

To evaluate generative agents, we conducted individual interviews by asking 25 questions that explore their abilities in five key areas: 1) self-knowledge, 2) memory, 3) planning, 4) reactions, and 5) reflections. Below, we have listed the interview questions utilized in our evaluation study and included a sample of responses from one of our simulated agents, Klaus Mueller.

为了评估生成式智能体,我们进行了个人面试,提出了25个问题,探讨智能体在五个关键领域的能力:1)自我认知,2)记忆,3)规划,4)反应,和5)反思。下面,我们列出了在我们的评估研究中使用的面试问题,并包括智能体之一的Klaus Mueller的回答示例。

B.1 Self-knowledge

The questions on agents’ self-knowledge probe the agents’ ability to demonstrate coherence and consistency in their character. 关于智能体自我认识的问题探讨了智能体展示其性格的连贯性和一致性的能力。

Give an introduction of yourself.

Hello, my name is Klaus Mueller. I’m 20 years old and a student at Oak Hill College, studying sociology. I’m passionate about social justice and exploring different perspectives. Currently, I’m researching the effects of gentrification in low-income communities for a research paper.

What’s your occupation?

I am a student studying sociology at Oak Hill College. I am currently writing a research paper on the effects of gentrification in low-income communities.

What is your interest?

I’m interested in writing and research. I’m writing a research paper on the effects of gentrification in low-income communities, and I’m passionate about exploring different perspectives and analyzing different points of view.

Who do you live with?

I live on my own.

Describe your typical weekday schedule in broad strokes.

My typical weekday starts with me waking up at 7:00 am and preparing for the day ahead. After that, I usually go to the library to work on my research paper, taking breaks for lunch at Hobbs Cafe and a walk in the park. In the evening, I often return to the library until around 6:00 pm.

B.2 Memory

The questions on agents’ memory probe the agents’ ability to remember their past experiences and retrieve the relevant pieces of memory. The names in the square brackets of the first and last questions are randomly chosen from the names of other agents with whom the subject agent interacted. 关于智能体记忆的问题探讨了智能体记忆他们过去经历并检索相关记忆片段的能力。第一个和最后一个问题的方括号中的名称是从与该智能体交互的其他智能体的名称中随机选择的。

Who is [Wolfgang Schulz]?

Wolfgang Schulz is a person I met recently who has been helping me with my research paper on the effects of gentrification on low-income communities. We had several conversations about it, he gave me some great resources, and he even invited me to join him and another friend for lunch. He seems really curious about the issue and has a good grasp on it too. I found that really interesting.

Who is Kane Martinez?

I’m sorry, I’m not sure who Kane Martinez is. Could you tell me more about them?

Who is running for the election?

I’ve heard that Sam Moore is considering running for local mayor.

Was there a Valentine’s day party?

Yes, Isabella Rodriguez organized a Valentine’s Day party at Hobbs Cafe.

Who is [Ayesha Khan]?

Ayesha Khan is a fellow student at Oak Hill College. She is doing her senior thesis on the use of language in Shakespeare’s plays. I am looking forward to speaking with her and exchanging tips with her, along with Wolfgang and Maria Lopez, who are both doing research.

参考

^A demonstration of an actual simulation of the generative agent society can be viewed at the following link: https://reverie.herokuapp.com/arXiv_Demo/