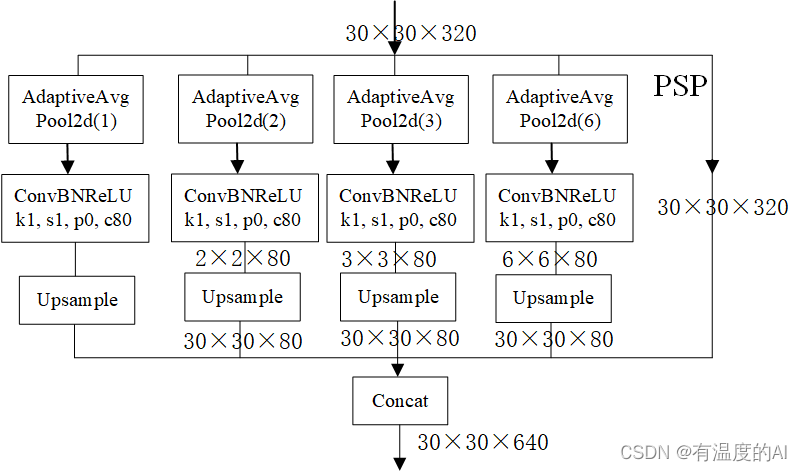

1. Pyramid pooling module (also called the PSP module)
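As a rough illustration of what this module does to tensor shapes, the sketch below pools a 320-channel 30×30 input to 1×1, 2×2, 3×3 and 6×6 grids, reduces each to a quarter of the channels, upsamples back to 30×30, and concatenates everything with the input. The normalization and ReLU layers are left out for brevity, the 1×1 convolutions are randomly initialized, and the names `bins`, `reduce`, and `pooled_shapes` are illustrative only.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

x = torch.randn(1, 320, 30, 30)  # N, C, H, W
bins = (1, 2, 3, 6)              # pyramid levels used by the module

pooled_shapes = []
feats = [x]                      # the input itself is part of the concatenation
for b in bins:
    pooled = nn.AdaptiveAvgPool2d(b)(x)           # (1, 320, b, b)
    pooled_shapes.append(tuple(pooled.shape))
    # 1x1 convolution: 320 -> 80 channels (norm layer and ReLU omitted here)
    reduce = nn.Conv2d(320, 320 // 4, kernel_size=1, bias=False)
    up = F.interpolate(reduce(pooled), size=(30, 30),
                       mode="bilinear", align_corners=True)
    feats.append(up)

out = torch.cat(feats, dim=1)    # 320 + 4 * 80 channels
print(pooled_shapes)
print(tuple(out.shape))
```

The output has twice as many channels as the input, so whatever layer follows this module has to accept `2 * in_channels`.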

    # Assume the input is 30×30×320 (H×W×C).
    import torch
    import torch.nn as nn
    import torch.nn.functional as F


    class PyramidPooling(nn.Module):
        def __init__(self, in_channels, norm_layer, up_kwargs):
            super(PyramidPooling, self).__init__()
            # Average-pool the input down to 1x1, 2x2, 3x3 and 6x6 grids.
            self.pool1 = nn.AdaptiveAvgPool2d(1)
            self.pool2 = nn.AdaptiveAvgPool2d(2)
            self.pool3 = nn.AdaptiveAvgPool2d(3)
            self.pool4 = nn.AdaptiveAvgPool2d(6)

            out_channels = int(in_channels / 4)  # 80 for a 320-channel input
            # One 1x1 conv + norm + ReLU per pyramid level.
            self.conv1 = nn.Sequential(nn.Conv2d(in_channels, out_channels, 1, bias=False),
                                       norm_layer(out_channels),
                                       nn.ReLU(True))
            self.conv2 = nn.Sequential(nn.Conv2d(in_channels, out_channels, 1, bias=False),
                                       norm_layer(out_channels),
                                       nn.ReLU(True))
            self.conv3 = nn.Sequential(nn.Conv2d(in_channels, out_channels, 1, bias=False),
                                       norm_layer(out_channels),
                                       nn.ReLU(True))
            self.conv4 = nn.Sequential(nn.Conv2d(in_channels, out_channels, 1, bias=False),
                                       norm_layer(out_channels),
                                       nn.ReLU(True))
            # bilinear interpolate options
            self._up_kwargs = up_kwargs

        def forward(self, x):
            _, _, h, w = x.size()
            # Upsample every pooled feature back to the input resolution.
            feat1 = F.interpolate(self.conv1(self.pool1(x)), (h, w), **self._up_kwargs)
            feat2 = F.interpolate(self.conv2(self.pool2(x)), (h, w), **self._up_kwargs)
            feat3 = F.interpolate(self.conv3(self.pool3(x)), (h, w), **self._up_kwargs)
            feat4 = F.interpolate(self.conv4(self.pool4(x)), (h, w), **self._up_kwargs)
            # Concatenate along the channel dimension: C + 4 * (C / 4) = 2C channels.
            return torch.cat((x, feat1, feat2, feat3, feat4), 1)

2. Strip pooling module

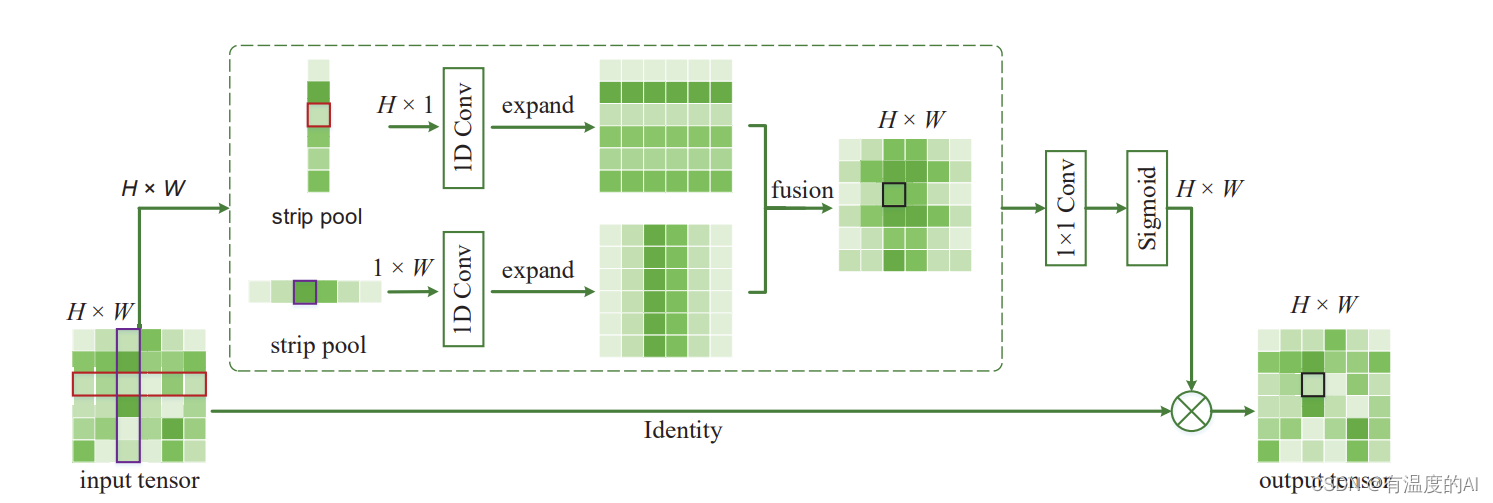

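Given an input feature map of size C×H×W, horizontal and vertical strip pooling reduce it to H×1 and 1×W respectively; each output value is the average of the elements inside the pooling kernel. A convolution is then applied to each of the two output feature maps, which are expanded along the left-right and up-down directions so that both have the same size, and the expanded maps are summed element-wise at corresponding positions to give an H×W feature map. Finally, a 1×1 convolution followed by a sigmoid is applied, and the result is multiplied element-wise with the original input to produce the final output. Note that the `StripPooling` code in this section ends differently: it applies `conv5` and then `F.relu_(x + out)`, a residual addition rather than a sigmoid-gated multiplication.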
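The steps in the paragraph above, ending in the sigmoid gate, can be sketched as follows. This is a minimal illustration under stated assumptions, not the implementation from this post: the class name `GatedStripPooling` and its layer names are hypothetical, the channel count is kept constant, and normalization layers are omitted.

```python
import torch
import torch.nn as nn


class GatedStripPooling(nn.Module):
    """Hypothetical sketch: strip pooling followed by a sigmoid gate."""

    def __init__(self, channels):
        super().__init__()
        self.pool_h = nn.AdaptiveAvgPool2d((None, 1))  # C x H x W -> C x H x 1
        self.pool_w = nn.AdaptiveAvgPool2d((1, None))  # C x H x W -> C x 1 x W
        # 1D convolutions along each strip (kernel size 3, length preserved)
        self.conv_h = nn.Conv2d(channels, channels, (3, 1), padding=(1, 0), bias=False)
        self.conv_w = nn.Conv2d(channels, channels, (1, 3), padding=(0, 1), bias=False)
        self.fuse = nn.Conv2d(channels, channels, kernel_size=1)

    def forward(self, x):
        _, _, h, w = x.shape
        # Expand each strip back to H x W by repeating it along the pooled axis.
        y_h = self.conv_h(self.pool_h(x)).expand(-1, -1, h, w)
        y_w = self.conv_w(self.pool_w(x)).expand(-1, -1, h, w)
        # 1x1 conv + sigmoid gives per-pixel weights in (0, 1).
        gate = torch.sigmoid(self.fuse(y_h + y_w))
        # Element-wise multiplication with the original input.
        return x * gate


x = torch.randn(2, 16, 8, 12)
out = GatedStripPooling(16)(x)
print(tuple(out.shape))
```

Because the gate lies between 0 and 1, this variant can only attenuate the input, whereas a residual formulation adds a correction on top of it.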

    class StripPooling(nn.Module):
        def __init__(self, in_channels, up_kwargs={'mode': 'bilinear', 'align_corners': True}):
            super(StripPooling, self).__init__()
            self.pool1 = nn.AdaptiveAvgPool2d((1, None))  # 1*W
            self.pool2 = nn.AdaptiveAvgPool2d((None, 1))  # H*1

            inter_channels = int(in_channels / 4)
            # 1x1 conv that reduces the channel count before pooling
            self.conv1 = nn.Sequential(nn.Conv2d(in_channels, inter_channels, 1, bias=False),
                                       nn.BatchNorm2d(inter_channels),
                                       nn.ReLU(True))
            # 1x3 conv applied to the 1*W strip
            self.conv2 = nn.Sequential(nn.Conv2d(inter_channels, inter_channels, (1, 3), 1, (0, 1), bias=False),
                                       nn.BatchNorm2d(inter_channels))
            # 3x1 conv applied to the H*1 strip
            self.conv3 = nn.Sequential(nn.Conv2d(inter_channels, inter_channels, (3, 1), 1, (1, 0), bias=False),
                                       nn.BatchNorm2d(inter_channels))
            self.conv4 = nn.Sequential(nn.Conv2d(inter_channels, inter_channels, 3, 1, 1, bias=False),
                                       nn.BatchNorm2d(inter_channels),
                                       nn.ReLU(True))
            # 1x1 conv that restores the original channel count
            self.conv5 = nn.Sequential(nn.Conv2d(inter_channels, in_channels, 1, bias=False),
                                       nn.BatchNorm2d(in_channels))
            self._up_kwargs = up_kwargs

        def forward(self, x):
            _, _, h, w = x.size()
            x1 = self.conv1(x)
            x2 = F.interpolate(self.conv2(self.pool1(x1)), (h, w), **self._up_kwargs)  # the 1*W branch in the diagram
            x3 = F.interpolate(self.conv3(self.pool2(x1)), (h, w), **self._up_kwargs)  # the H*1 branch in the diagram
            x4 = self.conv4(F.relu_(x2 + x3))  # fuse the 1*W and H*1 features
            out = self.conv5(x4)
            # Residual connection with the input
            return F.relu_(x + out)
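The text speaks of "expanding" the strips while the code calls `F.interpolate`. The short check below suggests the two agree for the default `up_kwargs` used here: `AdaptiveAvgPool2d((1, None))` is a mean over the height axis, and bilinear upsampling of a strip with `align_corners=True` simply repeats it along the pooled axis. The tensor size and variable names are arbitrary choices for illustration.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

x = torch.randn(2, 4, 5, 7)  # N, C, H, W
h, w = x.shape[2:]

strip_w = nn.AdaptiveAvgPool2d((1, None))(x)  # (2, 4, 1, 7): average over H
strip_h = nn.AdaptiveAvgPool2d((None, 1))(x)  # (2, 4, 5, 1): average over W

# Strip pooling equals a plain mean along one spatial axis.
mean_ok_w = torch.allclose(strip_w, x.mean(dim=2, keepdim=True), atol=1e-6)
mean_ok_h = torch.allclose(strip_h, x.mean(dim=3, keepdim=True), atol=1e-6)

# Upsampling a strip back to H x W repeats it along the pooled axis.
up_w = F.interpolate(strip_w, (h, w), mode="bilinear", align_corners=True)
up_h = F.interpolate(strip_h, (h, w), mode="bilinear", align_corners=True)
expand_ok_w = torch.allclose(up_w, strip_w.expand(-1, -1, h, w), atol=1e-6)
expand_ok_h = torch.allclose(up_h, strip_h.expand(-1, -1, h, w), atol=1e-6)

print(mean_ok_w, mean_ok_h, expand_ok_w, expand_ok_h)
```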

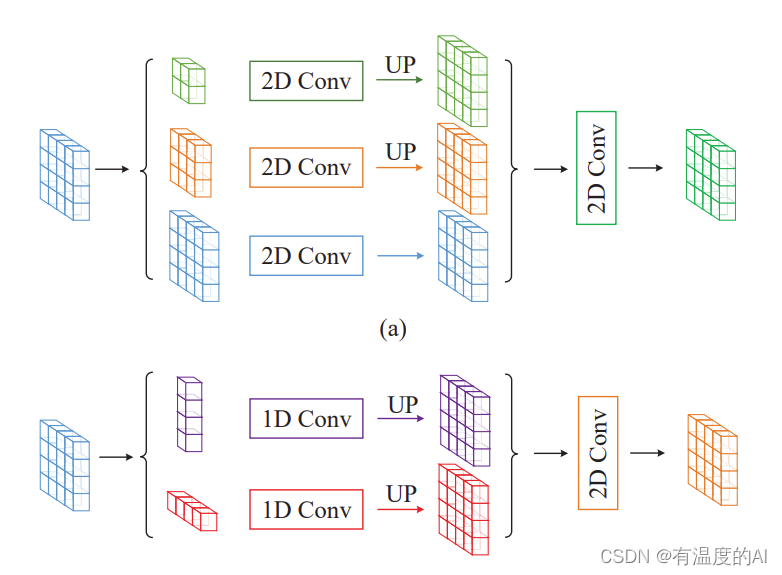

3. Mixed Pooling Module

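Replacing every pooling operation in the network with strip pooling would degrade results on objects that are not long and narrow. Strip pooling is therefore combined with pyramid pooling to build the mixed pooling module (MPM), which handles both elongated and non-elongated objects and captures short-range as well as long-range dependencies between different positions. The MPM consists of two sub-modules, conventional spatial pooling and strip pooling, which capture short-range (local) and long-range dependencies respectively, so it adapts better to objects of different shapes.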
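The two sub-modules described above can be illustrated at the shape level. The sketch below leaves out every learned convolution and only shows how the spatial pools and the strip pools differ in output size before being upsampled and concatenated. The value `pool_size = (20, 12)` and the 64-channel 30×30 input are assumptions chosen for illustration, and `x` stands in for the unpooled convolution path.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

x = torch.randn(1, 64, 30, 30)  # N, C, H, W
pool_size = (20, 12)            # assumed grid sizes for the spatial pools
h, w = x.shape[2:]

pools = {
    "spatial_1": nn.AdaptiveAvgPool2d(pool_size[0]),  # local context, 20 x 20 grid
    "spatial_2": nn.AdaptiveAvgPool2d(pool_size[1]),  # local context, 12 x 12 grid
    "strip_w": nn.AdaptiveAvgPool2d((1, None)),       # 1 x W strip
    "strip_h": nn.AdaptiveAvgPool2d((None, 1)),       # H x 1 strip
}

# Spatial size of each pooled feature map
pooled_sizes = {name: tuple(p(x).shape[2:]) for name, p in pools.items()}

# Upsample everything back to H x W so the maps can be summed
up = {name: F.interpolate(p(x), size=(h, w), mode="bilinear", align_corners=True)
      for name, p in pools.items()}

short_range = x + up["spatial_1"] + up["spatial_2"]  # spatial pooling branch
long_range = up["strip_w"] + up["strip_h"]           # strip pooling branch
fused = torch.cat([short_range, long_range], dim=1)  # channels are doubled

print(pooled_sizes)
print(tuple(fused.shape))
```

In the full module each branch first reduces the channels to a quarter, so the concatenation carries half the input channels and a final 1×1 convolution restores `in_channels`.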

    class MPM(nn.Module):
        def __init__(self, in_channels, pool_size, up_kwargs={'mode': 'bilinear', 'align_corners': True}):
            super(MPM, self).__init__()
            inter_channels = int(in_channels / 4)
            # spatial pooling
            self.pool1 = nn.AdaptiveAvgPool2d(pool_size[0])
            self.pool2 = nn.AdaptiveAvgPool2d(pool_size[1])
            # strip pooling
            self.pool3 = nn.AdaptiveAvgPool2d((1, None))
            self.pool4 = nn.AdaptiveAvgPool2d((None, 1))

            # 1x1 convs that reduce the channels for each branch
            self.conv1_1 = nn.Sequential(nn.Conv2d(in_channels, inter_channels, 1, bias=False),
                                         nn.BatchNorm2d(inter_channels),
                                         nn.ReLU(True))
            self.conv1_2 = nn.Sequential(nn.Conv2d(in_channels, inter_channels, 1, bias=False),
                                         nn.BatchNorm2d(inter_channels),
                                         nn.ReLU(True))
            # spatial pooling branch: 3x3 convs on the unpooled and pooled features
            self.conv2_0 = nn.Sequential(nn.Conv2d(inter_channels, inter_channels, 3, 1, 1, bias=False),
                                         nn.BatchNorm2d(inter_channels))
            self.conv2_1 = nn.Sequential(nn.Conv2d(inter_channels, inter_channels, 3, 1, 1, bias=False),
                                         nn.BatchNorm2d(inter_channels))
            self.conv2_2 = nn.Sequential(nn.Conv2d(inter_channels, inter_channels, 3, 1, 1, bias=False),
                                         nn.BatchNorm2d(inter_channels))
            # strip pooling branch: 1x3 and 3x1 convs on the strips
            self.conv2_3 = nn.Sequential(nn.Conv2d(inter_channels, inter_channels, (1, 3), 1, (0, 1), bias=False),
                                         nn.BatchNorm2d(inter_channels))
            self.conv2_4 = nn.Sequential(nn.Conv2d(inter_channels, inter_channels, (3, 1), 1, (1, 0), bias=False),
                                         nn.BatchNorm2d(inter_channels))
            # fusion convs, one per branch
            self.conv2_5 = nn.Sequential(nn.Conv2d(inter_channels, inter_channels, 3, 1, 1, bias=False),
                                         nn.BatchNorm2d(inter_channels),
                                         nn.ReLU(True))
            self.conv2_6 = nn.Sequential(nn.Conv2d(inter_channels, inter_channels, 3, 1, 1, bias=False),
                                         nn.BatchNorm2d(inter_channels),
                                         nn.ReLU(True))
            # 1x1 conv that maps the concatenated branches back to in_channels
            self.conv3 = nn.Sequential(nn.Conv2d(inter_channels * 2, in_channels, 1, bias=False),
                                       nn.BatchNorm2d(in_channels))
            # bilinear interpolate options
            self._up_kwargs = up_kwargs

        def forward(self, x):
            _, _, h, w = x.size()
            x1 = self.conv1_1(x)
            x2 = self.conv1_2(x)
            x2_1 = self.conv2_0(x1)
            x2_2 = F.interpolate(self.conv2_1(self.pool1(x1)), size=(h, w), **self._up_kwargs)
            x2_3 = F.interpolate(self.conv2_2(self.pool2(x1)), size=(h, w), **self._up_kwargs)
            x2_4 = F.interpolate(self.conv2_3(self.pool3(x2)), size=(h, w), **self._up_kwargs)
            x2_5 = F.interpolate(self.conv2_4(self.pool4(x2)), size=(h, w), **self._up_kwargs)
            # spatial (pyramid) pooling branch output
            x1 = self.conv2_5(F.relu_(x2_1 + x2_2 + x2_3))
            # strip pooling branch output
            x2 = self.conv2_6(F.relu_(x2_5 + x2_4))
            out = self.conv3(torch.cat([x1, x2], dim=1))
            # Residual connection with the input
            return F.relu_(x + out)