If you are also running Spark 2.4.0, you will hit this error as soon as you run a PySpark job on Windows.

Environment

- Windows 10

- Spark 2.4.0

Error output

Traceback (most recent call last):

File "C:\Users\mjdbr\Anaconda3\lib\runpy.py", line 193, in _run_module_as_main

"__main__", mod_spec)

File "C:\Users\mjdbr\Anaconda3\lib\runpy.py", line 85, in _run_code

exec(code, run_globals)

File "C:\Spark\spark-2.4.0-bin-hadoop2.7\python\lib\pyspark.zip\pyspark\worker.py", line 25, in <module>

ModuleNotFoundError: No module named 'resource'

18/11/10 23:16:58 ERROR Executor: Exception in task 0.0 in stage 0.0 (TID 0)

org.apache.spark.SparkException: Python worker failed to connect back.

at org.apache.spark.api.python.PythonWorkerFactory.createSimpleWorker(PythonWorkerFactory.scala:170)

at org.apache.spark.api.python.PythonWorkerFactory.create(PythonWorkerFactory.scala:97)

at org.apache.spark.SparkEnv.createPythonWorker(SparkEnv.scala:117)

at org.apache.spark.api.python.BasePythonRunner.compute(PythonRunner.scala:108)

at org.apache.spark.api.python.PythonRDD.compute(PythonRDD.scala:65)

at org.apache.spark.rdd.RDD.computeOrReadCheckpoint(RDD.scala:324)

at org.apache.spark.rdd.RDD.iterator(RDD.scala:288)

at org.apache.spark.scheduler.ResultTask.runTask(ResultTask.scala:90)

at org.apache.spark.scheduler.Task.run(Task.scala:121)

at org.apache.spark.executor.Executor$TaskRunner$$anonfun$10.apply(Executor.scala:402)

at org.apache.spark.util.Utils$.tryWithSafeFinally(Utils.scala:1360)

at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:408)

at java.util.concurrent.ThreadPoolExecutor.runWorker(Unknown Source)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(Unknown Source)

at java.lang.Thread.run(Unknown Source)

Caused by: java.net.SocketTimeoutException: Accept timed out

at java.net.DualStackPlainSocketImpl.waitForNewConnection(Native Method)

at java.net.DualStackPlainSocketImpl.socketAccept(Unknown Source)

at java.net.AbstractPlainSocketImpl.accept(Unknown Source)

Error analysis

The traceback shows that a required module cannot be found:

"C:\Spark\spark-2.4.0-bin-hadoop2.7\python\lib\pyspark.zip\pyspark\worker.py", line 25, in <module>

ModuleNotFoundError: No module named 'resource'

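Searching online for a fix turned up roughly the following explanation: the resource module is available on Unix and Linux, but not on Windows.

This is in fact a bug in Spark 2.4.0. Rolling back to Spark 2.3, or moving to Spark 2.4.3 (the latest release at the time of writing), makes the job run normally.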
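A quick way to confirm this on your own machine is to ask Python whether it can locate the module. This is a minimal sketch using only the standard library; the helper name `has_module` is made up for illustration and is not part of Spark.

```python
import importlib.util
import sys


def has_module(name):
    """Return True if the interpreter can locate a top-level module called `name`."""
    return importlib.util.find_spec(name) is not None


if __name__ == "__main__":
    # `resource` ships with CPython on Unix-like systems only,
    # so the second value is expected to be False on Windows.
    print(sys.platform, has_module("resource"))
```

If the second value printed is False, any script that does a bare `import resource` will fail in the same way as the worker.py traceback above.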

Solution

Once we know where the problem lies, it is easy to fix.

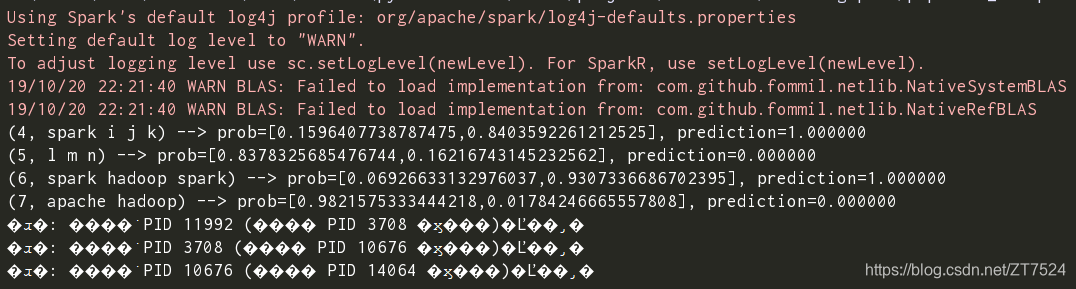

I had already installed Spark 2.4.3 earlier; after switching to it, the job ran normally, as the screenshot above shows.

Method 1

Simply switch to a different Spark version (recommended, because it takes the least effort).
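Before switching, it can help to check which version is actually installed. The sketch below assumes that only 2.4.0 is affected, which is all this article establishes; the function name `is_affected` is hypothetical. `pyspark.__version__` is the version string exposed by the installed pyspark package.

```python
def is_affected(version):
    """Return True if `version` is Spark 2.4.0, the release reported as broken here."""
    # Compare only major.minor.patch so suffixes such as ".dev0" are ignored.
    return version.split(".")[:3] == ["2", "4", "0"]


if __name__ == "__main__":
    try:
        import pyspark
    except ImportError:
        print("pyspark is not importable from this interpreter")
    else:
        print(pyspark.__version__, "affected:", is_affected(pyspark.__version__))
```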

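Method 2

Patch the bug in the original installation. I have not tried this approach myself; some reference documents are listed here for anyone interested in trying it.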
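For orientation, a fix of this kind usually amounts to guarding the import so that a missing module no longer aborts the worker. The block below is only an illustrative sketch, not the official patch and not tested against worker.py itself: the names `has_resource_module` and `set_memory_limit` are assumptions, and the memory-limit logic is a guess at the sort of code that depends on resource.

```python
# Guarded import: tolerate platforms (such as Windows) without `resource`.
try:
    import resource
    has_resource_module = True
except ImportError:
    resource = None
    has_resource_module = False


def set_memory_limit(limit_mb):
    """Hypothetical helper: cap the address space; return True only if a limit was set."""
    if not has_resource_module or limit_mb <= 0:
        # Nothing to do on Windows, or when no limit was requested.
        return False
    new_limit = limit_mb * 1024 * 1024
    soft, _hard = resource.getrlimit(resource.RLIMIT_AS)
    # Only tighten the limit, never raise it.
    if soft == resource.RLIM_INFINITY or new_limit < soft:
        resource.setrlimit(resource.RLIMIT_AS, (new_limit, new_limit))
        return True
    return False
```

With this shape, the import itself no longer fails on Windows, and any code that relies on resource is simply skipped there.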