1. Mask mode: this applies to variable-length sequences fed to a recurrent network. If the input sample sequences have different lengths, they are first aligned (short sequences are padded with 0, long sequences are truncated) and then fed to the model. As a result, some of the samples contain many padding zeros. To improve computational performance, these meaningless zeros must be masked out so that only the real, non-zero values take part in the computation. This is the role of the mask.

2. Mean mode: normal mode computes an attention score for every sequence step in each dimension, whereas mean mode averages those attention scores over the dimensions. Mean mode smooths out the differences between dimensions of the same sequence, treats all dimensions as equal, and applies attention across sequence steps only. This approach can better reflect the importance of each step in the sequence.
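The two modes can be sketched in a few lines of plain Python, which keeps the arithmetic visible without needing PyTorch. This is only an illustrative sketch: the helper names `softmax`, `masked_softmax` and `mean_mode` and all sample numbers are hypothetical, and the fill value simply copies the large negative constant used in the listing.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def masked_softmax(scores, mask, fill=-2.0 ** 32 + 1):
    """Softmax over time steps that ignores padded steps (mask == 0)."""
    # Padded steps receive a huge negative score, so exp() underflows to 0.
    filled = [s if m == 1 else fill for s, m in zip(scores, mask)]
    return softmax(filled)

def mean_mode(weights):
    """weights[t][d] is the weight of time step t in dimension d.
    Every entry of a step is replaced by that step's average over dimensions."""
    return [[sum(step) / len(step)] * len(step) for step in weights]

# Hypothetical tanh scores for 5 time steps; the last two steps are padding.
scores = [0.5, -0.2, 0.9, 0.0, 0.0]
mask = [1, 1, 1, 0, 0]

masked = masked_softmax(scores, mask)  # padded steps end up with weight 0
naive = softmax(scores)                # padded steps still receive weight

# Hypothetical per-dimension weights: 3 time steps x 2 dimensions.
weights = [[0.25, 0.75],
           [0.50, 0.00],
           [0.25, 0.25]]
averaged = mean_mode(weights)

print(masked)
print(naive)
print(averaged)
```

Comparing `masked` with `naive` shows why the padded scores cannot simply be left at 0: a score of 0 is a valid tanh output, so the softmax would still assign weight to the padding. In `averaged`, each time step carries a single weight shared by all dimensions.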

Code: Attention_classification.py

import torchvision
import torchvision.transforms as transforms
import pylab
import torch
from matplotlib import pyplot as plt
import numpy as np
import os

# Allow duplicate OpenMP runtimes to load; this issue may be specific to macOS
os.environ['KMP_DUPLICATE_LIB_OK'] = 'True'

data_dir = './fashion_mnist'
transform = transforms.Compose([transforms.ToTensor()])
train_dataset = torchvision.datasets.FashionMNIST(root=data_dir, train=True, transform=transform, download=True)
print("Number of training samples:", len(train_dataset))
val_dataset = torchvision.datasets.FashionMNIST(root=data_dir, train=False, transform=transform)
print("Number of test samples:", len(val_dataset))

im = train_dataset[0][0]  # first image, shape [1, 28, 28]
im = im.reshape(-1, 28)   # reshape to [28, 28] for display
pylab.imshow(im)
pylab.show()
print("Label of this image:", train_dataset[0][1])

## Build the data loaders
batch_size = 10
train_loader = torch.utils.data.DataLoader(train_dataset, batch_size=batch_size, shuffle=True)
test_loader = torch.utils.data.DataLoader(val_dataset, batch_size=batch_size, shuffle=False)

def imshow(img):
    print("Image shape:", np.shape(img))
    npimg = img.numpy()
    plt.axis('off')
    plt.imshow(np.transpose(npimg, (1, 2, 0)))  # [C, H, W] -> [H, W, C]

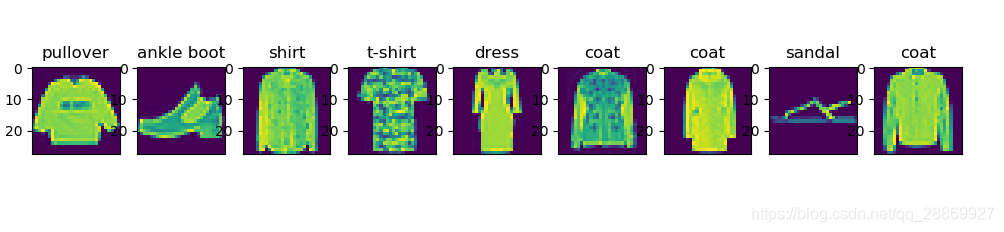

classes = ('T-shirt', 'Trouser', 'Pullover', 'Dress', 'Coat', 'Sandal', 'Shirt', 'Sneaker', 'Bag', 'Ankle_Boot')
sample = iter(train_loader)
images, labels = next(sample)  # the built-in next() works across PyTorch versions
print("Batch shape:", np.shape(images))
print("Batch labels:", labels)
imshow(torchvision.utils.make_grid(images, nrow=batch_size))
print(','.join('%5s' % classes[labels[j]] for j in range(len(images))))

class myLSTMNet(torch.nn.Module):  # Model with stacked LSTM layers, an attention layer and 1 fully connected layer
    def __init__(self, in_dim, hidden_dim, n_layer, n_class):
        super(myLSTMNet, self).__init__()
        # Recurrent layers
        self.lstm = torch.nn.LSTM(in_dim, hidden_dim, n_layer, batch_first=True)
        self.Linear = torch.nn.Linear(hidden_dim * 28, n_class)  # fully connected layer over all 28 time steps
        self.attention = AttentionSeq(hidden_dim, hard=0.03)  # attention layer, using hard mode

    def forward(self, t):  # forward pass
        t, _ = self.lstm(t)  # run the LSTM; output is [batch, 28, hidden_dim]
        t = self.attention(t)  # apply attention to the LSTM outputs
        t = t.reshape(t.shape[0], -1)  # flatten to 2-D for the fully connected layer
        out = self.Linear(t)  # fully connected output layer
        return out

class AttentionSeq(torch.nn.Module):
    def __init__(self, hidden_dim, hard=0.0):
        super(AttentionSeq, self).__init__()
        self.hidden_dim = hidden_dim
        self.dense = torch.nn.Linear(hidden_dim, hidden_dim)
        self.hard = hard

    def forward(self, features, mean=False):
        # features: [batch, seq, dim]
        batch_size, time_step, hidden_dim = features.size()
        weight = torch.nn.Tanh()(self.dense(features))  # fully connected layer followed by tanh

        # Build the mask: 1 for real steps, 0 for all-zero (padded) steps
        mask_idx = torch.sign(torch.abs(features).sum(dim=-1))
        # mask_idx = mask_idx.unsqueeze(-1).expand(batch_size, time_step, hidden_dim)
        mask_idx = mask_idx.unsqueeze(-1).repeat(1, 1, hidden_dim)

        # Apply the mask to the attention scores.
        # torch.where checks the condition in its first argument element by element: where it holds,
        # the value comes from the second argument, otherwise from the third.
        # torch.full_like builds a tensor with the shape of its first argument, filled with its second.
        # Padded steps must be filled with a very large negative number, never with 0. The scores come
        # from tanh, whose range is -1 to 1, so 0 is a valid score and would still affect the Softmax.
        # Only a value far outside that range is guaranteed to be ignored (weight 0) by the Softmax.
        weight = torch.where(mask_idx == 1, weight, torch.full_like(mask_idx, (-2 ** 32 + 1)))
        weight = weight.transpose(2, 1)  # [batch, dim, seq]
        weight = torch.nn.Softmax(dim=2)(weight)  # attention scores over the time steps

        if self.hard != 0:  # hard mode: zero out scores that do not exceed the threshold
            weight = torch.where(weight > self.hard, weight, torch.full_like(weight, 0))

        if mean:  # mean mode: average the attention scores over the dimensions
            weight = weight.mean(dim=1)
            weight = weight.unsqueeze(1)
            weight = weight.repeat(1, hidden_dim, 1)
        weight = weight.transpose(2, 1)  # back to [batch, seq, dim]
        features_attention = weight * features  # apply the attention scores to the features
        return features_attention

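# Instantiate the model
# Each 28x28 image is read as 28 time steps (rows) of 28 features (pixels per row).
# 28: input feature size per step; 128: hidden units in each LSTM layer;
# 2: number of stacked LSTM layers; 10: number of output classes.
network = myLSTMNet(28, 128, 2, 10)

# Select the device
device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
print(device)
network.to(device)
print(network)  # print the network structure

criterion = torch.nn.CrossEntropyLoss()  # loss function
optimizer = torch.optim.Adam(network.parameters(), lr=0.01)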

for epoch in range(2):  # iterate over the dataset twice
    running_loss = 0.0
    for i, data in enumerate(train_loader, 0):  # fetch batches in a loop
        inputs, labels = data
        inputs = inputs.squeeze(1)  # drop the channel dimension: [batch, 1, 28, 28] -> [batch, 28, 28]
        inputs, labels = inputs.to(device), labels.to(device)  # move to the device
        optimizer.zero_grad()  # clear the previous gradients
        outputs = network(inputs)
        loss = criterion(outputs, labels)  # compute the loss
        loss.backward()  # backpropagation
        optimizer.step()  # update the parameters

        running_loss += loss.item()
        if i % 1000 == 999:  # report the mean loss of the last 1000 batches
            print('[%d, %5d] loss: %.3f' % (epoch + 1, i + 1, running_loss / 1000))
            running_loss = 0.0

print('Finished Training')

# Use the model
dataiter = iter(test_loader)
images, labels = next(dataiter)  # the built-in next() works across PyTorch versions
inputs, labels = images.to(device), labels.to(device)

imshow(torchvision.utils.make_grid(images, nrow=batch_size))
print('True labels: ', ' '.join('%5s' % classes[labels[j]] for j in range(len(images))))
inputs = inputs.squeeze(1)
outputs = network(inputs)
_, predicted = torch.max(outputs, 1)

print('Predictions: ', ' '.join('%5s' % classes[predicted[j]] for j in range(len(images))))

# Test the model
class_correct = list(0. for i in range(10))
class_total = list(0. for i in range(10))
with torch.no_grad():
    for data in test_loader:
        images, labels = data
        images = images.squeeze(1)
        inputs, labels = images.to(device), labels.to(device)
        outputs = network(inputs)
        _, predicted = torch.max(outputs, 1)
        predicted = predicted.to(device)
        c = (predicted == labels).squeeze()
        for i in range(len(labels)):  # every sample in the batch
            label = labels[i].item()
            class_correct[label] += c[i].item()
            class_total[label] += 1

sumacc = 0
for i in range(10):
    Accuracy = 100 * class_correct[i] / class_total[i]
    print('Accuracy of %5s : %2d %%' % (classes[i], Accuracy))
    sumacc = sumacc + Accuracy
print('Accuracy of all : %2d %%' % (sumacc / 10.))
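The hard mode enabled by `hard=0.03` in `myLSTMNet` is easy to overlook, so here is a minimal plain-Python sketch of what the thresholding step in `AttentionSeq.forward` does to one row of attention weights. The helper name `hard_filter` and the sample weights are hypothetical, chosen only for illustration.

```python
def hard_filter(weights, hard):
    """Keep weights strictly greater than `hard`; set all others to 0."""
    return [w if w > hard else 0.0 for w in weights]

# Hypothetical Softmax output over 4 time steps (sums to 1).
weights = [0.5, 0.25, 0.125, 0.125]
kept = hard_filter(weights, hard=0.2)

print(kept)
print(sum(kept))
```

Note that the surviving weights are not renormalized, so after filtering they no longer sum to 1. With 28 time steps, a perfectly uniform attention weight would be 1/28 (about 0.036), so the threshold of 0.03 used in the listing only removes steps that score below the uniform level.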