A look at two open-source libraries: Disruptor and Aeron.

Disruptor

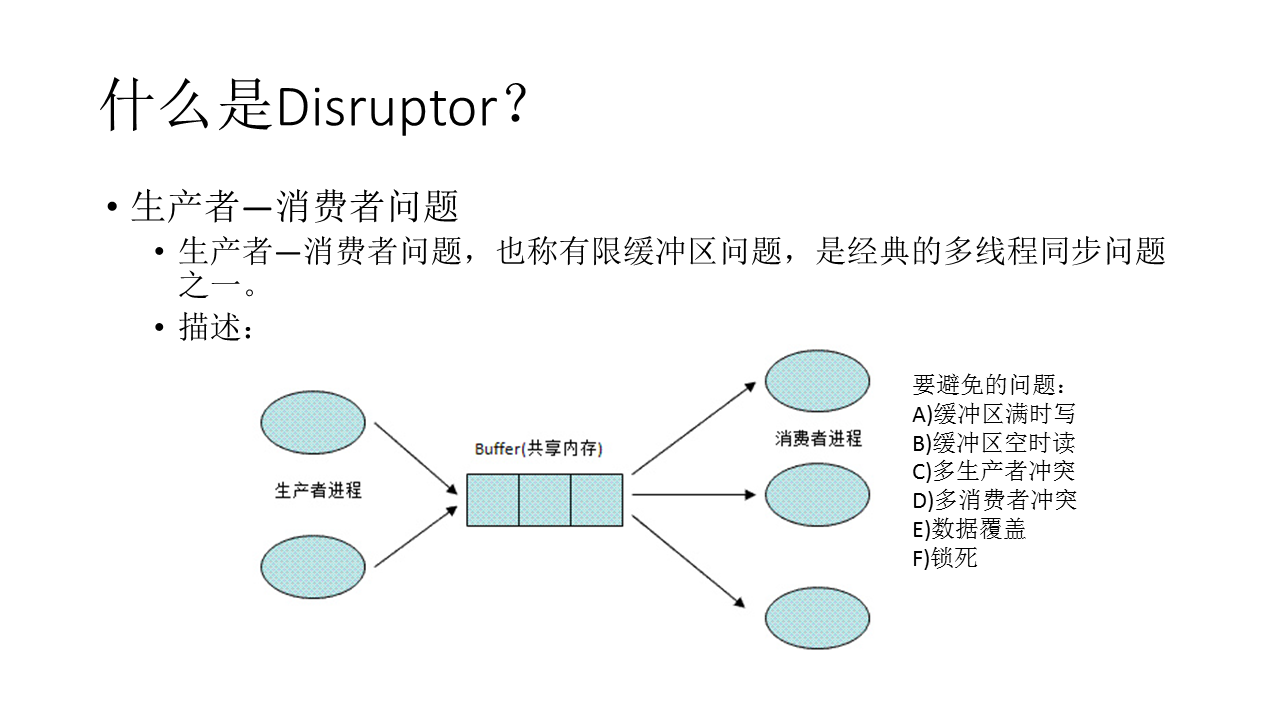

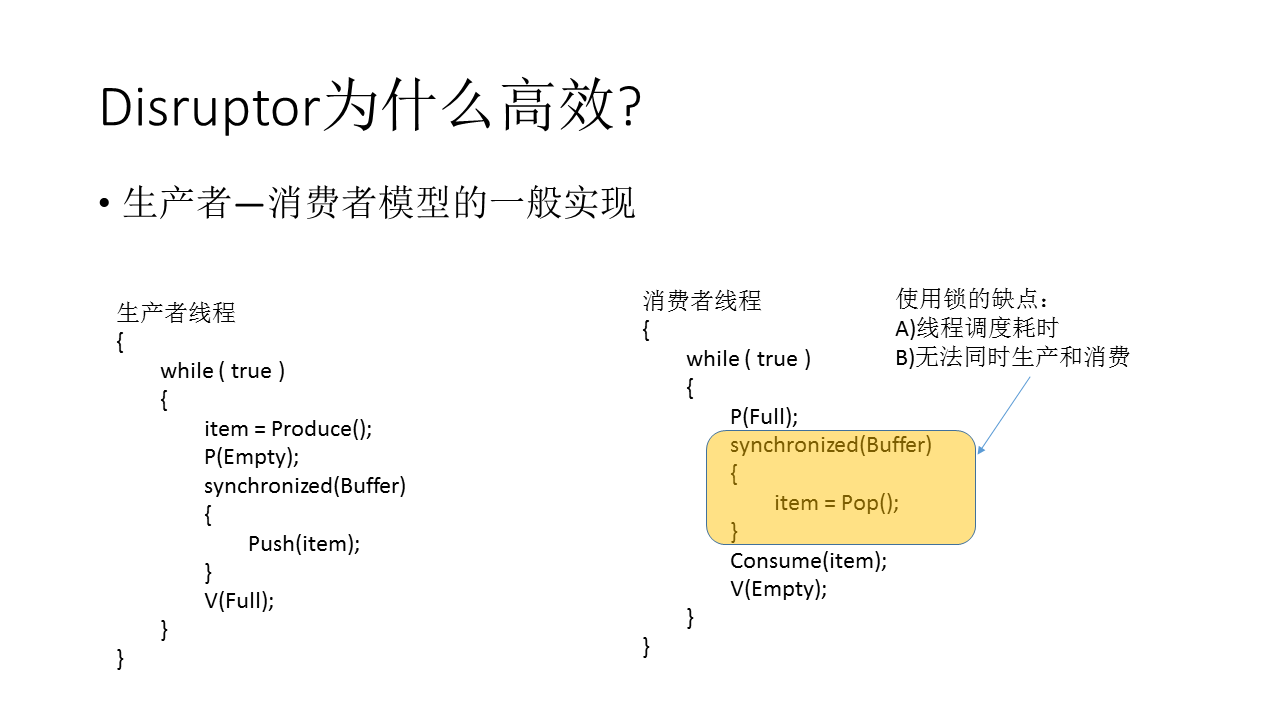

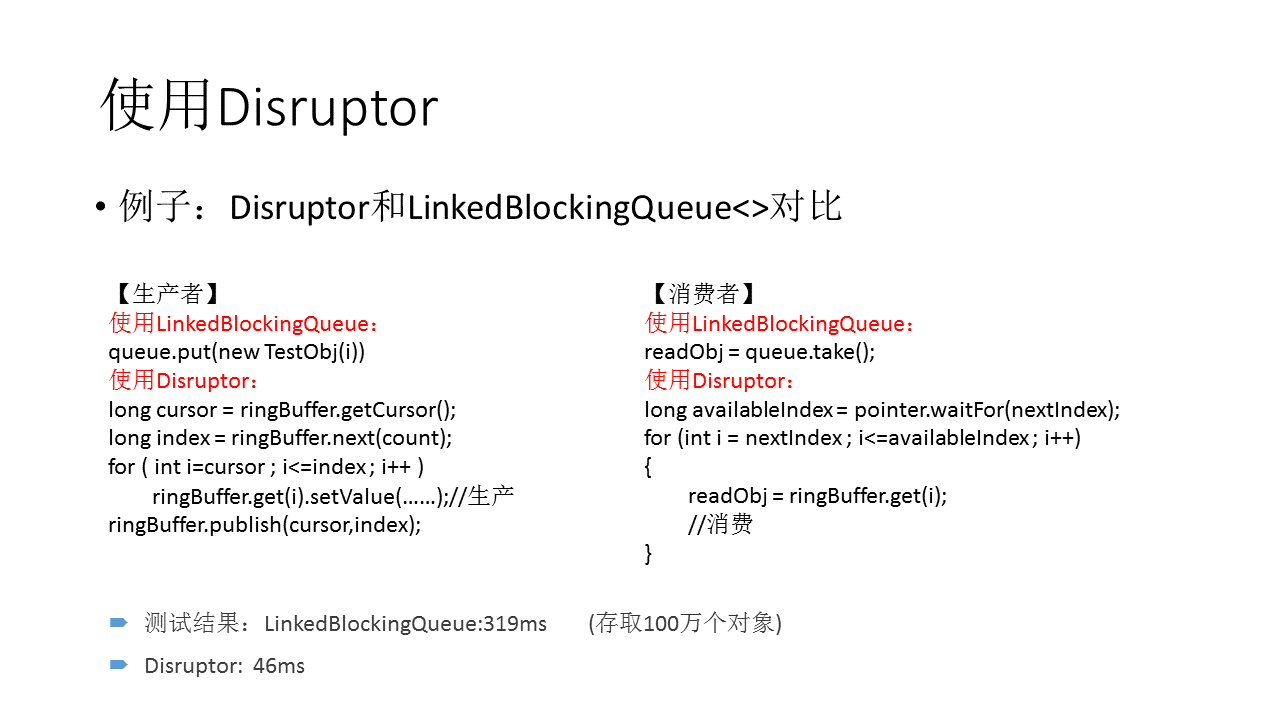

The best way to understand what the Disruptor is, is to compare it with something well understood and quite similar in purpose. For the Disruptor, that is Java's BlockingQueue. Like a queue, the Disruptor moves data (for example, messages or events) between threads within the same process. However, the Disruptor provides some key features that distinguish it from a queue:

• Multicast of events to consumers, with a consumer dependency graph.

• Pre-allocated memory for events.

• Optionally lock-free.
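The first two features can be illustrated with a minimal, single-threaded C++ sketch. This is not the Disruptor's API: `Event`, `TinyRing`, `publish` and `drain` are hypothetical names chosen for illustration, and the sketch deliberately omits thread safety and overwrite protection. It only shows that slots are allocated once and reused, and that every consumer sees every event because each one keeps its own cursor.

```cpp
#include <array>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical event type; all slots are allocated once, up front.
struct Event { std::int64_t value = 0; };

// Minimal single-threaded ring: illustrates pre-allocation and multicast only.
// No thread safety and no protection against overwriting unread slots.
template <std::size_t N>
class TinyRing {
    static_assert((N & (N - 1)) == 0, "N must be a power of two");
public:
    // Producer side: reuse the slot at the next sequence instead of allocating.
    void publish(std::int64_t value) {
        slots_[static_cast<std::size_t>(next_) & (N - 1)].value = value;
        ++next_;
    }

    // Consumer side: each consumer passes its own cursor, so consuming an
    // event does not remove it for the other consumers (multicast).
    std::vector<std::int64_t> drain(std::int64_t &cursor) const {
        std::vector<std::int64_t> seen;
        while (cursor < next_) {
            seen.push_back(slots_[static_cast<std::size_t>(cursor) & (N - 1)].value);
            ++cursor;
        }
        return seen;
    }

private:
    std::array<Event, N> slots_{};  // pre-allocated storage
    std::int64_t next_ = 0;         // next sequence to publish
};
```

With a `TinyRing<8>`, publishing 1, 2, 3 and then draining with two independent cursors yields `{1, 2, 3}` for both, whereas a queue would hand each element to only one consumer.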

Core concepts

Before we can understand how the Disruptor works, it is worth defining a number of terms that are used throughout the documentation and the code. For those with a DDD bent, think of this as the ubiquitous language of the Disruptor domain.

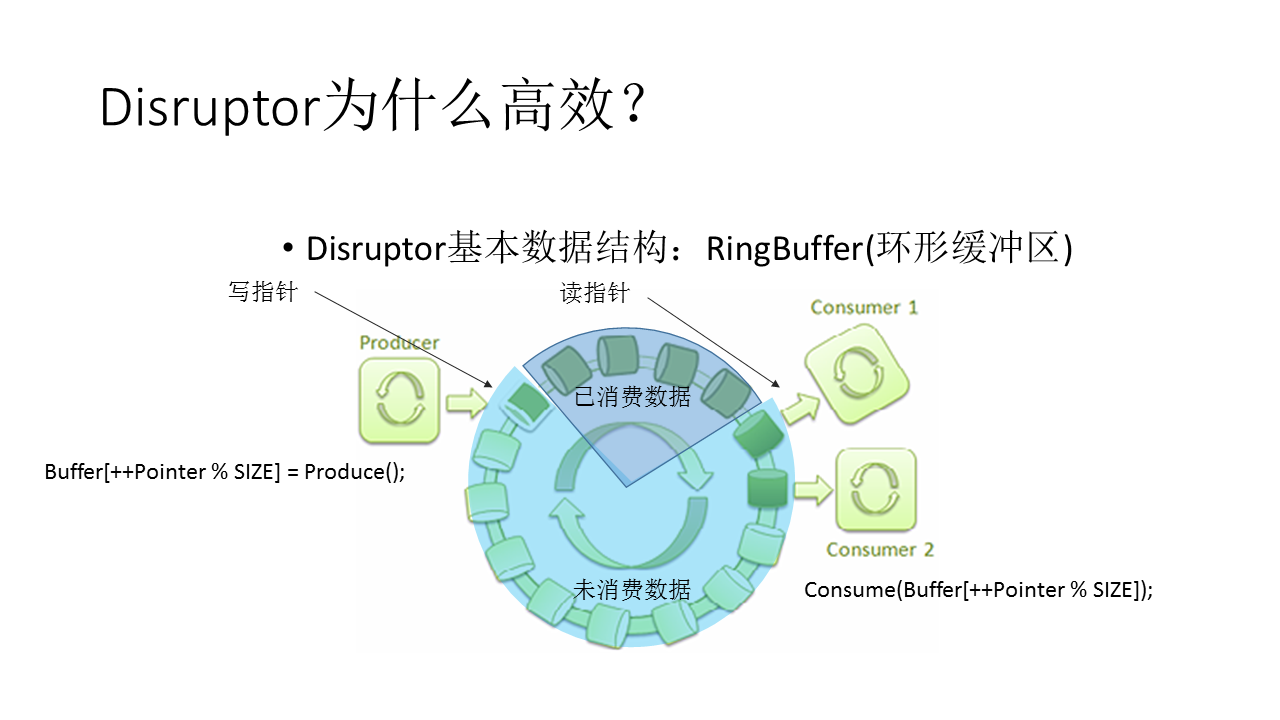

• Ring Buffer: the Ring Buffer is often considered the main aspect of the Disruptor. However, from 3.0 onwards it is only responsible for storing and updating the data (Events) that move through the Disruptor. For some advanced use cases the user can replace it completely.

• Sequence: the Disruptor uses Sequences to identify how far a particular component has progressed. Each consumer (EventProcessor) maintains a Sequence, as does the Disruptor itself. Most of the concurrent code relies on the movement of these Sequence values, so the Sequence supports many of the current features of an AtomicLong. In fact, the only real difference between the two is that the Sequence contains additional functionality to prevent false sharing between Sequences and other values.

• Sequencer: the Sequencer is the real core of the Disruptor. The two implementations of this interface (single producer, multi producer) implement all of the concurrent algorithms used for fast, correct passing of data between producers and consumers.

• Sequence Barrier: the Sequence Barrier is produced by the Sequencer and holds references to the Sequencer's main published Sequence and to the Sequences of any dependent consumers. It contains the logic that determines whether any events are available for the consumer to process.

• Wait Strategy: the Wait Strategy determines how a consumer waits for a producer to place events into the Disruptor. More details are available in the Disruptor documentation's section on being optionally lock-free.

• Event: the unit of data passed from producer to consumer. There is no specific code representation of the Event, because it is defined entirely by the user.

• EventProcessor: the main event loop for handling events from the Disruptor; it owns the consumer's Sequence. There is a single representation called BatchEventProcessor, which contains an efficient implementation of the event loop and calls back into a user-supplied implementation of the EventHandler interface.

• EventHandler: an interface implemented by the user that represents a consumer for the Disruptor.

• Producer: the user code that calls the Disruptor to enqueue Events. This concept also has no representation in the code.
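To make these terms more concrete, here is a rough C++ sketch of one producer and one consumer coordinating through two sequences. It is only an illustration under stated assumptions, not the Disruptor's Java implementation: `Sequence`, `Event`, `RING_SIZE` and `run_pipeline` are hypothetical names, 64 bytes is an assumed cache-line size, and the wait strategy is a simple spin with `yield`.

```cpp
#include <array>
#include <atomic>
#include <cstddef>
#include <cstdint>
#include <thread>

// Sequence: an atomic counter given its own cache line to limit false sharing.
// 64 bytes is an assumed cache-line size.
struct alignas(64) Sequence {
    std::atomic<std::int64_t> value{-1};
    std::int64_t get() const { return value.load(std::memory_order_acquire); }
    void set(std::int64_t v) { value.store(v, std::memory_order_release); }
};

struct Event { std::int64_t payload = 0; };  // user-defined unit of data

constexpr std::size_t RING_SIZE = 8;         // must be a power of two

// Runs one producer and one consumer; returns the sum the consumer observed.
std::int64_t run_pipeline(std::int64_t eventCount) {
    std::array<Event, RING_SIZE> ring{};     // Ring Buffer: pre-allocated storage
    Sequence published;                      // last sequence the producer published
    Sequence consumed;                       // the consumer's own Sequence
    std::int64_t sum = 0;

    std::thread consumer([&] {               // plays the role of an EventProcessor
        for (std::int64_t next = 0; next < eventCount; ++next) {
            // Wait strategy (spin with yield) until `next` has been published.
            while (published.get() < next) { std::this_thread::yield(); }
            // EventHandler work: here we just accumulate the payload.
            sum += ring[static_cast<std::size_t>(next) & (RING_SIZE - 1)].payload;
            consumed.set(next);
        }
    });

    for (std::int64_t seq = 0; seq < eventCount; ++seq) {   // Producer
        // Gating: the slot for `seq` was last used by `seq - RING_SIZE`,
        // so wait until the consumer has finished with that sequence.
        while (seq - static_cast<std::int64_t>(RING_SIZE) > consumed.get()) {
            std::this_thread::yield();
        }
        ring[static_cast<std::size_t>(seq) & (RING_SIZE - 1)].payload = seq;
        published.set(seq);                  // release: makes the slot visible
    }

    consumer.join();                         // after join, reading `sum` is safe
    return sum;
}
```

Calling `run_pipeline(1000)` pushes the payloads 0 to 999 through an 8-slot ring, so the consumer's sum is 499500. The release store in `set` paired with the acquire load in `get` is what makes a slot's contents visible to the other thread before its sequence number is observed.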

Aeron

Aeron is an OSI layer 4 transport for message-oriented streams. It works over UDP or IPC and supports both unicast and multicast. Its main goal is to provide an efficient and reliable connection with low and predictable latency. Aeron has Java, C++ and .NET clients.

When should you use it?

High and predictable performance is Aeron's main advantage. It is most useful in applications that require low latency, high throughput (e.g. sending large files), or both (Akka remoting can use Aeron).

If it can work over UDP, why not just use UDP?

Aeron's main goal is high performance, which is why it works over UDP but does not support TCP. So what does Aeron provide on top of UDP?

Aeron provides OSI Layer 4 (Transport) services. It supports the following features:

1. Connection Oriented Communication

2. Reliability

3. Flow Control

4. Congestion Avoidance/Control

5. Multiplexing

Architecture

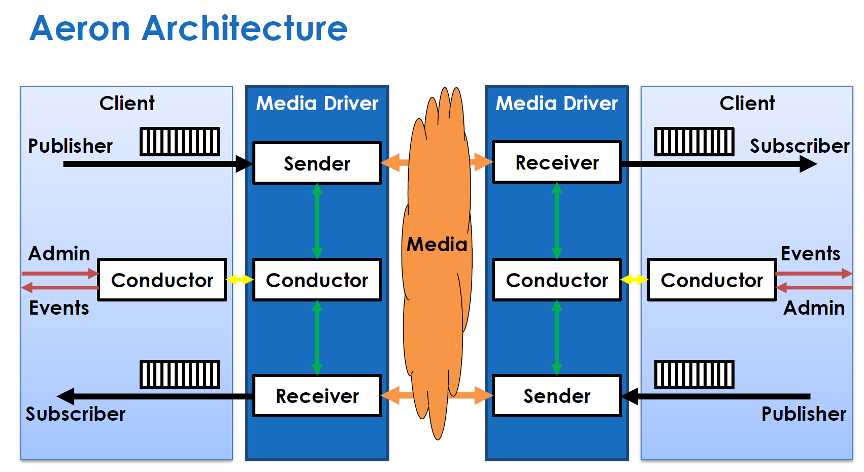

Aeron uses unidirectional connections. If you need to send requests and receive responses, you should use two connections. A Publisher and a Media Driver are used to send a message; a Subscriber and a Media Driver are used to receive one. The client talks to the Media Driver via shared memory.

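Why discuss these two libraries together? The Disruptor's core data structure, the circular buffer, is implemented in Java, mainly because Java has good support for concurrency and adopted a memory model relatively early. Since C++11, however, C++ has also made great progress in concurrency, and Aeron emerged against this background. Although its core code is still Java, the design of its C++ client also implements a number of valuable data structures, such as OneToOneRingBuffer and ManyToOneRingBuffer.

The following uses OneToOneRingBuffer as an example: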

    /*
     * Copyright 2014-2020 Real Logic Limited.
     *
     * Licensed under the Apache License, Version 2.0 (the "License");
     * you may not use this file except in compliance with the License.
     * You may obtain a copy of the License at
     *
     * https://www.apache.org/licenses/LICENSE-2.0
     *
     * Unless required by applicable law or agreed to in writing, software
     * distributed under the License is distributed on an "AS IS" BASIS,
     * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
     * See the License for the specific language governing permissions and
     * limitations under the License.
     */

    #ifndef AERON_RING_BUFFER_ONE_TO_ONE_H
    #define AERON_RING_BUFFER_ONE_TO_ONE_H

    #include <climits>
    #include <functional>
    #include <algorithm>

    #include "util/Index.h"
    #include "util/LangUtil.h"
    #include "AtomicBuffer.h"
    #include "Atomic64.h"
    #include "RingBufferDescriptor.h"
    #include "RecordDescriptor.h"

    namespace aeron { namespace concurrent { namespace ringbuffer {

    class OneToOneRingBuffer
    {
    public:
        explicit OneToOneRingBuffer(concurrent::AtomicBuffer &buffer) :
            m_buffer(buffer)
        {
            m_capacity = buffer.capacity() - RingBufferDescriptor::TRAILER_LENGTH;

            RingBufferDescriptor::checkCapacity(m_capacity);

            m_maxMsgLength = m_capacity / 8;

            m_tailPositionIndex = m_capacity + RingBufferDescriptor::TAIL_POSITION_OFFSET;
            m_headCachePositionIndex = m_capacity + RingBufferDescriptor::HEAD_CACHE_POSITION_OFFSET;
            m_headPositionIndex = m_capacity + RingBufferDescriptor::HEAD_POSITION_OFFSET;
            m_correlationIdCounterIndex = m_capacity + RingBufferDescriptor::CORRELATION_COUNTER_OFFSET;
            m_consumerHeartbeatIndex = m_capacity + RingBufferDescriptor::CONSUMER_HEARTBEAT_OFFSET;
        }

        OneToOneRingBuffer(const OneToOneRingBuffer &) = delete;
        OneToOneRingBuffer &operator=(const OneToOneRingBuffer &) = delete;

        inline util::index_t capacity() const
        {
            return m_capacity;
        }

        bool write(
            std::int32_t msgTypeId, concurrent::AtomicBuffer &srcBuffer, util::index_t srcIndex, util::index_t length)
        {
            RecordDescriptor::checkMsgTypeId(msgTypeId);
            checkMsgLength(length);

            const util::index_t recordLength = length + RecordDescriptor::HEADER_LENGTH;
            const util::index_t requiredCapacity = util::BitUtil::align(recordLength, RecordDescriptor::ALIGNMENT);
            const util::index_t mask = m_capacity - 1;

            std::int64_t head = m_buffer.getInt64(m_headCachePositionIndex);
            std::int64_t tail = m_buffer.getInt64(m_tailPositionIndex);
            const util::index_t availableCapacity = m_capacity - (util::index_t)(tail - head);

            if (requiredCapacity > availableCapacity)
            {
                head = m_buffer.getInt64Volatile(m_headPositionIndex);

                if (requiredCapacity > (m_capacity - (util::index_t)(tail - head)))
                {
                    return false;
                }

                m_buffer.putInt64(m_headCachePositionIndex, head);
            }

            util::index_t padding = 0;
            auto recordIndex = static_cast<util::index_t>(tail & mask);
            const util::index_t toBufferEndLength = m_capacity - recordIndex;

            if (requiredCapacity > toBufferEndLength)
            {
                auto headIndex = static_cast<std::int32_t>(head & mask);

                if (requiredCapacity > headIndex)
                {
                    head = m_buffer.getInt64Volatile(m_headPositionIndex);
                    headIndex = static_cast<std::int32_t>(head & mask);

                    if (requiredCapacity > headIndex)
                    {
                        return false;
                    }

                    m_buffer.putInt64Ordered(m_headCachePositionIndex, head);
                }

                padding = toBufferEndLength;
            }

            if (0 != padding)
            {
                m_buffer.putInt64Ordered(
                    recordIndex, RecordDescriptor::makeHeader(padding, RecordDescriptor::PADDING_MSG_TYPE_ID));
                recordIndex = 0;
            }

            m_buffer.putBytes(RecordDescriptor::encodedMsgOffset(recordIndex), srcBuffer, srcIndex, length);
            m_buffer.putInt64Ordered(recordIndex, RecordDescriptor::makeHeader(recordLength, msgTypeId));
            m_buffer.putInt64Ordered(m_tailPositionIndex, tail + requiredCapacity + padding);

            return true;
        }

        int read(const handler_t &handler, int messageCountLimit)
        {
            const std::int64_t head = m_buffer.getInt64(m_headPositionIndex);
            const auto headIndex = static_cast<std::int32_t>(head & (m_capacity - 1));
            const std::int32_t contiguousBlockLength = m_capacity - headIndex;
            int messagesRead = 0;
            int bytesRead = 0;

            auto cleanup = util::InvokeOnScopeExit {
                [&]()
                {
                    if (bytesRead != 0)
                    {
                        m_buffer.setMemory(headIndex, bytesRead, 0);
                        m_buffer.putInt64Ordered(m_headPositionIndex, head + bytesRead);
                    }
                }};

            while ((bytesRead < contiguousBlockLength) && (messagesRead < messageCountLimit))
            {
                const std::int32_t recordIndex = headIndex + bytesRead;
                const std::int64_t header = m_buffer.getInt64Volatile(recordIndex);
                const std::int32_t recordLength = RecordDescriptor::recordLength(header);

                if (recordLength <= 0)
                {
                    break;
                }

                bytesRead += util::BitUtil::align(recordLength, RecordDescriptor::ALIGNMENT);

                const std::int32_t msgTypeId = RecordDescriptor::messageTypeId(header);
                if (RecordDescriptor::PADDING_MSG_TYPE_ID == msgTypeId)
                {
                    continue;
                }

                ++messagesRead;
                handler(
                    msgTypeId,
                    m_buffer,
                    RecordDescriptor::encodedMsgOffset(recordIndex),
                    recordLength - RecordDescriptor::HEADER_LENGTH);
            }

            return messagesRead;
        }

        inline int read(const handler_t &handler)
        {
            return read(handler, INT_MAX);
        }

        inline util::index_t maxMsgLength() const
        {
            return m_maxMsgLength;
        }

        inline std::int64_t nextCorrelationId()
        {
            return m_buffer.getAndAddInt64(m_correlationIdCounterIndex, 1);
        }

        inline void consumerHeartbeatTime(std::int64_t time)
        {
            m_buffer.putInt64Ordered(m_consumerHeartbeatIndex, time);
        }

        inline std::int64_t consumerHeartbeatTime() const
        {
            return m_buffer.getInt64Volatile(m_consumerHeartbeatIndex);
        }

        inline std::int64_t producerPosition() const
        {
            return m_buffer.getInt64Volatile(m_tailPositionIndex);
        }

        inline std::int64_t consumerPosition() const
        {
            return m_buffer.getInt64Volatile(m_headPositionIndex);
        }

        inline std::int32_t size() const
        {
            std::int64_t headBefore;
            std::int64_t tail;
            std::int64_t headAfter = m_buffer.getInt64Volatile(m_headPositionIndex);

            do
            {
                headBefore = headAfter;
                tail = m_buffer.getInt64Volatile(m_tailPositionIndex);
                headAfter = m_buffer.getInt64Volatile(m_headPositionIndex);
            }
            while (headAfter != headBefore);

            return static_cast<std::int32_t>(tail - headAfter);
        }

        bool unblock()
        {
            return false;
        }

    private:
        concurrent::AtomicBuffer &m_buffer;
        util::index_t m_capacity;
        util::index_t m_maxMsgLength;
        util::index_t m_headPositionIndex;
        util::index_t m_headCachePositionIndex;
        util::index_t m_tailPositionIndex;
        util::index_t m_correlationIdCounterIndex;
        util::index_t m_consumerHeartbeatIndex;

        inline void checkMsgLength(util::index_t length) const
        {
            if (length > m_maxMsgLength)
            {
                throw util::IllegalArgumentException(
                    "encoded message exceeds maxMsgLength of " + std::to_string(m_maxMsgLength) +
                    " length=" + std::to_string(length),
                    SOURCEINFO);
            }
        }
    };

    }}}

    #endif
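The record arithmetic inside `write()` and `read()` can be isolated in a small standalone sketch. The helpers below are illustrative re-implementations, not Aeron's own code: the values `HEADER_LENGTH = 8` and `ALIGNMENT = 8` and the header layout (message type id in the high 32 bits, record length in the low 32 bits) are assumptions about what `RecordDescriptor` does, and `place` is a hypothetical helper that reproduces only the wrap-around decision, leaving out the head checks.

```cpp
#include <cassert>
#include <cstdint>

// Assumed values; the real ones live in Aeron's RecordDescriptor.
constexpr std::int32_t HEADER_LENGTH = 8;
constexpr std::int32_t ALIGNMENT = 8;

// Round `value` up to the next multiple of `alignment` (a power of two).
constexpr std::int32_t align(std::int32_t value, std::int32_t alignment) {
    return (value + (alignment - 1)) & ~(alignment - 1);
}

// Pack type id (high 32 bits) and record length (low 32 bits) into one
// 64-bit header, so a single ordered store publishes both. Assumed layout.
std::int64_t makeHeader(std::int32_t length, std::int32_t msgTypeId) {
    const std::uint64_t high = static_cast<std::uint64_t>(static_cast<std::uint32_t>(msgTypeId)) << 32;
    const std::uint64_t low = static_cast<std::uint32_t>(length);
    return static_cast<std::int64_t>(high | low);
}

std::int32_t recordLength(std::int64_t header) {
    return static_cast<std::int32_t>(static_cast<std::uint64_t>(header) & 0xFFFFFFFFu);
}

std::int32_t messageTypeId(std::int64_t header) {
    return static_cast<std::int32_t>(static_cast<std::uint64_t>(header) >> 32);
}

// Hypothetical helper: where does a record of `required` bytes go when the
// producer position is `tail`? If it does not fit before the end of the
// buffer, the remainder becomes padding and the record starts at index 0.
struct Placement {
    std::int32_t recordIndex;
    std::int32_t padding;
};

Placement place(std::int64_t tail, std::int32_t capacity, std::int32_t required) {
    assert((capacity & (capacity - 1)) == 0);  // capacity must be a power of two
    const std::int32_t index = static_cast<std::int32_t>(tail & (capacity - 1));
    const std::int32_t toBufferEnd = capacity - index;
    if (required > toBufferEnd) {
        return Placement{0, toBufferEnd};
    }
    return Placement{index, 0};
}
```

Under these assumptions a 24-byte payload needs `align(24 + HEADER_LENGTH, ALIGNMENT) == 32` bytes, and `place(1000, 1024, 32)` returns index 0 with 24 bytes of padding because only 24 bytes remain before the end of the buffer. Because capacity is a power of two, `tail & (capacity - 1)` maps the ever-growing position to a buffer index without a modulo operation.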

Usage example

    // buff, srcBuff, HEAD_COUNTER_INDEX, TAIL_COUNTER_INDEX and MSG_TYPE
    // are defined elsewhere (not shown here).
    AtomicBuffer ab(&buff[0], buff.size());
    OneToOneRingBuffer ringBuffer(ab);

    // Start with an empty ring: head and tail both at position 0.
    util::index_t tail = 0;
    util::index_t head = 0;
    ab.putInt64(HEAD_COUNTER_INDEX, head);
    ab.putInt64(TAIL_COUNTER_INDEX, tail);

    std::cout << "circular buffer capacity is : " << ringBuffer.capacity() << std::endl;

    // Build a 24-byte message: an 8-byte integer followed by a 16-byte string.
    util::index_t length = 24;
    util::index_t recordLength = length + RecordDescriptor::HEADER_LENGTH;
    AtomicBuffer srcAb(&srcBuff[0], srcBuff.size());
    srcAb.putInt64(0, 999);
    srcAb.putStringWithoutLength(8, "0123456789012345");

    // Keep writing the same message until the ring buffer is full.
    while (ringBuffer.write(MSG_TYPE, srcAb, 0, length))
    {
    }

    // Read every message back; the handler is invoked once per message.
    int timesCalled = 0;
    const int messagesRead = ringBuffer.read(
        [&](std::int32_t msgTypeId, concurrent::AtomicBuffer& internalBuf, util::index_t valPosition, util::index_t length)
        {
            timesCalled++;
            std::cout << "circular buffer read value is : " << internalBuf.getInt64(valPosition)
                      << " string value: " << internalBuf.getStringWithoutLength(valPosition + 8, length - 8)
                      << " called times: " << timesCalled << std::endl;
        });

Output

    circular buffer capacity is : 1024
    circular buffer read value is : 999 string value: 0123456789012345 called times: 1
    circular buffer read value is : 999 string value: 0123456789012345 called times: 2
    circular buffer read value is : 999 string value: 0123456789012345 called times: 3
    circular buffer read value is : 999 string value: 0123456789012345 called times: 4
    circular buffer read value is : 999 string value: 0123456789012345 called times: 5
    circular buffer read value is : 999 string value: 0123456789012345 called times: 6
    circular buffer read value is : 999 string value: 0123456789012345 called times: 7
    circular buffer read value is : 999 string value: 0123456789012345 called times: 8
    circular buffer read value is : 999 string value: 0123456789012345 called times: 9
    circular buffer read value is : 999 string value: 0123456789012345 called times: 10
    circular buffer read value is : 999 string value: 0123456789012345 called times: 11
    circular buffer read value is : 999 string value: 0123456789012345 called times: 12
    circular buffer read value is : 999 string value: 0123456789012345 called times: 13
    circular buffer read value is : 999 string value: 0123456789012345 called times: 14
    circular buffer read value is : 999 string value: 0123456789012345 called times: 15
    circular buffer read value is : 999 string value: 0123456789012345 called times: 16
    circular buffer read value is : 999 string value: 0123456789012345 called times: 17
    circular buffer read value is : 999 string value: 0123456789012345 called times: 18
    circular buffer read value is : 999 string value: 0123456789012345 called times: 19
    circular buffer read value is : 999 string value: 0123456789012345 called times: 20
    circular buffer read value is : 999 string value: 0123456789012345 called times: 21
    circular buffer read value is : 999 string value: 0123456789012345 called times: 22
    circular buffer read value is : 999 string value: 0123456789012345 called times: 23
    circular buffer read value is : 999 string value: 0123456789012345 called times: 24
    circular buffer read value is : 999 string value: 0123456789012345 called times: 25
    circular buffer read value is : 999 string value: 0123456789012345 called times: 26
    circular buffer read value is : 999 string value: 0123456789012345 called times: 27
    circular buffer read value is : 999 string value: 0123456789012345 called times: 28
    circular buffer read value is : 999 string value: 0123456789012345 called times: 29
    circular buffer read value is : 999 string value: 0123456789012345 called times: 30
    circular buffer read value is : 999 string value: 0123456789012345 called times: 31
    circular buffer read value is : 999 string value: 0123456789012345 called times: 32
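The count of 32 reads follows from the capacity check in `write()`. The sketch below replays only that check with plain integers, without any Aeron types. `countWritesUntilFull` is a hypothetical helper, and the header length and alignment of 8 bytes are assumed values; the capacity of 1024 and the 24-byte payload come from the example above.

```cpp
#include <cstdint>

// Replays the "is there room?" test from write() with a consumer that never
// advances (head stays at 0), counting how many records fit before the
// producer is refused. Header length and alignment of 8 are assumptions.
int countWritesUntilFull(std::int32_t capacity, std::int32_t payloadLength) {
    const std::int32_t headerLength = 8;
    const std::int32_t alignment = 8;
    // Space one record occupies: payload plus header, rounded up to alignment.
    const std::int32_t required =
        (payloadLength + headerLength + alignment - 1) & ~(alignment - 1);

    const std::int64_t head = 0;   // nothing has been consumed yet
    std::int64_t tail = 0;         // producer position
    int writes = 0;

    // Same condition as write(): fail once required > capacity - (tail - head).
    while (required <= capacity - static_cast<std::int32_t>(tail - head)) {
        tail += required;
        ++writes;
    }
    return writes;
}
```

Each 24-byte message occupies 32 bytes once the header is added, so `countWritesUntilFull(1024, 24)` returns 32. This matches the 32 handler invocations in the output, since the write loop in the usage example runs until the buffer refuses another record.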

References

https://github.com/LMAX-Exchange/disruptor

https://github.com/real-logic/aeron

https://medium.com/@pirogov.alexey/aeron-low-latency-transport-protocol-9493f8d504e8

https://github.com/LMAX-Exchange/disruptor/wiki/Getting-Started