I. CPU multi-level cache - cache coherency

1. CPU multi-level cache

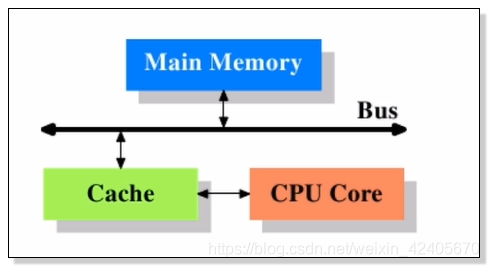

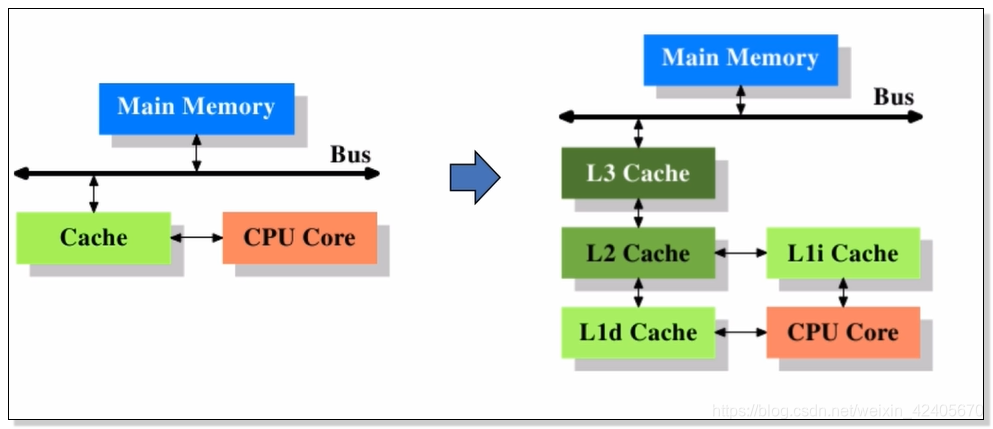

The figure shows a simple CPU cache configuration. Data that is read or stored travels over a dedicated fast path between the CPU core and the cache. In this simplified figure, main memory and the cache are both connected to the system bus, which is also used to communicate with other components.

Soon after caches were introduced, systems became more complex. The speed gap between the cache and main memory widened again, until a second cache was added behind the existing one (L1d, now called the level-1 cache). The new cache is larger than the level-1 cache but slower; simply enlarging the level-1 cache was not economically feasible. This is how the L2 cache came about. Some systems today even have a three-level cache.

2. CPU cache

Why does the CPU need a cache?

The CPU runs at a much higher frequency than main memory can keep up with, so within a processor clock cycle the CPU often has to wait for main memory, which wastes resources. Caches were introduced to ease this speed mismatch between the CPU and memory (structure: CPU -> cache -> memory).

What makes a CPU cache worthwhile?

1) Temporal locality: if a piece of data is accessed, it is likely to be accessed again in the near future;

2) Spatial locality: if a piece of data is accessed, the data adjacent to it is likely to be accessed soon as well;
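The two kinds of locality can be sketched in Java. This is an illustrative example only: the class `LocalityDemo` and its method names are hypothetical, and the actual speed difference depends on the JVM and the hardware. The accumulator `sum` is reused on every iteration (temporal locality), and the row-by-row loop touches adjacent array elements (spatial locality), whereas the column-by-column loop jumps between inner arrays.

```java
// Illustrative sketch; class and method names are hypothetical.
class LocalityDemo {
    // Row-by-row traversal: consecutive accesses touch adjacent elements of
    // the same inner array, so a fetched cache line is likely to be reused.
    static long sumRowMajor(int[][] grid) {
        long sum = 0; // reused on every iteration: temporal locality
        for (int row = 0; row < grid.length; row++) {
            for (int col = 0; col < grid[row].length; col++) {
                sum += grid[row][col];
            }
        }
        return sum;
    }

    // Column-by-column traversal: consecutive accesses jump between inner
    // arrays, so spatial locality is poor. Assumes a rectangular grid.
    static long sumColumnMajor(int[][] grid) {
        long sum = 0;
        int cols = grid.length == 0 ? 0 : grid[0].length;
        for (int col = 0; col < cols; col++) {
            for (int row = 0; row < grid.length; row++) {
                sum += grid[row][col];
            }
        }
        return sum;
    }

    // Builds a rows x cols grid where each cell holds row + col.
    static int[][] makeGrid(int rows, int cols) {
        int[][] grid = new int[rows][cols];
        for (int r = 0; r < rows; r++) {
            for (int c = 0; c < cols; c++) {
                grid[r][c] = r + c;
            }
        }
        return grid;
    }
}
```

Both methods compute the same sum; only the access pattern differs, which is the property a cache is sensitive to.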

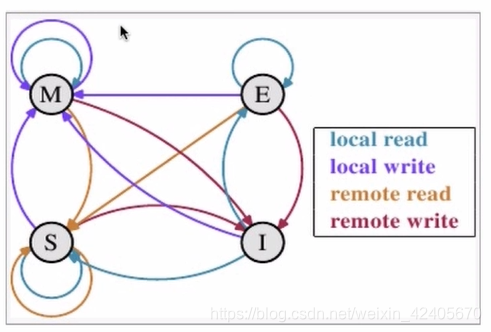

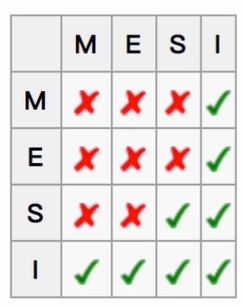

3. CPU multi-level cache - cache coherency (MESI)

- Keeps shared data coherent across the caches of multiple CPUs

M stands for Modified. The cache line is cached only in this CPU's cache and has been modified, so it is inconsistent with the data in main memory. The modified line must be written back to main memory at some later point, before any other CPU is allowed to read the corresponding main memory. Once the value has been written back to main memory, the cache line moves to the E state;

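E stands for Exclusive. The cache line is cached only in this CPU's cache, has not been modified, and is consistent with the data in main memory. At any time, when another CPU reads that memory, the line changes to the Shared state (S);

Likewise, when the CPU modifies the cache line, its state changes to M.

S stands for Shared. The cache line may be cached by several CPUs, and every cached copy is consistent with the data in main memory. When one CPU modifies the cache line, the copies held by all other CPUs are invalidated and move to the I state;

I stands for Invalid. The cache line is invalid because another CPU has modified it.

Four operations:

local read: read the data in the local cache

local write: write data to the local cache

remote read: read the data in from main memory

remote write: write the data back to main memory

State transition diagram: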

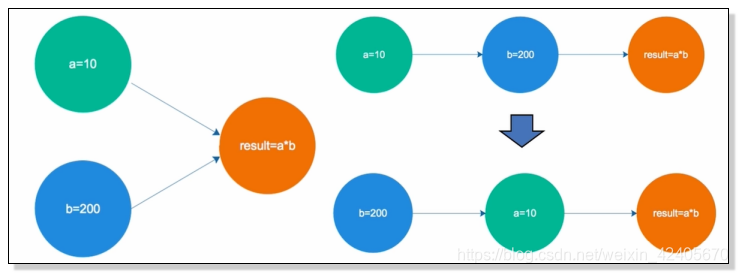
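The state changes described above can be sketched as a small state machine. This is a toy model, not a description of real hardware: the enum `MesiState` and the event names (`LOCAL_WRITE`, `OTHER_CPU_READ`, `OTHER_CPU_WRITE`, `WRITE_BACK`) are assumptions made for this example, and they do not map one-to-one onto the four operations listed above. A real coherence protocol has more cases, for example what happens when an invalid line is read locally.

```java
// Toy model of the MESI transitions described in the text.
// Enum and event names are hypothetical; real protocols have more cases.
enum MesiState {
    MODIFIED, EXCLUSIVE, SHARED, INVALID;

    enum Event { LOCAL_WRITE, OTHER_CPU_READ, OTHER_CPU_WRITE, WRITE_BACK }

    // Returns the state of this CPU's copy of the cache line after the event.
    MesiState next(Event event) {
        switch (event) {
            case LOCAL_WRITE:
                // This CPU modified the line: its copy now differs from main memory.
                return MODIFIED;
            case OTHER_CPU_WRITE:
                // Another CPU modified the line: this copy is no longer valid.
                return INVALID;
            case OTHER_CPU_READ:
                // A valid copy becomes shared once another CPU reads the same
                // memory (a modified line is written back first).
                return this == INVALID ? INVALID : SHARED;
            case WRITE_BACK:
                // A modified line written back to main memory is consistent again.
                return this == MODIFIED ? EXCLUSIVE : this;
            default:
                throw new IllegalArgumentException("unknown event: " + event);
        }
    }
}
```

For example, `MesiState.EXCLUSIVE.next(MesiState.Event.OTHER_CPU_READ)` yields `SHARED`, matching the description of the E state.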

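II. CPU multi-level cache - out-of-order execution optimization

What it means:

- To increase computation speed, the processor may execute instructions in an order that differs from the original code order

a: Order in which the code writes the data: first a=10, then b=200, and finally compute the value of a*b

b: Order under out-of-order execution: b=200 is executed first, then a=10. On a single core this has no effect on the result.

On multiple cores, reordering can cause the final result computed from the data written by each core to differ from what was expected.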

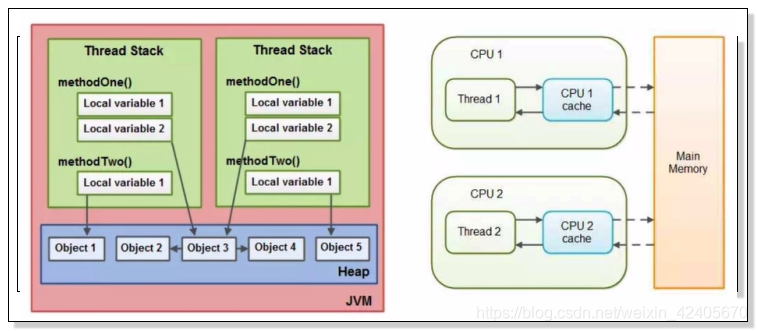
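One common way to rule out the multi-core problem in Java is to publish the data through a `volatile` field. The sketch below reuses a=10 and b=200 from the example above; the class `ReorderingDemo` and the flag `ready` are hypothetical names, and this is only one possible approach. Writes made before a volatile write are visible to a thread that subsequently reads the same volatile field, so the reader cannot observe the flag without also observing both values.

```java
// Minimal sketch; class name and the `ready` flag are hypothetical.
class ReorderingDemo {
    static int a;
    static int b;
    // The volatile write below may not be reordered before the writes to a and b.
    static volatile boolean ready;

    static int run() {
        a = 0;
        b = 0;
        ready = false;
        int[] result = new int[1];

        Thread reader = new Thread(() -> {
            while (!ready) {
                // busy-wait until the writer publishes the data
            }
            result[0] = a * b; // sees a=10 and b=200 once ready is true
        });
        reader.start();

        a = 10;
        b = 200;
        ready = true; // volatile write: publishes a and b to the reader

        try {
            reader.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return result[0];
    }

    public static void main(String[] args) {
        System.out.println(run());
    }
}
```

Without `volatile` on `ready`, the reader would have no guarantee of ever seeing the flag, or of seeing both writes once it did.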

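III. Java memory model

1. Java Memory Model (JMM)

The Java Memory Model specification defines how and when a thread can see the values of shared variables modified by other threads, and how to synchronize access to shared variables when necessary.

Java memory model: the heap in Java is a runtime data area managed by the garbage collector. The advantage of the heap is that memory can be allocated dynamically and the lifetime of the data does not have to be declared to the compiler in advance, because memory is allocated at run time. The garbage collector automatically finds and reclaims data that is no longer used.

Its drawback is that, because memory is allocated dynamically at run time, access is somewhat slower.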

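The Java stack has the advantage that access is faster than the heap, second only to the registers in the machine, and data on the stack can be shared (within one thread; each thread's stack is private to that thread);

Its drawback is that the size and lifetime of data stored on the stack must be known in advance, which makes it less flexible. The stack mainly holds variables of primitive types, such as int, short, float, double and char, as well as object handles (references).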

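The Java memory model requires the call stack and local variables to be stored on the thread stack, while objects are stored on the heap.

A local variable may be a reference to an object. In that case the reference (the local variable) is stored on the thread stack, but the object itself is stored on the heap. An object may have methods (methodOne(), methodTwo()), and the local variables of those methods are still stored on the thread stack, even though the object they belong to is on the heap.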

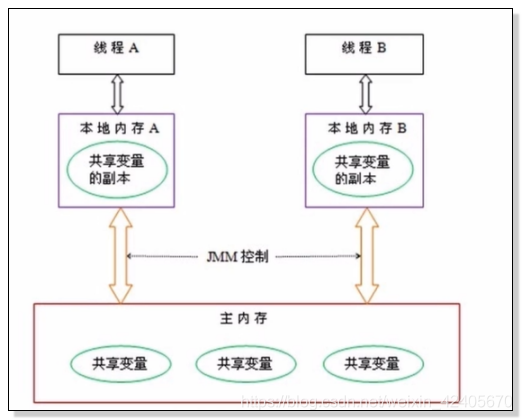
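The split between thread stack and heap can be sketched as follows. The names `MemoryLayoutDemo`, `MySharedObject`, `localVariable1` and `localVariable2` are hypothetical and chosen only for illustration. Each thread that calls `methodOne()` gets its own copies of the local variables, while both threads end up with references to the same heap object.

```java
// Illustrative sketch; all names here are hypothetical.
class MemoryLayoutDemo {
    static class MySharedObject {
        int member = 12345; // member variables live on the heap with the object
    }

    // One object on the heap, reachable from every thread.
    static final MySharedObject SHARED = new MySharedObject();

    static MySharedObject methodOne() {
        int localVariable1 = 45;                // primitive: stored on the calling thread's stack
        MySharedObject localVariable2 = SHARED; // reference on the stack, object on the heap
        localVariable1++;                       // changes only this call's own copy
        return localVariable2;
    }

    // Runs methodOne() on two threads and checks that both saw the same heap object.
    static boolean bothThreadsSeeSameObject() {
        MySharedObject[] seen = new MySharedObject[2];
        Thread t1 = new Thread(() -> seen[0] = methodOne());
        Thread t2 = new Thread(() -> seen[1] = methodOne());
        t1.start();
        t2.start();
        try {
            t1.join();
            t2.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return seen[0] == seen[1] && seen[0] == SHARED;
    }
}
```

Because the object is shared, any unsynchronized change to `member` from one thread is exactly the kind of access the memory model has to regulate.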

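2. Java memory model abstract structure diagram

When thread A operates on a shared variable, it copies the shared variable from main memory into its local memory, modifies the copy, and then synchronizes the copy back to main memory. In a concurrent environment, just as thread A is about to synchronize its modified copy back to main memory, thread B may copy the shared variable from main memory into local memory B and modify it. This is unsafe: the value thread B works with is already stale relative to thread A, so every later computation is built on a wrong basis.

Solution: have thread A and thread B acquire a lock, so that once one thread has copied the shared variable from main memory, no other thread is allowed to operate on it; that is, synchronize the threads. This resolves the read/write safety problem between concurrent threads.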

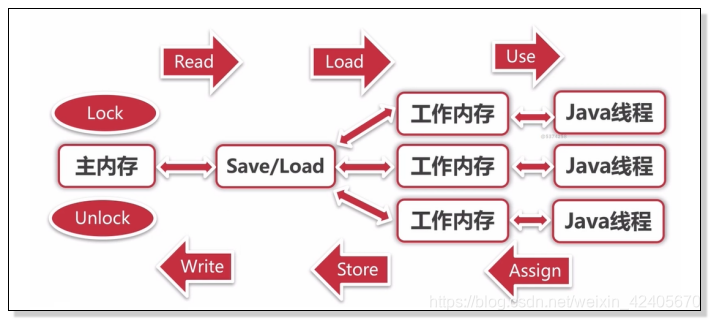
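The locking approach described above can be sketched with Java's `synchronized` keyword. This is a minimal example under stated assumptions: the class `SynchronizedCounterDemo` and its methods are hypothetical, and `synchronized` is only one of several ways to lock in Java. Each increment is a read-modify-write on the shared variable, and the lock ensures that only one thread performs it at a time.

```java
// Minimal sketch; class and method names are hypothetical.
class SynchronizedCounterDemo {
    private int count; // shared variable stored in the object on the heap

    // Only one thread at a time may run a synchronized method on this object,
    // so the read-modify-write of count++ cannot interleave with another thread.
    synchronized void increment() {
        count++;
    }

    synchronized int get() {
        return count;
    }

    // Starts threads A and B, each incrementing the same counter.
    static int runTwoThreads(int incrementsPerThread) {
        SynchronizedCounterDemo counter = new SynchronizedCounterDemo();
        Runnable work = () -> {
            for (int i = 0; i < incrementsPerThread; i++) {
                counter.increment();
            }
        };
        Thread a = new Thread(work, "A");
        Thread b = new Thread(work, "B");
        a.start();
        b.start();
        try {
            a.join();
            b.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return counter.get();
    }
}
```

With the lock in place, `runTwoThreads(10000)` always returns 20000. If `synchronized` were removed from `increment()`, some updates could be lost in the way the paragraph above describes.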

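3. Java memory model - the eight synchronization operations

- lock: acts on a variable in main memory; marks the variable as exclusively owned by one thread

- unlock: acts on a variable in main memory; releases a variable that is in the locked state, after which it can be locked by other threads

- read: acts on a variable in main memory; transfers the value of a variable from main memory to the thread's working memory, for use by the subsequent load operation

- load: acts on a variable in working memory; puts the value obtained from main memory by the read operation into the variable's copy in working memory

- use: acts on a variable in working memory; passes the value of a variable in working memory to the execution engine

- assign: acts on a variable in working memory; assigns a value received from the execution engine to the variable in working memory

- store: acts on a variable in working memory; transfers the value of a variable in working memory to main memory, for use by the subsequent write operation

- write: acts on a variable in main memory; puts the value transferred from working memory by the store operation into the variable in main memory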
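The operations above are conceptual steps in the memory model, not methods a program can call. Purely as an illustration, the toy simulation below models main memory and per-thread working memory as maps and walks through read, load, use, assign, store and write by hand. The class `JmmToyModel` and everything in it are hypothetical, and lock and unlock are deliberately left out, which is why the second update overwrites the first.

```java
import java.util.HashMap;
import java.util.Map;

// Toy, single-threaded simulation of the conceptual operations.
// All names are hypothetical; this is not how a JVM is implemented.
class JmmToyModel {
    static class WorkingMemory {
        private final Map<String, Integer> mainMemory;
        private final Map<String, Integer> copies = new HashMap<>();

        WorkingMemory(Map<String, Integer> mainMemory) {
            this.mainMemory = mainMemory;
        }

        void readAndLoad(String name) {
            int transferred = mainMemory.get(name); // read: take the value from main memory
            copies.put(name, transferred);          // load: put it into the working copy
        }

        int use(String name) {
            return copies.get(name); // use: hand the working copy to the "execution engine"
        }

        void assign(String name, int value) {
            copies.put(name, value); // assign: the "execution engine" sets the working copy
        }

        void storeAndWrite(String name) {
            int transferred = copies.get(name); // store: send the working copy to main memory
            mainMemory.put(name, transferred);  // write: put it into the main-memory variable
        }
    }

    // Two working memories both copy x = 0, both add 1, both write back.
    static int lostUpdate() {
        Map<String, Integer> mainMemory = new HashMap<>();
        mainMemory.put("x", 0);
        WorkingMemory a = new WorkingMemory(mainMemory);
        WorkingMemory b = new WorkingMemory(mainMemory);

        a.readAndLoad("x");
        b.readAndLoad("x");            // b copies x before a has written back
        a.assign("x", a.use("x") + 1);
        a.storeAndWrite("x");          // main memory: x = 1
        b.assign("x", b.use("x") + 1); // b still works with its stale copy, 0
        b.storeAndWrite("x");          // main memory: x = 1 again, a's update is lost
        return mainMemory.get("x");
    }
}
```

`lostUpdate()` returns 1 rather than 2, which is the stale-copy problem that lock and unlock are meant to prevent.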

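4. Java memory model - synchronization rules

① to copy a variable from main memory to working memory, read and load must be executed in that order; to synchronize a variable from working memory back to main memory, store and write must be executed in that order. The Java memory model only requires that these operations execute in order, not that they execute consecutively;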

② neither read and load nor store and write may have one operation of the pair occur alone;

③ a thread is not allowed to discard its most recent assign operation; that is, a variable changed in working memory must be synchronized back to main memory;

④ a thread is not allowed to synchronize data from its working memory back to main memory for no reason (that is, when no assign operation has occurred);

⑤ a new variable can only be created in main memory; working memory may not directly use a variable that has not been initialized (by load or assign). That is, before a use or store operation is performed on a variable, an assign or load operation must already have been performed on it;

⑥ a variable may be locked by only one thread at a time, but the same thread may perform the lock operation on it repeatedly; after locking several times, the variable is unlocked only after the same number of unlock operations. lock and unlock must appear in pairs;

⑦ performing a lock operation on a variable clears the value of that variable in working memory; before the execution engine uses the variable, a load or assign operation must be performed again to initialize its value;

⑧ if a variable has not been locked by a lock operation, the unlock operation may not be performed on it; a thread may also not unlock a variable that is locked by another thread;

Before performing the unlock operation on a variable, the variable must first be synchronized back to main memory (by performing the store and write operations)
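Rule ⑥ (the same thread may lock repeatedly and must unlock the same number of times) has a close library-level analogue in `java.util.concurrent.locks.ReentrantLock`. The sketch below uses that class only as an analogy: it is not the lock operation of the memory model itself, and the class `ReentrantLockDemo` is a hypothetical name.

```java
import java.util.concurrent.locks.ReentrantLock;

// Analogy for rule 6 using a library lock; the class name is hypothetical.
class ReentrantLockDemo {
    // Returns the hold count after two locks, after one unlock, and after two unlocks.
    static int[] holdCounts() {
        ReentrantLock lock = new ReentrantLock();
        int[] counts = new int[3];

        lock.lock();
        lock.lock();                     // the same thread may lock again
        counts[0] = lock.getHoldCount(); // held twice

        lock.unlock();
        counts[1] = lock.getHoldCount(); // one unlock is not enough: still held once

        lock.unlock();
        counts[2] = lock.getHoldCount(); // fully released after the matching unlock
        return counts;
    }

    // True if the lock is still held after locking twice and unlocking once.
    static boolean stillLockedAfterOneUnlock() {
        ReentrantLock lock = new ReentrantLock();
        lock.lock();
        lock.lock();
        lock.unlock();
        boolean locked = lock.isLocked();
        lock.unlock();
        return locked;
    }
}
```

In real code the unlock calls would normally sit in `finally` blocks so that each lock is always matched by an unlock.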

5. Java memory model - execution flow chart:

IV. Advantages and risks of concurrency

Summary:

- CPU multi-level cache: cache coherency, out-of-order execution optimization

- Java memory model: the JMM specification, the abstract structure, the eight synchronization operations and the synchronization rules

- Advantages and risks of Java concurrency