#!/usr/bin/python3
# coding=utf8

import requests
from bs4 import BeautifulSoup
import pymysql
import time

'''

Requirement: a video website I use has no search function, so I wrote a Python scraper that crawls the name and magnet link of every video on the site into a MySQL database. You can then search the database by keyword, get the download address and download the movies you like.

author: xiaoxiaohui

time: 2019-10-03

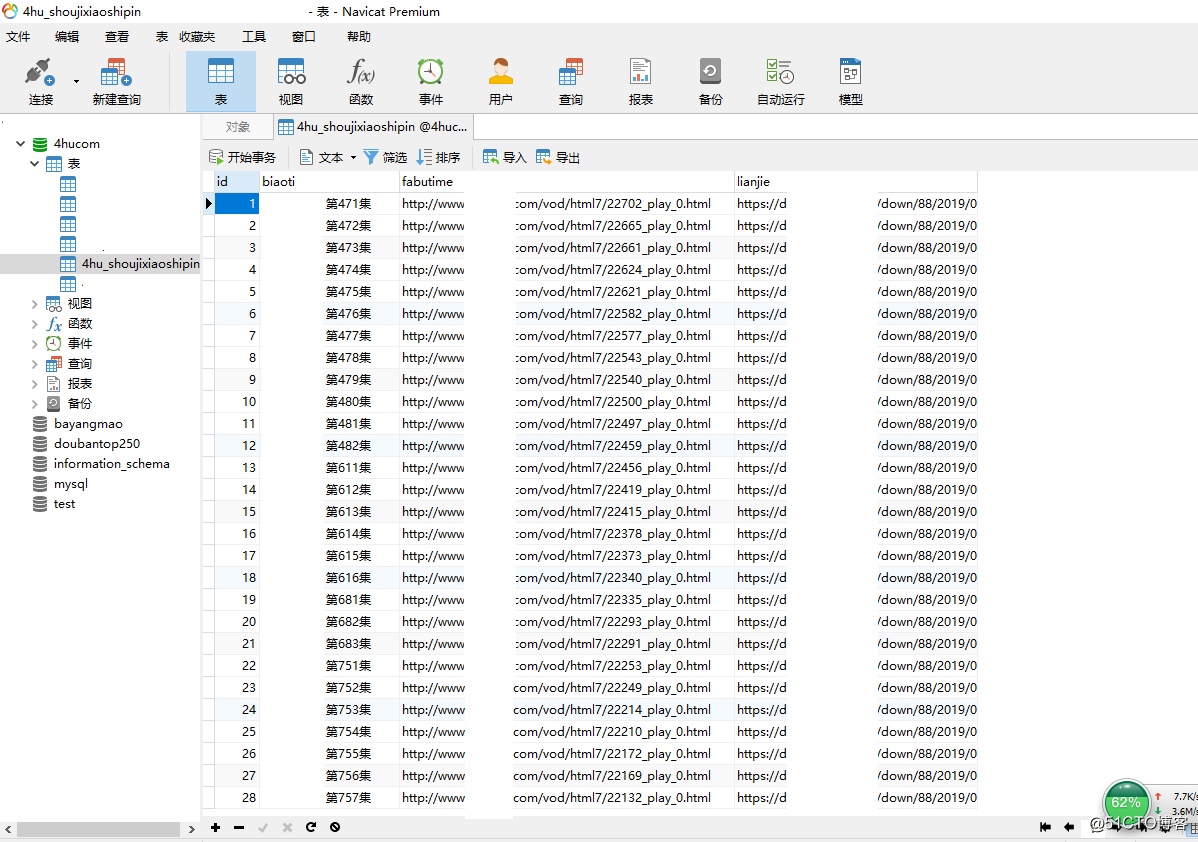

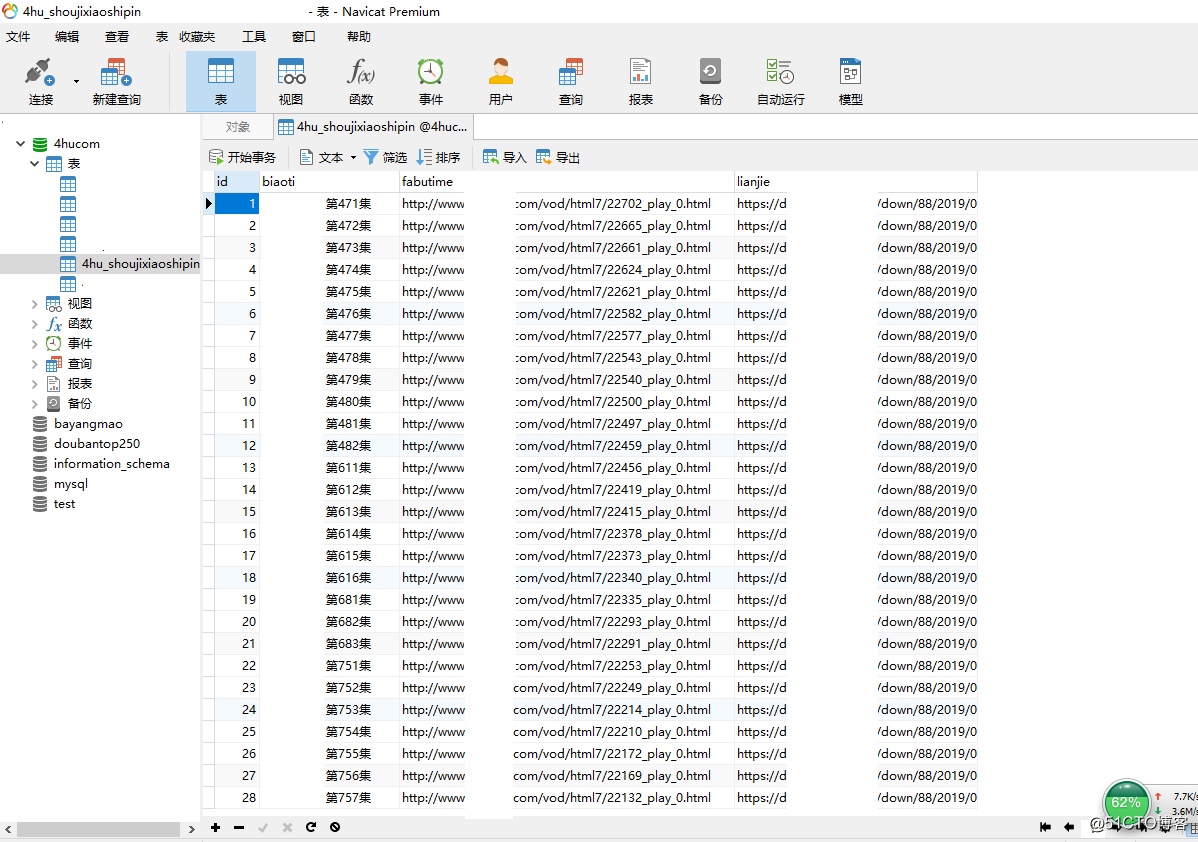

Other: create the MySQL database and table first.

mysql -uroot -pxxh123

create database 4hucom;

use 4hucom;

The id column auto-increments (biaoti = title, fabutime = play address, lianjie = magnet link):

CREATE TABLE `4hu_shoujixiaoshipin` (`id` INT(11) NOT NULL AUTO_INCREMENT, `biaoti` VARCHAR(380), `fabutime` VARCHAR(380), `lianjie` VARCHAR(380), PRIMARY KEY (`id`));

Other note 2: this script was adapted from a scraper I wrote earlier for a rental-listing website, so (1) the function name get_house_info (and the variable house) is carried over from that scraper, and (2) the play address is held in a variable and table column called fabutime, which literally means "publish time"; bofangdizhi ("play address") would have been a better name.

'''
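# Sketch (an assumption, not part of the original workflow): create the table
# from Python instead of typing the DDL into the mysql shell. Using
# CREATE TABLE IF NOT EXISTS makes it safe to call on every start-up.
# CREATE_TABLE_SQL and ensure_table() are hypothetical names; the database
# 4hucom itself must already exist, because the connection selects it.
CREATE_TABLE_SQL = (
    'CREATE TABLE IF NOT EXISTS `4hu_shoujixiaoshipin` ('
    '`id` INT(11) NOT NULL AUTO_INCREMENT, '
    '`biaoti` VARCHAR(380), '
    '`fabutime` VARCHAR(380), '
    '`lianjie` VARCHAR(380), '
    'PRIMARY KEY (`id`))'
)


def ensure_table(db):
    """Run the DDL above on an open pymysql connection."""
    cursor = db.cursor()
    try:
        cursor.execute(CREATE_TABLE_SQL)
    finally:
        cursor.close()  # release the cursor even if the DDL fails
    db.commit()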

def get_links(url):
    """Return the absolute URLs of all video detail pages on one listing page."""
    response = requests.get(url, timeout=10)
    soup = BeautifulSoup(response.text, 'html.parser')
    links_div = soup.find_all('li', class_="col-md-2 col-sm-3 col-xs-4")
    links = ['http://www.网站名马赛克.com' + div.a.get('href') for div in links_div]
    # print(links)
    return links
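# Sketch: resolve relative hrefs with urllib.parse.urljoin instead of
# concatenating a hard-coded site prefix. urljoin handles "/vod/..." and
# "vod/..." style hrefs alike and leaves absolute URLs untouched.
# absolute_links() is a hypothetical helper and the example.com URLs in the
# usage below are placeholders, not the (redacted) real site.
from urllib.parse import urljoin


def absolute_links(page_url, hrefs):
    """Turn each href found on page_url into an absolute URL."""
    return [urljoin(page_url, href) for href in hrefs]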

def get_house_info(item_url):
    """Scrape one video detail page: title, play address and magnet link."""
    response = requests.get(item_url, timeout=10)
    response.encoding = 'utf-8'
    soup = BeautifulSoup(response.text, 'html.parser')
    # The page has two <ul class="playul"> lists: links_div[0] is the play
    # list and links_div[1] is the download list. Follow the download list here.
    links_div = soup.find_all('ul', class_="playul")
    lianjie_temp = 'http://www.网站名马赛克.com' + links_div[1].li.a.get('href')
    lianjie = get_cililianjie(lianjie_temp)  # magnet link from the download page
    print(lianjie)
    links_div2 = soup.find_all('div', class_="detail-title fn-clear")
    biaoti = links_div2[0].text.strip()  # movie title; .strip() removes surrounding whitespace
    # print(biaoti)
    # Video play address, taken from the first playul list found above
    fabutime = 'http://www.网站名马赛克.com' + links_div[0].li.a.get('href')
    # print(fabutime)
    info = {
        '影片名字': biaoti,    # movie title
        '播放地址': fabutime,  # play address
        '下载链接': lianjie,   # download (magnet) link
    }
    return info
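# Sketch of the page structure described in get_house_info(): collect the
# hrefs inside every <ul class="playul">, in document order. This swaps
# BeautifulSoup for the standard-library html.parser so it runs with no
# third-party packages; PlayulParser and playul_hrefs() are hypothetical
# names. lists[0] is the play list and lists[1] the download list, so
# lists[1][0] corresponds to links_div[1].li.a.get('href').
from html.parser import HTMLParser


class PlayulParser(HTMLParser):
    """Collects one list of hrefs per <ul class="playul">."""

    def __init__(self):
        super().__init__()
        self.lists = []   # one list of hrefs per ul.playul
        self._depth = 0   # > 0 while inside a ul.playul (counts nested <ul>s)

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == 'ul':
            classes = (attrs.get('class') or '').split()
            if self._depth:
                self._depth += 1          # nested <ul> inside a playul
            elif 'playul' in classes:
                self._depth = 1
                self.lists.append([])
        elif tag == 'a' and self._depth and attrs.get('href'):
            self.lists[-1].append(attrs['href'])

    def handle_endtag(self, tag):
        if tag == 'ul' and self._depth:
            self._depth -= 1


def playul_hrefs(html):
    parser = PlayulParser()
    parser.feed(html)
    return parser.lists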

def get_cililianjie(url):
    """Return the magnet link (cili lianjie) found on a download page."""
    response = requests.get(url, timeout=10)
    response.encoding = 'utf-8'
    soup = BeautifulSoup(response.text, 'html.parser')
    # print(soup)
    links_div = soup.find_all('div', class_="download")
    # print(links_div)
    lianjie = links_div[0].a.get('href')  # magnet link
    return lianjie
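# Sketch: sanity-check a scraped magnet link before storing it. A BitTorrent
# magnet URI looks like "magnet:?xt=urn:btih:<info-hash>&dn=<name>".
# magnet_info_hash() is a hypothetical helper, not part of the original
# script; it returns the info-hash, or None if the link is not a btih
# magnet link (for example, if the page layout changed).
from urllib.parse import urlsplit, parse_qs


def magnet_info_hash(link):
    parts = urlsplit(link)
    if parts.scheme != 'magnet':
        return None
    for xt in parse_qs(parts.query).get('xt', []):
        if xt.lower().startswith('urn:btih:'):
            return xt[len('urn:btih:'):]
    return None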

def get_db(setting):
    return pymysql.connect(**setting)

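def insert(db, house):
    # Let the driver quote the values (%s placeholders) instead of formatting
    # them into the SQL string, so a title containing a quote cannot break
    # the statement or inject SQL.
    sql = ('INSERT INTO `4hu_shoujixiaoshipin` (biaoti, fabutime, lianjie) '
           'VALUES (%s, %s, %s)')
    cursor = db.cursor()
    cursor.execute(sql, (house['影片名字'], house['播放地址'], house['下载链接']))
    cursor.close()
    db.commit()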
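# Why parameter binding matters for this table (sketch): formatting values
# straight into the SQL with str.format breaks as soon as a movie title
# contains a single quote, and allows SQL injection. This demo uses the
# standard-library sqlite3 as a stand-in for MySQL so it runs without a
# server; sqlite3 uses "?" placeholders, whereas pymysql uses "%s". The
# table name "videos" and the sample row are hypothetical.
import sqlite3


def demo_parameter_binding():
    conn = sqlite3.connect(':memory:')
    conn.execute('CREATE TABLE videos (biaoti TEXT, fabutime TEXT, lianjie TEXT)')
    row = ("Schindler's List",
           'http://www.example.com/play/1.html',
           'magnet:?xt=urn:btih:0123456789abcdef0123456789abcdef01234567')
    # The driver quotes each value, so the apostrophe in the title is safe.
    conn.execute('INSERT INTO videos (biaoti, fabutime, lianjie) VALUES (?, ?, ?)', row)
    conn.commit()
    stored = conn.execute('SELECT biaoti FROM videos').fetchone()[0]
    conn.close()
    return stored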

DATABASE = {
    'host': '127.0.0.1',
    'database': '4hucom',
    'user': 'root',
    'password': 'xxh123',
    # The code originally used utf8mb4, but the Chinese text looked garbled
    # in navicat.exe; after switching to utf8, Navicat shows it correctly.
    'charset': 'utf8',
}

db = get_db(DATABASE)  # connect to the database
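# Sketch of the pagination rule the main loop below relies on: page 1 is
# index.html and page N (N >= 2) is index_N.html. page_url() is a
# hypothetical helper and BASE is a placeholder for the redacted site URL.
BASE = 'https://www.example.com/vod/html7/'


def page_url(page, base=BASE):
    """Return the listing-page URL for a 1-based page number."""
    if page == 1:
        return base + 'index.html'
    return base + 'index_{}.html'.format(page)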

# Loop over all listing pages
for yema in range(1, 44):  # yema = page number
    if yema == 1:
        url = 'https://www.网站名马赛克.com/vod/html7/index.html'
    else:
        url = 'https://www.网站名马赛克.com/vod/html7/index_' + str(yema) + '.html'
    links = get_links(url)
    for item_url in links:
        time.sleep(1.0)  # pause between requests to go easy on the server
        house = get_house_info(item_url)
        print('Fetched successfully: {}'.format(house['影片名字']))
        insert(db, house)  # insert the scraped record into the database

db.close()