Ali Mei's introduction: With today's science and technology, big data can generate enormous economic and social value for most industries, which is why many companies are now investing deeply in it. What technical challenges does a big data analytics scenario have to address? What are the mainstream big data architecture patterns, and how have they evolved? This article goes through them one by one and describes how to combine cloud storage and compute components into a more general big data architecture pattern, along with the typical data processing scenarios that pattern can cover.

Challenges of Data Processing

A growing number of industries and technical fields now need big data analysis systems. The financial sector needs big data systems combined with VaR (value at risk) or machine learning models for credit risk control. Retail and food and beverage businesses need big data systems to support sales decisions. IoT scenarios need big data systems that continuously aggregate and analyze time-series data. The major technology companies need to build big data analysis platforms, and so on.

Viewed abstractly, the analysis systems behind these requirements face roughly the same technical challenges:

- Business analysis spans both real-time and historical data: real-time data must be analyzed with low latency, while historical data must support exploratory analysis at PB scale;

- Reliability and scalability: users may store massive amounts of historical data, and the data volume keeps growing, so a distributed storage system is needed to provide reliability and scalability while keeping costs under control;

- A deep technology stack: streaming components, storage systems, and compute components all have to be combined;

- Demanding operations and maintenance: a complex big data architecture is hard to maintain and control.

Overview of the evolution of big data architectures

Lambda architecture

The Lambda architecture is the most influential big data processing architecture to date. Its core idea is to write immutable data, in append-only fashion, to a batch system and a stream processing system in parallel, implement the same computation logic separately in both systems, and then merge the views computed by the stream and batch systems at query time before presenting them to the user. Nathan Marz, who proposed Lambda, also assumed that batch processing is relatively simple and less error-prone, while stream processing is relatively less reliable. The stream processor can therefore use approximate algorithms to produce a quickly updated, approximate view, while the slower batch system uses exact algorithms to produce a corrected version of the same view.

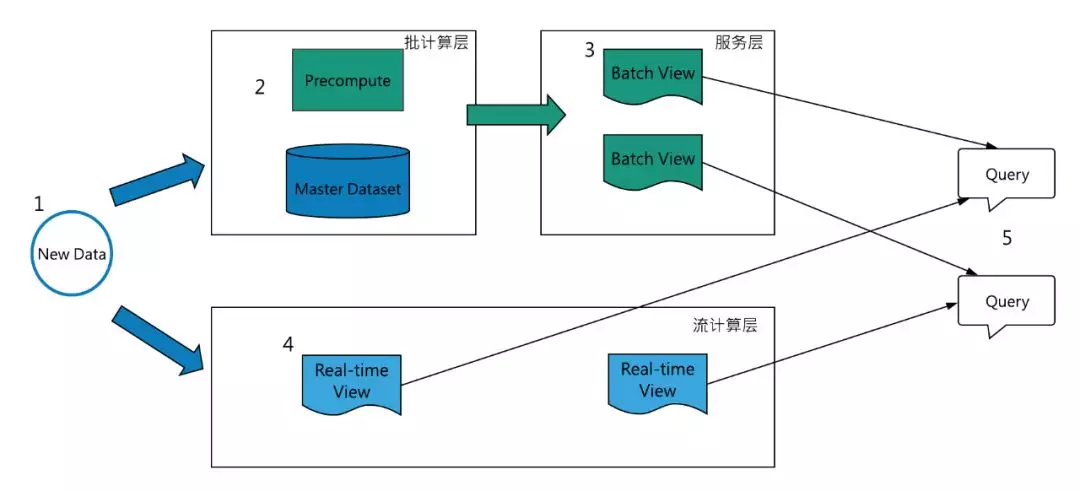

Figure 1. Example of the Lambda architecture

A typical data flow in the Lambda architecture is as follows (http://lambda-architecture.net/):

- All data is written to both the batch layer and the stream processing layer;

- The batch layer has two functions: (i) managing the master dataset (immutable storage to which the full data set is appended), and (ii) precomputing batch views;

- The serving layer indexes the batch views so that they can be queried with low latency in an ad-hoc way;

- The stream computing layer acts as the speed layer: it computes approximate real-time views over real-time data, which serve as fast views that compensate for the high latency of the batch views;

- Every query has to merge the batch view and the real-time view.

The design principle that the Lambda architecture promotes is to derive views from an immutable event stream and to reprocess events when necessary. This principle ensures that, as requirements evolve over time, the system can always create new views to meet changing needs for analyzing historical and real-time data.
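The query-time merge of a batch view and a real-time view can be illustrated with a small in-memory sketch. It is only an illustration under simplifying assumptions: the page-view events, the `SpeedLayer` class, and the `query` function are hypothetical names, and exact counters stand in for both layers (a real speed layer might use an approximate algorithm).

```python
from collections import Counter

# Hypothetical page-view events: (event_id, page). Append-only master dataset.
master_dataset = [(1, "home"), (2, "cart"), (3, "home")]


def compute_batch_view(events):
    """Batch layer: exact recomputation over the full master dataset."""
    return Counter(page for _, page in events)


class SpeedLayer:
    """Speed layer: incremental counts for events newer than the last batch run."""

    def __init__(self):
        self.view = Counter()

    def on_event(self, event):
        _, page = event
        self.view[page] += 1

    def reset(self):
        # Called once a batch run has absorbed the recent events.
        self.view.clear()


def query(page, batch_view, speed_layer):
    """Serving side: merge the batch view with the real-time view."""
    return batch_view[page] + speed_layer.view[page]


batch_view = compute_batch_view(master_dataset)
speed_layer = SpeedLayer()

# New events are written to both layers.
for event in [(4, "home"), (5, "search")]:
    master_dataset.append(event)
    speed_layer.on_event(event)

before = query("home", batch_view, speed_layer)

# The next batch run recomputes from scratch and replaces the real-time view.
batch_view = compute_batch_view(master_dataset)
speed_layer.reset()
after = query("home", batch_view, speed_layer)

print(before, after)  # 3 3
```

The answer is the same before and after the batch run; what changes is which layer supplies it.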

Four challenges of the Lambda architecture

The Lambda architecture is very complex. Data writing, storage, integration with compute components, and the presentation layer each involve sub-problems that need to be optimized:

1. Write layer: Lambda provides no abstraction for writing data. Instead, it pushes the problem of keeping the dual writes to the batch and stream systems consistent back up to the application that writes the data;

2. Storage: a master dataset typified by HDFS does not support data updates. For data sources that are continually updated, the only option is to periodically copy a full snapshot into HDFS, so both data latency and cost are relatively high;

3. Computation: the computation logic has to be implemented and run separately in a stream framework and a batch framework, and developing, debugging, and troubleshooting jobs in both a stream framework such as Storm and a batch framework such as Hadoop MR is complex;

4. Presentation: result views need to support low-latency query and analysis, which usually means also deriving the data into a columnar analysis system, while keeping costs under control.

Lambda architecture with unified stream and batch processing

To address challenge 3, the need to implement and run the computation logic separately in a stream framework and a batch framework, many compute engines, such as Spark and Flink, have started moving toward unified stream and batch processing, which simplifies the computation part of the Lambda architecture. Unifying stream and batch processing usually requires support for:

1. Using the same processing engine both to process real-time events and to replay historical events;

2. Exactly-once semantics, so that results are identical whether or not a failure occurs;

3. Windowing by event time rather than by processing time.
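Requirements 1 and 3 are related: if windows are assigned by event time, the same function gives the same result whether events arrive live (possibly out of order) or are replayed from history. The following is a minimal sketch of that idea, not how Spark or Flink implement it. The 60-second tumbling window and the `(event_time, key)` event shape are assumptions made for illustration.

```python
from collections import defaultdict

WINDOW_SECONDS = 60  # assumed tumbling window size


def window_start(event_time):
    """Map an event timestamp (in seconds) to the start of its tumbling window."""
    return event_time - (event_time % WINDOW_SECONDS)


def count_by_event_time(events):
    """Count events per (window, key); `events` yields (event_time, key) pairs."""
    counts = defaultdict(int)
    for event_time, key in events:
        counts[(window_start(event_time), key)] += 1
    return dict(counts)


# Live arrival order: the event stamped 59 arrives after the one stamped 61.
arrivals = [(61, "a"), (59, "a"), (120, "b"), (65, "a")]
live_result = count_by_event_time(arrivals)

# Replaying the same history in timestamp order gives the same windows.
replay_result = count_by_event_time(sorted(arrivals))

print(live_result == replay_result)  # True
```

With processing-time windows, the late event stamped 59 would have been counted in whichever window was open when it arrived, so a replay could produce different results.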

Kappa architecture

The Kappa architecture was proposed by Jay Kreps. Unlike Lambda, which runs both stream and batch computation and merges their views, Kappa computes and generates views through a single stream computing data path. Kappa relies on the same principle of reprocessing events: for historical data analysis needs, Kappa requires that the long-term stored data can flow back into the stream computing engine as an ordered log, so that views over historical data can be regenerated.

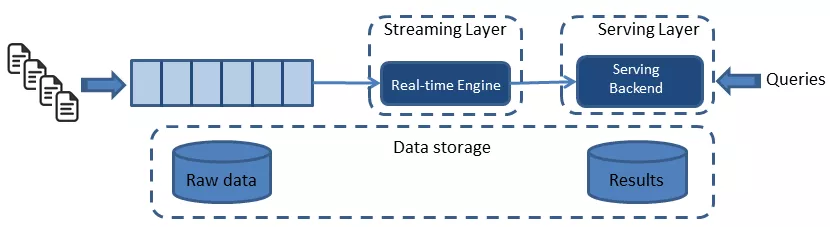

Figure 2. The Kappa big data architecture

By streamlining the data path, the Kappa approach resolves challenge 1 (data writing) and challenge 3 (complex computation logic), but it still leaves the storage and presentation problems unsolved. Storage is the main concern: keeping long-term log data in a message queue such as Kafka means the data cannot be compressed and storage is expensive. A workaround is to use a messaging system that supports tiered storage (for example, Pulsar, which can store message history on a cloud storage system), but historical log data in tiered storage is used only for Kappa backfill jobs, so data utilization remains low.
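Kappa's reprocessing principle can be sketched as replaying an ordered log from the first offset through new logic. The `Log` class below is a hypothetical in-memory stand-in for a Kafka-like topic, and the two view functions are invented for illustration.

```python
class Log:
    """Append-only ordered log; an in-memory stand-in for a Kafka-like topic."""

    def __init__(self):
        self._records = []

    def append(self, record):
        self._records.append(record)
        return len(self._records) - 1  # offset of the new record

    def read_from(self, offset):
        return iter(self._records[offset:])


def total_per_user(records):
    """First version of the view: sum of amounts per user."""
    view = {}
    for user, amount in records:
        view[user] = view.get(user, 0) + amount
    return view


def max_per_user(records):
    """Changed requirement: largest single amount per user."""
    view = {}
    for user, amount in records:
        view[user] = max(view.get(user, amount), amount)
    return view


log = Log()
for record in [("u1", 5), ("u2", 7), ("u1", 3)]:
    log.append(record)

view_v1 = total_per_user(log.read_from(0))
# No batch system is involved: the new view is built by replaying the
# same log from offset 0 through the new stream logic.
view_v2 = max_per_user(log.read_from(0))

print(view_v1, view_v2)
```

The sketch also shows why storage is the concern: regenerating a view requires that every record from offset 0 is still retained in the log.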

Differences between the scenarios that Lambda and Kappa suit:

- Kappa is not a replacement for Lambda but a simplified version of it. Kappa drops support for batch processing and is better suited to analysis needs where the business data is append-only by nature, such as time-series scenarios. These naturally have a notion of time windows, so stream computing alone can satisfy both real-time computation and compensating jobs over historical data;

- Lambda supports batch processing directly, so it is better suited to scenarios with many ad-hoc queries over historical data. For example, data analysts may need to explore historical data by arbitrary combinations of conditions and, with some real-time requirements, expect results as quickly as possible. Batch processing meets these needs more directly and efficiently.

Kappa+

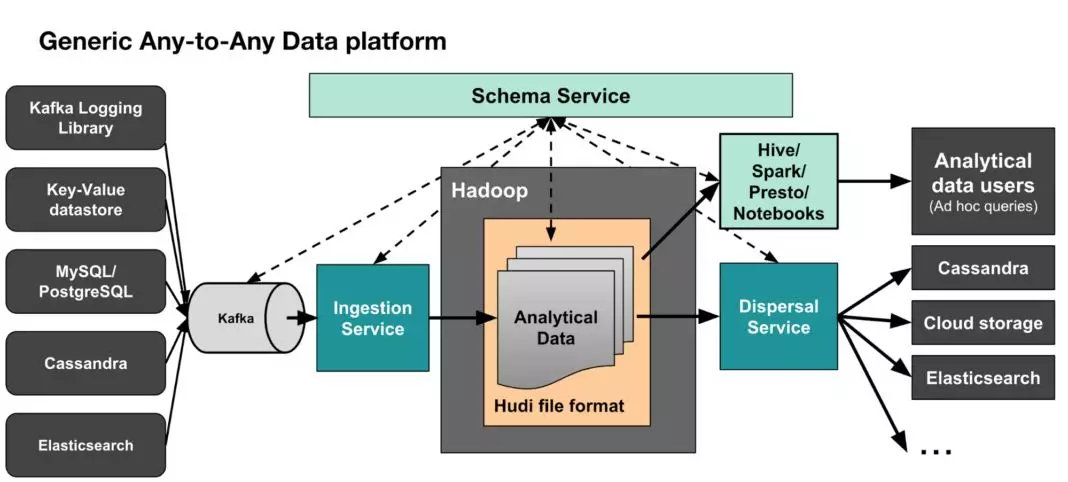

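Kappa+ is a streaming data processing architecture proposed by Uber. Its core idea is to let the stream computing framework read data directly from an HDFS-style data warehouse, handling both real-time computation and backfill computation over historical data, without retaining logs long-term for backfill jobs or copying data back into a message queue. Kappa+ divides data jobs into stateless jobs and time-window jobs. Stateless jobs are relatively simple: they scan the full data set with a degree of concurrency chosen to match the throughput. Time-window jobs rely on the warehouse data being stored in partitions by time granularity. A window job processes one partition at a time in time order, reading the data within a partition concurrently and out of order. Only after all files in a partition have been read do all sources move on to the next partition and update the watermark. In fact, Uber developed the Apache hudi framework to store its warehouse data. hudi supports updating and deleting existing parquet data as well as incrementally consuming the updated portion, which systematically solves challenge 2 (storage). Figure 3 shows Uber's complete big data processing platform, in which the path Hadoop -> Spark -> Analytical data user covers the Kappa+ data processing architecture.

Figure 3. Uber's big data architecture built around Hadoop datasets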
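The time-window backfill described for Kappa+ (partitions in time order, concurrent reads within a partition, watermark advanced only when the partition is complete) can be sketched as follows. This is an illustration under stated assumptions, not Uber's implementation: the `warehouse` layout, the hourly partition keys, and the `read_file` helper are hypothetical, and every file is assumed to be non-empty.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical warehouse layout: hourly partition key -> list of files,
# where each file is a list of (event_time, value) rows.
warehouse = {
    "2019-07-01T01": [[(65, 10)], [(61, 20), (70, 30)]],
    "2019-07-01T00": [[(5, 1), (3, 2)], [(9, 4)]],
}


def read_file(rows):
    """Return (sum of values, latest event time) for one non-empty file."""
    return sum(value for _, value in rows), max(t for t, _ in rows)


def backfill(partitions, workers=4):
    results = {}
    watermarks = []
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # Partitions are consumed one at a time, in time order.
        for key in sorted(partitions):
            # Files inside a partition are read concurrently.
            parts = list(pool.map(read_file, partitions[key]))
            results[key] = sum(total for total, _ in parts)
            # The watermark advances only after the whole partition is read.
            watermarks.append(max(latest for _, latest in parts))
    return results, watermarks


results, watermarks = backfill(warehouse)
print(results, watermarks)
```

Sorting the keys works here because the assumed partition names are ISO-style timestamps, which sort lexicographically in time order.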

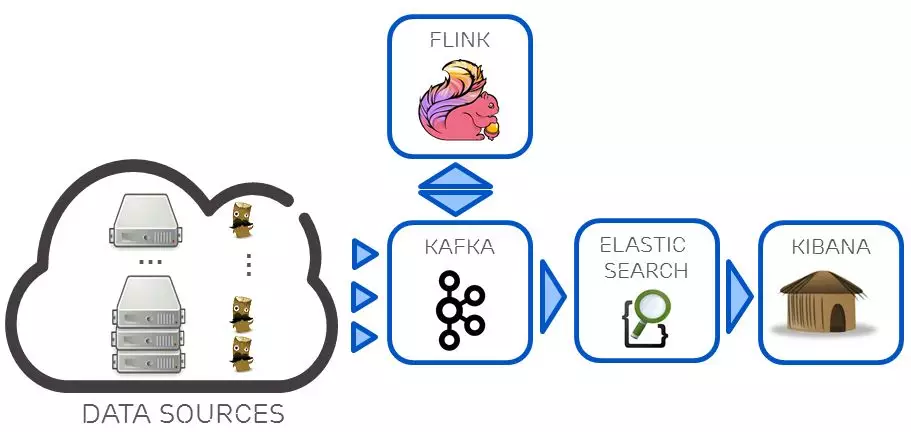

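Kappa architecture in a hybrid analysis system

Both Lambda and Kappa still struggle at the presentation layer: how can result views support ad-hoc query and analysis? One solution is to derive a data analysis flow on top of Kappa, as shown in Figure 4. A Kappa stream computing architecture is built with Kafka + Flink and, to compensate for Kappa's limited analysis capability, Kafka is also connected to the ElasticSearch real-time analysis engine, which partly closes the gap. However, ElasticSearch is only suitable for indexing hot data of a reasonable volume and cannot cover every batch-related analysis need, so this hybrid architecture is in some sense a compromise between Kappa and Lambda.

Figure 4. Hybrid analysis system with Kafka + Flink + ElasticSearch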

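Lambda plus: a unified stream and batch processing framework with Tablestore + Blink

Lambda plus is a reusable, simplified big data architecture pattern on the cloud, built on Tablestore and Blink. The whole solution is serverless and ready to use out of the box: it is easy to set up and needs no operations and maintenance.

Tablestore is a multi-model NoSQL database developed in-house by Alibaba Cloud. It provides PB-scale structured data storage, tens of millions of TPS, and millisecond-level latency. Tablestore provides the Tunnel Service, which lets users consume both the existing data and the real-time data written to Tablestore in order and in a streaming fashion. It also provides the search index feature, which lets users query and analyze result views in real time.

Blink is a real-time computing platform that Alibaba Cloud built by deeply improving on Apache Flink. Blink aims to unify stream and batch processing and implements a brand-new Flink SQL technology stack. In terms of functionality, Blink supports almost all of the syntax and semantics of current standard SQL. In terms of performance, Blink also outperforms community Flink.

In the cloud Lambda architecture built on Tablestore + Blink, users can use Tablestore as both the master dataset and the batch and stream views. The batch engine reads Tablestore directly to produce the batch view, while the stream engine processes real-time data in a streaming fashion through the Tunnel Service and continuously generates the stream view.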

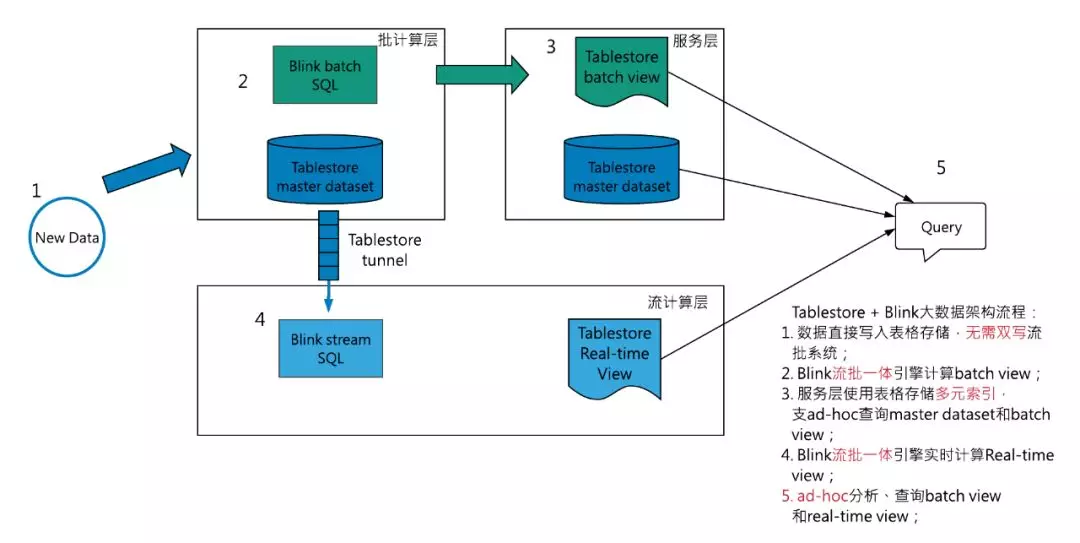

Figure 5. The Lambda plus big data architecture with Tablestore + Blink

As shown in Figure 5, the components break down as follows:

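- Lambda batch layer:

Tablestore serves directly as the master dataset and supports direct reads. Together with the Tablestore search index, users' online services can read and run ad-hoc queries on the master dataset directly and return the results to users. Blink batch jobs push SQL query conditions down to Tablestore, read the Tablestore master dataset directly, compute the batch view, and write the batch view back to Tablestore;

- Streaming layer:

Blink stream jobs read the real-time data in the master dataset directly through the Tablestore Tunnel Service API and continuously produce the stream view. Kappa-style backfill jobs can create a full-data tunnel, consume the existing data in the master dataset in a streaming fashion, and recompute it;

- Serving layer:

Global secondary indexes and search indexes are created on the Tablestore result tables that store the batch view and the stream view, so that the business can query them with low latency in an ad-hoc way.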

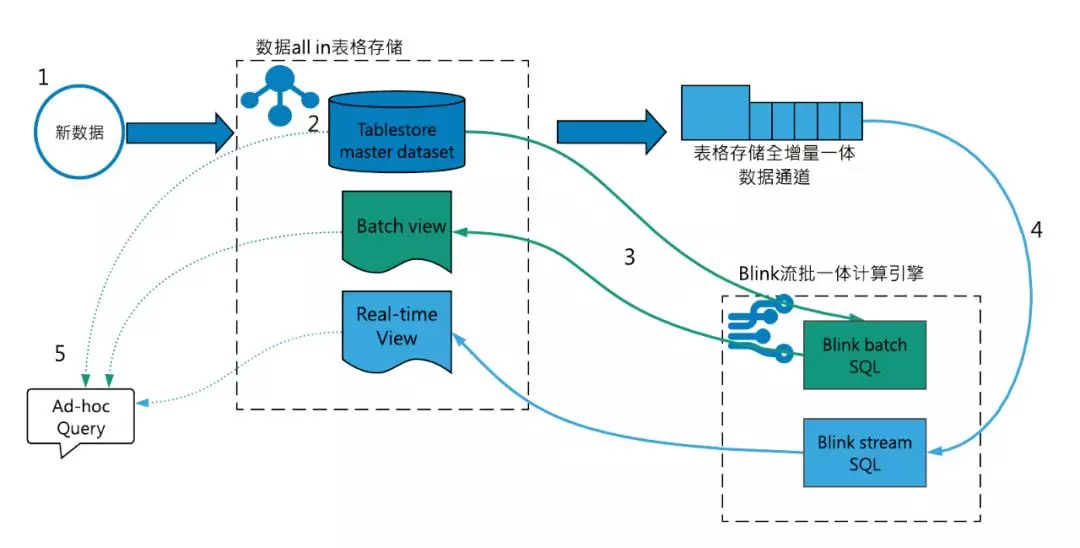

Figure 6. The Lambda plus data path

For the four Lambda architecture challenges described above, Lambda plus takes the following approach:

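- Data writing: with Lambda plus, data only needs to be written to Tablestore. The Blink stream computing framework reads Tablestore's real-time data directly through the Tunnel Service API, so users do not have to dual-write to a queue or implement data synchronization themselves;

- Storage: Lambda plus uses Tablestore directly as the master dataset. Tablestore supports low-latency reads, writes, and updates from users' TP (transaction processing) systems and also provides indexes for ad-hoc query and analysis, so data utilization is high. Capacity-type Tablestore instances can also keep storage costs under control;

- Computation: Lambda plus uses Blink's unified stream and batch compute engine, so the same code serves both stream and batch;

- Presentation layer: Tablestore provides search indexes and global secondary indexes, and users can choose the appropriate index type based on the query requirements and storage volume of the result views.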

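In summary, Tablestore implements the full set of functions: batch view, direct queries on the master dataset, and stream view. Blink unifies stream and batch processing. The Lambda plus pattern of Tablestore plus Blink can markedly reduce the number of components in a Lambda architecture, lower the difficulty of setup and operations, and extend the value of users' data.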
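The single write path at the heart of Lambda plus can be illustrated with a toy in-memory table that exposes both a filtered scan (for batch reads) and an ordered change stream (for stream reads). The `ToyTable` class and its `put`, `scan`, and `consume` methods are hypothetical and are not the Tablestore SDK or the Tunnel Service API. The device readings are invented for illustration.

```python
class ToyTable:
    """In-memory stand-in for a table with an ordered change stream.

    Hypothetical; this is not the Tablestore SDK.
    """

    def __init__(self):
        self.rows = {}     # primary key -> latest row, so updates are supported
        self.changes = []  # ordered change log, playing the role of a tunnel

    def put(self, key, row):
        # One write updates the table and is recorded in the change stream.
        self.rows[key] = row
        self.changes.append((key, row))

    def scan(self, predicate):
        """Batch read, with the filter applied at the store."""
        return [row for row in self.rows.values() if predicate(row)]

    def consume(self, cursor):
        """Stream read: changes recorded after `cursor`, plus the new cursor."""
        return self.changes[cursor:], len(self.changes)


def is_hot(row):
    return row["temp"] > 30


table = ToyTable()
table.put("d1", {"device": "d1", "temp": 20})
table.put("d2", {"device": "d2", "temp": 35})

# Stream job: maintains a running alert count from the change stream.
cursor, alerts = 0, 0
new_changes, cursor = table.consume(cursor)
alerts += sum(1 for _, row in new_changes if is_hot(row))

table.put("d1", {"device": "d1", "temp": 40})  # an update, written only once
new_changes, cursor = table.consume(cursor)
alerts += sum(1 for _, row in new_changes if is_hot(row))

# Batch job: scans the current state of the same table.
hot_now = table.scan(is_hot)

print(alerts, [row["device"] for row in hot_now])
```

The application writes each record once, and both jobs read from the same store, so there is no queue to keep consistent with the master dataset.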

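How Tablestore supports the full set of functions above

High concurrency and low latency in the storage engine: Tablestore provides high-concurrency, low-latency access for online businesses, and TPS scales horizontally by partition. This effectively supports high-throughput data scans for batch processing and Kappa backfill, as well as concurrent real-time processing at partition granularity for stream computing;

A leaner architecture through the Tunnel Service: Tablestore tunnels let users consume both the existing data and the real-time data written to Tablestore in order and in a streaming fashion, which avoids introducing a message queue system into the Lambda architecture along with the data consistency problems between the master dataset and the queue;

Flexible queries through secondary indexes and search indexes: the batch view and real-time view stored in Tablestore can be queried ad hoc through search indexes and secondary indexes, and search indexes can be used for aggregation analysis. The presentation layer can also use secondary indexes and search indexes to query the Tablestore master dataset directly, without depending heavily on results computed by the engine.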

Scenarios suited to Lambda plus

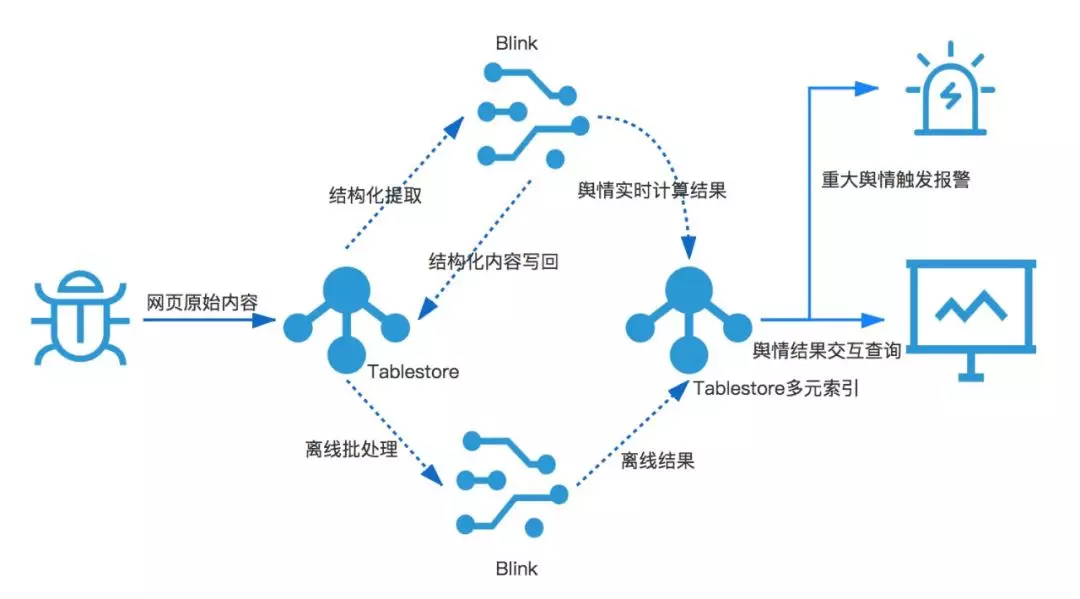

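The Lambda plus architecture based on Tablestore and Blink suits big data analysis scenarios in which data is stored in a distributed NoSQL database, such as IoT, time-series data, crawler data, and user behavior log storage, with data volumes mainly at the TB level. Typical business scenarios include:

Big data public opinion analysis system: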

References

[1] https://yq.aliyun.com/articles/704171?spm=a2c4e.11153959.0.0.229847a2BpWNvc

[2] http://lambda-architecture.net/

[3] http://shop.oreilly.com/product/0636920032175.do, Martin Kleppmann

[4] https://www.oreilly.com/ideas/applying-the-kappa-architecture-in-the-telco-industry

[5] https://www.oreilly.com/ideas/questioning-the-lambda-architecture, Jay Kreps

[6] http://milinda.pathirage.org/kappa-architecture.com/

[7] Moving from Lambda and Kappa Architectures to Kappa+ at Uber

[8] https://eng.uber.com/hoodie/, Prasanna Rajaperumal and Vinoth Chandar

[9] https://eng.uber.com/uber-big-data-platform/, Reza Shiftehfar

Original publication date: 2019-07-1

Author: Ali Technology

This article comes from "Ali Technology", a partner of the Yunqi Community. For related information, follow "Ali Technology".