1. What is feature engineering?

Andrew Ng, a leading figure in machine learning, said: "Coming up with features is difficult, time-consuming, requires expert knowledge. 'Applied machine learning' is basically feature engineering."

Note: A widely circulated saying in the industry is that data and features determine the upper limit of machine learning, while models and algorithms only approach that limit.

Feature engineering is the process of using domain knowledge and skills to process data so that features work better in machine learning algorithms.

Significance: It directly affects the results of machine learning.

2. What to use for feature engineering?

For now, we use sklearn (scikit-learn).

3. Where feature engineering sits relative to data processing

1. pandas

A very convenient tool for reading data and for basic processing and formatting.

2. sklearn

Provides powerful interfaces for feature processing.

3. Feature engineering includes

(1) feature extraction

(2) feature preprocessing

(3) feature dimensionality reduction

4. Feature extraction/feature extraction.

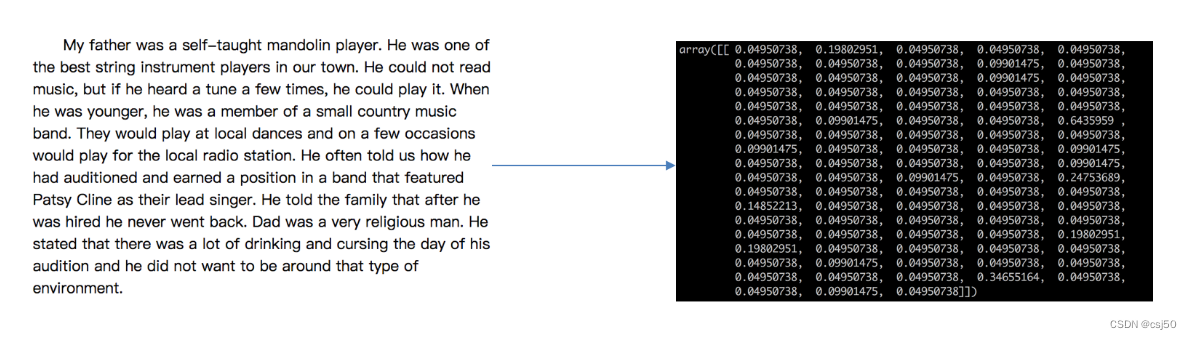

For example, there is an English short article, and the article needs to be classified:

machine learning algorithm--statistical method--mathematical formula. Mathematical

formula cannot handle strings, and the type of text string to be converted into a numerical

problem is: text How to convert type to numeric value?

Classification Ship Position

Question: Convert type to numeric value?

onehot encoding or dummy variable
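To make the idea of one-hot encoding concrete, here is a minimal pure-Python sketch. The `one_hot` helper and the cabin-position values are hypothetical and only illustrate the idea; they are not part of sklearn.

```python
def one_hot(values):
    """Return (categories, rows): one 0/1 column per distinct category."""
    categories = sorted(set(values))
    index = {category: i for i, category in enumerate(categories)}
    rows = []
    for value in values:
        row = [0] * len(categories)
        row[index[value]] = 1  # switch on the column of this sample's category
        rows.append(row)
    return categories, rows


# Hypothetical categorical column
cabins = ["upper", "lower", "middle", "lower"]
categories, encoded = one_hot(cabins)
print(categories)
for row in encoded:
    print(row)
```

Each sample becomes a row with exactly one 1, so no artificial ordering is introduced between the categories.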

1. What is feature extraction?

Convert any data (such as text or image) into digital features that can be used for machine learning.

Note: Converting features into numeric values lets the computer understand the data better.

(1) Dictionary feature extraction (feature discretization)

(2) Text feature extraction

(3) Image feature extraction (deep learning will be introduced)

2. Feature extraction API

sklearn.feature_extraction

5. Dictionary feature extraction

sklearn.feature_extraction.DictVectorizer(sparse=True, ...)

Function: Converts dictionary data into feature values.

Explanation:

vector: the same object is called a vector in both mathematics and physics.

matrix: stored in a computer as a two-dimensional array.

A vector can be stored in a computer as a one-dimensional array.

1. DictVectorizer.fit_transform(X)

X: a dictionary or an iterable of dictionaries

Return value: a sparse matrix

2. DictVectorizer.inverse_transform(X)

X: an array or a sparse matrix

Return value: the data in its pre-conversion format

3. DictVectorizer.get_feature_names()

Return value: the feature (category) names. In scikit-learn 1.0 and later this method is replaced by get_feature_names_out(); get_feature_names() was removed in version 1.2.

4. Example

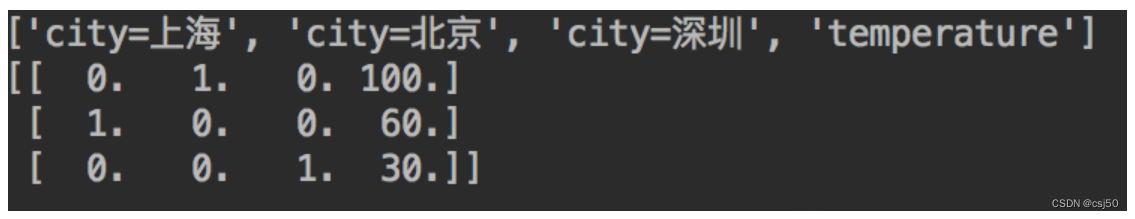

We perform feature extraction on the following data:

[{'city': '北京','temperature':100},
{'city': '上海','temperature':60},
{'city': '深圳','temperature':30}]

In the result, the top row holds the feature names and the three rows below it hold the data.

To explain: with three cities, each sample gets three city columns that start at 0, and the column matching the sample's city (columns are in sorted order) is set to 1. This is somewhat like a binary code.

The original sample is a matrix with three rows and two columns. After dictionary feature extraction it has four columns, because the city category is converted into a one-hot encoding.

5. Modify day01_machine_learning.py

from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from sklearn.feature_extraction import DictVectorizer


def datasets_demo():
    """
    Using sklearn datasets
    """
    # Load the dataset
    iris = load_iris()
    print("Iris dataset:\n", iris)
    print("Dataset description:\n", iris["DESCR"])
    print("Feature names:\n", iris.feature_names)
    print("Shape of the feature data:\n", iris.data.shape)

    # Split the dataset
    x_train, x_test, y_train, y_test = train_test_split(iris.data, iris.target, test_size=0.2, random_state=22)
    print("Training set features:\n", x_train, x_train.shape)

    return None


def dict_demo():
    """
    Dictionary feature extraction
    """
    data = [{'city': '北京','temperature':100},{'city': '上海','temperature':60},{'city': '深圳','temperature':30}]
    # 1. Instantiate a transformer class
    transfer = DictVectorizer()
    # 2. Call fit_transform()
    data_new = transfer.fit_transform(data)
    print("data_new:\n", data_new)

    return None


if __name__ == "__main__":
    # Code 1: using sklearn datasets
    # datasets_demo()
    # Code 2: dictionary feature extraction
    dict_demo()

Operation result:

data_new:
(0, 1) 1.0
(0, 3) 100.0
(1, 0) 1.0
(1, 3) 60.0
(2, 2) 1.0
(2, 3) 30.0

Why does the result look like this? By default the API returns a sparse matrix. After passing sparse=False to this API, the result is:

data_new:
[[ 0. 1. 0. 100.]
[ 1. 0. 0. 60.]
[ 0. 0. 1. 30.]]

How to read a sparse matrix: in some matrices there are far fewer non-zero elements than zero elements. A typical storage method is the triplet method, (row, column, value), which records the position and value of each non-zero element. In plain terms, (0, 1) 1.0 means that the value in row 0, column 1 is 1, which you can find in the dense result above.

The advantage is that it saves memory and improves loading efficiency.
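The relationship between the triplet form and the dense matrix can be checked with a small pure-Python sketch. The triplets are copied from the sparse output above; the shape (3, 4) is taken from the dense output.

```python
# (row, column, value) triplets copied from the sparse output above
triplets = [
    (0, 1, 1.0), (0, 3, 100.0),
    (1, 0, 1.0), (1, 3, 60.0),
    (2, 2, 1.0), (2, 3, 30.0),
]
n_rows, n_cols = 3, 4

# Start from an all-zero matrix and fill in only the stored elements
dense = [[0.0] * n_cols for _ in range(n_rows)]
for row, col, value in triplets:
    dense[row][col] = value

for line in dense:
    print(line)
```

Only 6 of the 12 cells are stored here; with many categories the proportion of zeros grows quickly, which is where the memory saving comes from.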

Next, also print the feature names:

from sklearn.datasets import load_iris

from sklearn.model_selection import train_test_split

from sklearn.feature_extraction import DictVectorizer

def dict_demo():
    """
    Dictionary feature extraction
    """
    data = [{'city': '北京','temperature':100},{'city': '上海','temperature':60},{'city': '深圳','temperature':30}]
    # 1. Instantiate a transformer class
    transfer = DictVectorizer(sparse=False)
    # 2. Call fit_transform()
    data_new = transfer.fit_transform(data)
    print("data_new:\n", data_new)
    # In scikit-learn 1.2+, use get_feature_names_out() instead
    print("Feature names:\n", transfer.get_feature_names())

    return None


if __name__ == "__main__":
    # Code 1: using sklearn datasets
    # datasets_demo()
    # Code 2: dictionary feature extraction
    dict_demo()

Operation result:

data_new:
[[ 0. 1. 0. 100.]
[ 1. 0. 0. 60.]
[ 0. 0. 1. 30.]]
Feature names:
['city=上海', 'city=北京', 'city=深圳', 'temperature']

6. Text feature extraction

1. Function:

Converts text data into feature values.

Words are used as features; the features are called feature words.

2. Method 1: CountVectorizer

sklearn.feature_extraction.text.CountVectorizer(stop_words=[])

return value: return word frequency matrix

3. CountVectorizer.fit_transform(X)

X: text or an iterable object containing a text string

Return value: Returns a sparse matrix

4. CountVectorizer.inverse_transform(X)

X: an array or a sparse matrix

Return value: data format before conversion

5. CountVectorizer.get_feature_names()

return value: word list

6. Example:

Let’s extract features from the following data

["life is short,i like python",

"life is too long,i dislike python"]

Why is there no "i" in the feature list? CountVectorizer's default tokenizer ignores single-character words, because the API designers considered them of little significance for classification or sentiment analysis.
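The missing "i" can be reproduced without sklearn. The sketch below assumes CountVectorizer's documented defaults: lowercasing, and the token pattern `(?u)\b\w\w+\b`, which only matches tokens of two or more word characters. The `tokenize` helper is a hypothetical stand-in, not sklearn code.

```python
import re

# Default token pattern documented for CountVectorizer:
# a token is a run of at least two word characters
TOKEN_PATTERN = re.compile(r"(?u)\b\w\w+\b")


def tokenize(text):
    """Lowercase the text and keep only tokens of two or more characters."""
    return TOKEN_PATTERN.findall(text.lower())


docs = ["life is short,i like like python",
        "life is too long,i dislike python"]
for doc in docs:
    print(tokenize(doc))
```

The comma acts as a separator, and the single-letter "i" never matches the pattern, so it cannot become a feature.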

7. Modify day01_machine_learning.py

from sklearn.datasets import load_iris

from sklearn.model_selection import train_test_split

from sklearn.feature_extraction import DictVectorizer

from sklearn.feature_extraction.text import CountVectorizer

def count_demo():
    """
    Text feature extraction
    """
    data = ["life is short,i like like python", "life is too long,i dislike python"]
    # 1. Instantiate a transformer class
    transfer = CountVectorizer()
    # 2. Call fit_transform()
    data_new = transfer.fit_transform(data)
    print("data_new:\n", data_new.toarray())
    # In scikit-learn 1.2+, use get_feature_names_out() instead
    print("Feature names:\n", transfer.get_feature_names())

    return None


if __name__ == "__main__":
    # Code 1: using sklearn datasets
    # datasets_demo()
    # Code 2: dictionary feature extraction
    # dict_demo()
    # Code 3: text feature extraction
    count_demo()

Operation result:

data_new:
[[0 1 1 2 0 1 1 0]
[1 1 1 0 1 1 0 1]]
Feature names:
['dislike', 'is', 'life', 'like', 'long', 'python', 'short', 'too']

The matrix counts how many times each feature word occurs in each sample.

8. Chinese text feature extraction

Question: What if we replace the data with Chinese text?

Without spaces, each whole sentence is treated as a single word. Inserting spaces by hand makes the splitting work, but single-character Chinese words are still not supported.
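Both effects can be seen with a regular expression alone. This sketch assumes CountVectorizer's documented default token pattern `(?u)\b\w\w+\b`; the example sentences are the ones used in this section, once without and once with manual spaces.

```python
import re

# Default token pattern documented for CountVectorizer
TOKEN_PATTERN = re.compile(r"(?u)\b\w\w+\b")

unsegmented = TOKEN_PATTERN.findall("我爱北京天安门")
segmented = TOKEN_PATTERN.findall("我 爱 北京 天安门")

print(unsegmented)  # the whole sentence is one token
print(segmented)    # single-character words are dropped
```

Because Chinese text has no spaces between words, the pattern sees an unsegmented sentence as one long run of word characters, which is why a word segmenter is needed before vectorizing.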

from sklearn.datasets import load_iris

from sklearn.model_selection import train_test_split

from sklearn.feature_extraction import DictVectorizer

from sklearn.feature_extraction.text import CountVectorizer

def count_chinese_demo():
    """
    Chinese text feature extraction
    """
    data = ["我 爱 北京 天安门", "天安门 上 太阳 升"]
    # 1. Instantiate a transformer class
    transfer = CountVectorizer()
    # 2. Call fit_transform()
    data_new = transfer.fit_transform(data)
    print("data_new:\n", data_new.toarray())
    # In scikit-learn 1.2+, use get_feature_names_out() instead
    print("Feature names:\n", transfer.get_feature_names())

    return None


if __name__ == "__main__":
    # Code 1: using sklearn datasets
    # datasets_demo()
    # Code 2: dictionary feature extraction
    # dict_demo()
    # Code 3: text feature extraction
    # count_demo()
    # Code 4: Chinese text feature extraction
    count_chinese_demo()

Operation result:

data_new:
[[1 1 0]
[0 1 1]]
Feature names:
['北京', '天安门', '太阳']

9. The stop_words parameter of CountVectorizer

stop_words: stop words.

Some words contribute nothing to the final classification and can be filtered out. Ready-made stop word lists can be found online.

10. Convert the following three sentences into feature values

Today is cruel, tomorrow will be even crueler, and the day after tomorrow will be wonderful,

but most of them will die tomorrow night, so don’t give up today.

The light we see from distant galaxies was emitted millions of years ago,

so when we see the universe, we are looking at its past.

You don't really understand something if you only understand it one way.

The secret to understanding what something really means depends on how it relates to what we know.

(1) Prepare sentences, use jieba.cut for word segmentation, and install the library: pip3 install jieba

(2) Instantiate CountVectorizer

(3) Convert the word segmentation result into a string as the input value of fit_transform

from sklearn.datasets import load_iris

from sklearn.model_selection import train_test_split

from sklearn.feature_extraction import DictVectorizer

from sklearn.feature_extraction.text import CountVectorizer

import jieba

def cut_word(text):
    """
    Segment Chinese text into words
    """
    # jieba.cut returns a generator; convert it to a list, then join it into a string
    return " ".join(list(jieba.cut(text)))


def count_chinese_demo2():
    """
    Chinese text feature extraction with automatic word segmentation
    """
    # 1. Segment the Chinese text
    data = ["今天很残酷,明天更残酷,后天很美好,但绝对大部分是死在明天晚上,所以每个人不要放弃今天。",
            "我们看到的从很远星系来的光是在几百万年前之前发出的,这样当我们看到宇宙时,我们是在看它的过去。",
            "如果只用一种方式了解某样事物,你就不会真正了解它。了解事物真正含义的秘密取决于如何将其与我们所了解的事物相联系。"]
    data_new = []
    for sent in data:
        data_new.append(cut_word(sent))
    print(data_new)
    # 2. Instantiate a transformer class
    transfer = CountVectorizer()
    # 3. Call fit_transform()
    data_final = transfer.fit_transform(data_new)
    print("data_final:\n", data_final.toarray())
    # In scikit-learn 1.2+, use get_feature_names_out() instead
    print("Feature names:\n", transfer.get_feature_names())

    return None


if __name__ == "__main__":
    # Code 1: using sklearn datasets
    # datasets_demo()
    # Code 2: dictionary feature extraction
    # dict_demo()
    # Code 3: text feature extraction
    # count_demo()
    # Code 4: Chinese text feature extraction
    # count_chinese_demo()
    # Code 5: Chinese text feature extraction with automatic word segmentation
    count_chinese_demo2()
    # Code 6: test Chinese word segmentation with jieba
    # print(cut_word("我爱北京天安门"))

Operation result:

Building prefix dict from the default dictionary ...
Loading model from cache /tmp/jieba.cache
Loading model cost 1.304 seconds.
Prefix dict has been built successfully.
['今天 很 残酷 , 明天 更 残酷 , 后天 很 美好 , 但 绝对 大部分 是 死 在 明天 晚上 , 所以 每个 人 不要 放弃 今天 。', '我们 看到 的 从 很 远 星系 来 的 光是在 几百万年 前 之前 发出 的 , 这样 当 我们 看到 宇宙 时 , 我们 是 在 看 它 的 过去 。', '如果 只用 一种 方式 了解 某样 事物 , 你 就 不会 真正 了解 它 。 了解 事物 真正 含义 的 秘密 取决于 如何 将 其 与 我们 所 了解 的 事物 相 联系 。']
data_final:
[[0 0 1 0 0 0 2 0 0 0 0 0 1 0 1 0 0 0 0 1 1 0 2 0 1 0 2 1 0 0 0 1 1 0 0 0]
[0 0 0 1 0 0 0 1 1 1 0 0 0 0 0 0 0 1 3 0 0 0 0 1 0 0 0 0 2 0 0 0 0 0 1 1]
[1 1 0 0 4 3 0 0 0 0 1 1 0 1 0 1 1 0 1 0 0 1 0 0 0 1 0 0 0 2 1 0 0 1 0 0]]
Feature names:
['一种', '不会', '不要', '之前', '了解', '事物', '今天', '光是在', '几百万年', '发出', '取决于', '只用', '后天', '含义', '大部分', '如何', '如果', '宇宙', '我们', '所以', '放弃', '方式', '明天', '星系', '晚上', '某样', '残酷', '每个', '看到', '真正', '秘密', '绝对', '美好', '联系', '过去', '这样']

This method is based on the number of times a word appears in an article. However, some words such as "we" and "you" appear many times in all articles. What we prefer are words that appear many times in articles of one category but rarely in articles of other categories. Such words are more useful for classification.

To find such keywords, we introduce TfidfVectorizer.

11. Method 2: TfidfVectorizer

sklearn.feature_extraction.text.TfidfVectorizer

12. Tf-idf text feature extraction

(1) The main idea of TF-IDF is: if a word or phrase appears with high frequency in one article and rarely appears in other articles, then it is considered to have good category discrimination ability and is suitable for classification.

(2) TF-IDF function: used to evaluate the importance of a word to a document set or to one of the documents in a corpus.

13. Tf-idf formula

(1) Term frequency (tf) is the frequency with which a given word appears in the document.

(2) Inverse document frequency (idf) is a measure of the general importance of a word. The idf of a specific word is obtained by dividing the total number of documents by the number of documents containing the word, and then taking the base-10 logarithm of the quotient.

(3) The final result, tf-idf = tf * idf, can be understood as the degree of importance.

Note: If a document contains 100 words in total and the word "very" appears 5 times, then the term frequency of "very" in that document is 5/100 = 0.05. The inverse document frequency (idf) is calculated by dividing the total number of documents in the collection by the number of documents in which "very" appears. So if "very" appears in 10,000 documents and the total number of documents is 10,000,000, the inverse document frequency is lg(10,000,000 / 10,000) = 3. Finally, the tf-idf score of "very" for this document is 0.05 * 3 = 0.15.

14. Tf-idf indicates the degree of importance

Two words: "economy" and "very"

Corpus: 1000 articles

"very" appears in 100 articles

"economy" appears in 10 articles

Two articles:

Article A (100 words): "economy" appears 10 times

tf: 10 / 100 = 0.1

idf: log10(1000 / 10) = 2

tf-idf: 0.1 * 2 = 0.2

Article B (100 words): "very" appears 10 times

tf: 10 / 100 = 0.1

idf: log10(1000 / 100) = 1

tf-idf: 0.1 * 1 = 0.1

A reminder on logarithms:

2 ^ 3 = 8

log2(8) = 3

log10(10) = 1
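The arithmetic for the two articles can be checked with a few lines of Python. This is a sketch of the textbook formula used in this section (base-10 logarithm, no smoothing); the `tf_idf` helper and its parameter names are hypothetical.

```python
import math


def tf_idf(term_count, doc_length, n_docs, n_docs_with_term):
    """Textbook tf-idf: term frequency times log10 of the inverse document frequency."""
    tf = term_count / doc_length
    idf = math.log10(n_docs / n_docs_with_term)
    return tf * idf


# Article A: "economy" appears 10 times in 100 words, and in 10 of 1000 articles
economy_a = tf_idf(10, 100, 1000, 10)
# Article B: "very" appears 10 times in 100 words, and in 100 of 1000 articles
very_b = tf_idf(10, 100, 1000, 100)

print(round(economy_a, 4), round(very_b, 4))
print(math.log2(8), math.log10(10))
```

Both words have the same term frequency, but "economy" scores twice as high because it appears in far fewer articles.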

15. API

sklearn.feature_extraction.text.TfidfVectorizer(stop_words=None,...)

Return value: Returns the weight matrix of the words

16. TfidfVectorizer.fit_transform(X)

X: Text or iterable object containing text string

Return value: Returns sparse matrix

17. TfidfVectorizer.inverse_transform(X)

X: an array or a sparse matrix

Return value: data format before conversion

18. TfidfVectorizer.get_feature_names()

return value: word list

19. Use tfidf for feature extraction

from sklearn.datasets import load_iris

from sklearn.model_selection import train_test_split

from sklearn.feature_extraction import DictVectorizer

from sklearn.feature_extraction.text import CountVectorizer, TfidfVectorizer

import jieba

def tfidf_demo():
    """
    Text feature extraction using tf-idf
    """
    # 1. Segment the Chinese text
    data = ["今天很残酷,明天更残酷,后天很美好,但绝对大部分是死在明天晚上,所以每个人不要放弃今天。",
            "我们看到的从很远星系来的光是在几百万年前之前发出的,这样当我们看到宇宙时,我们是在看它的过去。",
            "如果只用一种方式了解某样事物,你就不会真正了解它。了解事物真正含义的秘密取决于如何将其与我们所了解的事物相联系。"]
    data_new = []
    for sent in data:
        data_new.append(cut_word(sent))
    print(data_new)
    # 2. Instantiate a transformer class
    transfer = TfidfVectorizer()
    # 3. Call fit_transform()
    data_final = transfer.fit_transform(data_new)
    print("data_final:\n", data_final.toarray())
    # In scikit-learn 1.2+, use get_feature_names_out() instead
    print("Feature names:\n", transfer.get_feature_names())

    return None


if __name__ == "__main__":
    # Code 1: using sklearn datasets
    # datasets_demo()
    # Code 2: dictionary feature extraction
    # dict_demo()
    # Code 3: text feature extraction
    # count_demo()
    # Code 4: Chinese text feature extraction
    # count_chinese_demo()
    # Code 5: Chinese text feature extraction with automatic word segmentation
    # count_chinese_demo2()
    # Code 6: test Chinese word segmentation with jieba
    # print(cut_word("我爱北京天安门"))
    # Code 7: text feature extraction using tf-idf
    tfidf_demo()

Operation result:

Building prefix dict from the default dictionary ...
Loading model from cache /tmp/jieba.cache
Loading model cost 1.415 seconds.
Prefix dict has been built successfully.
['今天 很 残酷 , 明天 更 残酷 , 后天 很 美好 , 但 绝对 大部分 是 死 在 明天 晚上 , 所以 每个 人 不要 放弃 今天 。', '我们 看到 的 从 很 远 星系 来 的 光是在 几百万年 前 之前 发出 的 , 这样 当 我们 看到 宇宙 时 , 我们 是 在 看 它 的 过去 。', '如果 只用 一种 方式 了解 某样 事物 , 你 就 不会 真正 了解 它 。 了解 事物 真正 含义 的 秘密 取决于 如何 将 其 与 我们 所 了解 的 事物 相 联系 。']
data_final:
[[0. 0. 0.21821789 0. 0. 0.
 0.43643578 0. 0. 0. 0. 0.
 0.21821789 0. 0.21821789 0. 0. 0.
 0. 0.21821789 0.21821789 0. 0.43643578 0.
 0.21821789 0. 0.43643578 0.21821789 0. 0.
 0. 0.21821789 0.21821789 0. 0. 0. ]
[0. 0. 0. 0.2410822 0. 0.
 0. 0.2410822 0.2410822 0.2410822 0. 0.
 0. 0. 0. 0. 0. 0.2410822
 0.55004769 0. 0. 0. 0. 0.2410822
 0. 0. 0. 0. 0.48216441 0.
 0. 0. 0. 0. 0.2410822 0.2410822 ]
[0.15698297 0.15698297 0. 0. 0.62793188 0.47094891
 0. 0. 0. 0. 0.15698297 0.15698297
 0. 0.15698297 0. 0.15698297 0.15698297 0.
 0.1193896 0. 0. 0.15698297 0. 0.
 0. 0.15698297 0. 0. 0. 0.31396594
 0.15698297 0. 0. 0.15698297 0. 0. ]]
Feature names:
['一种', '不会', '不要', '之前', '了解', '事物', '今天', '光是在', '几百万年', '发出', '取决于', '只用', '后天', '含义', '大部分', '如何', '如果', '宇宙', '我们', '所以', '放弃', '方式', '明天', '星系', '晚上', '某样', '残酷', '每个', '看到', '真正', '秘密', '绝对', '美好', '联系', '过去', '这样']

20. The importance of Tf-idf

Tf-idf is used in the early data-processing stage when classification machine learning algorithms are applied to article classification.
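The weights printed by TfidfVectorizer in section 19 do not follow the base-10 formula of section 13 exactly. With its default settings, scikit-learn uses a smoothed natural-log idf, idf = ln((1 + n) / (1 + df)) + 1, multiplies it by the raw term count, and then scales each row to unit Euclidean length. The pure-Python sketch below applies that recipe to the count matrix from section 10; the variable and function names are illustrative only.

```python
import math

# Count matrix copied from the CountVectorizer output in section 10
counts = [
    [0, 0, 1, 0, 0, 0, 2, 0, 0, 0, 0, 0, 1, 0, 1, 0, 0, 0,
     0, 1, 1, 0, 2, 0, 1, 0, 2, 1, 0, 0, 0, 1, 1, 0, 0, 0],
    [0, 0, 0, 1, 0, 0, 0, 1, 1, 1, 0, 0, 0, 0, 0, 0, 0, 1,
     3, 0, 0, 0, 0, 1, 0, 0, 0, 0, 2, 0, 0, 0, 0, 0, 1, 1],
    [1, 1, 0, 0, 4, 3, 0, 0, 0, 0, 1, 1, 0, 1, 0, 1, 1, 0,
     1, 0, 0, 1, 0, 0, 0, 1, 0, 0, 0, 2, 1, 0, 0, 1, 0, 0],
]
n_docs = len(counts)
n_terms = len(counts[0])

# Document frequency: in how many sentences each term occurs
df = [sum(1 for row in counts if row[j] > 0) for j in range(n_terms)]
# Smoothed idf with the natural logarithm (scikit-learn's default)
idf = [math.log((1 + n_docs) / (1 + d)) + 1 for d in df]


def tfidf_row(row):
    """Weight raw counts by idf, then scale the row to unit L2 norm."""
    weighted = [count * weight for count, weight in zip(row, idf)]
    norm = math.sqrt(sum(value * value for value in weighted))
    return [value / norm for value in weighted]


tfidf = [tfidf_row(row) for row in counts]
print(round(tfidf[0][6], 8))   # '今天' in the first sentence
print(round(tfidf[2][18], 7))  # '我们' in the third sentence
```

The word '我们' occurs in two of the three sentences, so its idf is lower and its weight in the third sentence is smaller than that of words unique to that sentence.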