1. A first look at context switching

We first need to understand what context switching is.

In fact, even in the single-processor era, operating systems could handle multi-threaded concurrent tasks. The operating system allocates a CPU time slice to each thread, and the thread executes its task within the allocated time slice.

A CPU time slice is the period of CPU time allotted to a thread for execution, generally tens of milliseconds. Threads switch so quickly that we cannot perceive it, so they appear to run at the same time.

The time slice determines how long a thread can occupy the processor continuously. When a thread's time slice runs out, or the thread is forced to pause for reasons of its own, the operating system selects a thread to occupy the processor next (it may belong to the same process or to another process). This process, in which one thread is suspended and loses the processor while another thread is selected to start or continue running, is called a context switch.

Specifically, when a thread loses the processor and is suspended, it is "switched out"; when a thread is selected to occupy the processor and start or continue running, it is "switched in". During this switching in and out, the operating system needs to save and restore the corresponding progress information. This progress information is the "context".

So what does the context include? Specifically, it includes the contents of the CPU registers and the contents of the program counter. The registers hold the data that the running task is currently working with, and the program counter holds the location of the instruction being executed by the CPU and the location of the next instruction to be executed.
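To make "saving and restoring the context" more concrete, here is a deliberately simplified toy model. It is not how a real operating system or the JVM stores thread state; the names `ToyContextSwitch`, `ToyContext`, and the single `accumulator` "register" are assumptions made purely for illustration.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;

/** Toy model of context switching; every name here is hypothetical. */
final class ToyContextSwitch {

    /** The saved context of a task: its program counter and one register value. */
    static final class ToyContext {
        final String name;
        final int totalSteps;
        int programCounter; // index of the next step to execute
        long accumulator;   // stands in for the register contents

        ToyContext(String name, int totalSteps) {
            this.name = name;
            this.totalSteps = totalSteps;
        }

        boolean finished() {
            return programCounter >= totalSteps;
        }
    }

    /** Runs tasks round-robin; each task gets at most timeSlice steps per turn. */
    static List<String> run(List<ToyContext> tasks, int timeSlice) {
        List<String> trace = new ArrayList<>();
        Deque<ToyContext> readyQueue = new ArrayDeque<>(tasks);
        while (!readyQueue.isEmpty()) {
            ToyContext current = readyQueue.poll();
            // Switch in: restore the saved context into the "CPU".
            int pc = current.programCounter;
            long acc = current.accumulator;
            for (int used = 0; used < timeSlice && pc < current.totalSteps; used++) {
                acc += pc; // "execute" one step
                pc++;
            }
            // Switch out: save the context so the task can resume later.
            current.programCounter = pc;
            current.accumulator = acc;
            trace.add(current.name + "@" + pc);
            if (!current.finished()) {
                readyQueue.add(current); // back of the ready queue
            }
        }
        return trace;
    }

    public static void main(String[] args) {
        List<ToyContext> tasks = List.of(new ToyContext("A", 5), new ToyContext("B", 3));
        System.out.println(run(tasks, 2)); // prints [A@2, B@2, A@4, B@3, A@5]
    }
}
```

Each entry in the trace marks one switch-out, together with the program counter value that was saved at that moment.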

Today, when machines usually have far more than one CPU, the operating system allocates CPUs to thread tasks in turn. Context switching then becomes more frequent, and cross-CPU context switching appears as well. Compared with switching on a single core, cross-core switching is more expensive.

2. Causes of multi-threaded context switching

In the operating system, context switching can also be divided into context switching between processes and context switching between threads. In multi-threaded programming, what we mainly face is the performance problem caused by context switching between threads. Let's focus on what causes multi-threaded context switching. Before we begin, let's take a look at the life-cycle states of Java threads.

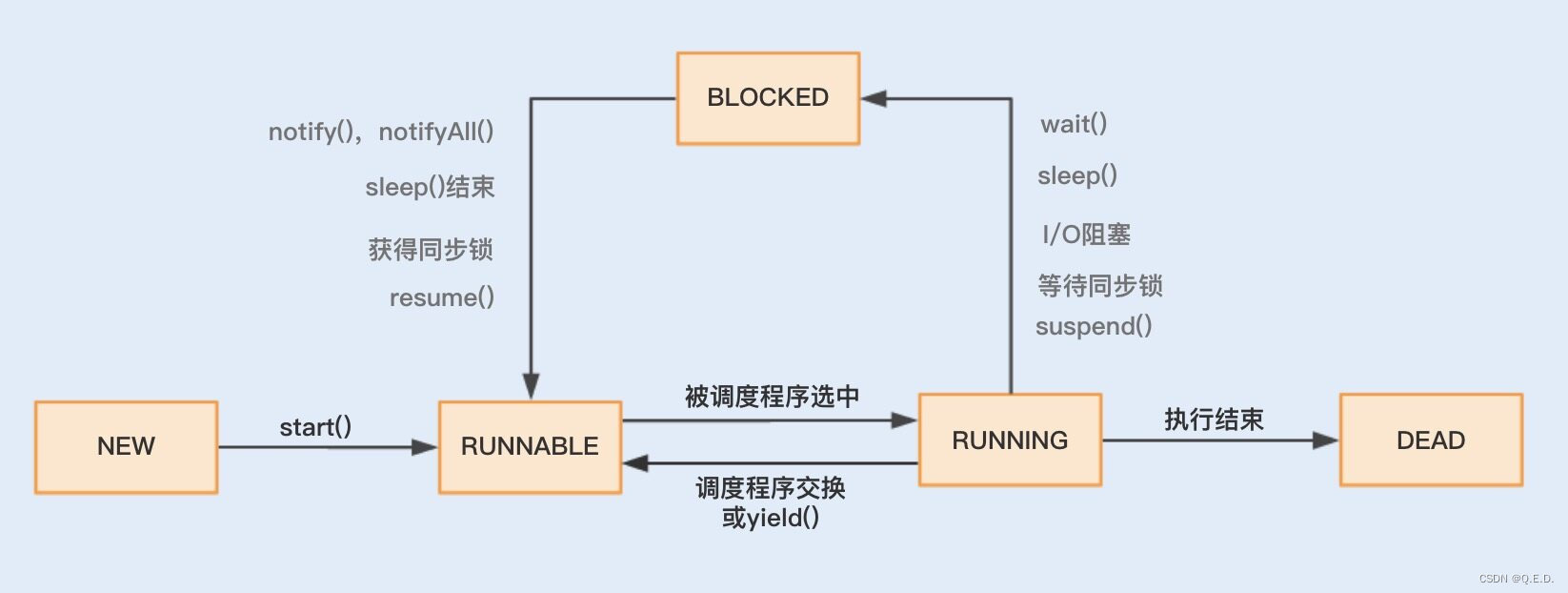

From the diagram, we can see that a thread mainly has five states: NEW, RUNNABLE (ready), RUNNING, BLOCKED, and DEAD.

While threads are running, a thread's transition from RUNNABLE to a non-RUNNABLE state is a thread context switch.

The state of a thread changes from RUNNING to BLOCKED, then from BLOCKED to RUNNABLE, and then is selected for execution by the scheduler. This is a context switching process.

When a thread changes from the RUNNING state to the BLOCKED state, we call it the suspension of the thread. After the suspended thread is switched out, the operating system saves its context so that the thread can continue from its previous progress when it enters the RUNNABLE state again later.

When a thread enters the RUNNABLE state from the BLOCKED state, we call it the wake-up of the thread. At this point, the thread obtains its last saved context and resumes execution.

From the running states of threads and the transitions between them, we can see that multi-threaded context switching is actually caused by threads moving between the RUNNING and BLOCKED states.

So what causes a running thread to change from RUNNING to BLOCKED, or from BLOCKED to RUNNABLE?
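The five states in the diagram are a conceptual model. The JDK's own `Thread.State` enum defines six values (NEW, RUNNABLE, BLOCKED, WAITING, TIMED_WAITING, TERMINATED) and does not distinguish "ready" from "running". As a minimal sketch, the following program observes a worker thread that is switched out while it waits for a lock held by the main thread; the class name `ThreadStateDemo` is an assumption made for illustration.

```java
import java.util.ArrayList;
import java.util.List;

/** Observes Thread.State while a worker waits for a monitor held by the caller. */
final class ThreadStateDemo {

    static List<Thread.State> observeStates() {
        final Object lock = new Object();
        List<Thread.State> seen = new ArrayList<>();
        Thread worker = new Thread(() -> {
            synchronized (lock) {
                // Nothing to do; entering the monitor is the point of the demo.
            }
        });
        seen.add(worker.getState()); // NEW: created but not started
        try {
            synchronized (lock) {
                worker.start();
                // Poll until the worker is stuck on the monitor we are holding.
                while (worker.getState() != Thread.State.BLOCKED) {
                    Thread.sleep(1);
                }
                seen.add(worker.getState()); // BLOCKED: suspended, waiting for the lock
            }
            worker.join(); // lock released: the worker is woken up, runs, and exits
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while observing the worker", e);
        }
        seen.add(worker.getState()); // TERMINATED
        return seen;
    }

    public static void main(String[] args) {
        System.out.println(observeStates()); // prints [NEW, BLOCKED, TERMINATED]
    }
}
```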

We can analyze this in two cases. One is a switch triggered by the program itself, which we call a spontaneous context switch; the other is a non-spontaneous context switch induced by the system or the virtual machine.

Spontaneous context switching means that a thread is switched out because of a call made by the Java program itself. In multi-threaded programming, calling the following methods or using the following keywords often triggers spontaneous context switching.

- sleep()

- wait()

- yield()

- join()

- park()

- synchronized

- lock
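As a small illustration of two entries in the list above, the sketch below starts a thread that calls `sleep()` and another that calls `LockSupport.park()`, then reports the `Thread.State` each one is found in once it has given up the CPU. The class and method names (`VoluntarySwitchDemo`, `sleeper`, `parker`, `stateWhileIn`) are hypothetical.

```java
import java.util.concurrent.locks.LockSupport;

/** Shows which Thread.State a thread reports after it gives up the CPU voluntarily. */
final class VoluntarySwitchDemo {

    /** Task that sleeps until it is interrupted (or for 10 seconds at most). */
    static Runnable sleeper() {
        return () -> {
            try {
                Thread.sleep(10_000);
            } catch (InterruptedException e) {
                // Woken early by the demo; nothing else to do.
            }
        };
    }

    /** Task that parks until interrupted; the loop guards against spurious returns. */
    static Runnable parker() {
        return () -> {
            while (!Thread.currentThread().isInterrupted()) {
                LockSupport.park();
            }
        };
    }

    /** Runs the task in a new thread and returns the first waiting state it is seen in. */
    static Thread.State stateWhileIn(Runnable task) {
        Thread t = new Thread(task);
        t.start();
        Thread.State state = t.getState();
        try {
            while (state != Thread.State.WAITING
                    && state != Thread.State.TIMED_WAITING
                    && state != Thread.State.TERMINATED) {
                Thread.sleep(1);
                state = t.getState();
            }
            t.interrupt(); // wake the thread so that it can finish
            t.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return state;
    }

    public static void main(String[] args) {
        System.out.println("sleep() -> " + stateWhileIn(sleeper())); // TIMED_WAITING
        System.out.println("park()  -> " + stateWhileIn(parker()));  // WAITING
    }
}
```

In both cases the thread leaves the processor because of a call it made itself, which is what makes the switch spontaneous.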

Non-spontaneous context switching means that a thread is forced out by the scheduler. Common causes include the thread using up its allocated time slice, virtual machine garbage collection, and execution priority.

The point worth dwelling on is why virtual machine garbage collection causes context switching. In the Java virtual machine, memory for objects is allocated from the heap. While the program runs, new objects are created continuously; if old objects were not reclaimed after use, the heap would quickly be exhausted. The Java virtual machine therefore provides a garbage collection mechanism that reclaims objects that are no longer used, so that heap memory can keep being allocated. Garbage collection may trigger a stop-the-world event, which is in effect a suspension of the application's threads.
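One rough way to see that garbage collection is happening while a program runs is to read the JVM's standard `GarbageCollectorMXBean` counters before and after a burst of allocation. This is only a sketch: the class name `GcActivityProbe` is an assumption, the reported collection time is not necessarily equal to stop-the-world pause time (especially with concurrent collectors), and the JIT compiler may optimize some of the allocations away.

```java
import java.lang.management.GarbageCollectorMXBean;
import java.lang.management.ManagementFactory;

/** Reads cumulative GC counters exposed by the JVM's management API. */
final class GcActivityProbe {

    /** Total collections so far; collectors that report -1 (undefined) are skipped. */
    static long totalCollections() {
        long total = 0;
        for (GarbageCollectorMXBean gc : ManagementFactory.getGarbageCollectorMXBeans()) {
            long count = gc.getCollectionCount();
            if (count > 0) {
                total += count;
            }
        }
        return total;
    }

    /** Approximate accumulated collection time in milliseconds, same rule as above. */
    static long totalCollectionMillis() {
        long total = 0;
        for (GarbageCollectorMXBean gc : ManagementFactory.getGarbageCollectorMXBeans()) {
            long millis = gc.getCollectionTime();
            if (millis > 0) {
                total += millis;
            }
        }
        return total;
    }

    public static void main(String[] args) {
        long countBefore = totalCollections();
        long millisBefore = totalCollectionMillis();
        long allocated = 0;
        for (int i = 0; i < 200_000; i++) {
            byte[] garbage = new byte[1024]; // short-lived object, garbage right away
            allocated += garbage.length;
        }
        System.out.println("bytes allocated: " + allocated);
        System.out.println("collections during loop: " + (totalCollections() - countBefore));
        System.out.println("collection time (ms): " + (totalCollectionMillis() - millisBefore));
    }
}
```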

3. Detecting context switching

We always say that context switching will bring system overhead, but are the performance problems it brings really that bad? How do we detect context switches? What exactly is the cost of context switching? Next, I will give a piece of code to compare the speed of serial execution and concurrent execution, and then answer these questions one by one.

public class DemoApplication {

public static void main(String[] args) {

// Run the multi-threaded test

MultiThreadTester test1 = new MultiThreadTester();

test1.Start();

// Run the single-threaded test

SerialTester test2 = new SerialTester();

test2.Start();

}

static class MultiThreadTester extends ThreadContextSwitchTester {

@Override

public void Start() {

long start = System.currentTimeMillis();

MyRunnable myRunnable1 = new MyRunnable();

Thread[] threads = new Thread[4];

// Create multiple threads

for (int i = 0; i < 4; i++) {

threads[i] = new Thread(myRunnable1);

threads[i].start();

}

for (int i = 0; i < 4; i++) {

try {

// Wait for all threads to finish

threads[i].join();

} catch (InterruptedException e) {

// TODO Auto-generated catch block

e.printStackTrace();

}

}

long end = System.currentTimeMillis();

System.out.println("multi thread exec time: " + (end - start) + "ms");

System.out.println("counter: " + counter);

}

// A class that implements Runnable

class MyRunnable implements Runnable {

public void run() {

while (counter < 100000000) {

synchronized (this) {

if(counter < 100000000) {

increaseCounter();

}

}

}

}

}

}

// Single-threaded (serial) test

static class SerialTester extends ThreadContextSwitchTester{

@Override

public void Start() {

long start = System.currentTimeMillis();

for (long i = 0; i < count; i++) {

increaseCounter();

}

long end = System.currentTimeMillis();

System.out.println("serial exec time: " + (end - start) + "ms");

System.out.println("counter: " + counter);

}

}

// Parent class

static abstract class ThreadContextSwitchTester {

public static final int count = 100000000;

public volatile int counter = 0;

public int getCount() {

return this.counter;

}

public void increaseCounter() {

this.counter += 1;

}

public abstract void Start();

}

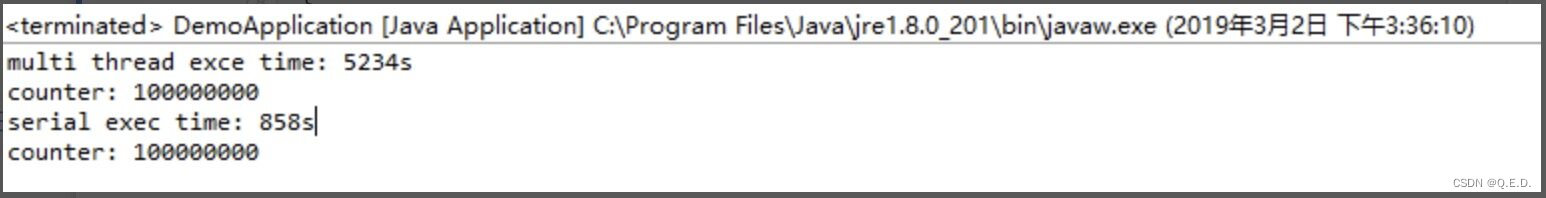

}After execution, take a look at the time test results of the two:

Comparing the data, we can see that serial execution is faster than concurrent execution here. This is because thread context switching adds overhead: the synchronized keyword leads to lock contention, and contention causes context switches. Even without the synchronized keyword, concurrent execution in this test would not beat serial execution, because context switching still exists between the threads. The designs of Redis and Node.js reflect the advantages of single-threaded serial execution well.

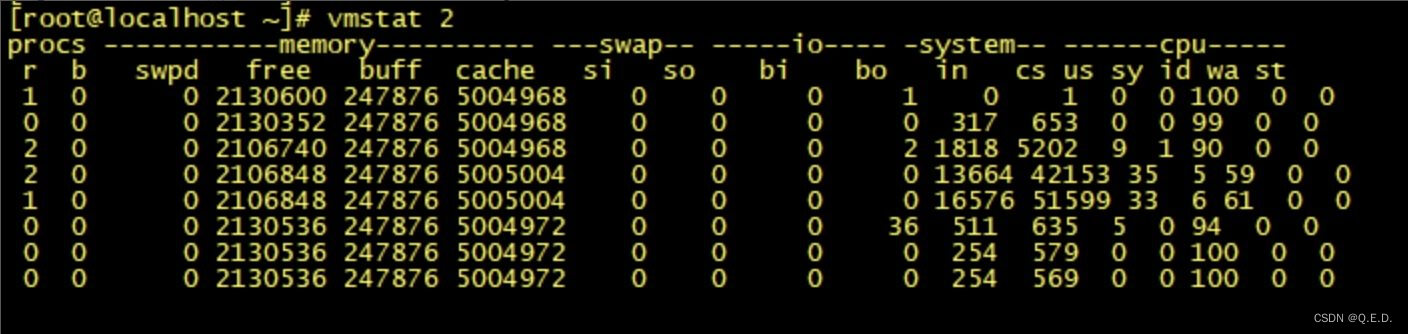

On Linux, you can use the vmstat command to monitor the system's context-switch frequency while the Java program is running. The cs column shows it, as in the following figure:

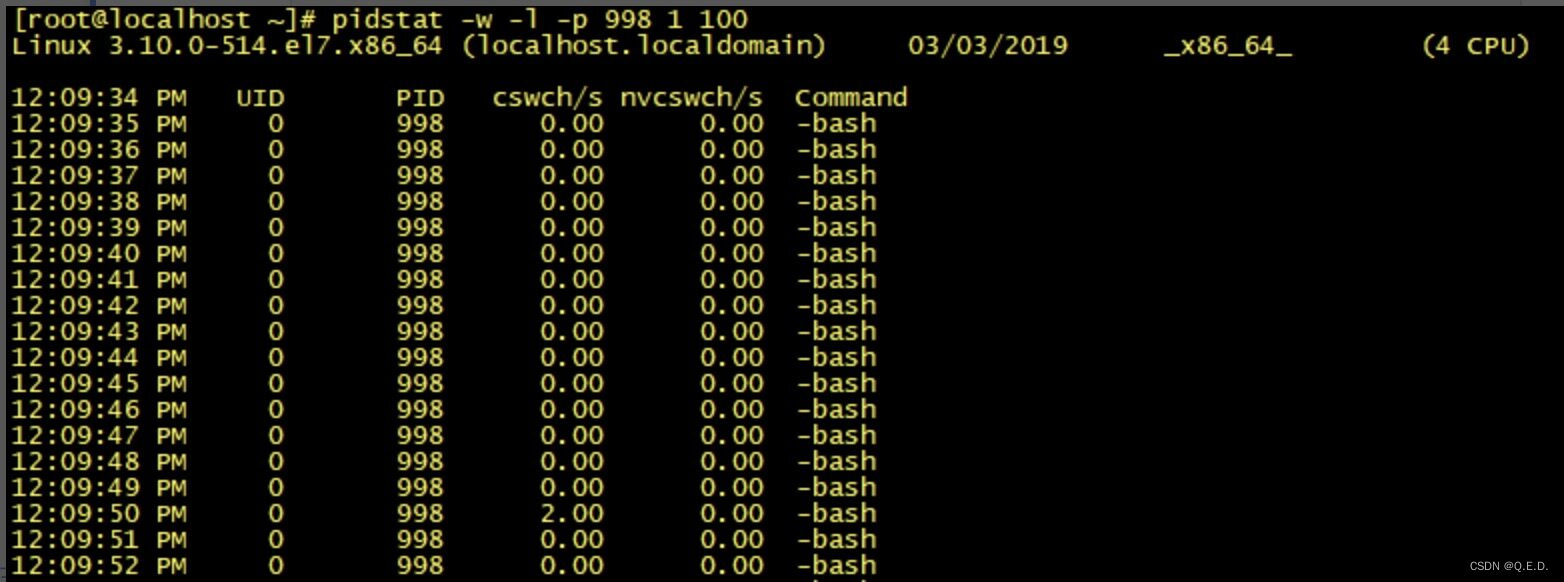

To monitor the context switches of a particular application, you can use the pidstat command (for example, pidstat -w -p <pid>) to watch the context switches of the specified process.
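On Linux, per-thread context-switch counters are also exposed through the /proc file system, so a Java program can read its own numbers. The sketch below assumes a Linux /proc layout in which each thread has a status file under /proc/self/task containing `voluntary_ctxt_switches` and `nonvoluntary_ctxt_switches` lines; the class name `CtxSwitchCounters` is hypothetical, and on other systems the program simply reports that the directory is missing.

```java
import java.io.IOException;
import java.nio.file.DirectoryStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Sums the context-switch counters of all threads in the current JVM (Linux only). */
final class CtxSwitchCounters {

    /** Extracts every "<name>ctxt_switches: <number>" line from a status file. */
    static Map<String, Long> parse(List<String> statusLines) {
        Map<String, Long> counters = new LinkedHashMap<>();
        for (String line : statusLines) {
            int colon = line.indexOf(':');
            if (colon < 0) {
                continue;
            }
            String key = line.substring(0, colon).trim();
            if (key.endsWith("ctxt_switches")) {
                counters.put(key, Long.parseLong(line.substring(colon + 1).trim()));
            }
        }
        return counters;
    }

    public static void main(String[] args) throws IOException {
        Path taskDir = Paths.get("/proc/self/task");
        if (!Files.isDirectory(taskDir)) {
            System.out.println("/proc/self/task not found; this sketch assumes Linux");
            return;
        }
        long voluntary = 0;
        long involuntary = 0;
        try (DirectoryStream<Path> threads = Files.newDirectoryStream(taskDir)) {
            for (Path thread : threads) {
                try {
                    Map<String, Long> c = parse(Files.readAllLines(thread.resolve("status")));
                    voluntary += c.getOrDefault("voluntary_ctxt_switches", 0L);
                    involuntary += c.getOrDefault("nonvoluntary_ctxt_switches", 0L);
                } catch (IOException e) {
                    // The thread exited between listing and reading; skip it.
                }
            }
        }
        System.out.println("voluntary: " + voluntary + ", involuntary: " + involuntary);
    }
}
```

Printing these totals before and after a workload gives a rough count of the spontaneous (voluntary) and non-spontaneous (involuntary) switches it caused.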

Windows has no tool like vmstat, so on Windows we can use Process Explorer to view the number of context switches between threads while the program is executing.

As for where exactly the system overhead arises during a switch, it can be summarized as follows:

- The operating system saves and restores context;

- The scheduler performs thread scheduling;

- Processor cache reload;

- Context switching may also cause the entire cache area to be flushed, thereby incurring time overhead.
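To get a rough feeling for these costs, we can bounce a token back and forth between two threads so that each hand-off forces one thread to wait for the other. The sketch below uses `SynchronousQueue` for the hand-off; the class name `HandoffCost` is an assumption, and the measured time also includes queue overhead, JIT warm-up, and any spinning the JVM does before it actually blocks a thread, so treat the result as an order-of-magnitude hint rather than a benchmark.

```java
import java.util.concurrent.SynchronousQueue;

/** Rough estimate of the cost of handing work back and forth between two threads. */
final class HandoffCost {

    /** Bounces a token between two threads; returns the number of completed round trips. */
    static int pingPong(int roundTrips) {
        SynchronousQueue<Integer> ping = new SynchronousQueue<>();
        SynchronousQueue<Integer> pong = new SynchronousQueue<>();
        Thread echo = new Thread(() -> {
            try {
                for (int i = 0; i < roundTrips; i++) {
                    pong.put(ping.take()); // wait for the token, then send it back
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        echo.start();
        int completed = 0;
        try {
            for (int i = 0; i < roundTrips; i++) {
                ping.put(i);
                if (pong.take() == i) {
                    completed++;
                }
            }
            echo.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return completed;
    }

    public static void main(String[] args) {
        int roundTrips = 100_000;
        long start = System.nanoTime();
        int completed = pingPong(roundTrips);
        long elapsed = System.nanoTime() - start;
        System.out.println("round trips: " + completed);
        System.out.println("approx. ns per round trip: " + (elapsed / Math.max(1, completed)));
    }
}
```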

4. Summary

Context switching is the process in which one working thread is suspended and another thread occupies the processor and starts executing its task. Both spontaneous operations (calls made by the Java program) and non-spontaneous operations (induced by the system or the virtual machine) lead to context switching, and therefore to system overhead.

More threads do not necessarily make the system run faster. So when the amount of concurrency is relatively large, when should we use a single thread and when should we use multiple threads?

Generally, we can use a single thread when each piece of logic is relatively simple and executes very quickly. For example, Redis, which we mentioned earlier, reads values quickly from memory and does not need to consider blocking caused by I/O bottlenecks. In scenarios where the logic is relatively complex, the waiting time is relatively long, or a large amount of computation is required, I recommend using multi-threading to improve the overall performance of the system. Examples include file read and write operations in the NIO era, image processing, and big data analysis.
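The "long waiting time" case can be sketched as follows. Here `Thread.sleep` stands in for a blocking I/O call, and the class and method names (`WaitingTasksDemo`, `simulatedIo`, `serialMillis`, `pooledMillis`) are hypothetical; real workloads will behave differently depending on how much of the time is actually spent waiting.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

/** Compares serial and pooled execution of tasks that mostly wait. */
final class WaitingTasksDemo {

    /** Stand-in for a blocking I/O call: the thread just waits. */
    static void simulatedIo(long millis) {
        try {
            Thread.sleep(millis);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }

    /** Runs the tasks one after another on the calling thread. */
    static long serialMillis(int tasks, long waitMillis) {
        long start = System.nanoTime();
        for (int i = 0; i < tasks; i++) {
            simulatedIo(waitMillis);
        }
        return (System.nanoTime() - start) / 1_000_000;
    }

    /** Runs the tasks on a fixed pool with one thread per task. */
    static long pooledMillis(int tasks, long waitMillis) {
        ExecutorService pool = Executors.newFixedThreadPool(tasks);
        long start = System.nanoTime();
        try {
            List<Future<?>> futures = new ArrayList<>();
            for (int i = 0; i < tasks; i++) {
                futures.add(pool.submit(() -> simulatedIo(waitMillis)));
            }
            for (Future<?> future : futures) {
                future.get(); // wait for every task to complete
            }
        } catch (InterruptedException | ExecutionException e) {
            throw new IllegalStateException("pooled run failed", e);
        } finally {
            pool.shutdown();
        }
        return (System.nanoTime() - start) / 1_000_000;
    }

    public static void main(String[] args) {
        System.out.println("serial ms: " + serialMillis(4, 100));
        System.out.println("pooled ms: " + pooledMillis(4, 100));
    }
}
```

Because the threads spend nearly all of their time waiting rather than competing for the CPU, the waits overlap and the cost of the extra context switches is small by comparison. This is the opposite of the counter example earlier, where the threads did almost no work between lock acquisitions.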

5. Questions to think about

What we mainly discussed above is multi-threaded context switching. When I talked about classification earlier, I also mentioned context switching between processes. So did you know that context switching between processes can occur when synchronized is used in multi-threaded code? Where specifically does it happen?