Hello friends, I am rumor.

The most lying in my WeChat floating window is the work of generating images from text. I thought I could handle this direction, but since they started using the Diffusion model, I can no longer keep up with it, and this kind of articles are frequently From page 20, I slowly let myself go.

When I was clearing out the inventory of these reports that day, I suddenly had a torture question: Why are everyone looking in this direction? These generated paintings are amazing, but can they produce actual value? How should it be implemented or even commercialized?

With this question in mind, I downloaded all the papers I knew. They are:

OpenAI的DALLE (21/01)、GLIDE (22/03)、DALLE2 (22/04)

Meta的Make-A-Scene (22/03)

Google的Image (05/22)、Party (06/22)

Zhiyuan Enlightenment 2.0 (21/06), CogView (21/11) jointly developed by Zhiyuan Ali Dharma Academy and Tsinghua University

Baidu's Wenxin ERNIE-ViLG (21/12)

Bytes CLIP-GEN (22/03)

It can be seen how lively this direction is this year. Google and OpenAI even started to roll their own hands, releasing different jobs before and after.

Back to the topic, about why everyone is going in this direction , after reading the Intro of the above articles, I found that the views are relatively unified, and the core is to constantly pursue the ability to understand the model .

As Richard Feynman is quoted by ERNIE-ViLG:

What I cannot create, I do not understand

When the model can create an accurate corresponding image based on the text, it proves that it has the four capabilities listed in CogView:

A series of features such as shape and color are extracted from the pixels. That is to say, when a picture is input to the model, it can really "see" various objects and features in it like a human being, rather than one by one. pointless pixels

understand the text

Aligning objects and features in images to words (including synonyms), means the model is able to relate two modalities of the same thing

Learn how to combine various objects and features, this generative ability requires a higher level of cognition

With the continuous investment of resources, these generated pictures are getting stronger and stronger at a speed visible to the naked eye. So although these pictures are fancy, how can they be implemented and what actual value can they produce?

First of all, the most direct one is to assist artists to create, or even create independently . For example, at the end of last year, I found that four paintings generated by a PhD student using AI were sold for 2304 yuan in Taobao auctions . I also researched some foreign websites. Using AI Technology pays to generate a particular style of creation. When I was writing this article, I searched on Taobao again, and found that there are still people auctioning AI-generated artwork, and the price is not cheap:

To be honest, I am not a supporter of this landing direction. I think the thinking and experience of the "people" behind the paintings are the reason for the achievement of art. But the value is determined by people, and it just so happens that NFT has recently caught up, so it is unknown what this direction will develop into.

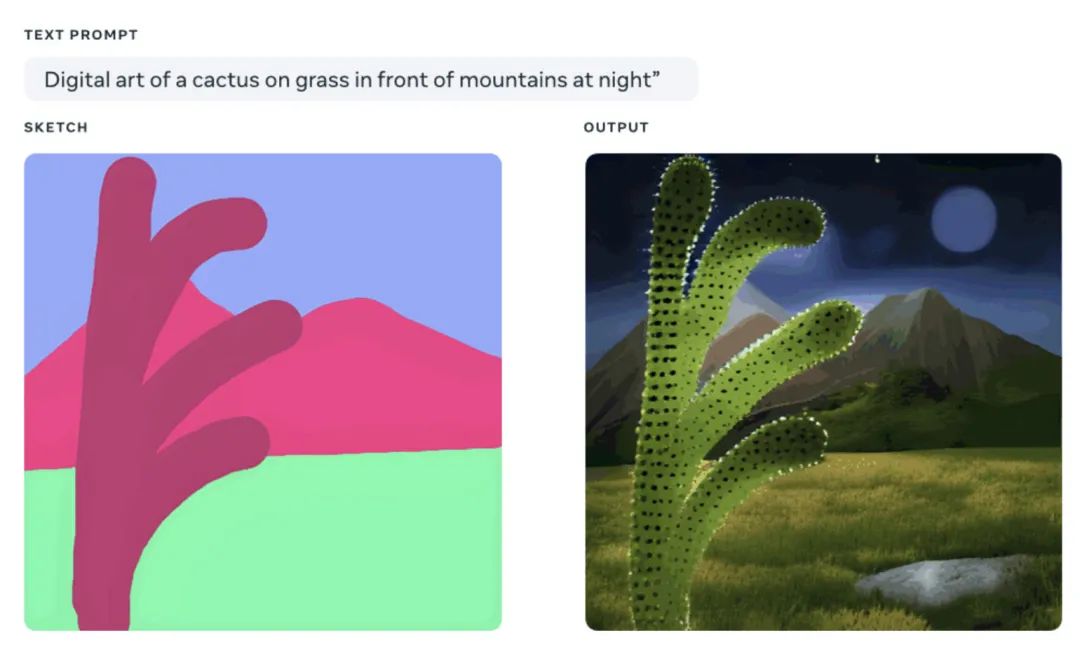

Another landing direction is children's education . Meta's latest work, Make-A-Scene, is very suitable for this direction. Let children draw sketches and describe scenes, and then they can generate great works, thereby enlightening children's interest and imagination in art. This direction is still very practical and can be realized.

Another direction is content production. The prospect of this direction is very broad. Let’s talk about 3D first. Now there is no need to say more about the market of 3D animation and games (it also includes the metaverse), but making a 3D character and scene requires modeling, texture, and binding. If AI can assist in the production of complex processes such as setting bones, making animations, and adding special effects, it will save a lot of labor costs and increase the speed of content output. Moreover, the real objects generated by the current model are also very realistic. If you think about it further, maybe you can input a script and output a movie in the future.

However, the current model capabilities are still far from the ultimate goal, and the controllability of the model needs to be further improved.

AI has been subtly affecting our lives, both good and bad. For example, search engines use AI algorithms to sort, and some people use AI algorithms to generate a lot of garbage content for SEO; another example is editing software that uses AI to reduce the cost of manual dubbing. Steal other people's videos, use AI to change the voice to wash manuscripts and make a lot of junk clips.

Although bad things happen, fortunately, this is a process of confrontation, and the algorithms of both parties will become stronger and stronger in the confrontation.

I am a punk and geek AI algorithm lady rumor

Graduated from Beihang University, NLP Algorithm Engineer, Google Developer Expert

Welcome to follow me, take you to learn and take your liver

Spin, jump, and blink together in the age of artificial intelligence

"I hope that I can increase the speed of my B station video as soon as possible"