Link to the paper: Prototypical Matching and Open Set Rejection for Zero-Shot Semantic Segmentation

is not open source

1. Abstract

- The DCNN approach to the semantic segmentation problem requires a large number of pixel-annotated training samples.

- We propose zero-shot semantic segmentation, which aims to recognize not only known classes included in training, but also novel classes never seen before.

- We employ a strict inductive setting where only the visible classes (

seen classes) are available at training time - We propose an open -aware prototypical matching method to accomplish segmentation: the Prototypic method extracts visual features through a set of prototypes (

prototypes), allowing for the convenience and flexibility of adding new unseen classes (unseen classes). Prototype projections are trained to map semantic features onto seen prototypes and generateunseen classesprototypes ( ) for unseen categories (prototypes). - Furthermore, open -set rejection is utilized to detect all objects that are not of the seen class, which greatly reduces the misclassification of unseen objects as seen classes due to lack of training instances of seen classes.

- We applied the framework on two split datasets, Pascal VOC 2012 and Pascal Context , and achieved state-of-the-art

2. Introduction

2.1. Defining ZSS

- zero-shot segmenta-tion ( ZSS ): ZSS was proposed in many papers such as "Zero-shot semantic segmentation", "Semantic projection network for zero-and few-label semantic segmentation", and the goal is to provide visible and invisible categories The object generates a segmentation mask (segmentation mask), as shown in the figure:

- The original ZSL ( zero-shot learning ) does not need to distinguish between seen and unseen classes, which is unrealistic and contradicts the recognition conditions in the real world. Later, "Toward open set recognition" proposed generalized zero - shot learning ( GZSL ), because image samples from both seen and unseen classes often appear together, and it is important to recognize both groups at the same time. ZSS in this paper stands for generalized case.

- In ZSS, an important source of information is semantic features—semantic information encoded by high-dimensional vectors. Semantic information can include: automatically extracted word vectors, manually defined attribute vectors, context-based embeddings, or a combination of them. Each class (visible or not) has its own semantic representation. The method of using invisible information divides ZSS into two settings: inductive training and transductive setting ( see Figure 1)

- Inductive training: only the basic features and semantic representations of the seen classes are available

- Transformation training: In addition to the base and semantic features of seen classes, access to semantic features of unseen classes (sometimes unannotated images)

- Although several methods (such as ZS3 [5], CaGNet [20], and CSRL [33]) have been developed under transfer learning, this setting is indeed impractical because it violates unacceptable assumptions, And significantly reduce the challenge. Nonetheless, there is consensus in both settings that unseen curriculum ground truths should not be present or exploited during training. Therefore, it should prevent the misuse of the ground truth of unseen classes when training the classifier

2.2. Contributions of this paper

- We elucidate the induction and conduction settings of ZSS and perform

- We use prototype matching in ZSS, combining semantic and visual information and giving it the flexibility to add new unseen categories during testing

- For the first time, we introduce open set rejection in ZSS, which effectively alleviates the bias problem and improves parsing performance

- We achieve state-of-the-art performance on ZSS

3. Related Work

3.1. Zero-Shot Segmentation

- The purpose of zero-shot segmentation is to transfer knowledge from seen classes to unseen classes by bridging semantic and visual information.

- The challenge of ZSS comes not only from domain shift , but also from the apparent difference of visible classes. Most of the existing works are devoted to solving the domain transfer problem in ZSS. Specifically, "Learning unbiased zero-shot semantic segmentation networks via transductive transfer" alleviates the problem of strong discrepancies to visible classes through a transductive approach, that is, using labeled visible images and unlabeled non- Visible images for training.

- Another perspective of ZSS is to generate comprehensive visual features for invisible classes, such as "From pixel to patch: Synthesize context-aware features for zero-shot semantic segmentation" Use category-level semantic representation and pixel-level context information to generate synthetic invisible feature.

3.2. Zero-Shot Learning

This part is not very useful

3.3. Generalized Zero-Shot Learning

Openmax ("Towards open set deep networks" CVPR2016) redistributes the probability scores generated by SoftMax and estimates the probability of inputs belonging to unknown classes. Furthermore, the difficulty of training an unknown set stems from the lack of unknown samples. Correspondingly, some works "Open set learning with counterfactual images" (ECCV 2018), "Gen- erative openmax for multi-class open set classification" (BMVC 2017) suggest synthesizing images of invisible classes for network training. In this work, we share the same spirit with the sample synthesis method. We randomly replace some known objects/contents in a given image with synthetic unknown objects/contents. The corresponding note in the ground truthmask is changed to "Unknown"

4. Model

4.1. Training phase

- Stage 1: Hope to get a representation of all visible classes

Vision Prototypes: train an open perception (open-aware) segmentation network, first obtain the(N,H,W)dimensional visual features (Vision Feature) through the feature extraction layer, the purpose of the segmentation network is to obtain a visual prototype (Trainable Vision Prototypes), theVision PrototypesIt can represent all visible classes and invisible classes (here all invisible classes are unified intoUnknownone class), and each class(N,1,1)is represented by a feature vector, which is obtained by convolving each class(N,1,1)vector with ( ) , and the above The category whose position takes the maximum value is used as the predicted category for the current position. The final output obtained, and the value of Mask generates loss, to optimize , and finally get the representation of all visible classes (Vision Feature(N,H,W)(H,W)(i,j)(1,H,W)(1,H,W)Vision PrototypesVision PrototypesVisible classes have supervisory elements in Mask, and Unknown supervisory units are invisible classes and backgrounds, that is, areas other than all visible classes, so the learning is not good. However, stage 1 does not care about these, which is also stage 2. Reasons for adding the Unknown branch) - Stage 2: Hope to get a mapping model ( ) from the semantic space (

Semantic Embedding) to the visual feature ( ) space: the prediction result of this model is the mapping prototype ( ). First, through the pre-trained Word2Vector model, the category information is converted to the semantic space ( ), and then the visual prototype obtained in stage 1 ( ) is used as a supervisory element to supervise the learning of the mapping model ( Projection Network ).Vision PrototypesProjection NetworkProjected PrototypesSeen ClassSemantic EmbeddingVision Prototypes - Looking back, isn't this a multimodal matching model from images to Word! It's just that there are more visual prototypes (

Vision Prototypes) in the middle as supervision information.

4.2. Inference phase

- On the road branch: through training, the vision prototype ( Vision Prototypes ) and the mapping model ( Projection Network ) are obtained , and a picture is input, and the vision feature (Vision Feature) is first obtained through feature extraction, and then the vision prototype (Vision Prototypes) is obtained, and the vision After the prototype (

Vision Prototypes) is convolved , the image(1,H,W)on the left is obtainedMask. At this time, it is predicted that the visible class is directly output; the predicted invisible class needs to be further confirmed, and theMaskmiddleUnknownpart is separated (of course not deducted, and 0 is assigned to the visible class area. OK) - The next branch: the Word information of the invisible class gets the semantic features ( ) through Word2Vetor , and the mapping model ( Projection Network

Semantic Embedding) obtained in the training stage obtains the mapping prototype of the invisible class, and each class is a vector(N,1,1) - Combination: Calculate the similarity between the mapping prototype of the invisible class and the visual features of the unknown class

costo obtain the final score map, and splicing with the previous visible class area to obtain the final result

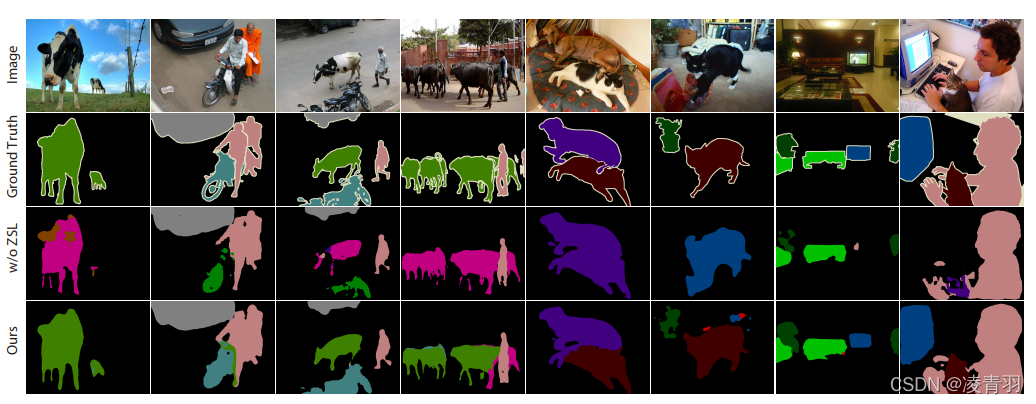

5. Model effect