
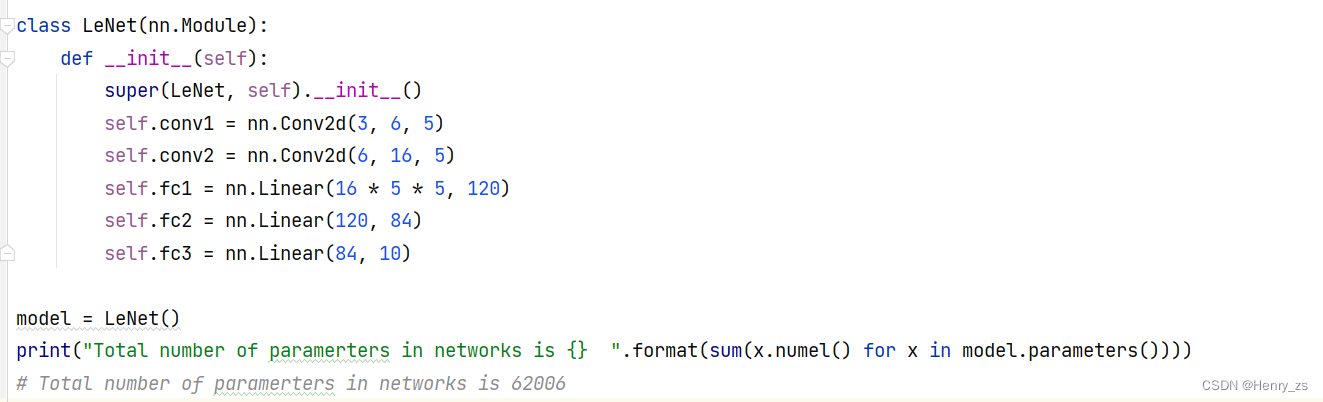

3. Calculate the number of parameters of the LeNet network

As a neural network gets deeper, its number of parameters grows. Small networks have tens of thousands of parameters, while large networks can have tens of millions.

So how do we count the parameters of a neural network?

A neural network for image classification consists of two parts: feature extraction layers + classification layers.

The feature extraction layers extract features from the image, that is, details such as edges and key points. This is similar to how a person recognizes an object: we often don't need to see exactly what the object looks like, because the general outline is enough to tell whether it is a person or a tree. The outline of the person or of the tree is the feature here, and we don't need to know what the person's face looks like.

The feature extraction layers of a neural network progressively extract such features.

If we compare a neural network to a nearsighted person, then to tell people from trees the nearsightedness can be severe (the network can be shallow): as long as they are not completely blind, they can still tell a person from a tree. Telling men from women, however, requires much sharper vision, because the outlines of men and women are similar. So as the classification task gets harder, more layers must be stacked so that the network's view is less blurred.

In neural networks, feature extraction is performed by convolution. A typical example: if the convolution kernel is a Sobel operator, it performs vertical or horizontal edge detection (for details, see spatial filtering in image processing). The convolutional layers for feature extraction are therefore nothing more than the network gradually learning to extract the features it considers key.

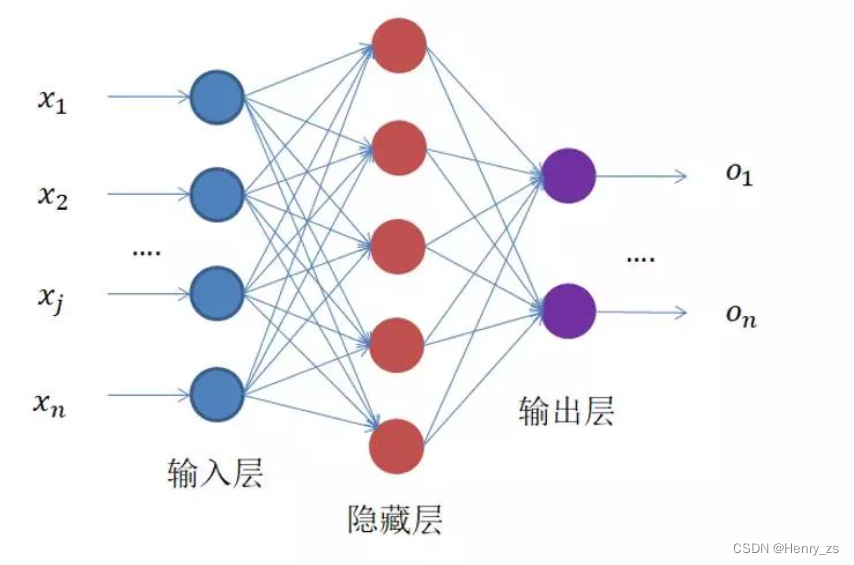
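As a rough illustration of the Sobel example, here is a minimal pure-Python sketch (no deep learning library assumed). Like CNN layers, it computes a stride-1, no-padding cross-correlation, meaning it does not flip the kernel; the helper name and the 4x5 toy image are made up for illustration.

```python
def conv2d_valid(image, kernel):
    """Stride-1, no-padding cross-correlation (what CNN layers call 'convolution')."""
    kh, kw = len(kernel), len(kernel[0])
    oh, ow = len(image) - kh + 1, len(image[0]) - kw + 1
    return [[sum(image[i + u][j + v] * kernel[u][v]
                 for u in range(kh) for v in range(kw))
             for j in range(ow)]
            for i in range(oh)]


# Sobel kernel responding to left-to-right intensity changes, i.e. vertical edges
sobel_x = [[-1, 0, 1],
           [-2, 0, 2],
           [-1, 0, 1]]

# Hypothetical 4x5 image: dark on the left, bright on the right (a vertical edge)
image = [[0, 0, 10, 10, 10] for _ in range(4)]

print(conv2d_valid(image, sobel_x))  # [[40, 40, 0], [40, 40, 0]]
```

The large responses appear where the 3x3 window straddles the dark-to-bright boundary, and the response is 0 in the flat bright region. A CNN learns kernels like this one from data instead of having them hand-designed.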

The classification layers then map the extracted features to the class labels using fully connected (linear) layers.

Accordingly, counting the parameters of the network also splits into two parts: the feature extraction (convolutional) layers + the classification (fully connected) layers.

1. Convolutional layer

Let's start with the parameters of a convolutional layer.

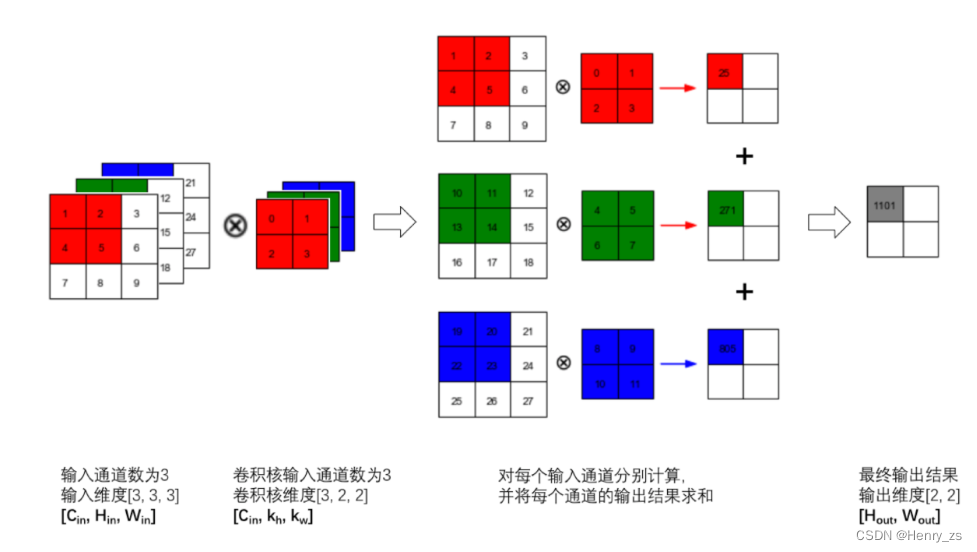

The figure above shows the convolution of a three-channel image (this third dimension of the image is called the channel). Each channel is convolved with its own kernel slice (every channel corresponds to a different kernel), and the per-channel results are then added together to produce a single-channel output.

Since what the network learns are the weights in the convolution kernels, the number of parameters here is 3*3*3 = 27 (kernel height * kernel width * number of input channels).
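To make the three-channel case concrete, the sketch below applies one group of kernel slices to a made-up 3-channel image, sums the per-channel results into a single output channel, and counts the learnable weights. The helper names and the input values are hypothetical, and stride 1 with no padding is assumed.

```python
def conv2d_valid(channel, kernel):
    """Stride-1, no-padding cross-correlation of one 2D channel with one 2D kernel."""
    kh, kw = len(kernel), len(kernel[0])
    oh, ow = len(channel) - kh + 1, len(channel[0]) - kw + 1
    return [[sum(channel[i + u][j + v] * kernel[u][v]
                 for u in range(kh) for v in range(kw))
             for j in range(ow)]
            for i in range(oh)]


def conv_one_group(image, group):
    """Convolve each input channel with its own kernel slice, then sum into one channel."""
    assert len(image) == len(group), "need one kernel slice per input channel"
    maps = [conv2d_valid(ch, k) for ch, k in zip(image, group)]
    rows, cols = len(maps[0]), len(maps[0][0])
    return [[sum(m[i][j] for m in maps) for j in range(cols)] for i in range(rows)]


# Hypothetical 3-channel 4x4 image whose channel c is filled with the value c + 1
image = [[[c + 1] * 4 for _ in range(4)] for c in range(3)]
# One group of three 3x3 kernel slices, every weight set to 1
group = [[[1] * 3 for _ in range(3)] for _ in range(3)]

out = conv_one_group(image, group)
n_weights = sum(len(k) * len(k[0]) for k in group)
print(out)        # [[54, 54], [54, 54]]
print(n_weights)  # 27
```

Three input channels go in, but only one output channel comes out, and the group holds exactly 3*3*3 = 27 weights.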

What if the image does not have 3 channels? And must the output always be a single channel?

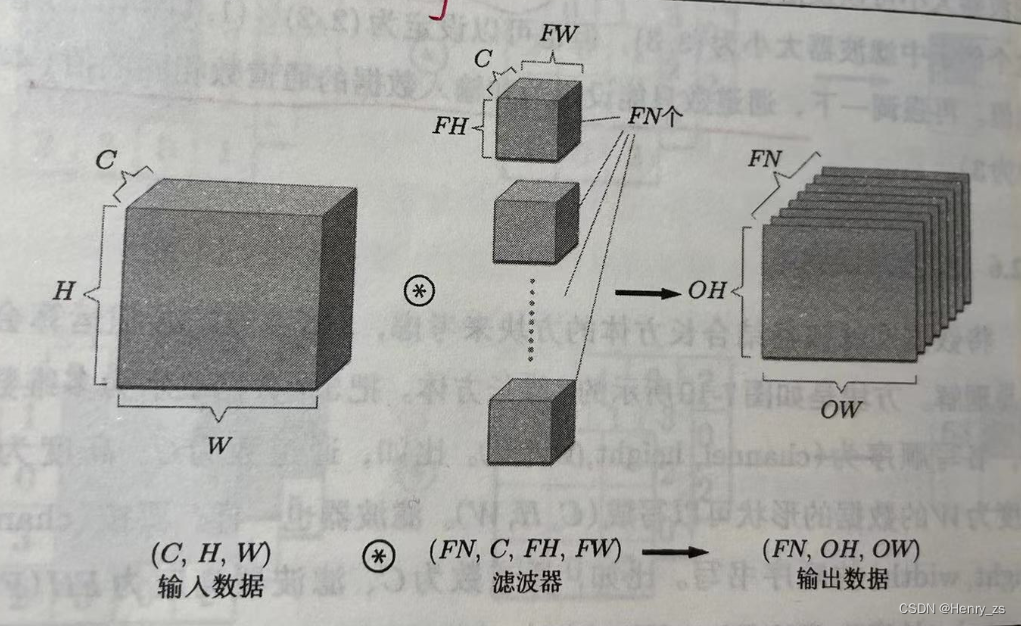

Think of each set of convolution kernels as a block. If the image has C channels, stacking C kernel slices together forms one block (one set of kernels), and convolving the image with one block still yields a single-channel result (one output feature map).

To get a multi-channel output, simply use more blocks (sets of kernels): each block produces one output channel.

So the number of weights in the figure above is FH * FW * C * FN, where FH and FW are the kernel height and width, C is the number of input channels, and FN is the number of blocks. Each block is a stack of C kernel slices and therefore holds FH * FW * C weights, and there are FN blocks.

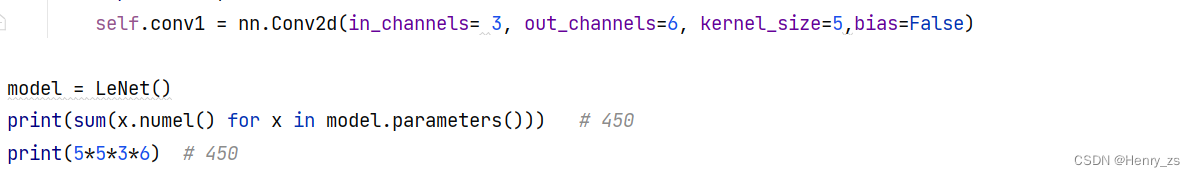

Therefore, the formula for the weights of a convolutional layer is: kernel size (FH * FW) * depth of one set of kernels (C) * number of sets (FN).

Note the correspondence: the depth of each set of kernels = the depth of the input image, and the number of blocks = the depth of the output image.
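Both correspondences can be checked with a small shape-bookkeeping helper. This is a sketch assuming stride 1 and no padding; the function name and the 3x32x32 input are hypothetical.

```python
def conv_output_shape(input_shape, kernel_depth, fn, fh, fw):
    """Output (channels, height, width) of a conv layer with stride 1 and no padding."""
    c, h, w = input_shape
    # Each block of kernels must be exactly as deep as the input image.
    if kernel_depth != c:
        raise ValueError(f"kernel depth {kernel_depth} must equal input depth {c}")
    # One output channel per block; each spatial side shrinks by (kernel size - 1).
    return (fn, h - fh + 1, w - fw + 1)


print(conv_output_shape((3, 32, 32), kernel_depth=3, fn=6, fh=5, fw=5))  # (6, 28, 28)
```

The input depth only constrains the kernels; the output depth is decided entirely by how many blocks we choose to use.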

Applying this formula to the example below gives its number of weights.

The number of bias terms equals the number of blocks, i.e., the depth of the output.

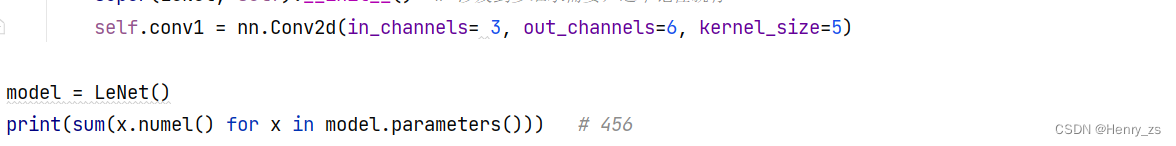

With bias=True, add one bias per output channel, here 6, so the total is 3 * 6 * 5 * 5 + 6 = 456.
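The whole formula, bias included, fits in a few lines. The parameter names below mirror PyTorch's `nn.Conv2d(in_channels, out_channels, kernel_size, bias=True)` for readability, but this is plain Python and assumes the default `groups=1` (ordinary, non-grouped convolution).

```python
def conv2d_params(in_channels, out_channels, kernel_size, bias=True):
    """FH * FW * C * FN weights, plus FN biases when bias=True."""
    if isinstance(kernel_size, int):
        fh = fw = kernel_size  # square kernel, e.g. 5 means 5x5
    else:
        fh, fw = kernel_size
    weights = fh * fw * in_channels * out_channels
    return weights + (out_channels if bias else 0)


print(conv2d_params(3, 6, 5, bias=False))  # 450
print(conv2d_params(3, 6, 5))              # 456
```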

2. Classification layer

The calculation for a fully connected layer is very simple: number of weights = input size * output size.

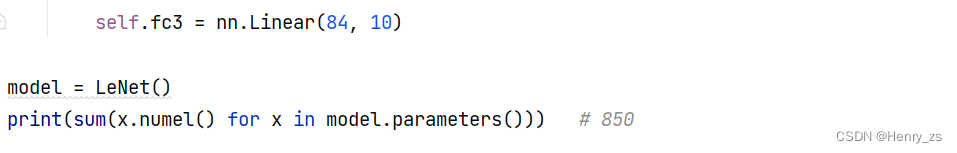

For the linear layer shown above, this gives 84*10 = 840.

The number of bias terms equals the number of outputs.

With bias=True, add one bias per output, here 10, so the total is 840 + 10 = 850.
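The same count for a fully connected layer, as a plain-Python sketch. The argument names mirror PyTorch's `nn.Linear(in_features, out_features, bias=True)` for readability; every output is a weighted sum over all inputs, which is where the input * output weights come from.

```python
def linear_params(in_features, out_features, bias=True):
    """in_features * out_features weights, plus out_features biases when bias=True."""
    return in_features * out_features + (out_features if bias else 0)


print(linear_params(84, 10, bias=False))  # 840
print(linear_params(84, 10))              # 850
```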

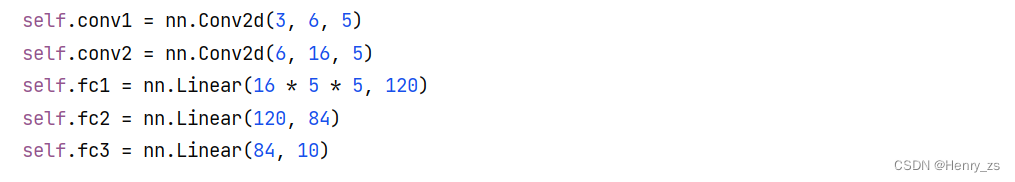

3. Calculate the number of parameters of the LeNet network

The LeNet network structure is shown in the figure above.

The number of parameters of conv1 is 3*6*5*5 + 6 = 456, where 3 is the depth of the input, 6 is the depth of the output, and 5 is the kernel size (a square 5*5 kernel).

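The other layers are computed the same way: conv2 has 6*16*5*5 + 16 = 2416 parameters, fc1 has 16*5*5*120 + 120 = 48120 (its 400 inputs are conv2's 16 output channels of size 5*5, flattened), fc2 has 120*84 + 84 = 10164, and fc3 has 84*10 + 10 = 850. Therefore, the total number of network parameters is 456 + 2416 + 48120 + 10164 + 850 = 62006.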
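Putting both formulas together, the sketch below tabulates the layer-by-layer count. The layer sizes are read off the counts in the text (conv2 maps 6 to 16 channels with 5x5 kernels, fc1 has 16*5*5 = 400 inputs); it assumes the pooling and activation layers are parameter-free (for example max pooling and ReLU), as the total above implies.

```python
def conv_params(c_in, c_out, k):
    """Square k x k kernels: k * k * C * FN weights + FN biases."""
    return k * k * c_in * c_out + c_out


def fc_params(n_in, n_out):
    """n_in * n_out weights + n_out biases."""
    return n_in * n_out + n_out


# Layer sizes inferred from the parameter counts in the text
layers = [
    ("conv1", conv_params(3, 6, 5)),
    ("conv2", conv_params(6, 16, 5)),
    ("fc1", fc_params(16 * 5 * 5, 120)),
    ("fc2", fc_params(120, 84)),
    ("fc3", fc_params(84, 10)),
]

for name, n in layers:
    print(f"{name:>5}: {n}")
total = sum(n for _, n in layers)
print("total:", total)  # total: 62006
```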

The parameter total reported for the model is the same as our calculation.