1. Principles of Data Quality Assurance

How to evaluate the quality of data, the industry has different standards, Alibaba mainly evaluates from four aspects: completeness, accuracy, consistency, and timeliness;

1. Integrity

Data integrity is the most basic guarantee of data;

-

Integrity: Refers to whether the records and information of the data are complete or missing;

-

Missing data: mainly includes missing records and missing information in a certain field in a record;

Loss of records: For example, the number of orders sent every day in the transaction is about 1 million. If the payment orders suddenly drop to 10,000 on a certain day, it is likely that the records are lost;

Loss of fields in the record: For example, the product ID and seller ID of the order must exist, and the number of null values in these fields must be 0. Once it is greater than 0, the integrity constraint is violated;

2. Accuracy

-

Accuracy: refers to whether the information and data recorded in the data summary are accurate, and whether there is any abnormal or wrong information;

Accuracy: The information recorded in the data sheet must be consistent with the actual facts in the business process; how to judge whether it is accurate: card point monitoring - formulate corresponding rules, and verify the data according to the root, and the data that conforms to the rules is considered accurate;

For example, if the confirmed receipt amount of an order is negative, or the order is placed before the establishment of the company, or the order has no buyer information, etc., there must be problems;

3. Consistency

-

Consistency: It is generally reflected in a data warehouse system with a large span, such as Ali's data warehouse, which has many branches of business data warehouses. For the same data, consistency must be guaranteed;

Consistency: that is, the public data between multiple business data warehouses must be consistent in each data warehouse;

For example, user IDs must be of the same type from the processing of the online business database to the data warehouse, and then to each consumption node, and the length must also be consistent;

-

Therefore, when Ali built the data warehouse, it had the processing of the public layer to ensure the consistency of the data;

4. Timeliness

-

Timeliness: refers to the timely output of data;

It is mainly reflected in the application of data, and timely output should be given to the demand side;

-

General decision support analysts hope to see the data of the previous day on the same day, instead of waiting three to five days to see a certain data analysis result; otherwise, the value of data timeliness has been lost;

For example, Ali's "Double 11" transaction large-screen data must be at the second level;

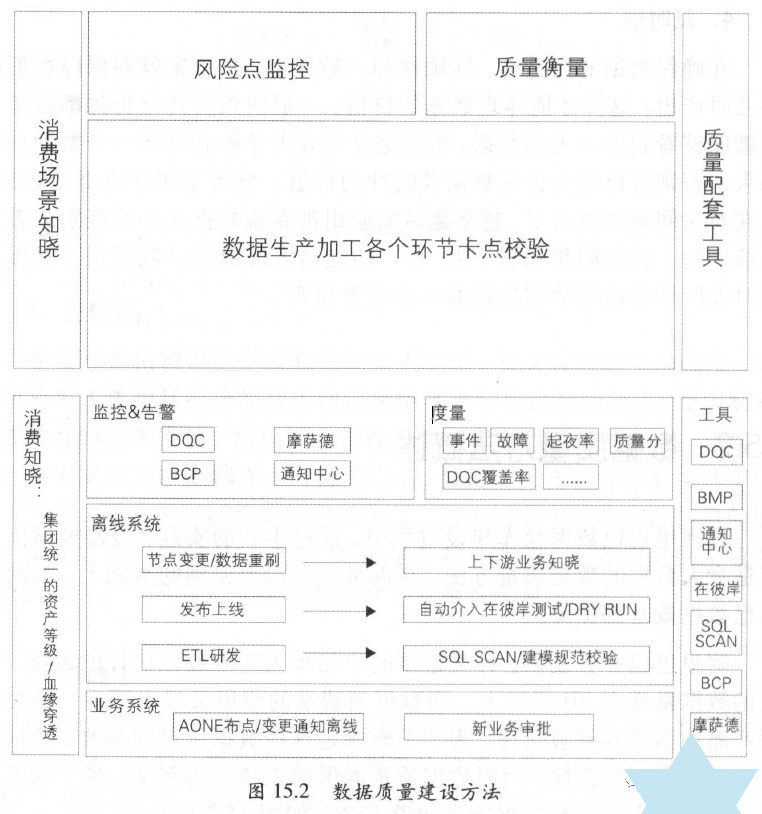

2. Overview of data quality methods

Ali's data quality construction system:

1. Awareness of consumption scenarios

-

Function: analyze and solve the problems of consumption scene awareness;

-

method:

Through the data asset level and metadata-based application links, analyze and solve the problem of consumption scene awareness; determine the data asset level: determine the level of data assets according to the degree of influence of the application;

-

process:

According to the blood relationship of the data link, the asset level is pushed up to each link of data production and processing, the asset level of all data involved in the link is determined, and different processing methods are adopted according to the different asset levels in each processing link;

2. Card point verification in each link of data production and processing

Mainly check the two parts of the data card point: the card point verification of each link of data production and processing in the online system and the offline system;

-

Online system: OLTP (On - Line Transaction Processing, online transaction processing) system;

Card point verification in each link of production and processing in the online system:

-

According to different asset levels, when the corresponding business system changes, decide whether to notify the downstream of the change;

-

For businesses with high asset levels, when new business data appears, whether to include it in the statistics needs to be checked and approved;

-

Offline system: OLAP (On - Line Analytical Processing, online analytical processing) system;

Card point verification in each link of offline system production and processing:

Mainly include: code development, testing, release, historical or error data flashback and other links of card point verification; code development stage, test stage before release

For different data asset levels, the requirements for verification are different;

3. Risk point monitoring

Risk point monitoring: mainly monitors data quality and timeliness issues that may arise during data operation;

Risk point monitoring is mainly carried out in two aspects:

-

Risk point monitoring of online data:

Mainly check the business rules for the data generated by the daily operation of the online system;

Mainly use the "real-time business detection platform BCP (Biz Check Platform)";

-

Risk point monitoring of offline data:

Mainly for data quality monitoring and timeliness monitoring of the data generated by the daily operation of the offline system;

DQC: monitor data quality;

Mossad: monitor data timeliness;

4. Quality measurement

-

Measure of quality:

Ex-ante measurement: such as DQC coverage;

Measurement after the fact:

Follow up quality problems, determine the cause of quality problems, responsible persons, solutions, etc., and use them for data quality review to avoid similar incidents from happening again;

According to the degree of impact of quality problems on assets of different grades, determine whether they are low-impact events or failures with greater impact;

-

Quality score: scoring based on pre- and post-event measurement data;

5. Quality supporting tools

-

For all aspects of data quality, there are related tools to ensure that in order to improve efficiency;

01 Awareness of consumption scenarios

-

Questions to know about consumption scenarios:

It is difficult for data R&D engineers to confirm whether the data of hundreds of PB is important? Are they all guaranteed? Is some data out of date? Does everything need to be precisely QA?

-

Solution: data asset level scheme;

-

output:

According to the degree of influence of data products and applications, classify the assets of data products and applications, and mark them;

According to the blood relationship of the data link, the asset level is pushed up to each link of each data production and processing, and the asset level of all data involved in the link is determined, and marked; (the level label is consistent with the corresponding data product/application)

1. Definition of data asset class

Background: For Ali's huge data warehouse, the scale of data has reached exabytes. For such a large amount of data, if generalized, it will inevitably lead to inability to concentrate and guarantee inaccuracy;

Five data levels, with the importance of different properties reduced at a time:

-

destructive nature

That is, once the data is wrong, it will cause major asset losses, face major loss of benefits, and cause major public risks;

-

global nature

That is, the data is directly or indirectly used for the evaluation of the group's business and effects, the operation and maintenance of important platforms, the disclosure of external data products, and the influence of user behavior on Ali's websites, etc.;

-

local properties

That is, the data is directly or indirectly used for internal general data products or operational/product reports, and if there is a problem, it will affect the business department or business line, or cause loss of work efficiency;

-

general nature

That is, the data is mainly used for the daily data analysis of Xiao Er, and the occurrence of problems will have little or no impact;

-

unknown nature

That is, the data is mainly used for the daily data analysis of Xiao Er, and the occurrence of problems will have little or no impact;

-

unknown nature

If the application scenario of the data cannot be clearly stated, it is marked as unknown;

-

For different data asset levels, use English Asset to mark:

Destructive properties: Class A1;

Global nature: A2 grade;

Local nature: A3 grade;

General nature: A4 grade;

Unknown properties: A5 grade;

Importance: A1 > A2 > A3 > A4 > A5;

If a piece of data appears in multiple application scenarios, follow the high principle;

-

Data Asset Level Landing Method

Problems to be solved: For such a huge amount of data, how to label each piece of data with a grade?

Methods/steps for implementing data asset levels:

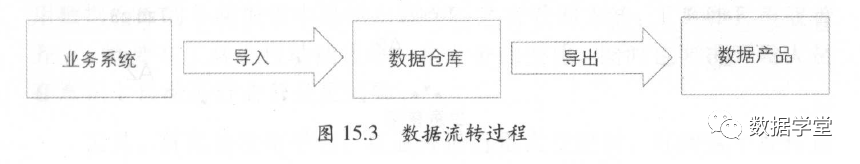

Data flow process

-

The data is generated from the business system, enters the data warehouse system through synchronization tools, and performs a series of operations such as cleaning, processing, integration, algorithms, and models in the general sense in the data warehouse;

-

Output to data products for consumption through synchronization tools;

The data from the business system to the data warehouse and then to the data products are all reflected in the form of tables. The flow process is as follows:

What is synchronized to the data warehouse (corresponding to Ali is the MaxCompute platform) is the original table of the business database, which is mainly used to carry business needs, and often cannot be directly used for data products; (generally, the full amount of data in the ODS layer)

All data products used are output tables processed by the data warehouse; (processed according to requirements/reports)

-

Classify data assets

-

According to the data transfer process, establish metadata and record the corresponding relationship between data tables and data products or applications;

-

According to the degree of influence, classify data assets for data products and applications;

-

Marking: Relying on the upstream and downstream kinship of metadata, label the entire consumption link with a certain type of data asset label (that is, mark the consumption link data);

Link: refers to the flow of data from business systems to data products;

Summarize:

Through the above steps, the confirmation of the data asset level is completed, and different levels of importance are defined for different data, which requires the support of metadata;

02 Data processing process card point verification

Purpose: To ensure data accuracy and consistency with offline data;

-

Online business system card point verification (data output link)

-

Card point verification in the online system data processing process mainly refers to the card point verification in the data production process of the online system;

-

Purpose: To ensure consistency with offline data;

-

Background/problem: The online business is complex and changeable, and it is always changing. Every change will bring about changes in data, so two things need to be done:

-

The data warehouse needs to adapt to the ever-changing business development and ensure the accuracy of the data in a timely manner;

-

It is necessary to efficiently notify the online business changes to the offline data warehouse; Ali’s solution to the above two problems: a two-pronged approach of tools and labor: it is necessary to automatically capture every business change on the tool, and at the same time require developers to be conscious. Notification of business changes;

-

-

tool

Publishing platform: sending notifications of major changes;

Notification content: change reason, change logic, change test report, change time, etc.;

Database platform: send database table change notification;

Notification content: change reason, change logic, change test report, change time, etc.;

-

Publish content

Function: When the business undergoes major changes, subscribe to the publishing process, and then give it to offline developers to make them aware of the content of the change;

Note: The business system is busy, and there are countless daily changes. Not every business change will only be offline business, which will cause unnecessary waste and affect the efficiency of online business iteration;

Subscription content: For the important high-level data assets of the whole group, sort out which changes will affect the processing of data, and then subscribe to these contents;

For example, financial reports, which are naturally A1-level assets. If the transformation of the business system will affect the calculation of financial reports, such as the agreed calculation caliber is modified by the business system, then the offline business must be notified. As an offline developer, you must also Actively pay attention to such release change information;

Checkpoints: The release platform integrates the notification function, and checkpoints are made for important scene releases, and the release can only be completed after the notification is confirmed;

-

Change awareness of database tables

Whether it is database expansion or table DDL changes with business development, offline developers need to be notified;

DDL ((Data Definition Language): Database Schema Definition Language; a language used to describe real-world entities to be stored in a database.

DDL database schema definition language is an integral part of the SQL language (Structured Query Language);

Example: CREATE DATABASE (create database), CREATE TABLE (create table);

DML (Data Manipulation Language): Data manipulation language commands; enables users to query databases and manipulate data in existing databases.

Example: insert, delete, update, select, etc. are all DML;

Background/problem: The data warehouse uses the DataX tool for data extraction, which may limit a certain database table. If the database is expanded or migrated, the DataX tool will not be aware of it, and the result may lead to data extraction errors and omissions, affecting A range of downstream applications;

Solution: Send the library table change notification through the database platform;

-

Developer

The upstream and downstream of the data asset level are connected, and this process must also be given to online developers to let them know which are important core data assets and which are only used as internal analysis data for the time being;

To improve the awareness of online developers, tell online business developers the demands of offline data, the processing process of offline data, and the application of data products through training, so that they can realize the importance of data and understand the value of data, and at the same time It also informs the consequences of errors, so that online developers should also pay attention to the goals of data when completing business goals, so that the business end and the data end are consistent;

-

Offline system card point verification (data offline processing link)

Background/problem: In the process of data from online business system to data warehouse and then to data products, data cleaning and processing need to be completed at the data warehouse level; it is precisely with data processing that there are data warehouse models and data The construction of warehouse code; how to ensure the quality of data processing is an important part of offline data warehouse to ensure data quality;

Purpose: To ensure the quality of data processing (mainly refers to the accuracy of data);

In addition, card point verification needs to be carried out in two links:

-

Card point verification when code is submitted

Background/reason: The quality of data R&D personnel is different, and the coding ability is also different, so it is difficult to guarantee the code quality efficiently;

Solution: Develop a code scanning tool SQLSCAN to scan every submitted code and extract risk points;

Card point method: use the code scanning tool SQLSCAN to scan the code to extract risk points;

-

Card point verification when the task is released and online

In order to ensure the accuracy of online data, every change needs to be tested offline and released to the online environment. The release is successful only after the online test is passed;

Card point method: test the task (referring to the changed business) before and after it goes online;

-

Testing before release: mainly including Code Review and regression testing;

-

Code Review: It is a process of improving code quality by reviewing code;

-

Regression testing: refers to retesting after modifying the old code to confirm that the modification does not introduce new errors or cause errors in other codes;

Purpose of regression testing:

Guarantee the correctness of the new logic; guarantee that it will not affect the logic that is not changed this time;

Note: For the release of task changes with a higher asset level, a strong blocking form is adopted, and the release is allowed only after the regression test is completed on the other side;

-

Post-launch test: Do dry run test online or run test in real environment;

-

Dry Run Test:

Do not execute the code, only run the execution plan to avoid syntax errors caused by inconsistencies between the online and offline environments;

-

Running test in real environment:

Test with real data;

-

Change notification before node change or data refresh

Notification content: change reason, change logic, change test report, change time, etc.;

process:

Use the notification center to automatically notify the downstream of the change reason, change logic, change test report, change time, etc. After the downstream has no objection to the change, it will release the change according to the agreed time to minimize the impact of the change on the downstream;

03 Risk point monitoring

Risk point monitoring: mainly refers to monitoring the risks that are likely to occur in the daily operation of the data, and setting up an alarm mechanism;

It mainly includes online data and offline data operation risk point monitoring;

Purpose: to ensure the accuracy of the data;

-

Online data risk point monitoring

-

Purpose: To reduce the dirty data generated by the online business system and ensure the first pass for data accuracy;

In addition, it reduces the complaints of user error information, and also reduces the rollback of offline data errors;

-

BCP: Ali's real-time business detection platform;

-

Idea/monitoring process: In each business system, when the business process is completed and the data is stored in the database, BCP subscribes to the same data, and performs logic verification in the BCP system according to the business rules set in advance. When the verification fails, it will be disclosed in the form of an alarm and given to the rule subscribers to complete the data verification;

-

The verification process of BCP:

Obtain data sources: users subscribe to data sources on the BCP platform to obtain data sources that need to be verified;

Write rules: Write rules for the subscribed data sources, that is, the logic of verification;

-

Rules/logic: It is crucial and the core of verification. Only after passing these rules can the record be considered correct;

For example, check the "time when the order was taken"; logic: the time when the order was taken will definitely not be greater than the time of the day, nor less than the time when Taobao was founded;

Configure alarms: configure different alarm forms for different rules;

Note: Due to the high configuration and operating costs of BCP, it is mainly monitored according to the data asset level;

-

Offline data risk point monitoring

Offline data risk point monitoring mainly includes monitoring data accuracy and timeliness of data output;

-

Data Accuracy Monitoring

Data accuracy is the key to data quality, so data accuracy has become the top priority of data quality, and it is the first guarantee element for all offline system processing;

Method: Data accuracy monitoring by DQC;

DQC (Data Quality Center, Data Quality Center): It mainly focuses on data quality, and automatically monitors data quality during data processing tasks by configuring data quality verification rules;

Note: To monitor data quality and report to the police, it does not process the data output itself, and the receiver of the report needs to judge and decide how to deal with it;

Monitoring method: By configuring data quality inspection rules, automatic monitoring is performed during data processing tasks;

Monitoring rules:

Strong rules: will block the execution of tasks;

Set the task to a failed state, and its downstream tasks will not be executed;

Weak rules: only alert but not block the execution of tasks;

Common DQC monitoring rules: primary key monitoring, table data volume and fluctuation monitoring, non-empty monitoring of important fields, discrete value monitoring of important enumerated fields, index value fluctuation monitoring, business rule monitoring, etc.;

Rule configuration: rely on data asset level to determine monitoring rules;

DQC checks are actually running SQL tasks, but this task is nested in the main task. Once there are too many checkpoints, it will naturally affect the overall performance; therefore, it still depends on the data asset level to determine the configuration of the rules;

Note: Different businesses will be constrained by business rules. These rules are derived from data products or consumption business requirements, configured by consumption nodes, and then pushed up to the starting point of the offline system for monitoring, so as to minimize the impact of rules;

-

data timeliness

On the basis of ensuring the accuracy of the data, it is necessary to further enable the data to provide services in a timely manner; otherwise, the value of the data will be greatly reduced, or even have no value;

Most of Ali's offline tasks:

Generally, the time interval is days, which are called "day tasks". For daily tasks, data products or data decision reports are generally required to be produced at 9:00 or even earlier every day;

In order to ensure the integrity of the data of the previous day, the daily tasks are run from zero. Since the calculation and processing tasks are all run at night, and to ensure that the daily data can be produced on time, a series of alarms and priority settings are required. , so that important tasks are prioritized and output correctly;

Important tasks: businesses with higher asset levels;

-

task priority

For Map task and Reduce task, the scheduling is a tree structure (RelNode tree). When the priority of the leaf node (RelNode node) is configured, this priority will be passed to all upstream nodes, so the priority setting is given to to the leaf node, and the leaf node is often the consumer node of the service business;

Set priority: first determine the asset level of the business, the consumption node corresponding to the high-level business is naturally configured with a high priority, and the general business corresponds to a low priority to ensure that the high-level business is output on time;

-

task alarm

Similar to task alarm and priority, it is also transmitted through leaf nodes;

It is inevitable that tasks will make mistakes during operation. Therefore, to ensure that tasks can be executed efficiently and smoothly, a monitoring and alarm system is required. For high-priority tasks, once task errors are found or output delays may occur, an alarm must be sent to Task and Business Owner;

Mossad: Ali self-developed monitoring and alarm system;

-

Mossad

Mossad: monitoring and alarm system for offline tasks; an indispensable guarantee tool for data operation and maintenance;

According to the running status of offline tasks, make real-time decisions on whether to alarm, when to alarm, alarm method, who to alarm, etc.;

Two main functions: strong security monitoring, custom alarm;

Strong security monitoring

Strong security monitoring is the core function of Mossad. It is designed only around the operation and maintenance goal, that is, business security. As long as the business is threatened at the early warning time, Mossad will definitely send an alarm to the relevant personnel;

Strong assurance monitoring mainly includes:

Monitoring scope: the task of setting strong guarantee business and all upstream tasks will be monitored;

Abnormal monitoring: task error, task slowdown, early warning service delay;

Alarm object: the default is the task owner, and you can also set the duty list to a certain person;

When to alarm: judge when to alarm according to the early warning time set by the business;

Business delay warning and error warning are judged according to the "output warning time";

Output warning time: Mossad calculates the approximate time used by the current business based on the average running time of all tasks in the current business in the last 7 days as the output warning time;

Alarm method: According to the importance and urgency of the business, it supports telephone, SMS, Wangwang, and email alarms;

Example: business consultant business (warning business delay)

Asset level and demand: The defined asset level is A2, and the output data is required to be put on the shelf at 9:00 in the morning;

Setting: Define a strong guarantee monitoring for the business consultant business, the business output time is 9:00, and the business early warning time is 7:00;

The early warning time here means that once Mossad monitors that the output time of the current business exceeds the early warning time, it will call the on-duty personnel for early warning;

For example, Mossad speculates that the output time of the business staff will be 7:30, then the telephone alarm will come out, and the on-duty personnel will judge how to speed up the output; output time calculation (early warning judgment, that is, output delay judgment) : Mossad is calculated based on the average running time of all tasks in the current business in the last 7 days; although there is a possibility of misjudgment, it is still very accurate and acceptable;

-

custom monitoring

Custom monitoring is a relatively lightweight monitoring function of Mossad. Users can configure it according to their own needs, mainly including:

-

Error alarm: Error alarm configuration can be performed according to the three monitoring objects of application, business, and task. When the monitoring object makes an error, the alarm will be sent to the person/Owner/duty table;

-

Completion alarm: According to the three monitoring objects of application, business, and task, the completion alarm configuration can be performed. When the monitoring object is completed, the alarm will be sent to the person/Owner/duty table;

-

Unfinished alarm: According to the three monitoring objects of application, business, and task, the unfinished situation alarm configuration can be performed. If the monitoring object is not completed, the alarm will be sent to the person/Owner/duty table;

-

Periodic alarm: For an hourly task in a certain period, if it is not completed at a certain time, the alarm will be given to the person/Owner/duty table;

-

Timeout alarm: configure the timeout alarm according to the running time of the task. If the task runs beyond the specified time, the alarm will be sent to the person/Owner/duty table;

-

Mossad Gantt Chart Services

For the operation of the business, Mossad will provide a one-day critical path, that is, the slowest task link diagram to complete the business; because there may be thousands of tasks upstream of each business, this critical path is optimized for the business link is very important;

04 Quality measurement

There are many solutions to ensure the data quality of the data warehouse. To evaluate the pros and cons of these solutions, a set of metrics is required:

-

Data quality improvement rate

General data warehouse tasks are carried out at night, and once a problem occurs, the on-duty staff needs to wake up at night to deal with it;

Wake-up rate: Use the number of night wake-ups per month as an indicator to measure the perfection of data quality construction;

-

Data Quality Incident

Data quality events: record every data quality problem;

For each data quality issue, a data quality event is logged:

Function: It is not only used to measure the quality of the data itself, but also used to measure the quality of the upstream and downstream of the data link. It is an important measure of data quality;

-

Processes used to follow up on data quality issues;

-

Used to inductively analyze the reasons for data quality;

-

To check for gaps and make up for omissions based on data quality reasons, it is necessary to find out the reasons for data problems and provide follow-up prevention plans for similar problems;

-

Data Quality Failure System

Serious data quality incidents will be escalated to faults;

Fault: Refers to the serious impact caused by the problem, which has already brought asset losses or public relations risks to the company;

Background: From data collection to final consumption, the entire link has to go through dozens of systems. Any problem in any link will affect the output of data. Therefore, a mechanism is needed to tie all teams together with the same goal. Forming a joint force, the failure system came into being under this background;

In the fault system, once a fault occurs, it will pass the fault system and require the relevant team to follow up and solve the problem at the first time to eliminate the impact;

-

failure definition

First, identify important business data and register it in the system, and fill in relevant business conditions, such as the person in charge of the technology, the person in charge of the business, the data application scenario, the impact of delay or error, whether there will be asset loss, etc. After completion , the task of this part of data will be hung on the platform baseline, and once there is a delay or error, a trouble ticket will be automatically generated to form a fault;

-

failure level

After a failure occurs, the failure level will be judged according to certain standards, such as the duration of the failure, the amount of customer complaints, financial losses, etc., and the failure will be graded according to P1~P4. To judge the operation and maintenance effect of this year;

-

Troubleshooting

After a fault occurs, it is necessary to quickly identify the cause of the fault and resolve it quickly to eliminate the impact;

In the process of handling the fault, we will try our best to notify the relevant parties of the processing progress of the fault to minimize the impact on the business;

-

Fault Review

Fault Review: analyze the cause of the fault, review the processing process, and form a follow-up solution Action, and all will be recorded in detail in the form of text, and the responsibility for the fault will be attributed, generally to the person;

Note: The determination of fault responsibility is not to punish individuals, but to form a solution through replaying the fault to avoid the recurrence of the problem;