Edge Computing | Liquid Cooling Server | GPT-4

Deep Learning | AI Server | ChatGPT

At a launch event last week, OpenAI announced the launch of the GPT-4 model. Compared with previous versions, the biggest improvement of GPT-4 is its multimodal ability-it can not only read text, but also recognize images. It is worth noting that although it was previously reported that GPT-4 has 100 trillion parameters, OpenAI did not confirm this number. Compared with it, OpenAI puts more emphasis on the multimodal ability of GPT-4 and its performance in various tests.

According to OpenAI, GPT-4 outperforms the vast majority of humans on a variety of benchmarks. For example, GPT-4 scored higher than 88% of test takers on the Uniform Bar Exam, the LSAT, the SAT math section, and the Evidential Reading and Writing section.

Additionally, OpenAI is working with several companies to incorporate GPT-4 into their products, including Duolingo, Stripe, and Khan Academy, among others. At the same time, the GPT-4 model will also be provided to subscribers of the paid version of ChatGPT Plus in the form of API. Developers can use this API to create various applications. Microsoft also said in the announcement that the new Bing search engine will run on the GPT-4 system.

At the press conference, the demonstrator drew a very rough sketch with a draft pad, paper and pen, and then took a photo to tell GPT-4 that he needed to make a website like this, and needed to generate website code. Impressively, GPT-4 only took 10 seconds to generate a complete website code, realizing the effect of generating a website with one click.

The high-performance operation of ChatGPT is inseparable from a stable server-side architecture. Establishing a sustainable server-side architecture can not only guarantee the stability and reliability of ChatGPT, but also help reduce energy consumption, reduce costs and support the sustainable development strategy of enterprises. Therefore, this article will explore how to build a sustainable ChatGPT high-performance server-side architecture.

Beyond the "shackles of the past" ChatGPT breakthrough or AI extension?

ChatGPT is a natural language processing technology that can train models based on existing data to generate more realistic and natural conversations. The emergence of this technology is naturally a continuation of the previous AI development process, but it has also achieved breakthroughs in some aspects.

1. ChatGPT has more powerful dialogue generation capabilities. In the early AI technology, dialogue generation was based on rules and patterns, so it often faced limitations and constraints. However, the GPT series models can achieve more realistic and natural dialogue generation that flexibly adapts to dialogue scenarios through a large amount of language training data.

2. The training method of ChatGPT has also changed. In the past, humans were usually required to participate in the data labeling process so that machines could understand the meaning of human language. However, the GPT series can learn the laws and structures of human language expression from huge language data through unsupervised training, and then achieve more realistic and vivid dialogue generation.

3. The GPT series models also have advantages in handling multilingual and multi-scenario dialogues. Traditional AI technology mainly adapts to a single scene and lacks language diversity. However, because of its ability to train multiple languages, the GPT series models can handle dialogue between different languages, and can also meet the dialogue needs of different scenarios.

ChatGPT development and prospect in two years

The GPT-4 jointly developed by OpneAI has been developed for more than three years, and it will be released soon. It is very likely that its efficiency will be greatly improved, but it is uncertain what new code capabilities will emerge. What is still certain is that GPT-4 will solve some important problems like GPT-3.5, such as optimizing the ratio of data parameters, improving the efficiency of information processing and pattern discovery, and improving the quality of information input. It is very likely that the efficiency will be improved a lot, and its reasoning cost will be greatly reduced (possibly reduced to a hundred times). What is uncertain is how large a model GPT-4 will be (it may be larger than the rumored large model), and whether it will have multimodal capabilities (this has not been determined before, and the future is difficult to predict). Even if the model is multimodal, it is currently limited in ChatGPT's colorful imagination of the world, since multimodal information is difficult to normalize into textual patterns.

1. Many problems faced by ChatGPT at this stage have relatively simple solutions in engineering. For example:

1. Solving the "hallucination" problem (ChatGPT tends to produce inaccurate output) can be corrected by optimizing accuracy and introducing search data, and humans can participate in the judgment process to increase accuracy. In addition, when applying ChatGPT, auxiliary judgment can be made in the scene where humans determine whether it is good or bad.

2. For the limited memory of ChatGPT, you can use the open interface provided by OpenAI to solve it. In particular, the existing solution is very magical, just before prompting ChatGPT to answer, explain to it that the content provided is only part of the whole information, and ask it to answer after listening.

3. ChatGPT's self-censorship ability is not only based on rules, but also based on understanding. This understanding-based self-censorship ability is actually more adjustable. OpenAI also proposes the vision that ChatGPT can adjust the speech scale according to the demand under the premise of respecting the basic rules.

2. The cost of ChatGPT will plummet, especially the cost of inference will be more than two orders of magnitude smaller

The cost of ChatGPT will plummet, especially the cost of inference will be more than two orders of magnitude smaller. Sam once said in public that the inference cost of ChatGPT is a few cents per message. In addition, "Key Takes from ChatGPT and Generative AI.pdf" according to the detailed research of Jefferies Research, the high probability of ChatGPT's inference is based on idle x86 CPU instead of GPU.

Based on the understanding of inference and the optimization space of large language models, we believe that the cost of inference will plummet, which is very possible. The reduction in cost means the expansion of application scope and data collection ability. Even if ChatGPT's users reach the level of one billion DAU (the current estimate of 100 million DAU is not accurate), it will be free. At most there is a limit on the number of uses. New Bing once limited the number of searches to 60, but that has now been lifted as well. These conversations in actual use will undoubtedly further strengthen the dominant position of ChatGPT.

3. For the "ability" sub-model of ChatGPT, it may need to be retrained, but the "knowledge" sub-model only needs to input new knowledge through instruction prompting, without modifying the existing pre-trained model.

For many subtasks, as long as ChatGPT has the ability to understand and the amount of knowledge, it can continuously adjust the performance of ChatGPT through dialogue, guidance and education, so that it can exert new capabilities in each subtask. In contrast, the past AI technology needs to retrain the model when faced with new tasks, instead of just inputting new knowledge like ChatGPT.

If you use Iron Man 3 as a metaphor, ChatGPT is like a general-purpose armor that can do most of the work. Through the methods of "education" and "guidance", ChatGPT can complete various tasks in multiple fields, such as giving medical advice, legal reference, writing code framework, formulating marketing plan, providing psychological consultation, serving as an interviewer, etc.

What needs to be emphasized is the importance of prompting. Microsoft's New Bing did not make too many changes to ChatGPT, but guided ChatGPT to conduct a reasonable search through prompting. On the basis of prompting, if you want to focus on certain aspects, such as sacrificing dialogue continuity to improve information accuracy, you need to retrain the model and make adjustments. This may require integrating other ability modules, such as search and interfaces to other models, and incorporating some tools, like those specialization armors. In short, by continuously refining ChatGPT's capabilities and tools, you can expand its scope of application and unlock more possibilities.

4. With the passage of time, we predict that the prompting capability of the self-service ChatGPT will be greatly improved, and more functions will be gradually opened.

This is not only a commercially obvious advantage, but also allows users to gradually tune their own ChatGPT, allowing it to adapt to their preferences and learn unique knowledge (rather than limited to the stimulation of skills). In addition, although the model of ChatGPT is still closed source, the competitiveness on different application layers can still be developed and improved, which solves the doubt that only the UI design can be provided to OpenAI. Imagine a scenario where your ChatGPT is able to record all your conversations with TA and gradually learn from your feedback. If you are a good marketing manager, after a while, your ChatGPT will also acquire better marketing skills than others.

5. GPT-4 is expected to greatly enhance the capabilities of ChatGPT and reach the level of "excellent employees" in many fields.

The recent paradigm revolution has manifested a huge difference between New Bing and ChatGPT. We have every reason to believe that GPT-4 will almost certainly deliver huge improvements in the following areas:

1. Large model, big data, more optimized parameters and data ratio. The optimization direction of these factors is obvious, because the more parameters, the more data, but only the appropriate ratio can make the model fully absorb data knowledge.

2. More targeted training data sets. OpenAI's ability to "create high-quality big data" is almost unique in the world, and after years of exploration after GPT-3, they have been able to better adjust what data is more useful for enhancing what model capabilities (such as reading more codes and adjust the scale for multiple languages, etc.).

3. Possible "capability module integration". New Bing is based on ChatGPT and extended search capabilities. Is there a way to directly incorporate search capabilities into pretrained large models? Similarly, we can consider how to efficiently integrate other capabilities into ChatGPT based on pre-trained large models, and combine more scenarios for training. Therefore, it is predicted that in the next two years, ChatGPT based on GPT-4 will be able to reach the level of level 9 employees in most scenarios, with stronger induction and "understanding" capabilities.

Research on ChatGPT and GPT ability barriers

The barriers of ChatGPT come from the following aspects:

1. GPT-3 is closed source. OpenAI maintains a very cautious attitude, and it is impossible to open source ChatGPT. Therefore, the path of domestic machine learning relying on "domestic implementation of open source models" seems unrealistic on ChatGPT.

2. The increase of model parameters requires strong engineering capabilities. At the same time, it is also necessary for large models to effectively learn knowledge from big data. The issues of how to tune the model to produce the output required by humans have been emphasized in the OpenAI blog. Engineers with "principle" thinking habits are needed to participate in breaking through these engineering bottlenecks. It is reported that OpenAI's ultra-high talent density has successfully broken through many engineering bottlenecks. Therefore, it is necessary to carry out the next step of engineering accumulation on the basis of the previous step of engineering breakthrough.

3. Pay attention to practicality in a specific business environment. For example, although the recommendation algorithm model of ByteDance is very large, it is also very difficult. However, continuous optimization based on existing models cannot form a paradigm breakthrough. In a real business environment, if positive feedback cannot be provided for the business, the development of the model will be greatly hindered.

4. Leadership's technical judgment is a scarce resource. The successful combination of New Bing and ChatGPT is regarded as a rare miracle, far surpassing others on the market. This aspect is hard to come by, and it is not a replicable model.

5. The data flywheel has been formed. ChatGPT is one of the phenomenon-level successful C-end products. Combined with Microsoft's resources and channel bonuses, it got stuck in a very good position as soon as it came up. Therefore, the usage data of ChatGPT can continuously supplement the model itself. ChatGPT's blog also highlights their unique mechanism that closes the loop on data usage, understanding, and production.

ChatGPT is a new tool for the future AI era

The DAU of ChatGPT has grown phenomenally, and user feedback has also shown that it is extremely useful. While ChatGPT has imaginatively high entertainment value, its ability to significantly increase productivity is even more prominent. Dialogue and reading are actually entertainment with a high threshold. In most cases, richness and depth are not the main determinants of entertainment value. Therefore, we recommend focusing more on improving productivity when using ChatGPT.

Also, it needs to be remembered that ChatGPT is a disruptive product, not an incremental improvement. For early adopters of technology, it may be impossible to leave ChatGPT, but for the general public, opening a search engine to search is not even a common habit, and the degree of using clear and reasonable prompts to chat with ChatGPT is even lower. Therefore, in the next few years, ChatGPT will replace more various SaaS, cloud, and efficiency tools, such as search engines.

In practical scenarios, we should follow two principles: prescribing the right medicine and following the good. ChatGPT is not equivalent to search engines and programs, we should let it play its strengths instead of trying to replace other more efficient tools or services. In addition, considering the obvious hallucination problem of ChatGPT at present, we should remain vigilant and not blindly believe the conclusions of ChatGPT on all occasions, but use ChatGPT when human judgment is required, and let people examine the authenticity of its conclusions.

ChatGPT and humans essentially explore the difference between the two

Since the development of brain science and neuroscience is not yet mature enough, we can only explore the essential differences and similarities between humans and ChatGPT from a philosophical perspective.

1. From the perspective of judgment, ChatGPT can only absorb digital signals from virtual numbers, and cannot interact with the real world. Only through practice can the foundation of judgment be established.

Second, if speculation is only based on digital signals, ChatGPT is likely to draw wrong conclusions. For example, Newton's discovery of universal gravitation was based on seeing an apple fall and predicting the movement of stars. At that time, many people believed that the sun revolved around the earth. If there was a ChatGPT, it was likely to draw wrong conclusions. Therefore, in daily life, it is also very meaningful to recognize thinking ability, such as the moment of "inspiration and flash of light".

3. If you just generalize existing knowledge, ChatGPT is likely to do better than humans. But creating new knowledge that does not exist on the Internet is what ChatGPT cannot do.

4. From the perspective of understanding people, human beings can understand human nature without going through research, questionnaires and Internet materials. At the same time, through real-world practice, humans can bring incremental understanding of human nature. This is beyond the reach of ChatGPT. This implies that when we truly understand human beings, we need to practice in the real world instead of repeating routines.

ChatGPT explores the demand for computing power

The AI model's demand for computing power is mainly reflected in two levels: training and reasoning. The current mainstream artificial intelligence algorithms can usually be divided into two stages: "training" and "reasoning". According to CCID data, in 2022 China's digital economy will explode with strong growth momentum, an increase of 20.7% over the previous year, an increase of 2.9 percentage points compared with 2021, far exceeding the world average level, and the scale of the digital economy will reach 45.5 trillion yuan, ranking No. Germany is more than twice as big as Germany, and its digital economy development level ranks second in the world. In recent years, China has also been actively promoting the accelerated improvement of the innovation capabilities of the digital industry, accelerating the acceleration of industrial digital transformation, and gradually narrowing the gap with the United States in terms of digital economic competitiveness.

-

training phase

The process of tuning and optimizing an AI model to achieve a desired level of accuracy. In order to make the model more accurate, the training phase usually needs to process a large amount of data sets, and iterative calculations require a lot of computing resources. The reasoning phase is to apply the established artificial intelligence model to reason or predict the output of the input data after the training phase is completed.

-

reasoning stage

Comparing with the training stage, the requirement for computing power is not so high after all, but since the trained artificial intelligence model needs to be used for inference tasks many times, the total amount of calculation for inference operations is still considerable.

The computing power demand scenario of ChatGPT can be further divided into three stages according to the actual application: pre-training, Finetune and daily operation. In the pre-training stage , a large amount of unlabeled text data is used to train the basic language ability of the model to obtain basic large models, such as GPT-1, GPT-2 and GPT-3. In the Finetune stage , on the basis of the basic large model, two or more trainings such as supervised learning, reinforcement learning, and migration learning are performed to optimize and adjust the model parameters. In the daily operation stage , based on user input information, model parameters are loaded for reasoning and calculation, and the feedback output of the final result is realized.

ChatGPT is a language model whose architecture is based on Transformer. The Transformer architecture consists of encoding and decoding modules, where GPT only uses the decoding module. In addition, Transformer also contains three layers: feed-forward neural network, self-attention mechanism layer and self-attention mask layer, which all interact to achieve the high efficiency of the model.

The self-attention mechanism is one of the most important parts of Transformer. Its main function is to calculate the weight of a word for all words (ie Attention). In this way, the model can better understand the internal relationship of the text and achieve efficient learning of the relationship between inputs. The self-attention mechanism layer also allows the model to perform larger-scale parallel computing, which greatly improves the computational efficiency.

Feed-forward neural network layers provide efficient data information storage and retrieval. At this level, the model can effectively handle large-scale datasets and achieve efficient computation.

The masking layer is to filter the words that do not appear on the right side in the self-attention mechanism. This masking allows the model to only pay attention to what has been shown in the text, thus ensuring the accuracy of the calculation.

Compared with previous deep learning frameworks, the Transformer architecture has obvious advantages. The parallel computing capability of the Transformer architecture is stronger, which can greatly improve computing efficiency. This allows GPT to train larger and more complex language models, and it can better solve language processing problems.

According to previous data, it is estimated that daily operations will require about 7034.7 PFlop/s-day of computing power per month. User interaction also requires computing power support, and the cost of each interaction is about 0.01 US dollars. According to the ChatGPT official website in the past month (January 17 to February 17, 2023), the total number of visits reached 889 million. Therefore, in January 2023, the operating computing cost paid by OpenAI for ChatGPT is about 8.9 million US dollars. In addition, Lambda said that the cost of computing power required to train a GPT-3 model with 174.6 billion parameters exceeds $4.6 million; while OpenAI said that the computing power required to train a GPT-3 model with 174.6 billion parameters is about 3640 PFlop/ s-day. We assume that the unit cost of computing power remains unchanged, so the computing power required for ChatGPT's single-month operation is about 7034.7PFlop/s-day.

ChatGPT is a model that requires constant tuning of the Finetune model to ensure it is in the best application state. This tuning process requires developers to adjust model parameters to ensure that the output content is not harmful and distorted, and to perform large-scale or small-scale iterative training on the model based on user feedback and PPO strategies. The computing power required in this process will bring costs to OpenAI, and the specific computing power requirements and cost amount depend on the iteration speed of the model.

It is estimated that the monthly Finetune computing power demand of ChatGPT is at least 1350.4PFlop/s-day. According to IDC's forecast, in 2022, the proportion of reasoning and training will be 58.5% and 41.5% of China's artificial intelligence server load. If it is assumed that ChatGPT’s computing power requirements for inference and training are consistent, and it is known that a single month’s operation requires a computing power of 7034.7 PFlop/s-day, and a pre-training requires a computing power of 3640 PFlop/s-day, then we can go further It is assumed that pre-training is performed at most once a month. From this, we calculated that the monthly Finetune computing cost of ChatGPT is at least 1350.4PFlop/s-day.

From 117 million in GPT-1 to 1.5 billion in GPT-2, the parameter gap of more than 10 times has brought a leap in performance. This seems to mean that with the increase in capacity and parameter volume, there is still greater potential for model performance-so, in 2020, the parameter volume of GPT-3 has doubled by 100 times: 175 billion, and its pre-training data volume is also as high as 45TB (GPT-2 is 40GB, GPT-1 about 5GB). Facts have proved that massive parameters do give GPT-3 a more powerful performance, and it performs very well in downstream tasks. Even for complex NLP tasks, GPT-3 performs amazingly: it can imitate human writing, write SQL query statements, React or JavaScript code, etc. Looking back at the development of GPT-1, GPT-2, and GPT-3, many people have high hopes for GPT-4, and there are even rumors that the number of parameters of GPT-4 will be as high as 100 trillion.

Given that under the human feedback mechanism, the model needs to continuously obtain human guidance to achieve parameter tuning, so the model adjustment may be performed many times. The computational cost required for this will be higher.

What are the types of ChatGPT servers?

1. China's server development status

Countries are speeding up the development of the digital economy, the digitization process of traditional industries is accelerating, and the demand for digital intelligence of enterprises is strong. Especially the rapid development of emerging technology fields such as 5G, big data, and artificial intelligence continues to empower the server industry.

1. The demand for high computing power drives the server industry to usher in new opportunities for development

As a core productivity, computing power is applied in Internet, government affairs, finance and other fields. With the emergence of new concepts such as Metaverse and Web3.0, more complex computing scenarios generate high computing power requirements. Promote the upgrade of server products to higher computing performance.

2. The construction of large data centers accelerates the growth of the server market

The construction of large-scale data centers is the main driving force for the growth of the global server market, and the procurement of data center servers in most regions of the world, such as North America, Asia, Asia and Western Europe, continues to grow.

2. The server required by ChatGPT: AI training server + AI reasoning server

Edge computing requires a large number of machines to handle high-load requests, and the traditional CS mode can no longer meet this demand. The current Internet architecture is changing to the CES model with CDN service as the core, but the CES model has limitations in dealing with the needs of unstructured data storage and processing on the edge. Therefore, Edge is introduced to solve the problem of being unable to handle business. In the AI training scenario, due to changes in the amount of calculations and data types, CES cannot meet the needs. Therefore, the computing architecture is returning to CS and evolving towards high-efficiency parallel computing.

As the core of hardware, servers face different computing scenarios, and the change of computing architecture is the key to the evolution of server technology. With the emergence of computing architectures such as cloud computing, edge computing, and AI training, server requirements are constantly changing. A single server pays more attention to individual performance, while a cloud data center server pays more attention to overall performance. Edge computing has higher requirements for real-time data interaction and requires more server facilities. AI servers are mainly used for artificial intelligence training, using vector/tensor data types, and improving efficiency through massively parallel computing.

Under the same technical route, the server continues to iterate for data processing requirements. According to the review of the development history of mainstream servers, with the surge in data volume and the complexity of data scenarios, the driving forces for the development of different types of servers are also different. Specifically:

The development of traditional general-purpose servers is relatively slow, mainly through the improvement of hardware indicators such as processor clock frequency, instruction set parallelism, and number of cores to optimize its performance. In contrast, cloud computing servers have matured rapidly, a process that began in the 1980s and then accelerated with the introduction of products such as VMware Workstation, Amazon AWS, and the OpenStack open source project. At present, cloud computing has become popular all over the world, and many companies use popular cloud service providers (such as AWS, Azure, Google Cloud, etc.) to store and process data. The concept of edge computing server was incubated in 2015. In recent years, edge computing platforms such as AWS Greengrass and Google GMEC have emerged. As more and more devices, such as wearables and smart home devices, are connected to the internet, there is a growing need for edge computing technology. Finally, the AI server is tailor-made for artificial intelligence and machine learning work, and its hardware architecture is more suitable for the demand for training computing power. As the application of artificial intelligence becomes more and more widespread, the demand for AI servers is also increasing.

3. Cloud Computing Server: Business Model Change Under Large-Scale Data Processing Demand

The emergence of cloud computing servers is to meet the demand for high-performance computing brought about by the surge in data volume. Traditional general-purpose servers improve performance by improving hardware indicators, but as the CPU technology and the number of single CPU cores approach the limit, they cannot meet the performance requirements of the surge in data volume. In contrast, cloud computing servers use virtualization technology to pool computing and storage resources, virtualize and centralize the originally physically isolated single computing resources, and use cluster processing to achieve what a single server needs. Difficult high-performance computing. In addition, the computing power of cloud computing servers can be expanded by increasing the number of virtualized servers, breaking through the hardware limitation of a single server, and responding to the performance requirements brought about by the surge in data volume.

Cloud computing servers actually save part of the hardware cost and lower the threshold for purchasing computing power. In the past, the cost of large-scale data processing was extremely high, mainly because the purchase and operation and maintenance costs of general-purpose servers remained high. Traditional servers usually include a complete set of equipment such as processor modules, storage modules, network modules, power supplies, and fans. The cloud computing server architecture is streamlined, eliminating repeated modules and improving utilization. In addition, the cloud computing server virtualizes the storage module and removes non-essential hardware on the motherboard to reduce the overall computing cost. In addition, the traffic billing model also helps many manufacturers to afford computing power expenses, lowering the threshold for purchasing computing power.

4. Edge server: Guaranteed low latency under high data density and bandwidth constraints

Edge computing is a computing model that introduces an edge layer based on cloud computing. It sits at the edge of the network close to the source of things or data, and assists applications by providing resources such as computing, storage, and networking. Edge computing is based on a new architecture that introduces an edge layer so that cloud services can be extended to the edge of the network. In this architecture, the terminal layer is composed of IoT devices, which are located closest to the user, responsible for collecting raw data and uploading it to the upper layer for calculation; the edge layer is composed of routers, gateways, edge servers and other devices, these devices Because it is close to users, it can run delay-sensitive applications to meet users' requirements for low latency; the cloud layer is composed of high-performance servers and other devices that can handle complex computing tasks.

Compared with cloud computing, edge computing has the advantages of real-time, low cost and security. It migrates part or all of the computing tasks from the cloud computing center to the edge of the network closer to the user for processing, thereby improving the performance of data transmission and real-time processing. At the same time, edge computing can also avoid the cost problem caused by long-distance transmission of data, and reduce the computing load of the cloud computing center. In addition, edge computing processes most of the data in local devices and edge devices, reducing the amount of data uploaded to the cloud and reducing the risk of data leakage, so it has higher security.

5. AI server: more suitable for AI training scenarios such as deep learning

In the field of modern AI, due to large-scale computing requirements, ordinary CPU servers can no longer meet the needs. Compared with CPU, GPU (Graphics Processing Unit) has an architecture design that is more suitable for large-scale parallel computing, so AI servers use GPU architecture to improve computing performance.

Different from general servers, AI servers are heterogeneous servers. It means that it can use different combinations to improve computing performance, such as using CPUGPU, CPUTPU, CPU and other accelerator cards, etc., but the GPU provides computing power as the main method.

Taking the ChatGPT model as an example, it uses parallel computing. Compared with the RNN model, it can provide context for any character in the input sequence, which not only has higher accuracy, but also can process all inputs at once instead of only processing one word at a time.

From the calculation method of the GPU, the GPU architecture uses a large number of computing units and an ultra-long pipeline, so compared with the CPU, it can perform parallel computing with high throughput. This kind of computing power is especially suitable for large-scale AI parallel computing.

Deep learning mainly performs matrix and vector calculations, and the AI server has higher processing efficiency. From the perspective of the ChatGPT model structure, based on the Transformer architecture, the ChatGPT model uses the attention mechanism to assign text word weights and output numerical results to the feedforward neural network. This process requires a large number of vector and tensor operations. AI servers often integrate multiple AI GPUs, and AI GPUs usually support multiple matrix operations, such as convolution, pooling, and activation functions, to accelerate the operation of deep learning algorithms. Therefore, in artificial intelligence scenarios, AI servers are often more efficient than GPU servers and have certain application advantages.

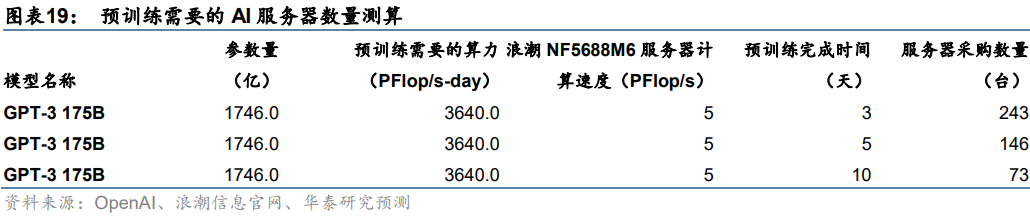

6. Chips required by ChatGPT: CPU+GPU, FPGA, ASIC

GPT model training requires large computing power support, which may bring about the need for AI server construction. We believe that as domestic manufacturers continue to deploy ChatGPT-like products, GPT large-scale model pre-training, tuning, and daily operations may bring a large demand for computing power, which in turn will drive the domestic AI server market to increase in volume. Taking the pre-training process of the GPT-3 175B model as an example, according to OpenAI, the computing power required for a GPT-3 175B model pre-training is about 3640 PFlop/s-day. We assume that the current AI server NF5688M6 (PFlop/s) with the strongest computing power of Inspur Information is used for calculation. Under the assumption that the pre-training period is 3, 5, and 10 days respectively, the number of AI servers that a single manufacturer needs to purchase is 243, 146, 73 units.

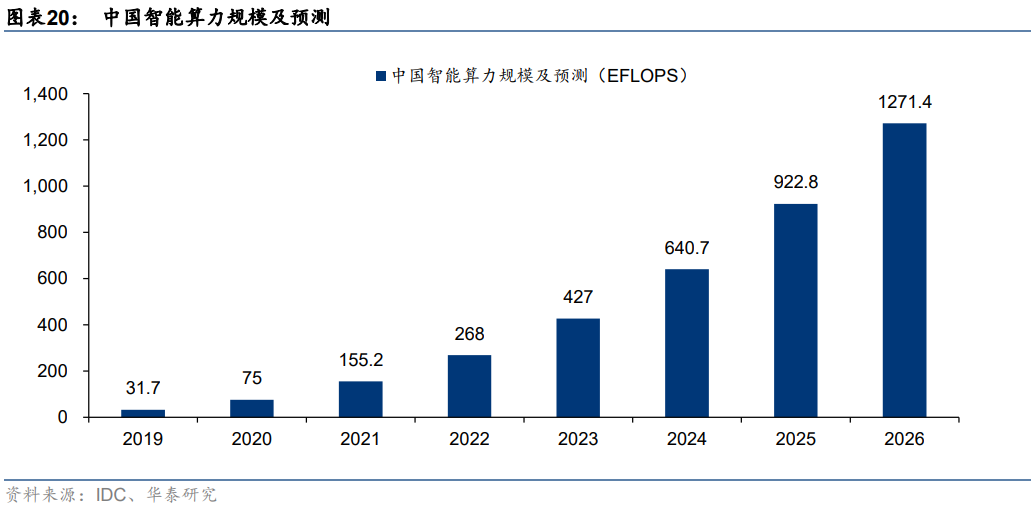

The demand for AI large-scale model training is hot, and the scale growth of intelligent computing power is expected to drive the increase of AI servers. According to IDC data, in terms of half-precision (FP16) computing power, the scale of China's smart computing power in 2021 will be about 155.2 EFLOPS. With the increasing complexity of AI models, the rapid growth of computing data, and the continuous deepening of artificial intelligence application scenarios, the scale of domestic intelligent computing power is expected to achieve rapid growth in the future. IDC predicts that the scale of domestic intelligent computing power will increase by 72.7% year-on-year to 268.0 EFLOPS in 2022. It is estimated that the scale of intelligent computing power will reach 1271.4 EFLOPS in 2026, and the CAGR of computing power scale will reach 69.2% from 2022 to 2026. We believe that AI servers, as the main infrastructure for carrying intelligent computing power, are expected to benefit from the increase in downstream demand.

Summarize

ChatGPT is a high-performance file transfer protocol that requires a sustainable server-side architecture to support its continuous development. Here's a simple guide:

1. Understand customer needs

Before building any server-side architecture, one needs to know what the client needs. Questions to consider include:

1. Number of users: How many users are expected to use the service?

2. Data volume: How much data will each user store? How much data is expected to be processed by the service?

3. Device types and platforms: What devices and platforms will users use to access the service?

2. Choose the right infrastructure

Choosing the right infrastructure is critical to building a sustainable server-side architecture. Some of these common choices include:

1. Physical Server: This is the classic way of running a server locally. This requires purchasing server hardware and management infrastructure.

2. Virtual Private Server (VPS) : A VPS is a virtual server running on a shared physical server. Most cloud service providers offer VPS.

3. Cloud computing: Cloud computing allows you to gradually expand and shrink the infrastructure according to actual usage. Some of these providers include Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP).

3. Design a scalable architecture

When designing your server-side architecture, you need to consider how it will scale to handle more traffic and users. Some of these key considerations include:

1. Horizontal scaling: This is the process of adding more servers to the system to handle more traffic and users.

2. Vertical scaling: This is the process of upgrading the same server to handle more traffic and users.

3. Load balancing: This is the process of distributing requests to multiple servers to reduce load.

4. Caching: This is the process of storing the results of requests in memory to improve response speed.

4. Ensure safety and reliability

Security and reliability are paramount when building any server-side architecture. This means you need to consider the following:

1. Data backup and recovery: You need to back up data regularly to prevent data loss and to be able to quickly restore data when necessary.

2. Security: You need to ensure that your server-side architecture is secure, including using secure transmission protocols, encrypting data, etc.

3. Monitoring and alerting: You need to set up a monitoring and alerting system so that you can be notified in time when there is a problem with the server.

A sustainable ChatGPT high-performance server-side architecture needs to consider multiple factors, including user needs, infrastructure selection, scalability design, and security and reliability guarantees. By comprehensively evaluating these elements and taking corresponding measures.