Over the weekend I finished reading "Effective Python: 59 Specific Ways to Write Better Python". I thought it was very good and full of practical guidance. Below I record the 59 recommendations as simply as I can, adding explanations and examples to most of the later ones. This is a long article, so get your sunflower seeds and beer ready!

Pythonic Thinking

Item 1: Know which version of Python you're using

(1) Two versions of Python are in active use: Python 2 and Python 3

(2) There are several popular Python runtimes: CPython, Jython, IronPython, PyPy, and others

(3) When developing a new project, prefer Python 3

Item 2: Follow the PEP 8 style guide

PEP 8 is the style guide for how to format Python code; see: http://www.python.org/dev/peps/pep-0008

(1) Always follow the PEP 8 style guide when writing Python code

(2) Sharing a common code style with the majority of Python developers makes it easier to collaborate on a project

(3) Writing code in a consistent style makes later modifications easier

Item 3: Know the differences between bytes, str, and unicode

(1) Python 2 provides str and unicode; in Python 3 these become bytes and str. bytes (str in Python 2) holds raw 8-bit values, while str (unicode in Python 2) holds Unicode characters. Use the encode and decode methods to convert between the two

(2) When reading binary data from a file or writing binary data to it, always open the file in a binary mode such as 'rb' or 'wb'
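The conversion described in (1) can be sketched with two small helpers. The names `to_str` and `to_bytes` are illustrative only and not part of any library, and UTF-8 is assumed as the encoding:

```python
def to_str(value):
    """Return a str, decoding UTF-8 bytes if needed (hypothetical helper)."""
    if isinstance(value, bytes):
        return value.decode('utf-8')
    return value


def to_bytes(value):
    """Return bytes, encoding a str as UTF-8 if needed (hypothetical helper)."""
    if isinstance(value, str):
        return value.encode('utf-8')
    return value


print(repr(to_str(b'caf\xc3\xa9')))
print(repr(to_bytes('café')))
```

Converting at the boundaries of the program this way lets the core logic work with str only.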

Item 4: Write helper functions instead of complex expressions

(1) Python's syntax makes it all too easy to write single-line expressions that are overly complicated and difficult to read

(2) Move complex expressions into helper functions, especially if you need to reuse the same logic
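As a minimal sketch of this advice, the dense one-liner in the comment below can be moved into a helper. The helper name `get_first_int` and the sample query string are assumptions made for illustration:

```python
from urllib.parse import parse_qs


def get_first_int(values, key, default=0):
    """Return the first value stored under key as an int, or default."""
    found = values.get(key, [''])
    if found[0]:
        return int(found[0])
    return default


my_values = parse_qs('red=5&blue=0&green=', keep_blank_values=True)

# Hard to read: red = int(my_values.get('red', [''])[0] or 0)
red = get_first_int(my_values, 'red')
green = get_first_int(my_values, 'green')      # blank value falls back to default
opacity = get_first_int(my_values, 'opacity')  # missing key falls back to default
print(red, green, opacity)
```

The helper costs a few extra lines but gives the logic a name and makes every call site obvious.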

Item 5: Know how to slice sequences

(1) Avoid being verbose: leave out the index when the start is 0 or the end is the length of the sequence, for example a[:]

(2) Slicing is forgiving of start or end indexes that are out of bounds, which makes it easy to express slices with a fixed range from the front or back of a sequence, such as a[:20] or a[-20:]

(3) Assigning to a list slice replaces that range in the original list with the new values, even if their lengths are different
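The three points above can be illustrated with a short sketch; the list contents are arbitrary sample data:

```python
a = ['a', 'b', 'c', 'd', 'e', 'f', 'g', 'h']

first_three = a[:3]     # same as a[0:3]
last_three = a[-3:]     # same as a[5:len(a)]
clamped = a[:20]        # an out-of-range bound is silently clamped

b = a[:]                # a full slice makes a shallow copy
b[2:7] = [99, 22, 14]   # five elements replaced by three; the list shrinks

print(first_three, last_three)
print(b)
```

Note that indexing a single element out of range, such as a[20], still raises IndexError; only slices are forgiving.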

Item 6: Avoid using start, end, and stride in a single slice

(1) The concern here is readability: the author suggests splitting such an operation into two assignments, one that slices the range and another that applies the stride

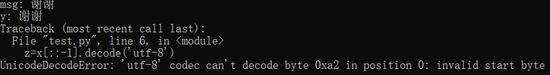

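(2) Note: [::-1] can fail in unexpected ways. In the following example, reversing UTF-8 encoded bytes breaks the multi-byte characters, so the final decode raises UnicodeDecodeError

    msg = '谢谢'
    print('msg:', msg)
    x = msg.encode('utf-8')
    y = x.decode('utf-8')
    print('y:', y)
    # Reversing the bytes scrambles each multi-byte character
    z = x[::-1].decode('utf-8')
    print('z:', z)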

Output:

Item 7: Use list comprehensions instead of map and filter

(1) List comprehensions are clearer than the built-in map and filter functions because they don't require extra lambda expressions

(2) Dictionaries and sets also support comprehension expressions
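A brief comparison, using made-up sample data, of the map/filter form against the comprehension form, followed by a dictionary and a set comprehension:

```python
numbers = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]

# map + filter need two lambdas and read inside out
even_squares_map = list(
    map(lambda x: x ** 2, filter(lambda x: x % 2 == 0, numbers)))

# The comprehension states the same thing in one pass
even_squares = [x ** 2 for x in numbers if x % 2 == 0]

ranks = {'ghost': 1, 'habanero': 2, 'cayenne': 3}  # hypothetical data
rank_to_name = {rank: name for name, rank in ranks.items()}
name_lengths = {len(name) for name in ranks}

print(even_squares)
print(rank_to_name, name_lengths)
```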

Item 8: Avoid more than two expressions in list comprehensions

Item 9: Consider generator expressions for large comprehensions

(1) Disadvantages of list comprehensions

A list comprehension may create a whole new list containing one item for each value in the input sequence. That is fine for small inputs, but for very large inputs it can consume a large amount of memory and crash the program. For this situation Python provides generator expressions, a generalization of list comprehensions and generators. A generator expression does not materialize the whole output sequence when it runs; instead it evaluates to an iterator that yields one item at a time.

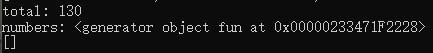

Putting list-comprehension syntax between a pair of parentheses creates a generator expression

    numbers = [1, 2, 3, 4, 5, 6, 7, 8]
    li = (i for i in numbers)
    print(li)

    <generator object <genexpr> at 0x0000022E7E372228>

(2) Generator expressions can be chained together, and the chained expressions execute quickly
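A small sketch of chaining, with arbitrary sample numbers. Each call to next pulls one item through both generators, so no intermediate list is ever built:

```python
numbers = [1, 2, 3, 4, 5, 6, 7, 8]

squares = (n ** 2 for n in numbers)        # nothing is computed yet
roots = ((s, s ** 0.5) for s in squares)   # chained onto the first generator

first = next(roots)   # advances both generators by one step
rest = list(roots)    # consumes the remaining items

print(first)
print(rest)
```

Because generators are stateful, `roots` is exhausted after this and iterating it again produces nothing.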

Item 10: Prefer enumerate over range

(1) Prefer enumerate over code that loops over a range and indexes into a sequence

(2) You can pass a second parameter to enumerate to specify the number at which the counter starts; the default is 0

    color = ['red', 'black', 'white', 'green']

    # range approach
    for i in range(len(color)):
        print(i, color[i])

    # enumerate approach
    for i, value in enumerate(color):
        print(i, value)

Item 11: Use zip to process iterators in parallel

(1) The built-in zip function can iterate over multiple iterators in parallel

(2) In Python 3, zip behaves like a generator that produces tuples one at a time during iteration; in Python 2, zip builds all the tuples up front and returns them as a complete list

(3) If the supplied iterators have different lengths, zip stops early, as soon as the shortest one is exhausted

    attr = ['name', 'age', 'sex']
    values = ['zhangsan', 18, 'man']
    people = zip(attr, values)
    for p in people:
        print(p)

Item 12: Avoid else blocks after for and while loops

(1) Python provides a feature that most other programming languages do not support: an else block can be placed immediately after a loop's body

    for i in range(3):
        print('loop %d' % (i))
    else:
        print('else block!')

This construct is easy to misread. One might assume that else runs when the loop did not finish normally, but the opposite is true: the else block runs when the loop completes without hitting a break

(2) Avoid else blocks after loops, because their behavior is neither intuitive nor clear

Item 13: Take advantage of each block in try/except/else/finally

    try:
        pass  # code to execute
    except Exception:
        pass  # handle the exception
    else:
        pass  # runs only when no exception occurred; keeps the try block small
    finally:
        pass  # always runs; release handles and clean up
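To make the role of each block concrete, here is a small runnable sketch. The function name `load_json_key` and the module-level `log` list are assumptions for illustration; `log` only records which blocks ran:

```python
import json

log = []  # records which blocks ran, for illustration only


def load_json_key(data, key):
    """Parse a JSON string and return the value stored under key."""
    try:
        result = json.loads(data)   # only the call that may fail goes here
    except ValueError as e:
        log.append('except')
        raise KeyError(key) from e
    else:
        log.append('else')
        return result[key]          # a KeyError here propagates unchanged
    finally:
        log.append('finally')       # runs on every path, even after return


value = load_json_key('{"foo": "bar"}', 'foo')
print(value, log)
```

Keeping the lookup in the else block makes it clear which exceptions come from parsing and which come from the lookup.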

Item 14: Prefer exceptions to returning None

(1) Functions that return None to indicate a special meaning are error prone, because None and values such as 0 and the empty string all evaluate to False in conditional expressions

(2) Raise an exception to indicate a special situation instead of returning None. Document the exception and expect the calling code to handle it properly
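A minimal sketch of the idea, using a hypothetical `divide` function. A result of 0.0 is falsy but valid, which is exactly the case a None return value makes easy to mishandle:

```python
def divide(a, b):
    """Return a / b, raising ValueError for invalid inputs."""
    try:
        return a / b
    except ZeroDivisionError as e:
        raise ValueError('Invalid inputs') from e


result = divide(0, 5)   # 0.0 is a valid result even though it is falsy

try:
    divide(1, 0)
except ValueError:
    outcome = 'invalid inputs'
else:
    outcome = 'ok'

print(result, outcome)
```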

Item 15: Know how closures interact with variable scope

(1) Understand what a closure is

A closure is a function that refers to variables from the scope in which it was defined

(2) When an expression references a variable, the Python interpreter searches scopes in this order:

a. The current function's scope

b. Any enclosing scopes (e.g., other functions that contain the current function)

c. The scope of the module that contains the code (also called the global scope)

d. The built-in scope (the one that contains functions such as len and str)

e. If none of these places defines a variable with the referenced name, a NameError exception is raised

(3) Assignment rules of the Python interpreter

When assigning a value to a variable, if the variable is already defined in the current scope, it simply takes on the new value. If it is not defined in the current scope, Python treats the assignment as a variable definition

(4) nonlocal

nonlocal means that when the variable is assigned, it should be looked up in the enclosing scope. Its only limitation is that it cannot reach up to the module level; this prevents it from polluting the global scope

(5) global

global indicates that assignments to the variable go directly into the module scope
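The assignment rule and nonlocal can be seen together in a short sketch. The function name `sort_priority` and the sample data are assumptions for illustration. Without the nonlocal statement, the assignment inside `helper` would define a new local variable and the outer `found` would stay False:

```python
def sort_priority(values, group):
    """Sort values in place, members of group first.

    Returns True if any member of group was seen.
    """
    found = False

    def helper(x):
        nonlocal found      # assign to the enclosing function's variable
        if x in group:
            found = True
            return (0, x)
        return (1, x)

    values.sort(key=helper)
    return found


numbers = [8, 3, 1, 2, 5, 4, 7, 6]
found = sort_priority(numbers, {2, 3, 5, 7})
print(found, numbers)
```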

Item 16: Consider generators instead of returning lists

See Item 9

Item 17: Be defensive when iterating over arguments

(1) Be careful with functions that iterate over their input arguments multiple times. If the argument is an iterator, you may see strange behavior and missing values

See the following two examples:

Example 1:

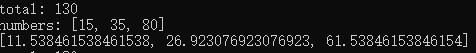

    def normalize(numbers):
        total = sum(numbers)
        print('total:', total)
        print('numbers:', numbers)
        result = []
        for value in numbers:
            percent = 100 * value / total
            result.append(percent)
        return result

    numbers = [15, 35, 80]
    print(normalize(numbers))

Output:

Example 2: Turn numbers into a generator

    def fun():
        li = [15, 35, 80]
        for i in li:
            yield i

    print(normalize(fun()))

Output:

The reason: an iterator produces its results only once. Iterating a second time over an iterator or generator that has already raised StopIteration yields no results.

(2) Python's iterator protocol defines how containers and iterators interact with the built-in iter and next functions, for loops, and related expressions

(3) To tell whether a value is an iterator or a container, call iter on it twice. If both calls return the same object, the value is an iterator, and the built-in next function can be used to advance it

    if iter(numbers) is iter(numbers):
        raise TypeError('Must supply a container')
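One way to satisfy that check while still producing values lazily is a small container class whose `__iter__` returns a fresh generator on every call. The names `normalize_defensive` and `Visits` below are hypothetical, and this is only a sketch of the approach:

```python
def normalize_defensive(numbers):
    """Return each value as a percentage of the total; rejects iterators."""
    if iter(numbers) is iter(numbers):   # an iterator returns itself from iter()
        raise TypeError('Must supply a container')
    total = sum(numbers)                 # first pass over the data
    return [100 * value / total for value in numbers]   # second pass


class Visits:
    """Hypothetical container: every iter() call yields a new generator."""

    def __init__(self, data):
        self.data = data

    def __iter__(self):
        for value in self.data:
            yield value


percentages = normalize_defensive(Visits([15, 35, 80]))
print(percentages)
```

Lists also pass the check, while a bare generator such as `fun()` from Example 2 is rejected with TypeError instead of silently producing an empty result.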

Item 18: Reduce visual noise with variable positional arguments

(1) Using *args in a def statement lets a function accept a variable number of positional arguments

(2) When calling a function, the * operator passes the items of a sequence as positional arguments

(3) Using the * operator with a generator may cause the program to run out of memory and crash, so a function should accept *args only when the number of arguments is known to be reasonably small

(4) Adding new positional parameters to a function that already accepts *args can introduce bugs that are hard to track down
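Points (1) and (2) can be sketched as follows. The function `log` here is a hypothetical example that returns the formatted message instead of printing it:

```python
def log(message, *values):
    """Format message, appending any extra positional values."""
    if not values:
        return message
    return '%s: %s' % (message, ', '.join(str(v) for v in values))


plain = log('Hi there')                 # no empty list needed at the call site
with_values = log('My numbers are', 1, 2)

favorites = [7, 33, 99]
unpacked = log('Favorite numbers', *favorites)   # * spreads the list

print(plain)
print(with_values)
print(unpacked)
```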

Item 19: Provide optional behavior with keyword arguments

(1) Function arguments can be specified by position or by keyword

(2) Calling a function with only positional arguments can leave the meaning of each argument unclear; keyword arguments make the purpose of each one clear

(3) Keyword arguments with default values make it easy to add new behavior to a function while staying compatible with existing callers

(4) Optional keyword arguments should always be passed by keyword, never by position

Item 20: Use None and docstrings to specify dynamic default arguments

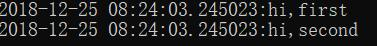

    import datetime
    import time

    def log(msg, when=datetime.datetime.now()):
        print('%s:%s' % (when, msg))

    log('hi,first')
    time.sleep(1)
    log('hi,second')

Output:

Both messages show the same timestamp, because datetime.now() is executed only once: a parameter's default value is evaluated a single time, when the function is defined, which happens when the module is loaded (many modules are loaded at program startup). We can change the function as follows:

    import datetime
    import time

    def log(msg, when=None):
        """
        arg when: datetime of when the message occurred;
                  defaults to the present time
        """
        if when is None:
            when = datetime.datetime.now()
        print('%s:%s' % (when, msg))

    log('hi,first')
    time.sleep(1)
    log('hi,second')

Output:

(1) Default argument values are evaluated only once, when the function definition is read at module load time. For dynamic values such as [] or {}, this can cause strange behavior

(2) For keyword arguments whose default should be dynamic, use None as the formal default value and describe the actual default behavior in the function's docstring

Item 21: Enforce clarity with keyword-only arguments

(1) Keyword arguments make the intent of a function call clearer

(2) For functions whose parameters are easily confused, use keyword-only arguments to force callers to supply them by keyword. This is especially useful for functions that accept multiple Boolean flags
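A minimal sketch of keyword-only arguments in Python 3: everything after the bare * in the parameter list must be passed by keyword. The function `safe_division` and its flags are illustrative:

```python
def safe_division(number, divisor, *,
                  ignore_overflow=False, ignore_zero_division=False):
    """Divide, optionally swallowing overflow or division-by-zero errors."""
    try:
        return number / divisor
    except OverflowError:
        if ignore_overflow:
            return 0
        raise
    except ZeroDivisionError:
        if ignore_zero_division:
            return float('inf')
        raise


result = safe_division(1, 0, ignore_zero_division=True)
print(result)

# safe_division(1, 0, False, True) raises TypeError:
# the two flags cannot be passed positionally.
```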

Classes and Inheritance

Item 22: Prefer helper classes over bookkeeping with dictionaries and tuples

The author's point: if we store part of the program's state in dictionaries or tuples, then as requirements keep changing, the originally well-defined structure gradually changes and becomes nested several levels deep. This bloat makes the code fragile and hard to understand. When this happens, we can refactor the nested structure into classes.

(1) Avoid dictionaries whose values are other dictionaries, and avoid long tuples

(2) If the container holds simple, immutable data, start with namedtuple, and move to a full class later if necessary

Note: namedtuple could not specify default field values when the book was written (Python 3.7 later added a defaults parameter), and it remains unwieldy for data with many optional attributes

(3) When the dictionaries that hold internal state get complicated, split the bookkeeping code into multiple helper classes
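As a small illustration of point (2), a namedtuple can replace an anonymous (score, weight) tuple so that fields are read by name. The `Grade` type and the numbers are made up for this sketch:

```python
from collections import namedtuple

# Named fields instead of positional tuple indexes
Grade = namedtuple('Grade', ('score', 'weight'))


def weighted_average(grades):
    """Return the weight-adjusted average score of a list of Grade tuples."""
    total = sum(g.score * g.weight for g in grades)
    total_weight = sum(g.weight for g in grades)
    return total / total_weight


grades = [Grade(90, 0.4), Grade(80, 0.6)]
average = weighted_average(grades)
print(average)
```

If a grade later needs behavior or mutable state, `Grade` can be replaced by a full class without changing the attribute access at the call sites.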

Item 23: Accept functions for simple interfaces instead of classes

(1) For simple interfaces between components in Python, you can usually pass a function directly instead of defining a class and passing an instance of it

(2) Functions and methods in Python are first-class: they can be referenced and used in expressions like objects of any other type

(3) The __call__ special method lets instances of a class be called like plain Python functions
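Point (3) can be sketched with `collections.defaultdict`, which accepts any callable as its default factory. The class `CountMissing` and the sample data are assumptions for illustration; the instance keeps state while still being usable wherever a function is expected:

```python
from collections import defaultdict


class CountMissing:
    """Callable that counts how often a default value was requested."""

    def __init__(self):
        self.added = 0

    def __call__(self):
        self.added += 1
        return 0


current = {'green': 12, 'blue': 3}
increments = [('red', 5), ('blue', 17), ('orange', 9)]

counter = CountMissing()
result = defaultdict(counter, current)   # the instance acts as the factory
for key, amount in increments:
    result[key] += amount

print(dict(result), counter.added)
```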

Item 24: Use @classmethod polymorphism to construct objects generically

In Python, not only objects support polymorphism; classes do too

(1) In Python, each class can have only one constructor: the __init__ method

(2) The @classmethod mechanism provides a constructor-like way to build objects of a class

(3) With class method polymorphism, we can build and connect concrete subclasses in a more generic way

The following uses a MapReduce flow that counts the number of lines in files as an example:

(1) ideas

(2) Code

    import os
    import threading

    class InputData:
        def read(self):
            raise NotImplementedError

    class PathInputData(InputData):
        def __init__(self, path):
            super().__init__()
            self.path = path

        def read(self):
            # Use a context manager so the file is closed promptly
            with open(self.path) as f:
                return f.read()

    class Worker:
        def __init__(self, input_data):
            self.input_data = input_data
            self.result = None

        def map(self):
            raise NotImplementedError

        def reduce(self, other):
            raise NotImplementedError

    class LineCountWorker(Worker):
        def map(self):
            data = self.input_data.read()
            self.result = data.count('\n')

        def reduce(self, other):
            self.result += other.result

    def generate_inputs(data_dir):
        for name in os.listdir(data_dir):
            yield PathInputData(os.path.join(data_dir, name))

    def create_workers(input_list):
        workers = []
        for input_data in input_list:
            workers.append(LineCountWorker(input_data))
        return workers

    def execute(workers):
        # Run every map step in its own thread, then wait for all of them
        threads = [threading.Thread(target=w.map) for w in workers]
        for thread in threads:
            thread.start()
        for thread in threads:
            thread.join()

        # Fold all results into the first worker
        first, rest = workers[0], workers[1:]
        for worker in rest:
            first.reduce(worker)
        return first.result

    def mapreduce(data_dir):
        inputs = generate_inputs(data_dir)
        workers = create_workers(inputs)
        return execute(workers)

    if __name__ == "__main__":
        # Raw string keeps the backslash in the Windows path literal
        print(mapreduce(r'D:\mapreduce_test'))

Wiring the components together in the code above is laborious. The following version uses @classmethod to improve it:

    import os
    import threading

    class InputData:
        def read(self):
            raise NotImplementedError

        @classmethod
        def generate_inputs(cls, data_dir):
            raise NotImplementedError

    class PathInputData(InputData):
        def __init__(self, path):
            super().__init__()
            self.path = path

        def read(self):
            # Use a context manager so the file is closed promptly
            with open(self.path) as f:
                return f.read()

        @classmethod
        def generate_inputs(cls, data_dir):
            # cls is the concrete class, so subclasses build their own type
            for name in os.listdir(data_dir):
                yield cls(os.path.join(data_dir, name))

    class Worker:
        def __init__(self, input_data):
            self.input_data = input_data
            self.result = None

        def map(self):
            raise NotImplementedError

        def reduce(self, other):
            raise NotImplementedError

        @classmethod
        def create_workers(cls, input_list):
            workers = []
            for input_data in input_list:
                workers.append(cls(input_data))
            return workers

    class LineCountWorker(Worker):
        def map(self):
            data = self.input_data.read()
            self.result = data.count('\n')

        def reduce(self, other):
            self.result += other.result

    def execute(workers):
        threads = [threading.Thread(target=w.map) for w in workers]
        for thread in threads:
            thread.start()
        for thread in threads:
            thread.join()

        first, rest = workers[0], workers[1:]
        for worker in rest:
            first.reduce(worker)
        return first.result

    def mapreduce(data_dir):
        inputs = PathInputData.generate_inputs(data_dir)
        workers = LineCountWorker.create_workers(inputs)
        return execute(workers)

    if __name__ == "__main__":
        # Raw string keeps the backslash in the Windows path literal
        print(mapreduce(r'D:\mapreduce_test'))

By implementing polymorphism through class methods, we can build and connect concrete classes in a more generic way

Item 25: Initialize parent classes with super

Explaining super in detail starting from Python 2 would take a lot of space, so this section covers only Python 3 usage and the MRO

(1) The MRO (method resolution order) is a standard procedure that orders the initialization of superclasses: depth first, left to right. It also ensures that the __init__ method of a common base class at the top of a diamond hierarchy runs only once

(2) Using super in Python 3

Python 3 lets you call super with no arguments, which has the same effect as calling super with __class__ and self

    class Base:  # minimal parent class, added so the fragment runs
        def __init__(self, value):
            self.value = value

    class A(Base):
        def __init__(self, value):
            super(__class__, self).__init__(value)

    class A(Base):
        def __init__(self, value):
            super().__init__(value)

Both forms above are recommended. Python 3 lets a method refer to the current class through the __class__ variable, which is not defined inside methods in Python 2

(3) Always use the built-in super function to initialize parent classes

Item 26: Use multiple inheritance only for mix-in utility classes

Python is an object-oriented language with built-in facilities that make multiple inheritance tractable, but it is still better to avoid multiple inheritance. If you must use it, consider writing mix-in classes. A mix-in is a small class that only defines a set of additional methods that other classes may need. It does not define its own instance attributes and does not require its __init__ constructor to be called

(1) Avoid multiple inheritance if mix-in classes can achieve the same result

(2) Implement each piece of functionality as a pluggable mix-in, then have classes inherit the mix-ins they need to customize the behavior of their instances

(3) Encapsulate simple behaviors in mix-ins, then compose multiple mix-ins to build complex behavior
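A minimal sketch of two composable mix-ins. The class names `ToDictMixin`, `JsonMixin`, and `Point` are assumptions for illustration; neither mix-in defines instance attributes or an `__init__`:

```python
import json


class ToDictMixin:
    """Mix-in: adds to_dict() based on the instance's own attributes."""

    def to_dict(self):
        return dict(vars(self))


class JsonMixin:
    """Mix-in: adds to_json(); relies on a to_dict() method being present."""

    def to_json(self):
        return json.dumps(self.to_dict(), sort_keys=True)


class Point(ToDictMixin, JsonMixin):
    def __init__(self, x, y):
        self.x = x
        self.y = y


point = Point(1, 2)
print(point.to_dict())
print(point.to_json())
```

Any other class gains the same serialization behavior simply by listing the two mix-ins among its bases.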

Item 27: Prefer public attributes over private ones

Python's syntax does not strictly enforce the privacy of private fields. Simply put, we are all consenting adults here.

Personal convention: a single leading underscore (_xxx) marks a protected attribute; a double leading underscore with no trailing double underscore (__xxx) marks a private one; __xxx__ is used for attributes and methods defined by the system

    class People:
        __name = "zhanglin"

        def __init__(self):
            self.__age = 16

    print(People.__dict__)
    p = People()
    print(p.__dict__)

You will find that the name and age attributes have been renamed to _People__name and _People__age (an underscore, the class name, then the attribute name). Only names of the form __xxx are transformed this way, which is why this is best described as pseudo-private

(1) The Python compiler does not strictly enforce privacy for private attributes

(2) Don't make attributes private by default. Plan from the beginning to let subclasses do more with the superclass's internal APIs

(3) Prefer protected attributes, and document how subclasses should use them, rather than trying to restrict subclass access with private attributes

(4) Consider private attributes only to avoid naming conflicts with subclasses that are out of your control

Item 28: Inherit from collections.abc for custom container types

The collections.abc module defines a set of abstract base classes that provide the typical methods for each container type. You can refer to its source code:

    __all__ = ["Awaitable", "Coroutine",
               "AsyncIterable", "AsyncIterator", "AsyncGenerator",
               "Hashable", "Iterable", "Iterator", "Generator", "Reversible",
               "Sized", "Container", "Callable", "Collection",
               "Set", "MutableSet",
               "Mapping", "MutableMapping",
               "MappingView", "KeysView", "ItemsView", "ValuesView",
               "Sequence", "MutableSequence",
               "ByteString",
               ]

(1) For simple use cases, inherit directly from Python's container types (such as list or dict)

(2) Implementing a custom container type correctly may require writing a large number of special methods

(3) Have custom container types inherit from the abstract base classes in the collections.abc module, which ensures that the subclass provides the required interfaces and behaviors
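As a sketch of point (3), a class that inherits from `collections.abc.Sequence` only has to implement `__getitem__` and `__len__`; the base class then supplies iteration, `in`, `reversed`, `index`, and `count`. The `Countdown` class is a hypothetical example and does not support slicing:

```python
from collections.abc import Sequence


class Countdown(Sequence):
    """Hypothetical read-only sequence: n, n-1, ..., 1."""

    def __init__(self, n):
        self._n = n

    def __len__(self):
        return self._n

    def __getitem__(self, index):
        if isinstance(index, slice):
            raise TypeError('slicing is not supported in this sketch')
        if index < 0:
            index += self._n          # support negative indexes
        if not 0 <= index < self._n:
            raise IndexError(index)
        return self._n - index


countdown = Countdown(3)
print(list(countdown))
print(countdown.index(2), countdown.count(1), 2 in countdown)
```

If `__len__` or `__getitem__` were missing, instantiating the class would raise TypeError, so an incomplete container is caught early.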