I recently revisited convolutional neural networks and came away with a deeper understanding of them.

1. Installing the tools

Installing TensorFlow and Keras took some effort again. I found a fairly good installation guide; the link is below:

Tutorial: Installing TensorFlow and Keras under Anaconda

Both libraries are now widely used.

Note that we install through Anaconda. Keras also relies on the libraries below, so install them first and then follow the steps in the blog post linked above:

```shell
pip install numpy
pip install matplotlib
pip install scipy
pip install tensorflow
pip install keras
```
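After installing, it helps to confirm which versions actually ended up in the active environment. The snippet below is a small sketch of my own, not part of the linked tutorial; it uses only the standard library (`importlib.metadata`, Python 3.8+) and assumes the pip distribution names match the commands above (a CPU-only or platform-specific TensorFlow build may be registered under a different name, such as `tensorflow-cpu`).

```python
# Post-install check: report the installed version of each package,
# or None if it is not installed in the current environment.
from importlib.metadata import PackageNotFoundError, version

# Distribution names as used in the pip commands above (an assumption:
# some TensorFlow builds register under other names)
PACKAGES = ["numpy", "matplotlib", "scipy", "tensorflow", "keras"]


def installed_versions(packages):
    """Map each distribution name to its installed version string, or None."""
    result = {}
    for name in packages:
        try:
            result[name] = version(name)
        except PackageNotFoundError:
            result[name] = None
    return result


if __name__ == "__main__":
    for name, ver in installed_versions(PACKAGES).items():
        print(f"{name:12s} {ver or 'NOT INSTALLED'}")
```

Run it with the same Python interpreter that Anaconda activated; a `NOT INSTALLED` line usually means the package went into a different environment.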

2. Understanding and reflections

(1) Optimizers

The main optimizers are surveyed in this post:

Deep Learning: Optimizer Algorithms Explained (BGD, SGD, MBGD, Momentum, NAG, Adagrad, Adadelta, RMSprop, Adam)
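These optimizers differ mainly in how each step turns the gradient into a parameter update. Below is a minimal pure-Python sketch (not taken from the linked article) of three of them, SGD, Momentum and Adam, on the toy objective f(w) = (w - 3)², whose minimum is at w = 3. The objective, learning rates and step counts are arbitrary choices for illustration. The momentum form `v = beta * v + g` is one common convention (another scales the gradient by `1 - beta`). The Adam constants are the commonly cited defaults.

```python
# Update rules of three optimizers on the 1-D toy objective f(w) = (w - 3)**2.
import math


def grad(w):
    """Gradient of f(w) = (w - 3)**2."""
    return 2.0 * (w - 3.0)


def sgd(w, steps=100, lr=0.1):
    for _ in range(steps):
        w -= lr * grad(w)  # plain gradient step
    return w


def momentum(w, steps=300, lr=0.1, beta=0.9):
    v = 0.0
    for _ in range(steps):
        v = beta * v + grad(w)  # running "velocity" accumulates past gradients
        w -= lr * v
    return w


def adam(w, steps=500, lr=0.1, b1=0.9, b2=0.999, eps=1e-8):
    m, s = 0.0, 0.0
    for t in range(1, steps + 1):
        g = grad(w)
        m = b1 * m + (1 - b1) * g      # first-moment (mean) estimate
        s = b2 * s + (1 - b2) * g * g  # second-moment estimate
        m_hat = m / (1 - b1 ** t)      # bias correction for the zero init
        s_hat = s / (1 - b2 ** t)
        w -= lr * m_hat / (math.sqrt(s_hat) + eps)  # per-parameter step size
    return w


for name, opt in [("SGD", sgd), ("Momentum", momentum), ("Adam", adam)]:
    print(f"{name:9s} -> w = {opt(0.0):.6f}")
```

All three move from w = 0 towards 3. The difference is that Momentum reuses past gradients, and Adam additionally rescales each step by a running estimate of the gradient's magnitude.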

One of the most commonly used is SGD (stochastic gradient descent).

A stochastic gradient descent tutorial on Bilibili

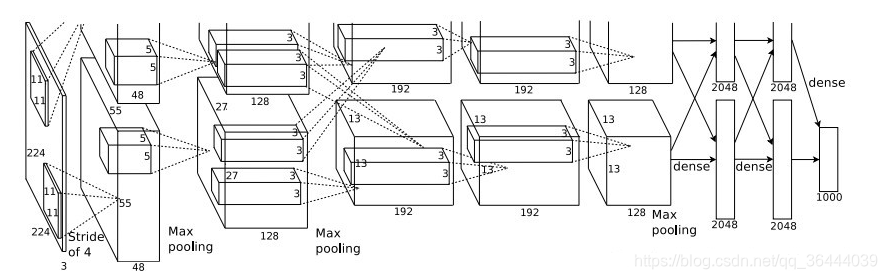
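The "stochastic" part of SGD means that each step uses the gradient of a small random subset of the data, not the whole dataset (which would be BGD). Deep-learning frameworks usually apply SGD to minibatches like this (the MBGD variant in the list above). Here is a minimal pure-Python sketch of that loop, assuming a made-up 1-D linear-regression problem y = a·x + b with true a = 2, b = 1 and a little Gaussian noise; the data, learning rate, epoch count and batch size are all illustrative choices.

```python
# Minibatch stochastic gradient descent for 1-D linear regression y = a*x + b.
import random

random.seed(0)
# Synthetic data (made up for illustration): true a = 2, b = 1, noise std 0.1
xs = [random.uniform(-1.0, 1.0) for _ in range(200)]
data = [(x, 2.0 * x + 1.0 + random.gauss(0.0, 0.1)) for x in xs]


def sgd_fit(data, lr=0.1, epochs=50, batch_size=16):
    data = list(data)  # work on a copy so shuffling doesn't touch the caller's list
    a, b = 0.0, 0.0
    for _ in range(epochs):
        random.shuffle(data)  # new random order every epoch
        for i in range(0, len(data), batch_size):
            batch = data[i:i + batch_size]
            # Gradient of the mean squared error over this minibatch only
            ga = sum(2 * (a * x + b - y) * x for x, y in batch) / len(batch)
            gb = sum(2 * (a * x + b - y) for x, y in batch) / len(batch)
            a -= lr * ga
            b -= lr * gb
    return a, b


a, b = sgd_fit(data)
print(f"a = {a:.3f}, b = {b:.3f}")  # should land close to the true values 2 and 1
```

Each update is noisier than a full-batch step, but it is much cheaper, which is why minibatches are the norm when training CNNs.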

(2) AlexNet, a classic CNN

AlexNet consists of 5 convolutional layers followed by 3 fully connected layers, for a total depth of 8 layers.

```python
# Imports
from keras.models import Sequential
# BatchNormalization is imported from keras.layers directly; the old
# keras.layers.normalization path raises ImportError on newer Keras versions
from keras.layers import (Activation, BatchNormalization, Conv2D, Dense,
                          Dropout, Flatten, MaxPool2D)
import numpy as np

np.random.seed(1000)

# Load the data: the Oxford 17 Flowers dataset (17 classes), one-hot labels
import tflearn.datasets.oxflower17 as oxflower17

x, y = oxflower17.load_data(one_hot=True)

# Define the AlexNet model
# (BatchNormalization stands in for the local response normalization
#  used in the original AlexNet paper)
model = Sequential()

# Block 1: 96 filters of 11x11 with stride 4
model.add(Conv2D(filters=96, kernel_size=(11, 11), strides=(4, 4),
                 padding="valid", input_shape=(224, 224, 3)))
model.add(Activation("relu"))
model.add(MaxPool2D(pool_size=(2, 2), strides=(2, 2), padding="valid"))
model.add(BatchNormalization())

# Block 2
model.add(Conv2D(filters=256, kernel_size=(11, 11), strides=(1, 1),
                 padding="valid"))
model.add(Activation("relu"))
model.add(MaxPool2D(pool_size=(2, 2), strides=(2, 2), padding="valid"))
model.add(BatchNormalization())

# Block 3
model.add(Conv2D(filters=384, kernel_size=(3, 3), strides=(1, 1),
                 padding="valid"))
model.add(Activation("relu"))
model.add(BatchNormalization())

# Block 4
model.add(Conv2D(filters=384, kernel_size=(3, 3), strides=(1, 1),
                 padding="valid"))
model.add(Activation("relu"))
model.add(BatchNormalization())

# Block 5
model.add(Conv2D(filters=256, kernel_size=(3, 3), strides=(1, 1),
                 padding="valid"))
model.add(Activation("relu"))
model.add(MaxPool2D(pool_size=(2, 2), strides=(2, 2), padding="valid"))
model.add(BatchNormalization())

# Layer 6: fully connected (input_shape is only needed on the first layer)
model.add(Flatten())
model.add(Dense(4096))
model.add(Activation("relu"))
model.add(Dropout(0.4))
model.add(BatchNormalization())

# Layer 7: fully connected
model.add(Dense(4096))
model.add(Activation("relu"))
model.add(Dropout(0.4))
model.add(BatchNormalization())

# Layer 8: output layer, one unit per flower class
model.add(Dense(17))
model.add(Activation("softmax"))

model.summary()

# Compile
model.compile(loss="categorical_crossentropy",
              optimizer="adam",
              metrics=["accuracy"])

# Train, holding out 30% of the samples for validation
model.fit(x, y,
          batch_size=32,
          epochs=8,
          verbose=1,
          validation_split=0.3,
          shuffle=True)
```

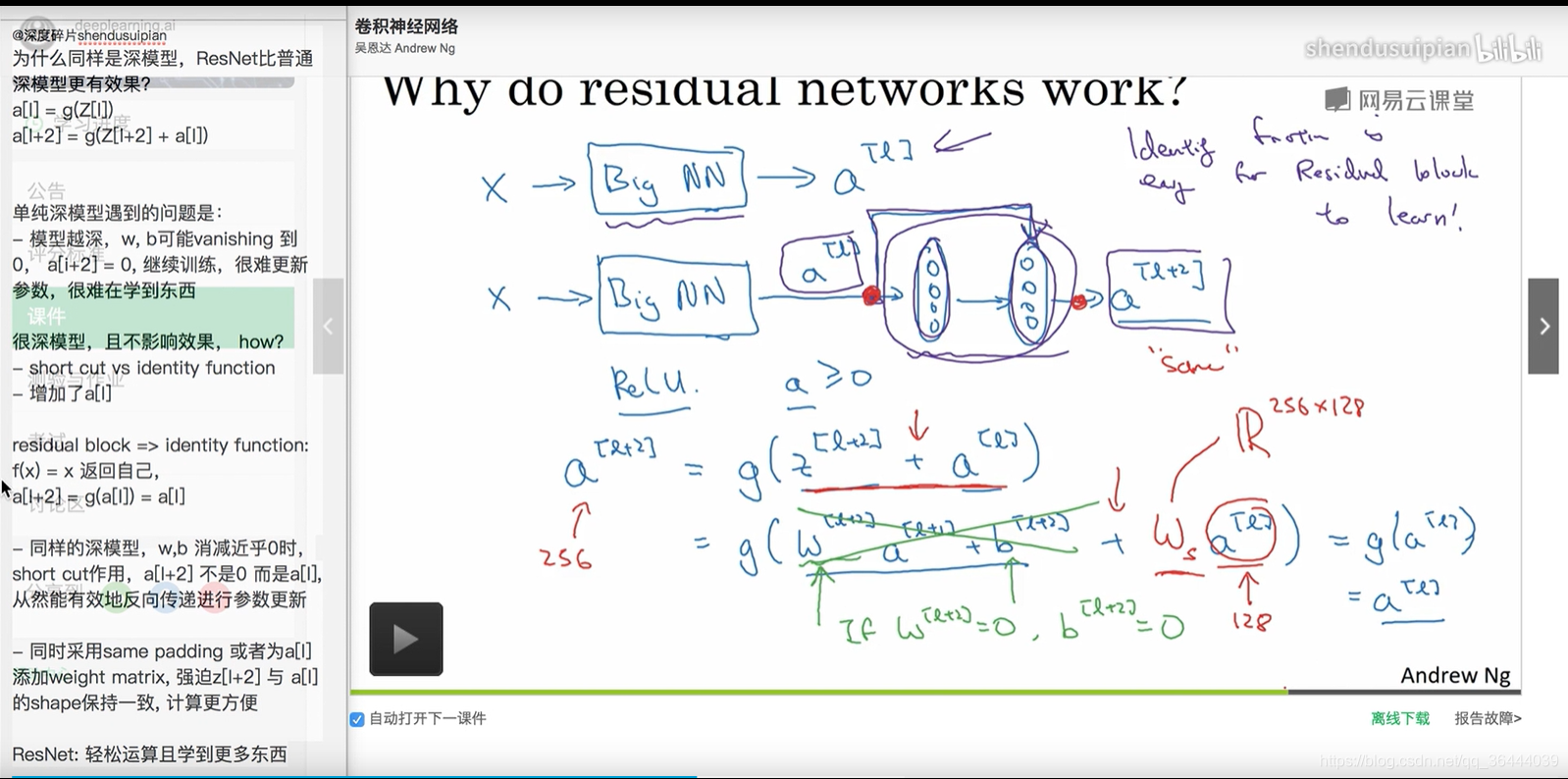

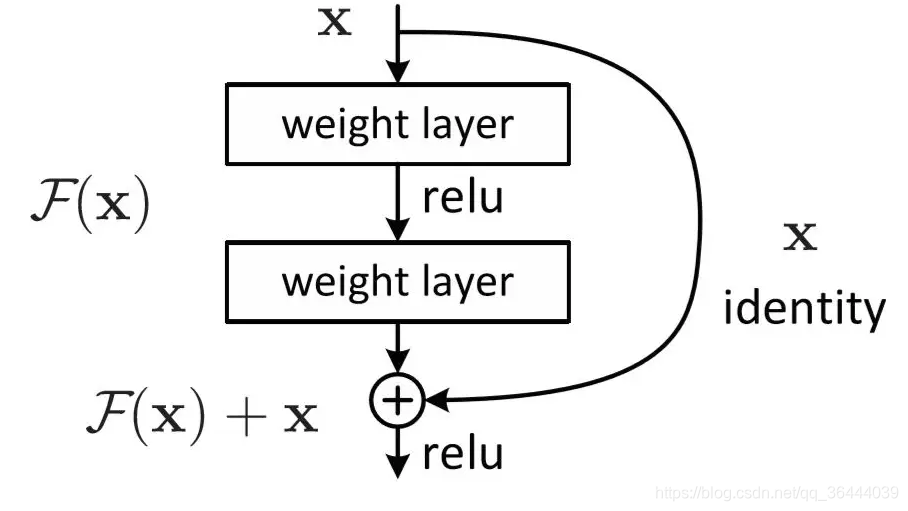
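In a stack like this, it is easy to lose track of the feature-map sizes. The helper below is a small sketch, not part of the original code. It traces the spatial size through the convolution and pooling layers of the model above using the standard formula for `padding="valid"`: out = floor((in - kernel) / stride) + 1. Activation and BatchNormalization layers don't change the shape, so they are omitted. The numbers should agree with the Output Shape column of `model.summary()`.

```python
# Trace the spatial size (height = width) of the feature map through the
# AlexNet-style model above; every conv/pool layer there uses padding="valid".


def valid_out(size, kernel, stride):
    """Output size of a 'valid' convolution or pooling window."""
    return (size - kernel) // stride + 1


# (layer name, kernel size, stride), in the order they appear in the model
LAYERS = [
    ("conv1", 11, 4), ("pool1", 2, 2),
    ("conv2", 11, 1), ("pool2", 2, 2),
    ("conv3", 3, 1),
    ("conv4", 3, 1),
    ("conv5", 3, 1), ("pool5", 2, 2),
]


def trace(size=224):
    sizes = []
    for name, kernel, stride in LAYERS:
        size = valid_out(size, kernel, stride)
        sizes.append((name, size))
    return sizes


for name, size in trace():
    print(f"{name:6s} {size}x{size}")

# conv5 has 256 filters, so Flatten() outputs side * side * 256 values
side = trace()[-1][1]
print("Flatten length:", side * side * 256)
```

With these kernel sizes the map has already shrunk to 1x1 by the time it reaches Flatten. The original AlexNet instead uses a 5x5 kernel in the second layer and pads its 3x3 convolutions, so it reaches the first fully connected layer with a 6x6x256 map.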

(3) Residual networks

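In ResNet, the core structure that tackles the vanishing-gradient problem in deep networks is the residual block.

A residual block adds an identity mapping: the block's input is passed straight through and added to the output of the block's layers. This is a 1:1 pass-through that adds no extra parameters. The shortcut skips the layers' computation and is called a "skip connection". During backpropagation, the gradient likewise flows through the shortcut directly back to earlier layers, which greatly alleviates the vanishing-gradient problem in deep networks.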
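The chain rule shows why the shortcut helps. Write the block's layers (the residual branch) as F, the block's input as x, and the training loss as L. The block outputs y = F(x) + x, so:

```latex
\begin{aligned}
\mathbf{y} &= F(\mathbf{x}) + \mathbf{x} \\
\frac{\partial \mathcal{L}}{\partial \mathbf{x}}
  &= \frac{\partial \mathcal{L}}{\partial \mathbf{y}}
     \frac{\partial \mathbf{y}}{\partial \mathbf{x}}
   = \frac{\partial \mathcal{L}}{\partial \mathbf{y}}
     \left( \frac{\partial F}{\partial \mathbf{x}} + I \right)
   = \frac{\partial \mathcal{L}}{\partial \mathbf{y}}
     \frac{\partial F}{\partial \mathbf{x}}
     + \frac{\partial \mathcal{L}}{\partial \mathbf{y}}
\end{aligned}
```

The last term carries the upstream gradient back unchanged. So even when the branch's Jacobian is small, the gradient passing through this block does not shrink towards zero. This sketch ignores the ReLU that ResNet applies after the addition.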

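```python
import keras
from keras.layers import Conv2D, Input

# A minimal skip connection: add the block's input x to the conv output y
x = Input(shape=(224, 224, 3))
# 3 filters with padding="same" keep y the same shape as x (224x224x3),
# which keras.layers.add requires
y = Conv2D(3, (3, 3), padding="same")(x)
z = keras.layers.add([x, y])  # z = x + y
```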
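The same idea without a framework: a minimal NumPy sketch of a residual block's forward pass. As simplifying assumptions, the branch F uses two small dense layers instead of convolutions, and a ReLU is applied after the addition as in ResNet's basic block. The names `residual_block`, `W1` and `W2` are illustrative, not Keras API. The checks show that with an all-zero branch the block is exactly the identity, and that its numerically estimated Jacobian is the identity matrix. In other words, the shortcut passes values and gradients through 1:1, and a block can easily fall back to "do nothing".

```python
# Forward pass of a toy residual block:
#   y = relu(F(x) + x),  with F(x) = W2 @ relu(W1 @ x)
# Dense layers stand in for the convolutions a real ResNet uses.
import numpy as np


def relu(v):
    return np.maximum(v, 0.0)


def residual_block(x, W1, W2):
    f = W2 @ relu(W1 @ x)  # residual branch F(x)
    return relu(f + x)     # identity shortcut: add the input back, then ReLU


rng = np.random.default_rng(0)
d = 4
# Positive input, e.g. the output of a previous ReLU block
x = np.abs(rng.normal(size=d)) + 0.1

# With an all-zero branch, F(x) = 0 and the block returns x unchanged
W_zero = np.zeros((d, d))
print(np.allclose(residual_block(x, W_zero, W_zero), x))

# Finite-difference Jacobian dy/dx (column j = response to nudging x[j]);
# with the zero branch it is the identity matrix
eps = 1e-6
base = residual_block(x, W_zero, W_zero)
J = np.stack([(residual_block(x + eps * e, W_zero, W_zero) - base) / eps
              for e in np.eye(d)], axis=1)
print(np.allclose(J, np.eye(d), atol=1e-4))

# With random weights the block computes something non-trivial, same shape
W1, W2 = rng.normal(size=(d, d)), rng.normal(size=(d, d))
print(residual_block(x, W1, W2).shape)
```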

```python
from keras.applications.resnet50 import ResNet50, preprocess_input, decode_predictions
from keras.preprocessing import image
import numpy as np

# ResNet50 with ImageNet-pretrained weights (downloaded on first use)
model = ResNet50(weights="imagenet")

img_path = "elephant.jpg"  # any local image file
img = image.load_img(img_path, target_size=(224, 224))
x = image.img_to_array(img)    # shape (224, 224, 3)
x = np.expand_dims(x, axis=0)  # add a batch dimension: (1, 224, 224, 3)
x = preprocess_input(x)        # ResNet50-specific input preprocessing

preds = model.predict(x)       # shape (1, 1000): one score per ImageNet class

# decode the results into a list of tuples (class, description, probability)
# (one such list for each sample in the batch)
print("Predicted:", decode_predictions(preds, top=3)[0])
```
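`decode_predictions` essentially picks the k highest-scoring classes in each row of `preds` and attaches their names. The real function downloads the ImageNet class index, and each tuple starts with the class ID (a WordNet ID). Here is a rough NumPy sketch of just the top-k step, with a hypothetical five-class label list and made-up scores standing in for the 1000 ImageNet classes. `top_k` is an illustrative helper, not a Keras function.

```python
# Sketch of the top-k step behind decode_predictions: given one row of
# class probabilities, return the k most likely (label, probability) pairs.
import numpy as np


def top_k(probs, labels, k=3):
    idx = np.argsort(probs)[::-1][:k]  # indices of the k largest, descending
    return [(labels[i], float(probs[i])) for i in idx]


labels = ["cat", "dog", "elephant", "tusker", "zebra"]  # hypothetical labels
preds = np.array([[0.05, 0.02, 0.55, 0.30, 0.08]])      # made-up (batch, classes)
print(top_k(preds[0], labels, k=3))
```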