本文主要针对pb文件,用Android TensorFlow API实现目标检测和识别,不需要NDK和CMake混合编程 编译c/c++文件

只需要在Android项目模块的Module的build.gradle输入

// Tensorflow

compile 'org.tensorflow:tensorflow-android:1.13.1'由于很多处都可以查阅源代码,便不附加项目工程,只是记录学习过程中的采坑点

一般图像需要经过检测,定位和识别

方式一:单目标识别

该方式可以通过手机拍照或相册选择图片,手动选择裁剪的目标,将目标放进模型中识别

采用预训练模型主要是inception v1、v2、v3等框架

自己数据模型训练工具可下载:

https://github.com/tensorflow/hub/blob/master/examples/image_retraining/retrain.py训练自己模型过程可参考:

https://www.tensorflow.org/hub/tutorials/image_retrainingAndroid端主要调用接口

/**

* A classifier specialized to label images using TensorFlow.

*/

public class TensorFlowImageClassifier implements Classifier {

private static final String TAG = "ImageClassifier";

// Only return this many results with at least this confidence.

private static final int MAX_RESULTS = 3;

private static final float THRESHOLD = 0.3f;

// Config values.

private String inputName;

private String outputName;

private int inputSize;

private int imageMean;

private float imageStd;

// Pre-allocated buffers.

private Vector<String> labels = new Vector<String>();

private int[] intValues;

private float[] floatValues;

private float[] outputs;

private String[] outputNames;

private TensorFlowInferenceInterface inferenceInterface;

private boolean runStats = false;

private TensorFlowImageClassifier() {

}

/**

* Initializes a native TensorFlow session for classifying images.

*

* @param assetManager The asset manager to be used to load assets.

* @param modelFilename The filepath of the model GraphDef protocol buffer.

* @param labelFilename The filepath of label file for classes.

* @param inputSize The input size. A square image of inputSize x inputSize is assumed.

* @param imageMean The assumed mean of the image values.

* @param imageStd The assumed std of the image values.

* @param inputName The label of the image input node.

* @param outputName The label of the output node.

* @throws IOException

*/

public static Classifier create(

AssetManager assetManager,

String modelFilename,

String labelFilename,

int inputSize,

int imageMean,

float imageStd,

String inputName,

String outputName)

throws IOException {

TensorFlowImageClassifier c = new TensorFlowImageClassifier();

c.inputName = inputName;

c.outputName = outputName;

// Read the label names into memory.

// TODO(andrewharp): make this handle non-assets.

//read labels for label file

c.labels = readLabels(assetManager, labelFilename);

c.inferenceInterface = new TensorFlowInferenceInterface(assetManager, modelFilename);

// The shape of the output is [N, NUM_CLASSES], where N is the batch size.

int numClasses = (int) c.inferenceInterface.graph().operation(outputName).output(0).shape().size(1);

Log.i(TAG, "Read " + c.labels.size() + " labels, output layer size is " + numClasses);

// Ideally, inputSize could have been retrieved from the shape of the input operation. Alas,

// the placeholder node for input in the graphdef typically used does not specify a shape, so it

// must be passed in as a parameter.

c.inputSize = inputSize;

c.imageMean = imageMean;

c.imageStd = imageStd;

// Pre-allocate buffers.

c.outputNames = new String[]{outputName};

c.intValues = new int[inputSize * inputSize];

c.floatValues = new float[inputSize * inputSize * 3];

c.outputs = new float[numClasses];

return c;

}

//given a saved drawn model, lets read all the classification labels that are

//stored and write them to our in memory labels list

private static Vector<String> readLabels(AssetManager am, String fileName) throws IOException {

BufferedReader br = new BufferedReader(new InputStreamReader(am.open(fileName)));

String line;

Vector<String> labels = new Vector<>();

while ((line = br.readLine()) != null) {

labels.add(line);

}

br.close();

return labels;

}

@Override

public List<Recognition> recognizeImage(final Bitmap bitmap) {

// Log this method so that it can be analyzed with systrace.

TraceCompat.beginSection("recognizeImage");

TraceCompat.beginSection("preprocessBitmap");

// Preprocess the image data from 0-255 int to normalized float based

// on the provided parameters.

bitmap.getPixels(intValues, 0, bitmap.getWidth(), 0, 0, bitmap.getWidth(), bitmap.getHeight());

for (int i = 0; i < intValues.length; ++i) {

final int val = intValues[i];

floatValues[i * 3 + 0] = (((val >> 16) & 0xFF) - imageMean) / imageStd;

floatValues[i * 3 + 1] = (((val >> 8) & 0xFF) - imageMean) / imageStd;

floatValues[i * 3 + 2] = ((val & 0xFF) - imageMean) / imageStd;

}

TraceCompat.endSection();

// Copy the input data into TensorFlow.

TraceCompat.beginSection("feed");

inferenceInterface.feed(inputName, floatValues, new long[]{1, inputSize, inputSize, 3});

// inferenceInterface.feed("is_training",new boolean[]{false});

// inferenceInterface.feed("keep_prob",new float[]{1.0f});

TraceCompat.endSection();

// Run the inference call.

TraceCompat.beginSection("run");

inferenceInterface.run(outputNames, runStats);

TraceCompat.endSection();

// Copy the output Tensor back into the output array.

TraceCompat.beginSection("fetch");

inferenceInterface.fetch(outputName, outputs);

TraceCompat.endSection();

// Find the best classifications.

PriorityQueue<Recognition> pq =

new PriorityQueue<Recognition>(

3,

new Comparator<Recognition>() {

@Override

public int compare(Recognition lhs, Recognition rhs) {

// Intentionally reversed to put high confidence at the head of the queue.

return Float.compare(rhs.getConfidence(), lhs.getConfidence());

}

});

for (int i = 0; i < outputs.length; ++i) {

if (outputs[i] > THRESHOLD) {

pq.add(

new Recognition("" + i, labels.size() > i ? labels.get(i) : "unknown", outputs[i], null));

}

}

final ArrayList<Recognition> recognitions = new ArrayList<Recognition>();

int recognitionsSize = Math.min(pq.size(), MAX_RESULTS);

for (int i = 0; i < recognitionsSize; ++i) {

recognitions.add(pq.poll());

}

TraceCompat.endSection(); // "recognizeImage"

return recognitions;

}采坑点:

- 不同模型框架,训练模型输入的占位符不同,一定要一一对应;

TraceCompat.beginSection("feed");

inferenceInterface.feed(inputName, floatValues, new long[]{1, inputSize, inputSize, 3});

// inferenceInterface.feed("is_training",new boolean[]{false});

// inferenceInterface.feed("keep_prob",new float[]{1.0f});

TraceCompat.endSection();

可以用Android studio 查看pb文件,一般前面几行会告诉你有无is_training、keep_prob等占位符,如果有,一定在Android程序加入。

- 训练的数据集一定要对齐,resize与采用模型框架图像的大小一致,Android端调用接口,输入参数一定要和训练图像一致,否则会出现分类错误。个人推测:不同类别图像占用空间大小是一致的,如若图像不一,会导致pb图矢量数据错乱。

private static final int FACE_SIZE = 299;

private static final int IMAGE_MEAN = 128;

private static final float IMAGE_STD = 128;

- 生成的模型不能直接放置到Android中,需要一步转化:官方的解释:

To use v3 Inception model, strip the DecodeJpeg Op from your retrained

// model first:cd 进入D:\Program Files\Anaconda3\Lib\site-packages\tensorflow\python\tools 目录下,将上步中生成的 output_graph.pb 文件复制到改目录下,执行命令:

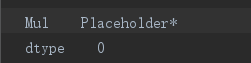

python strip_unused.py --input_graph=face.pb --input_binary=true --output_graph=facenet.pb --input_node_names="Mul" --output_node_names="output" - Android platform SDK大于24以上,调用相机或裁剪图片都会出现闪退现象,不足为惧,多因Android7.0的禁止不安全路径被外部访问,数据临时存储导致,可以网络查询此问题解决方案;

方法二:目标检测和识别

该方式可以通过摄像、拍照或选择相册,无需裁剪图像,直接将目标放入模型中进行目标检测、定位和识别

采用预训练模型主要是ssd_inception_v1 、v2、v3等框架

自己数据模型训练工具可下载:

https://github.com/tensorflow/models训练自己模型过程可参考:

https://blog.csdn.net/zj1131190425/article/details/80711857public class TensorFlowObjectDetectionAPIModel implements Classifier {

private static final Logger LOGGER = new Logger();

// Only return this many results.

private static final int MAX_RESULTS = 100;

// Config values.

private String inputName;

private int inputSize;

// Pre-allocated buffers.

private Vector<String> labels = new Vector<String>();

private int[] intValues;

private byte[] byteValues;

private float[] outputLocations;

private float[] outputScores;

private float[] outputClasses;

private float[] outputNumDetections;

private String[] outputNames;

private boolean logStats = false;

private TensorFlowInferenceInterface inferenceInterface;

/**

* Initializes a native TensorFlow session for classifying images.

*

* @param assetManager The asset manager to be used to load assets.

* @param modelFilename The filepath of the model GraphDef protocol buffer.

* @param labelFilename The filepath of label file for classes.

*/

public static Classifier create(

final AssetManager assetManager,

final String modelFilename,

final String labelFilename,

final int inputSize) throws IOException {

final TensorFlowObjectDetectionAPIModel d = new TensorFlowObjectDetectionAPIModel();

InputStream labelsInput = null;

String actualFilename = labelFilename.split("file:///android_asset/")[1];

labelsInput = assetManager.open(actualFilename);

BufferedReader br = null;

br = new BufferedReader(new InputStreamReader(labelsInput));

String line;

while ((line = br.readLine()) != null) {

LOGGER.w(line);

d.labels.add(line);

}

br.close();

d.inferenceInterface = new TensorFlowInferenceInterface(assetManager, modelFilename);

final Graph g = d.inferenceInterface.graph();

d.inputName = "image_tensor";

// The inputName node has a shape of [N, H, W, C], where

// N is the batch size

// H = W are the height and width

// C is the number of channels (3 for our purposes - RGB)

final Operation inputOp = g.operation(d.inputName);

if (inputOp == null) {

throw new RuntimeException("Failed to find input Node '" + d.inputName + "'");

}

d.inputSize = inputSize;

// The outputScoresName node has a shape of [N, NumLocations], where N

// is the batch size.

final Operation outputOp1 = g.operation("detection_scores");

if (outputOp1 == null) {

throw new RuntimeException("Failed to find output Node 'detection_scores'");

}

final Operation outputOp2 = g.operation("detection_boxes");

if (outputOp2 == null) {

throw new RuntimeException("Failed to find output Node 'detection_boxes'");

}

final Operation outputOp3 = g.operation("detection_classes");

if (outputOp3 == null) {

throw new RuntimeException("Failed to find output Node 'detection_classes'");

}

// Pre-allocate buffers.

d.outputNames = new String[] {"detection_boxes", "detection_scores",

"detection_classes", "num_detections"};

d.intValues = new int[d.inputSize * d.inputSize];

d.byteValues = new byte[d.inputSize * d.inputSize * 3];

d.outputScores = new float[MAX_RESULTS];

d.outputLocations = new float[MAX_RESULTS * 4];

d.outputClasses = new float[MAX_RESULTS];

d.outputNumDetections = new float[1];

return d;

}

private TensorFlowObjectDetectionAPIModel() {}

@Override

public List<Recognition> recognizeImage(final Bitmap bitmap) {

// Log this method so that it can be analyzed with systrace.

Trace.beginSection("recognizeImage");

Trace.beginSection("preprocessBitmap");

// Preprocess the image data from 0-255 int to normalized float based

// on the provided parameters.

bitmap.getPixels(intValues, 0, bitmap.getWidth(), 0, 0, bitmap.getWidth(), bitmap.getHeight());

for (int i = 0; i < intValues.length; ++i) {

byteValues[i * 3 + 2] = (byte) (intValues[i] & 0xFF);

byteValues[i * 3 + 1] = (byte) ((intValues[i] >> 8) & 0xFF);

byteValues[i * 3 + 0] = (byte) ((intValues[i] >> 16) & 0xFF);

}

Trace.endSection(); // preprocessBitmap

// Copy the input data into TensorFlow.

Trace.beginSection("feed");

inferenceInterface.feed(inputName, byteValues, 1, inputSize, inputSize, 3);

Trace.endSection();

// Run the inference call.

Trace.beginSection("run");

inferenceInterface.run(outputNames, logStats);

Trace.endSection();

// Copy the output Tensor back into the output array.

Trace.beginSection("fetch");

outputLocations = new float[MAX_RESULTS * 4];

outputScores = new float[MAX_RESULTS];

outputClasses = new float[MAX_RESULTS];

outputNumDetections = new float[1];

inferenceInterface.fetch(outputNames[0], outputLocations);

inferenceInterface.fetch(outputNames[1], outputScores);

inferenceInterface.fetch(outputNames[2], outputClasses);

inferenceInterface.fetch(outputNames[3], outputNumDetections);

Trace.endSection();

// Find the best detections.

final PriorityQueue<Recognition> pq =

new PriorityQueue<Recognition>(

1,

new Comparator<Recognition>() {

@Override

public int compare(final Recognition lhs, final Recognition rhs) {

// Intentionally reversed to put high confidence at the head of the queue.

return Float.compare(rhs.getConfidence(), lhs.getConfidence());

}

});

// Scale them back to the input size.

for (int i = 0; i < outputScores.length; ++i) {

final RectF detection =

new RectF(

outputLocations[4 * i + 1] * inputSize,

outputLocations[4 * i] * inputSize,

outputLocations[4 * i + 3] * inputSize,

outputLocations[4 * i + 2] * inputSize);

pq.add(

new Recognition("" + i, labels.get((int) outputClasses[i]), outputScores[i], detection));

}

final ArrayList<Recognition> recognitions = new ArrayList<Recognition>();

for (int i = 0; i < Math.min(pq.size(), MAX_RESULTS); ++i) {

recognitions.add(pq.poll());

}

Trace.endSection(); // "recognizeImage"

return recognitions;

}

@Override

public void enableStatLogging(final boolean logStats) {

this.logStats = logStats;

}

@Override

public String getStatString() {

return inferenceInterface.getStatString();

}

@Override

public void close() {

inferenceInterface.close();

}

}采坑点:

- 复制assets目录下的标签.txt文件

第一行是???

不用动,千万别删

-

labelImg的数据集一定不可以重名

- label.pbtxt文件的id和标签名,一定对应于tfrecord文件内分类id和标签名