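Spark runtime environment configuration:

Step 1:

After connecting PyCharm to the remote server, configure the path of the Python interpreter. This can be the server's system Python or the interpreter inside a virtual environment; the example here connects to a virtual environment.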

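Step 2:

Copy the pyspark folder from the python directory of the Spark installation into the site-packages directory of the Python interpreter.

site-packages path of the server's system Python interpreter: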

cd /usr/local/python3/lib/python3.6/site-packages

site-packages path of the Python interpreter in the virtual environment:

cd /root/miniconda3/envs/ai/lib/python3.6/site-packages

python directory of the Spark installation:

/export/servers/spark-2.3.4-bin-hadoop2.7/python

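Change into Spark's python directory:

cd /export/servers/spark-2.3.4-bin-hadoop2.7/python

# Copy pyspark into the site-packages directory of the virtual environment's Python interpreter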

cp -r pyspark /root/miniconda3/envs/ai/lib/python3.6/site-packages
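The copy in step 2 can also be scripted from Python. The following is a minimal sketch, not part of the original procedure: `copy_pyspark` is a hypothetical helper name, and it assumes the `python/pyspark` layout under the Spark installation shown above. When no target is given, it falls back to the site-packages directory of the interpreter that runs the script.

```python
import shutil
import sysconfig
from pathlib import Path


def copy_pyspark(spark_home, site_packages=None):
    """Copy SPARK_HOME/python/pyspark into a site-packages directory.

    Hypothetical helper: spark_home is the Spark installation directory,
    site_packages defaults to the current interpreter's site-packages.
    """
    source = Path(spark_home) / "python" / "pyspark"
    if not source.is_dir():
        raise FileNotFoundError(f"pyspark package not found at {source}")
    if site_packages is None:
        site_packages = sysconfig.get_paths()["purelib"]
    target = Path(site_packages) / "pyspark"
    # Remove a stale copy first so that files from an older Spark do not linger.
    if target.exists():
        shutil.rmtree(str(target))
    shutil.copytree(str(source), str(target))
    return target
```

With the paths from this guide, the call would be `copy_pyspark("/export/servers/spark-2.3.4-bin-hadoop2.7", "/root/miniconda3/envs/ai/lib/python3.6/site-packages")`.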

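Step 3:

Configure the environment variables for PyCharm. You can either set them at the very top of your code or add them in the run Configuration.

Method 1: set them directly in code: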

import os

# SPARK_HOME environment variable

SPARK_HOME = "/export/servers/spark-2.3.4-bin-hadoop2.7"

# Two different Python versions exist on the system, so PYSPARK_PYTHON and PYSPARK_DRIVER_PYTHON must both point to python3

# The python3 path can be either the system interpreter or the one in the virtual environment

# PYSPARK_PYTHON = "/root/miniconda3/envs/ai/bin/python3"

# PYSPARK_DRIVER_PYTHON = "/root/miniconda3/envs/ai/bin/python3"

PYSPARK_PYTHON = "/usr/local/python3/bin/python3"

PYSPARK_DRIVER_PYTHON = "/usr/local/python3/bin/python3"

# Add them to the environment variables

os.environ['SPARK_HOME'] = SPARK_HOME

os.environ['PYSPARK_PYTHON'] = PYSPARK_PYTHON

os.environ['PYSPARK_DRIVER_PYTHON'] = PYSPARK_DRIVER_PYTHON
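If both methods are in use, the in-code assignments above overwrite whatever the run Configuration provides. One possible variation, sketched here under that assumption, is to apply the in-code values only as defaults. `configure_spark_env` and `DEFAULTS` are hypothetical names; the paths are the ones used in this guide and should be adjusted to your own installation.

```python
import os

# Paths taken from this guide; adjust them to your own installation.
DEFAULTS = {
    "SPARK_HOME": "/export/servers/spark-2.3.4-bin-hadoop2.7",
    "PYSPARK_PYTHON": "/usr/local/python3/bin/python3",
    "PYSPARK_DRIVER_PYTHON": "/usr/local/python3/bin/python3",
}


def configure_spark_env(defaults=DEFAULTS, environ=os.environ):
    """Set each variable only if it is not already defined.

    Values that are already present (for example from the PyCharm run
    Configuration) are left untouched.
    """
    for name, value in defaults.items():
        environ.setdefault(name, value)
    return {name: environ[name] for name in defaults}
```

Call `configure_spark_env()` before the first `pyspark` import so that the variables are in place when Spark starts.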

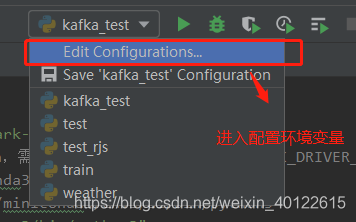

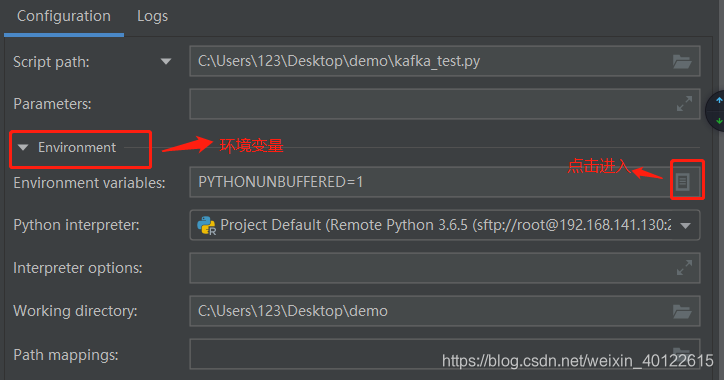

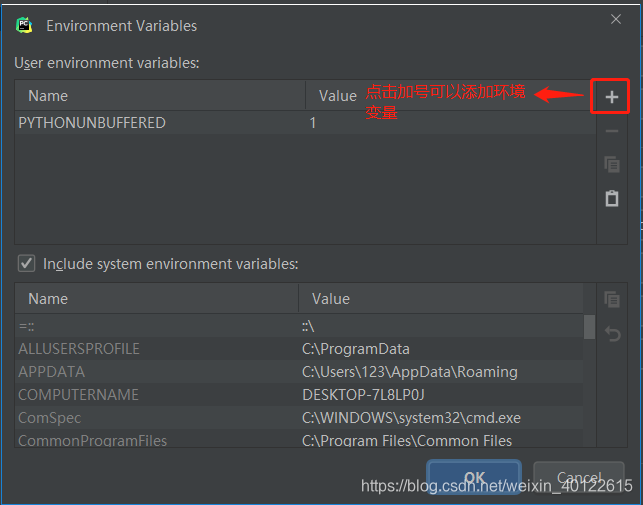

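Or method 2: configure the environment variables in PyCharm:

Click Edit Configurations, then add SPARK_HOME, PYSPARK_PYTHON and PYSPARK_DRIVER_PYTHON under Environment variables.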
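Whether the values from the run Configuration actually reach the script can be checked with a few lines of Python. This is only a sketch; `missing_spark_env` and `REQUIRED` are hypothetical names introduced for illustration.

```python
import os

REQUIRED = ("SPARK_HOME", "PYSPARK_PYTHON", "PYSPARK_DRIVER_PYTHON")


def missing_spark_env(environ=os.environ):
    """Return the names of required variables that are unset or empty."""
    return [name for name in REQUIRED if not environ.get(name)]


missing = missing_spark_env()
if missing:
    print("Not set:", ", ".join(missing))
else:
    print("All Spark environment variables are set.")
```

Running this with the same run Configuration as the Spark script shows which of the three variables still needs to be added.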

Step 4: run the test example

Test example code:

import os

# SPARK_HOME environment variable

SPARK_HOME = "/export/servers/spark-2.3.4-bin-hadoop2.7"

# Two different Python versions exist on the system, so PYSPARK_PYTHON and PYSPARK_DRIVER_PYTHON must both point to python3

PYSPARK_PYTHON = "/usr/local/python3/bin/python3"

PYSPARK_DRIVER_PYTHON = "/usr/local/python3/bin/python3"

os.environ['SPARK_HOME'] = SPARK_HOME

os.environ['PYSPARK_PYTHON'] = PYSPARK_PYTHON

os.environ['PYSPARK_DRIVER_PYTHON'] = PYSPARK_DRIVER_PYTHON

from pyspark.sql import SparkSession

# Create the SparkSession instance

spark = SparkSession.builder.appName("test").getOrCreate()

sc = spark.sparkContext

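# Count the frequency of each word in the file (each line is split on whitespace first)

counts = sc.textFile('file:///root/tmp/word.txt').flatMap(lambda line: line.split()).map(lambda word: (word, 1)).reduceByKey(lambda x, y: x + y)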

output = counts.collect()

print(output)

sc.stop()
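For a small input, word frequencies can be cross-checked without Spark. The sketch below uses only the standard library; `count_words` is a hypothetical helper, and splitting on whitespace is an assumption about how words are delimited in word.txt.

```python
from collections import Counter


def count_words(lines):
    """Count whitespace-separated words across an iterable of lines."""
    counts = Counter()
    for line in lines:
        counts.update(line.split())
    return counts


sample = ["spark hadoop spark", "hive spark"]
print(sorted(count_words(sample).items()))
# prints [('hadoop', 1), ('hive', 1), ('spark', 3)]
```

To compare against a real file, pass an open file object, for example `count_words(open('/root/tmp/word.txt'))`.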

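Troubleshooting

1. If you see the exception No module named 'py4j', install the py4j module into the interpreter configured in step 1:

# Install py4j

pip install py4j

Error output: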

ssh://root@192.168.141.130:22/root/miniconda3/envs/ai/bin/python3.6 -u /root/Desktop/kafka_test.py

Traceback (most recent call last):

File "/root/Desktop/kafka_test.py", line 13, in <module>

from pyspark.sql import SparkSession

File "/root/miniconda3/envs/ai/lib/python3.6/site-packages/pyspark/__init__.py", line 46, in <module>

from pyspark.context import SparkContext

File "/root/miniconda3/envs/ai/lib/python3.6/site-packages/pyspark/context.py", line 29, in <module>

from py4j.protocol import Py4JError

ModuleNotFoundError: No module named 'py4j'
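Both import errors covered in this section can be detected up front, before the Spark script itself runs. The following sketch uses `importlib.util.find_spec` from the standard library; `missing_modules` is a hypothetical helper name.

```python
from importlib.util import find_spec


def missing_modules(names=("py4j", "pyspark")):
    """Return the top-level module names the current interpreter cannot find."""
    return [name for name in names if find_spec(name) is None]


missing = missing_modules()
if missing:
    print("Missing modules:", ", ".join(missing))
else:
    print("py4j and pyspark are importable.")
```

Run it with the same remote interpreter that PyCharm uses, since the result depends on that interpreter's site-packages.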

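2. If you see the exception "No module named 'pyspark'", copy the pyspark directory from Spark's python directory into the site-packages directory of the Python interpreter, as described in step 2.

Error output: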

ssh://root@192.168.141.130:22/root/miniconda3/envs/ai/bin/python3.6 -u /root/Desktop/kafka_test.py

Traceback (most recent call last):

File "/root/Desktop/kafka_test.py", line 13, in <module>

from pyspark.sql import SparkSession

ModuleNotFoundError: No module named 'pyspark'
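As an alternative to copying files, the Spark python directory and its bundled py4j archive can be put on `sys.path` at runtime. This is a sketch under the assumption that the installation follows the layout visible in the traceback under item 3 (`python/lib/py4j-0.10.7-src.zip`); `spark_python_paths` and `add_spark_to_sys_path` are hypothetical helper names.

```python
import glob
import os
import sys


def spark_python_paths(spark_home):
    """Return SPARK_HOME/python plus any bundled py4j source archives."""
    python_dir = os.path.join(spark_home, "python")
    # The py4j version differs between Spark releases, so match by pattern.
    py4j_zips = sorted(glob.glob(os.path.join(python_dir, "lib", "py4j-*-src.zip")))
    return [python_dir] + py4j_zips


def add_spark_to_sys_path(spark_home):
    """Prepend the Spark Python paths to sys.path if they are not there yet."""
    for path in spark_python_paths(spark_home):
        if path not in sys.path:
            sys.path.insert(0, path)
```

Calling `add_spark_to_sys_path("/export/servers/spark-2.3.4-bin-hadoop2.7")` before `from pyspark.sql import SparkSession` would make both pyspark and py4j importable without touching site-packages.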

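3. If you see the exception "Exception: Python in worker has different version 3.7 than that in driver 3.6, PySpark cannot run with different minor versions.Please check environment variables PYSPARK_PYTHON and PYSPARK_DRIVER_PYTHON are correctly set.", the Python interpreter versions conflict: the paths configured for PYSPARK_PYTHON and PYSPARK_DRIVER_PYTHON point to different minor versions. Check that the interpreter chosen in step 1 and the environment variables set in step 3 refer to the same Python version.

Error output: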

2019-10-16 18:23:00 ERROR TaskSetManager:70 - Task 0 in stage 0.0 failed 1 times; aborting job

Traceback (most recent call last):

File "/root/Desktop/kafka_test.py", line 13, in <module>

output = counts.collect()

File "/export/servers/spark-2.3.4-bin-hadoop2.7/python/pyspark/rdd.py", line 814, in collect

sock_info = self.ctx._jvm.PythonRDD.collectAndServe(self._jrdd.rdd())

File "/export/servers/spark-2.3.4-bin-hadoop2.7/python/lib/py4j-0.10.7-src.zip/py4j/java_gateway.py", line 1257, in __call__

File "/export/servers/spark-2.3.4-bin-hadoop2.7/python/lib/py4j-0.10.7-src.zip/py4j/protocol.py", line 328, in get_return_value

py4j.protocol.Py4JJavaError: An error occurred while calling z:org.apache.spark.api.python.PythonRDD.collectAndServe.

: org.apache.spark.SparkException: Job aborted due to stage failure: Task 0 in stage 0.0 failed 1 times, most recent failure: Lost task 0.0 in stage 0.0 (TID 0, localhost, executor driver): org.apache.spark.api.python.PythonException: Traceback (most recent call last):

File "/export/servers/spark-2.3.4-bin-hadoop2.7/python/lib/pyspark.zip/pyspark/worker.py", line 181, in main

("%d.%d" % sys.version_info[:2], version))

Exception: Python in worker has different version 3.7 than that in driver 3.6, PySpark cannot run with different minor versions.Please check environment variables PYSPARK_PYTHON and PYSPARK_DRIVER_PYTHON are correctly set.

at org.apache.spark.api.python.BasePythonRunner$ReaderIterator.handlePythonException(PythonRunner.scala:336)

at org.apache.spark.api.python.PythonRunner$$anon$1.read(PythonRunner.scala:475)

at org.apache.spark.api.python.PythonRunner$$anon$1.read(PythonRunner.scala:458)

at org.apache.spark.api.python.BasePythonRunner$ReaderIterator.hasNext(PythonRunner.scala:290)

at org.apache.spark.InterruptibleIterator.hasNext(InterruptibleIterator.scala:37)

at scala.collection.Iterator$GroupedIterator.fill(Iterator.scala:1126)

at scala.collection.Iterator$GroupedIterator.hasNext(Iterator.scala:1132)

at scala.collection.Iterator$$anon$11.hasNext(Iterator.scala:408)

at org.apache.spark.shuffle.sort.BypassMergeSortShuffleWriter.write(BypassMergeSortShuffleWriter.java:125)

at org.apache.spark.scheduler.ShuffleMapTask.runTask(ShuffleMapTask.scala:96)

at org.apache.spark.scheduler.ShuffleMapTask.runTask(ShuffleMapTask.scala:53)

at org.apache.spark.scheduler.Task.run(Task.scala:109)

at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:345)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)
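The mismatch in item 3 can be caught before a job is submitted by asking each interpreter for its version. The sketch below formats the version the same way as the `"%d.%d" % sys.version_info[:2]` expression visible in the traceback; `interpreter_version` and `versions_match` are hypothetical helper names.

```python
import subprocess
import sys


def interpreter_version(python_path):
    """Return 'major.minor' for the interpreter at python_path."""
    result = subprocess.run(
        [python_path, "-c", "import sys; print('%d.%d' % sys.version_info[:2])"],
        stdout=subprocess.PIPE,
        universal_newlines=True,
        check=True,
    )
    return result.stdout.strip()


def versions_match(driver_python, worker_python):
    """True if both interpreters report the same major.minor version."""
    return interpreter_version(driver_python) == interpreter_version(worker_python)


print("Current interpreter:", interpreter_version(sys.executable))
```

For this guide's setup, `versions_match(os.environ['PYSPARK_DRIVER_PYTHON'], os.environ['PYSPARK_PYTHON'])` should return True once the two variables point to the same Python version.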