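Later articles will be published on the author's WeChat official account.

GitHub repository: https://github.com/fz861062923/TensorFlow

Experiment platform: Google Colab

Install the GPU version of TensorFlow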

!pip install tensorflow-gpu

Collecting tensorflow-gpu

Downloading https://files.pythonhosted.org/packages/55/7e/bec4d62e9dc95e828922c6cec38acd9461af8abe749f7c9def25ec4b2fdb/tensorflow_gpu-1.12.0-cp36-cp36m-manylinux1_x86_64.whl (281.7MB)

100% |████████████████████████████████| 281.7MB 66kB/s

Requirement already satisfied: keras-preprocessing>=1.0.5 in /usr/local/lib/python3.6/dist-packages (from tensorflow-gpu) (1.0.9)

Requirement already satisfied: grpcio>=1.8.6 in /usr/local/lib/python3.6/dist-packages (from tensorflow-gpu) (1.15.0)

Requirement already satisfied: absl-py>=0.1.6 in /usr/local/lib/python3.6/dist-packages (from tensorflow-gpu) (0.7.0)

Requirement already satisfied: protobuf>=3.6.1 in /usr/local/lib/python3.6/dist-packages (from tensorflow-gpu) (3.6.1)

Requirement already satisfied: tensorboard<1.13.0,>=1.12.0 in /usr/local/lib/python3.6/dist-packages (from tensorflow-gpu) (1.12.2)

Requirement already satisfied: termcolor>=1.1.0 in /usr/local/lib/python3.6/dist-packages (from tensorflow-gpu) (1.1.0)

Requirement already satisfied: six>=1.10.0 in /usr/local/lib/python3.6/dist-packages (from tensorflow-gpu) (1.11.0)

Requirement already satisfied: keras-applications>=1.0.6 in /usr/local/lib/python3.6/dist-packages (from tensorflow-gpu) (1.0.7)

Requirement already satisfied: gast>=0.2.0 in /usr/local/lib/python3.6/dist-packages (from tensorflow-gpu) (0.2.2)

Requirement already satisfied: numpy>=1.13.3 in /usr/local/lib/python3.6/dist-packages (from tensorflow-gpu) (1.14.6)

Requirement already satisfied: wheel>=0.26 in /usr/local/lib/python3.6/dist-packages (from tensorflow-gpu) (0.32.3)

Requirement already satisfied: astor>=0.6.0 in /usr/local/lib/python3.6/dist-packages (from tensorflow-gpu) (0.7.1)

Requirement already satisfied: setuptools in /usr/local/lib/python3.6/dist-packages (from protobuf>=3.6.1->tensorflow-gpu) (40.8.0)

Requirement already satisfied: werkzeug>=0.11.10 in /usr/local/lib/python3.6/dist-packages (from tensorboard<1.13.0,>=1.12.0->tensorflow-gpu) (0.14.1)

Requirement already satisfied: markdown>=2.6.8 in /usr/local/lib/python3.6/dist-packages (from tensorboard<1.13.0,>=1.12.0->tensorflow-gpu) (3.0.1)

Requirement already satisfied: h5py in /usr/local/lib/python3.6/dist-packages (from keras-applications>=1.0.6->tensorflow-gpu) (2.8.0)

Installing collected packages: tensorflow-gpu

Successfully installed tensorflow-gpu-1.12.0

Install Keras

!pip install keras

Requirement already satisfied: keras in /usr/local/lib/python3.6/dist-packages (2.2.4)

Requirement already satisfied: pyyaml in /usr/local/lib/python3.6/dist-packages (from keras) (3.13)

Requirement already satisfied: keras-applications>=1.0.6 in /usr/local/lib/python3.6/dist-packages (from keras) (1.0.7)

Requirement already satisfied: h5py in /usr/local/lib/python3.6/dist-packages (from keras) (2.8.0)

Requirement already satisfied: keras-preprocessing>=1.0.5 in /usr/local/lib/python3.6/dist-packages (from keras) (1.0.9)

Requirement already satisfied: scipy>=0.14 in /usr/local/lib/python3.6/dist-packages (from keras) (1.1.0)

Requirement already satisfied: six>=1.9.0 in /usr/local/lib/python3.6/dist-packages (from keras) (1.11.0)

Requirement already satisfied: numpy>=1.9.1 in /usr/local/lib/python3.6/dist-packages (from keras) (1.14.6)

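Inspect GPU information with TensorFlow

Check whether a GPU is present

import tensorflow as tf  # assumed import; the original cell that imports TensorFlow is not shown
gpu_device_name = tf.test.gpu_device_name()
print(gpu_device_name)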

/device:GPU:0

Check whether the GPU is usable

tf.test.is_gpu_available()

True
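
The calls above are from TensorFlow 1.12, the version installed in this post. In TensorFlow 2.x, `tf.test.is_gpu_available()` is deprecated in favor of `tf.config.list_physical_devices('GPU')`. The following is a minimal sketch of the newer check, assuming TensorFlow 2.1 or later; the helper name `list_gpu_names` is hypothetical and not part of the original notebook, and treating a missing TensorFlow install as "no GPU" is an assumption made so the snippet runs anywhere.

```python
def list_gpu_names():
    """Return the names of GPUs visible to TensorFlow (empty list if none)."""
    try:
        import tensorflow as tf
    except ImportError:
        # Assumption: treat a missing TensorFlow install as "no GPU visible".
        return []
    # tf.config.list_physical_devices is available in TensorFlow 2.1 and later.
    return [device.name for device in tf.config.list_physical_devices("GPU")]


print(list_gpu_names())
```

An empty list means TensorFlow sees no GPU; on Colab this usually means the runtime type has not been switched to GPU.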

List local device information

from tensorflow.python.client import device_lib

# List all local devices
local_device_protos = device_lib.list_local_devices()
print(local_device_protos)

# Print only the GPU devices (the notebook also echoes the comprehension's value, [None])
[print(x) for x in local_device_protos if x.device_type == 'GPU']

[name: "/device:CPU:0"

device_type: "CPU"

memory_limit: 268435456

locality {

}

incarnation: 94770586443875998

, name: "/device:XLA_CPU:0"

device_type: "XLA_CPU"

memory_limit: 17179869184

locality {

}

incarnation: 3717203045672747445

physical_device_desc: "device: XLA_CPU device"

, name: "/device:XLA_GPU:0"

device_type: "XLA_GPU"

memory_limit: 17179869184

locality {

}

incarnation: 13950212339754898365

physical_device_desc: "device: XLA_GPU device"

, name: "/device:GPU:0"

device_type: "GPU"

memory_limit: 11276946637

locality {

bus_id: 1

links {

}

}

incarnation: 3242165785135882586

physical_device_desc: "device: 0, name: Tesla K80, pci bus id: 0000:00:04.0, compute capability: 3.7"

]

name: "/device:GPU:0"

device_type: "GPU"

memory_limit: 11276946637

locality {

bus_id: 1

links {

}

}

incarnation: 3242165785135882586

physical_device_desc: "device: 0, name: Tesla K80, pci bus id: 0000:00:04.0, compute capability: 3.7"

[None]

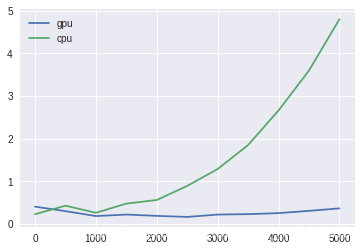

Compare GPU and CPU performance

import time

def performanceTest(device_name, size):
    with tf.device(device_name):  # pin the ops below to the chosen device
        W = tf.random_normal([size, size], name='W')  # W matrix filled with random values
        X = tf.random_normal([size, size], name='X')  # X matrix filled with random values
        mul = tf.matmul(W, X, name='mul')
        sum_result = tf.reduce_sum(mul, name='sum')  # sum all elements of mul

    startTime = time.time()  # record the start time
    tfconfig = tf.ConfigProto(log_device_placement=True)  # log which device each op is placed on
    with tf.Session(config=tfconfig) as sess:
        result = sess.run(sum_result)
    takeTimes = time.time() - startTime
    print(device_name, " size=", size, "Time:", takeTimes)
    return takeTimes  # return the elapsed time in seconds

g=performanceTest("/gpu:0",100)

c=performanceTest("/cpu:0",100)

/gpu:0 size= 100 Time: 0.7048304080963135

/cpu:0 size= 100 Time: 0.038219451904296875

g=performanceTest("/gpu:0",200)

c=performanceTest("/cpu:0",200)

/gpu:0 size= 200 Time: 0.01403498649597168

/cpu:0 size= 200 Time: 0.0194242000579834

g=performanceTest("/gpu:0",1000)

c=performanceTest("/cpu:0",1000)

/gpu:0 size= 1000 Time: 0.01311945915222168

/cpu:0 size= 1000 Time: 0.1013486385345459

g=performanceTest("/gpu:0",5000)

c=performanceTest("/cpu:0",5000)

/gpu:0 size= 5000 Time: 0.17576313018798828

/cpu:0 size= 5000 Time: 3.400740146636963
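
The first GPU call (size 100) takes about 0.70 s, while the next GPU call (size 200) takes about 0.014 s. This suggests the first measurement includes one-time startup cost, most likely GPU initialization, since the timer starts before the session is created. A common way to reduce this effect is to run the workload once untimed and then report the median of several timed runs. The sketch below shows that pattern with the standard library only. The helper `time_call` and the stand-in `workload` are hypothetical names for illustration; in the notebook, the timed function would wrap `sess.run(sum_result)`.

```python
import statistics
import time


def time_call(fn, warmup=1, repeats=5):
    """Call fn() `warmup` times untimed, then return the median of `repeats` timed calls."""
    for _ in range(warmup):
        fn()  # warm-up runs absorb one-time initialization cost
    samples = []
    for _ in range(repeats):
        start = time.perf_counter()
        fn()
        samples.append(time.perf_counter() - start)
    return statistics.median(samples)


def workload():
    # Stand-in workload so the sketch runs without TensorFlow.
    return sum(i * i for i in range(100_000))


elapsed = time_call(workload)
print(f"median of 5 timed runs: {elapsed:.6f} s")
```

The median is used instead of the mean so that a single slow run does not dominate the result.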

gpu_set = []
cpu_set = []
i_set = []
for i in range(0, 5001, 500):
    g = performanceTest("/gpu:0", i)
    c = performanceTest("/cpu:0", i)
    gpu_set.append(g)
    cpu_set.append(c)
    i_set.append(i)
    print("##########################")

/gpu:0 size= 0 Time: 0.4013850688934326

/cpu:0 size= 0 Time: 0.22393250465393066

##########################

/gpu:0 size= 500 Time: 0.296217679977417

/cpu:0 size= 500 Time: 0.4245729446411133

##########################

/gpu:0 size= 1000 Time: 0.18050003051757812

/cpu:0 size= 1000 Time: 0.2570662498474121

##########################

/gpu:0 size= 1500 Time: 0.21580886840820312

/cpu:0 size= 1500 Time: 0.4735269546508789

##########################

/gpu:0 size= 2000 Time: 0.18517041206359863

/cpu:0 size= 2000 Time: 0.5582160949707031

##########################

/gpu:0 size= 2500 Time: 0.16060090065002441

/cpu:0 size= 2500 Time: 0.8890912532806396

##########################

/gpu:0 size= 3000 Time: 0.2157294750213623

/cpu:0 size= 3000 Time: 1.2854430675506592

##########################

/gpu:0 size= 3500 Time: 0.2256307601928711

/cpu:0 size= 3500 Time: 1.849276065826416

##########################

/gpu:0 size= 4000 Time: 0.24950551986694336

/cpu:0 size= 4000 Time: 2.6612839698791504

##########################

/gpu:0 size= 4500 Time: 0.3029475212097168

/cpu:0 size= 4500 Time: 3.5977590084075928

##########################

/gpu:0 size= 5000 Time: 0.361494779586792

/cpu:0 size= 5000 Time: 4.803512334823608

##########################
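
To make the trend in the output above easier to read, the CPU-to-GPU time ratio can be computed for each matrix size. The sketch below uses the timings printed by the loop, rounded to four decimals, and skips size 0 because it does no real work. The names `results` and `speedups` are introduced here for illustration. The ratio grows from roughly 1.4x at size 500 to roughly 13x at size 5000.

```python
# (size, gpu_seconds, cpu_seconds), copied from the loop output and rounded to 4 decimals
results = [
    (500, 0.2962, 0.4246),
    (1000, 0.1805, 0.2571),
    (1500, 0.2158, 0.4735),
    (2000, 0.1852, 0.5582),
    (2500, 0.1606, 0.8891),
    (3000, 0.2157, 1.2854),
    (3500, 0.2256, 1.8493),
    (4000, 0.2495, 2.6613),
    (4500, 0.3029, 3.5978),
    (5000, 0.3615, 4.8035),
]

# Speedup = CPU time divided by GPU time; values above 1 mean the GPU was faster.
speedups = {size: cpu_s / gpu_s for size, gpu_s, cpu_s in results}

for size, ratio in speedups.items():
    print(f"size={size:5d}  speedup={ratio:5.2f}x")
```

These ratios come from single untimed-warm-up-free runs, so they are indicative only and will vary between sessions.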

Visualize the results

%matplotlib inline

import matplotlib.pyplot as plt

fig = plt.gcf()

fig.set_size_inches(6,4)

plt.plot(i_set, gpu_set, label = 'gpu')

plt.plot(i_set, cpu_set, label = 'cpu')

plt.legend()

<matplotlib.legend.Legend at 0x7f43ab152c88>