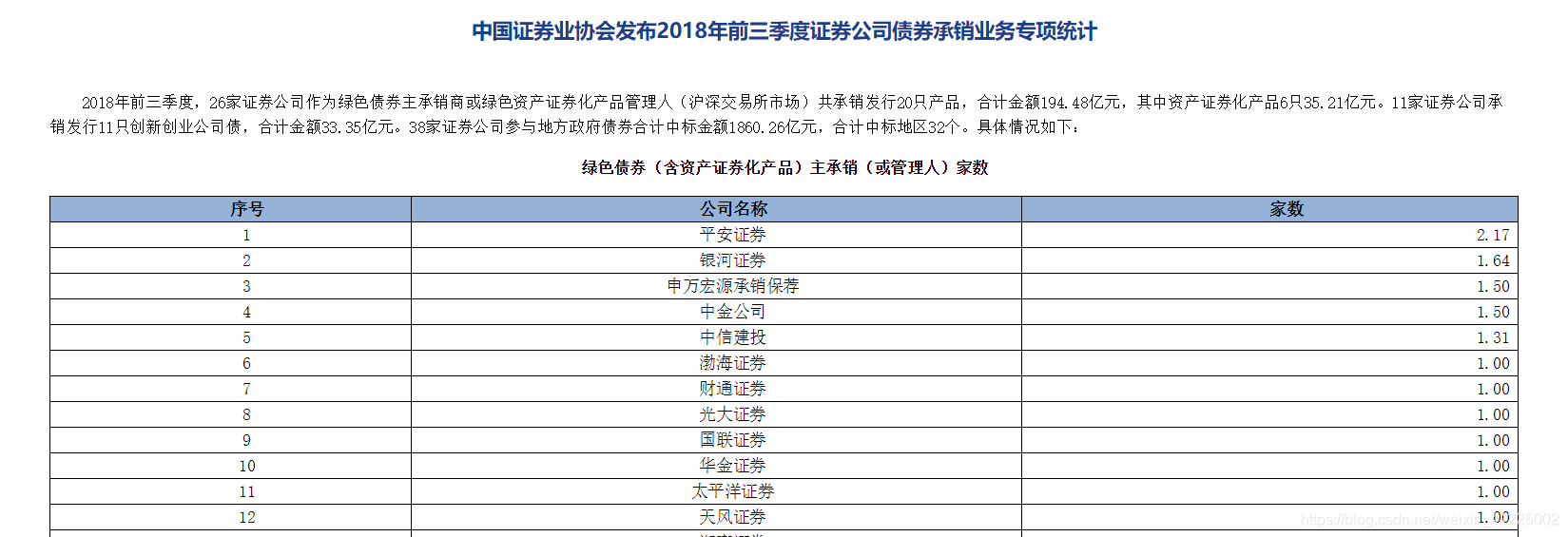

以此网站为例

基础代码如下:

from lxml import etree

import requests

import csv

# 检查url地址

def check_link(url):

try:

ua = "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/72.0.3626.121 Safari/537.36"

Cookie = "__jsluid=a0ff288cfece0ec1cb5e22c4a24a6675; __jsl_clearance=1555571859.427|0|xIYeAeXRX%2BFS334V1evcRQStJdA%3D"

Host = "www.sac.net.cn"

r = requests.get(url, headers={'User-agent': ua, 'Cookie': Cookie, 'Host': Host})

# 设置头部信息

r.raise_for_status()

r.encoding = "utf-8"

#utf-8编码,具体根据实际情况而定

return r.text#返回文本,传入get_content

except:

print('无法链接服务器!!!')

# 爬取资源

def get_contents(text):

mytree = etree.HTML(text)#解析

file_names =["1", "2", "3", "4", "5", "6", "7", "8", "9", "10", "11"]

#命名随意

tables = mytree.xpath("//table[@class='MsoNormalTable']")#定位表格,返回列表

for i in range(len(tables)):#循环表格

onetable = []

trs = tables[i].xpath('.//tr')#取出所有tr标签

for tr in trs:

ui = []

for td in tr:

texts = td.xpath(".//text()")#取出所有td标签下的文本

mm = []

#对文本经行处理

for text in texts:

text = text.strip(" ")

if u'\u4e00' <= text <= u'\u9fff' or text.isdigit() or '.'in text :

mm.append(text)

ui.append(mm)

print(ui) # 一行数据

onetable.append(ui)#整张表格

# print("start onetable:")

# print(onetable)

save_contents(file_names[i], onetable)

# print("end")

# exit()

yield onetable

# 保存资源

def save_contents(file_name,urlist):

with open(file_name, 'w', newline='') as f:

writer = csv.writer(f)#打开文件(一张表格)

try:

for i in range(len(urlist)):#循环表格每一行

print(urlist[i])

writer.writerow([urlist[i][0][0], urlist[i][1][0], urlist[i][2][0]])#写入,三列

except:

writer.writerow([urlist[i][0][0]])#只有一列的情况

def main():

url = "http://www.sac.net.cn/hysj/zqgsyjpm/201811/t20181114_137039.html"

text = check_link(url)

get_contents(text)

main()

1.取出的表格里的text列表有可能存在\n等字符,所以加了一个判断是否为中文和数字的方法

2.目前运行结果保存在程序路径下,如果需要更改路径,可以修改save_content函数

3.文件名也可以传入,添加一个参数就可以了

修改代码如下

# 保存资源

def save_contents(file_path,file_name,urlist):

if not os.path.exists(file_path):#是否存在文件夹,不存在就创建

os.makedirs(file_path)

path = file_path + os.path.sep + '{file_name}'.format(file_name=file_name + ".csv")

with open(path, 'w', newline='') as f:

writer = csv.writer(f)

try:

for i in range(len(urlist)):

writer.writerow([urlist[i][0][0], urlist[i][1][0], urlist[i][2][0]])

except:

try:

writer.writerow([urlist[i][0][0]])

except:

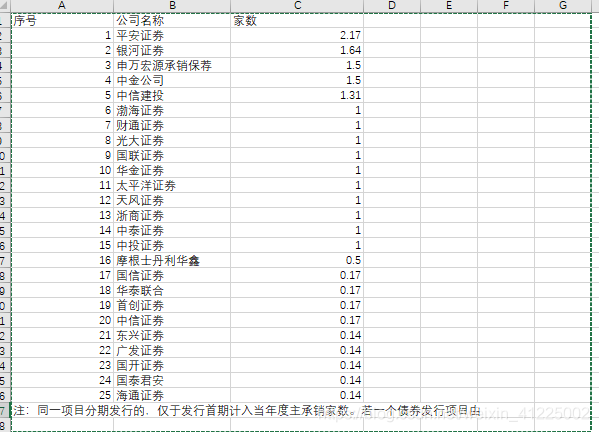

pass最后运行结果: