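The code below uses a fully connected neural network rather than a convolutional neural network. Working through it helps build a solid understanding of how the two differ and how they relate.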

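When running the MNIST code under TensorFlow 1.11, note that the following two lines are deprecated in newer TensorFlow versions:

```python
from tensorflow.examples.tutorials.mnist import input_data
mnist = input_data.read_data_sets("/MNIST_data/", one_hot=True)
```

Running them prints the warnings below, and the MNIST dataset could not be loaded: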

```
WARNING:tensorflow:From F:/MachineLearning_DeepLearning/Tensorflow/深度学习之TensorFlow配套资源/随书资源/代码/7-5 mnist多层分类.py:12: read_data_sets (from tensorflow.contrib.learn.python.learn.datasets.mnist) is deprecated and will be removed in a future version.
Instructions for updating:
Please use alternatives such as official/mnist/dataset.py from tensorflow/models.
WARNING:tensorflow:From D:\ProgramData\Anaconda3\envs\keras\lib\site-packages\tensorflow\contrib\learn\python\learn\datasets\mnist.py:260: maybe_download (from tensorflow.contrib.learn.python.learn.datasets.base) is deprecated and will be removed in a future version.
Instructions for updating:
Please write your own downloading logic.
WARNING:tensorflow:From D:\ProgramData\Anaconda3\envs\keras\lib\site-packages\tensorflow\contrib\learn\python\learn\datasets\base.py:252: _internal_retry.<locals>.wrap.<locals>.wrapped_fn (from tensorflow.contrib.learn.python.learn.datasets.base) is deprecated and will be removed in a future version.
Instructions for updating:
Please use urllib or similar directly.
```

tensorflow.examples.tutorials is now deprecated; tensorflow.keras.datasets is recommended instead. The code is as follows:

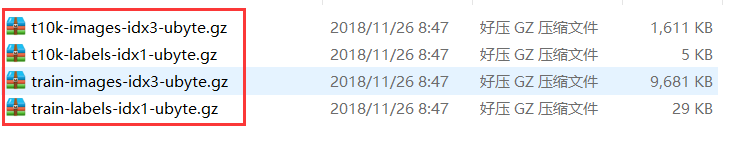

This downloads the MNIST dataset automatically, but the resulting format differs from what input_data produced. The previous dataset format was:

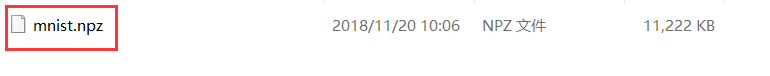

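The difference exists because TensorFlow's read_data_sets() reads the compressed archives directly and processes the data itself; see the read_data_sets source in other TensorFlow versions for details. The MNIST data downloaded by the following code has this format:

```python
import tensorflow as tf

mnist = tf.keras.datasets.mnist
(X_train, y_train), (X_test, y_test) = mnist.load_data()
```

Note: the MNIST dataset loaded this way is not in the same format as the one returned by TensorFlow's input_data. The labels are not one-hot encoded, which causes an error during training: the loss uses softmax_cross_entropy_with_logits, which requires labels of shape [None, n_classes], here [None, 10]: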

```python
cost = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits(logits=pred, labels=y))
```

whereas the train and test data obtained with the code above have these shapes:

```
X_train.shape: (60000, 28, 28)
X_test.shape: (10000, 28, 28)
y_train.shape: (60000,)
y_test.shape: (10000,)
```

y_train and y_test (the labels) have shapes (60000,) and (10000,) respectively, i.e. they are one-dimensional, while the required label shape is [None, n_classes].
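To see why the label shape matters, here is a small NumPy sketch (not TensorFlow itself) of the math behind softmax cross-entropy. With one-hot labels of shape [N, 10], as softmax_cross_entropy_with_logits expects, the per-example loss is -sum(labels * log softmax(logits)). With integer labels of shape [N], like y_train from load_data(), the same value comes from picking out the log-probability of the true class, which is what tf.nn.sparse_softmax_cross_entropy_with_logits computes in TF 1.x. The helper names are illustrative, and the sparse variant is an alternative to, not part of, the original code.

```python
import numpy as np

def softmax(logits):
    # Subtract the row max for numerical stability before exponentiating
    z = logits - logits.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def softmax_xent_onehot(logits, onehot_labels):
    # Same math as softmax_cross_entropy_with_logits:
    # labels must have the same shape as logits, i.e. [N, n_classes]
    assert logits.shape == onehot_labels.shape
    return -(onehot_labels * np.log(softmax(logits))).sum(axis=1)

def softmax_xent_sparse(logits, int_labels):
    # Integer labels of shape [N] index the true-class probability directly
    p = softmax(logits)
    return -np.log(p[np.arange(len(int_labels)), int_labels])

logits = np.random.RandomState(0).randn(4, 10)
y_int = np.array([3, 0, 9, 1])   # shape (4,), like y_train from load_data()
y_onehot = np.eye(10)[y_int]     # shape (4, 10), what the one-hot loss needs

print(np.allclose(softmax_xent_onehot(logits, y_onehot),
                  softmax_xent_sparse(logits, y_int)))  # True
```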

This is where one-hot encoding comes in. In older TensorFlow code, mnist = input_data.read_data_sets("/MNIST_data/", one_hot=True) handles it in one step by passing one_hot=True when loading MNIST. Newer TensorFlow no longer supports that way of loading MNIST, so we need to define our own onehot function:

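```python
import numpy as np
import tensorflow as tf
from sklearn.utils import shuffle
from sklearn.preprocessing import OneHotEncoder

mnist = tf.keras.datasets.mnist
(X_train, y_train), (X_test, y_test) = mnist.load_data()

n_classes = 10  # MNIST classes 0-9; used by MNISTLable_TO_ONEHOT below

def onehot(y, start, end, categories='auto'):
    # Fit the encoder on every label in [start, end) so all 10 columns exist
    # (the categories argument requires scikit-learn >= 0.20)
    ohot = OneHotEncoder(categories=categories)
    a = np.linspace(start, end - 1, end - start)
    b = np.reshape(a, [-1, 1]).astype(np.int32)
    ohot.fit(b)
    # transform() returns a sparse matrix; convert it to a dense array
    c = ohot.transform(y).toarray()
    return c

def MNISTLable_TO_ONEHOT(X_Train, Y_Train, X_Test, Y_Test, shuff=True):
    # OneHotEncoder expects a 2-D column of shape [N, 1]
    Y_Train = np.reshape(Y_Train, [-1, 1])
    Y_Test = np.reshape(Y_Test, [-1, 1])
    Y_Train = onehot(Y_Train.astype(np.int32), 0, n_classes)
    Y_Test = onehot(Y_Test.astype(np.int32), 0, n_classes)
    if shuff:
        # sklearn's shuffle applies the same permutation to images and labels
        X_Train, Y_Train = shuffle(X_Train, Y_Train)
        X_Test, Y_Test = shuffle(X_Test, Y_Test)
    return X_Train, Y_Train, X_Test, Y_Test

X_train, y_train, X_test, y_test = MNISTLable_TO_ONEHOT(X_train, y_train, X_test, y_test)
```

The code above one-hot encodes and shuffles the MNIST data, but we are not done yet: the way batches are loaded also has to change.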
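The same preprocessing can also be sketched with NumPy alone, without fitting an encoder: indexing an identity matrix by the integer labels yields the one-hot rows, and applying a single permutation to both arrays keeps images and labels aligned when shuffling. to_onehot_and_shuffle is a hypothetical helper, not part of the original code, and unlike sklearn.utils.shuffle as called above it takes an explicit seed.

```python
import numpy as np

def to_onehot_and_shuffle(X_train, y_train, X_test, y_test,
                          n_classes=10, shuffle=True, seed=None):
    """Hypothetical NumPy-only counterpart of MNISTLable_TO_ONEHOT."""
    # Row i of the identity matrix is the one-hot vector for class i
    eye = np.eye(n_classes, dtype=np.float32)
    y_train = eye[y_train.astype(np.int64)]
    y_test = eye[y_test.astype(np.int64)]
    if shuffle:
        rng = np.random.RandomState(seed)
        # One permutation per split, applied to images and labels together
        p = rng.permutation(len(X_train))
        X_train, y_train = X_train[p], y_train[p]
        p = rng.permutation(len(X_test))
        X_test, y_test = X_test[p], y_test[p]
    return X_train, y_train, X_test, y_test

# Tiny fake data: image k has pixel [0, 0] == 4 * k and label k
X_tr = np.arange(5 * 2 * 2).reshape(5, 2, 2)
X_te = np.arange(3 * 2 * 2).reshape(3, 2, 2)
Xt, yt, Xs, ys = to_onehot_and_shuffle(X_tr, np.arange(5), X_te, np.arange(3),
                                       n_classes=5, seed=0)
```

After shuffling, each image still sits next to its own label, which is the property the training loop relies on.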

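The previous loading code is concise, but it no longer works: the mnist object loaded our way has no train attribute or next_batch method, so batched training has to be implemented by hand. The old code:

```python
total_batch = int(mnist.train.num_examples/batch_size)
# Iterate over the entire dataset
for i in range(total_batch):
    batch_x, batch_y = mnist.train.next_batch(batch_size)
```

The modified code: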

```python
total_batch = int(X_train.shape[0]/batch_size)
# Iterate over the entire dataset
for i in range(total_batch):
    # batch_x, batch_y = mnist.train.next_batch(batch_size)
    # Slice the i-th batch out of the NumPy arrays
    batch_x = X_train[i*batch_size:(i+1)*batch_size,:]
    batch_x = np.reshape(batch_x,[-1,28*28])
    batch_y = y_train[i*batch_size:(i+1)*batch_size,:]
```

Note the line batch_x = np.reshape(batch_x,[-1,28*28]) in the code above. Sliced directly from X_train, batch_x has shape [batch_size, 28, 28], which does not match the input placeholder we defined:

```python
n_input = 784 # MNIST data input (img shape: 28*28)
x = tf.placeholder("float", [None, n_input])
```

So batch_x has to be reshaped:

```python
batch_x = np.reshape(batch_x,[-1,28*28])
```

Don't forget to reshape X_test as well:

```python
X_test = np.reshape(X_test,[-1,28*28])
```

That covers roughly all the changes needed.
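The slicing and reshaping above can be wrapped in a small generator that plays the role of mnist.train.next_batch. This is a sketch; iterate_minibatches and its drop_last flag are hypothetical names, not part of TensorFlow or the original code. With drop_last=True it reproduces total_batch = int(X_train.shape[0]/batch_size), which skips any final partial batch (for 60000 training images and batch_size = 100, nothing is dropped).

```python
import numpy as np

def iterate_minibatches(X, Y, batch_size, drop_last=True):
    """Hypothetical stand-in for mnist.train.next_batch.

    Yields (batch_x, batch_y), with images flattened from [B, 28, 28] to [B, 784].
    """
    n = X.shape[0]
    # Mirror int(n / batch_size) full batches unless drop_last is False
    stop = (n // batch_size) * batch_size if drop_last else n
    for start in range(0, stop, batch_size):
        batch_x = X[start:start + batch_size]
        # Flatten each image so it matches the [None, n_input] placeholder
        batch_x = batch_x.reshape(batch_x.shape[0], -1)
        batch_y = Y[start:start + batch_size]
        yield batch_x, batch_y

X = np.zeros((250, 28, 28))
Y = np.zeros((250, 10))
full = list(iterate_minibatches(X, Y, 100))                    # 2 batches
all_ = list(iterate_minibatches(X, Y, 100, drop_last=False))   # 3 batches, last has 50 rows
```

Inside the training loop it would replace the index arithmetic, e.g. for batch_x, batch_y in iterate_minibatches(X_train, y_train, batch_size): followed by the same sess.run call.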

---

The code below prints the test accuracy, followed by the true and predicted values of the first 30 test digits:

```python
print("Test Accuracy:", accuracy.eval({x: X_test, y: y_test}))
print(sess.run(tf.argmax(y_test[:30], 1)), "Real Number")
print(sess.run(tf.argmax(pred[:30], 1), feed_dict={x: X_test, y: y_test}), "Prediction Number")
```

The complete code is as follows:

```python
import tensorflow as tf
import numpy as np
from sklearn.utils import shuffle
from sklearn.preprocessing import OneHotEncoder

# Load the MNIST dataset
# from tensorflow.examples.tutorials.mnist import input_data
# mnist = input_data.read_data_sets("/MNIST_data/", one_hot=True)
mnist = tf.keras.datasets.mnist
(X_train, y_train), (X_test, y_test) = mnist.load_data()
print('X_train.shape:', X_train.shape)
print('X_test.shape:', X_test.shape)
print('y_train.shape:', y_train.shape)
print('y_test.shape:', y_test.shape)

# Hyperparameters
learning_rate = 0.001
training_epochs = 50
batch_size = 100
display_step = 1

# Network Parameters
n_hidden_1 = 256  # 1st layer number of features
n_hidden_2 = 256  # 2nd layer number of features
n_input = 784     # MNIST data input (img shape: 28*28)
n_classes = 10    # MNIST classes (digits 0-9, 10 in total)

def onehot(y, start, end, categories='auto'):
    # Fit on every label in [start, end); categories requires scikit-learn >= 0.20
    ohot = OneHotEncoder(categories=categories)
    a = np.linspace(start, end - 1, end - start)
    b = np.reshape(a, [-1, 1]).astype(np.int32)
    ohot.fit(b)
    c = ohot.transform(y).toarray()
    return c

def MNISTLable_TO_ONEHOT(X_Train, Y_Train, X_Test, Y_Test, shuff=True):
    Y_Train = np.reshape(Y_Train, [-1, 1])
    Y_Test = np.reshape(Y_Test, [-1, 1])
    Y_Train = onehot(Y_Train.astype(np.int32), 0, n_classes)
    Y_Test = onehot(Y_Test.astype(np.int32), 0, n_classes)
    if shuff:
        X_Train, Y_Train = shuffle(X_Train, Y_Train)
        X_Test, Y_Test = shuffle(X_Test, Y_Test)
    return X_Train, Y_Train, X_Test, Y_Test

X_train, y_train, X_test, y_test = MNISTLable_TO_ONEHOT(X_train, y_train, X_test, y_test)

# tf Graph input
x = tf.placeholder("float", [None, n_input])
y = tf.placeholder("float", [None, n_classes])

# Create model
def multilayer_perceptron(x, weights, biases):
    # Hidden layer with RELU activation
    layer_1 = tf.add(tf.matmul(x, weights['h1']), biases['b1'])
    layer_1 = tf.nn.relu(layer_1)
    # Hidden layer with RELU activation
    layer_2 = tf.add(tf.matmul(layer_1, weights['h2']), biases['b2'])
    layer_2 = tf.nn.relu(layer_2)
    # Output layer with linear activation
    out_layer = tf.matmul(layer_2, weights['out']) + biases['out']
    return out_layer

# Store layers weight & bias
weights = {
    'h1': tf.Variable(tf.random_normal([n_input, n_hidden_1])),
    'h2': tf.Variable(tf.random_normal([n_hidden_1, n_hidden_2])),
    'out': tf.Variable(tf.random_normal([n_hidden_2, n_classes]))
}
biases = {
    'b1': tf.Variable(tf.random_normal([n_hidden_1])),
    'b2': tf.Variable(tf.random_normal([n_hidden_2])),
    'out': tf.Variable(tf.random_normal([n_classes]))
}

# Build the model
pred = multilayer_perceptron(x, weights, biases)

# Define loss and optimizer
cost = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits(logits=pred, labels=y))
optimizer = tf.train.AdamOptimizer(learning_rate=learning_rate).minimize(cost)

# Accuracy ops are built once here rather than inside the training loop,
# so the graph does not grow with every batch
correct_prediction = tf.equal(tf.argmax(pred, 1), tf.argmax(y, 1))
accuracy = tf.reduce_mean(tf.cast(correct_prediction, "float"))

# Initialize variables
init = tf.global_variables_initializer()

# Start the session
with tf.Session() as sess:
    sess.run(init)
    # Training loop
    for epoch in range(training_epochs):
        avg_cost = 0.
        total_batch = int(X_train.shape[0] / batch_size)
        # Iterate over the entire training set
        for i in range(total_batch):
            # batch_x, batch_y = mnist.train.next_batch(batch_size)
            batch_x = X_train[i*batch_size:(i+1)*batch_size, :]
            batch_x = np.reshape(batch_x, [-1, 28*28])
            batch_y = y_train[i*batch_size:(i+1)*batch_size, :]
            # Run optimization op (backprop), cost op (to get loss value) and accuracy op
            _, c, Acc = sess.run([optimizer, cost, accuracy],
                                 feed_dict={x: batch_x, y: batch_y})
            # Compute average loss
            avg_cost += c / total_batch
        # Print training progress (Acc is the accuracy of the last batch)
        if epoch % display_step == 0:
            print("Epoch:", '%04d' % (epoch+1), "cost=",
                  "{:.9f}".format(avg_cost), "Accuracy:", Acc)
    print(" Finished!")

    # Test the model
    X_test = np.reshape(X_test, [-1, 28*28])
    print("Test Accuracy:", accuracy.eval({x: X_test, y: y_test}))
    print(sess.run(tf.argmax(y_test[:30], 1)), "Real Number")
    print(sess.run(tf.argmax(pred[:30], 1), feed_dict={x: X_test, y: y_test}), "Prediction Number")
```

Below are the results of a run that trained for 19 epochs (training_epochs set to 19 rather than the 50 in the listing). The cost is the mean of the batch costs, i.e. the average cost per epoch; the Accuracy printed each epoch is that of the last training batch only.

```
Epoch: 0001 cost= 39176.105575358 Accuracy: 0.86
Epoch: 0002 cost= 9821.440245972 Accuracy: 0.89
Epoch: 0003 cost= 6080.894665782 Accuracy: 0.94
Epoch: 0004 cost= 4205.276867029 Accuracy: 0.94
Epoch: 0005 cost= 3046.774873254 Accuracy: 0.96
Epoch: 0006 cost= 2243.320303809 Accuracy: 0.97
Epoch: 0007 cost= 1703.103301872 Accuracy: 0.98
Epoch: 0008 cost= 1278.634195075 Accuracy: 0.98
Epoch: 0009 cost= 965.120566346 Accuracy: 1.0
Epoch: 0010 cost= 761.859680518 Accuracy: 1.0
Epoch: 0011 cost= 568.961994058 Accuracy: 0.98
Epoch: 0012 cost= 471.827384580 Accuracy: 1.0
Epoch: 0013 cost= 384.026608258 Accuracy: 0.99
Epoch: 0014 cost= 275.975348798 Accuracy: 1.0
Epoch: 0015 cost= 222.884815322 Accuracy: 0.98
Epoch: 0016 cost= 191.231369177 Accuracy: 1.0
Epoch: 0017 cost= 164.544457097 Accuracy: 0.99
Epoch: 0018 cost= 153.009574097 Accuracy: 0.99
Epoch: 0019 cost= 126.814215659 Accuracy: 1.0
Finished!
Test Accuracy: 0.95
[6 4 7 1 1 3 9 1 0 1 6 7 4 2 3 3 3 9 6 4 6 2 4 5 2 3 2 1 7 2] Real Number
[6 4 7 1 1 3 9 1 0 7 6 7 4 2 3 3 3 9 6 4 6 2 4 5 2 3 2 1 7 2] Prediction Number
```
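The test accuracy is computed by comparing the argmax of the network output with the argmax of the one-hot labels and averaging the matches. A NumPy sketch of the same computation, applied to the 30 digits printed above, shows that exactly one of them (index 9: true 1, predicted 7) is wrong. accuracy_from_onehot is an illustrative name; it is fed one-hot predictions in place of logits, which works because only the argmax matters.

```python
import numpy as np

def accuracy_from_onehot(y_true_onehot, logits):
    # Same idea as tf.equal(tf.argmax(pred, 1), tf.argmax(y, 1)) + reduce_mean
    correct = np.argmax(logits, axis=1) == np.argmax(y_true_onehot, axis=1)
    return correct.astype(np.float32).mean()

# The 30 digits printed above ("Real Number" / "Prediction Number")
real = np.array([6, 4, 7, 1, 1, 3, 9, 1, 0, 1, 6, 7, 4, 2, 3,
                 3, 3, 9, 6, 4, 6, 2, 4, 5, 2, 3, 2, 1, 7, 2])
pred = np.array([6, 4, 7, 1, 1, 3, 9, 1, 0, 7, 6, 7, 4, 2, 3,
                 3, 3, 9, 6, 4, 6, 2, 4, 5, 2, 3, 2, 1, 7, 2])
eye = np.eye(10)
acc = accuracy_from_onehot(eye[real], eye[pred])  # 29 of the 30 digits match
print(acc)
print(np.flatnonzero(real != pred))               # [9]
```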
