Copyright notice: this is an original article by the blogger. Please credit the source when reposting: https://blog.csdn.net/u010647035/article/details/87865756

1. Build environment

Windows 7

JDK 1.8

Scala-2.12.4

Maven-3.6.0

Spark-2.3.0

Spark source code download: https://github.com/apache/spark
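The Spark 2.3.x build documentation states that the Maven build requires Maven 3.3.9 or newer and Java 8+. Before starting a long build, it can help to compare version numbers up front. A minimal sketch for a POSIX shell such as Git Bash; `version_ge` is a hypothetical helper, not part of Spark, and it assumes plain numeric major.minor.patch versions.

```shell
#!/bin/sh
# Hypothetical helper: succeed if dotted version $1 >= version $2.
# Sorts numerically on up to three dot-separated fields (major.minor.patch);
# if $2 comes out first (lowest), then $1 is at least $2.
version_ge() {
  lowest=$(printf '%s\n%s\n' "$2" "$1" | sort -t. -k1,1n -k2,2n -k3,3n | head -n 1)
  [ "$lowest" = "$2" ]
}

# Example: the Maven version used in this post vs. the 3.3.9 minimum.
if version_ge 3.6.0 3.3.9; then
  echo "Maven 3.6.0 meets the 3.3.9 minimum"
fi
```

To check your own installation, pass the version number printed on the first line of `mvn -v` as the first argument.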

2. Problems encountered

2.1 Running the build directly in the source root (outside Git Bash) fails with the following error

mvn -DskipTests clean package

[ERROR] Failed to execute goal org.apache.maven.plugins:maven-antrun-plugin:1.8:run (default) on project spark-core_2.11: An Ant BuildException has occured: Execute failed: java.io.IOException: Cannot run program "bash" (in directory "D:\workspace\IDEA2017\gitlab\spark-2.3.0\core"): CreateProcess error=2, The system cannot find the file specified.

[ERROR] around Ant part ...<exec executable="bash">... @ 4:27 in D:\workspace\IDEA2017\gitlab\spark-2.3.0\core\target\antrun\build-main.xml

[ERROR] -> [Help 1]

[ERROR]

[ERROR] To see the full stack trace of the errors, re-run Maven with the -e switch.

[ERROR] Re-run Maven using the -X switch to enable full debug logging.

[ERROR]

[ERROR] For more information about the errors and possible solutions, please read the following articles:

[ERROR] [Help 1] http://cwiki.apache.org/confluence/display/MAVEN/MojoExecutionException

[ERROR]

[ERROR] After correcting the problems, you can resume the build with the command

[ERROR] mvn <goals> -rf :spark-core_2.11

Solution

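Run the build from Git Bash instead. The maven-antrun-plugin step executes "bash" (see <exec executable="bash"> in the log above), which a plain Windows shell cannot find. Open Git Bash and change into the Spark source directory: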

root@Elag MINGW64 /d/workspace/IDEA2017/gitlab/spark-2.3.0

$ cd d:

root@Elag MINGW64 /d

$ cd workspace/IDEA2017/gitlab/spark-2.3.0/

root@Elag MINGW64 /d/workspace/IDEA2017/gitlab/spark-2.3.0
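Git Bash exposes the Windows drive D: as /d, so the two `cd` steps above can also be done in one. A minimal sketch of that mapping, assuming a simple drive-letter path like the one used here; `win_to_msys` is a hypothetical helper (Git Bash also ships `cygpath`, whose `-u` option performs this conversion).

```shell
#!/bin/sh
# Hypothetical helper: convert a Windows path such as D:\dir\sub into the
# form Git Bash (MSYS) uses, /d/dir/sub. Assumes a "X:\..." drive-letter path.
win_to_msys() {
  drive=$(printf '%s' "$1" | cut -c1 | tr '[:upper:]' '[:lower:]')  # "D" -> "d"
  rest=$(printf '%s' "$1" | cut -c3- | tr '\\' '/')                  # drop "D:", flip backslashes
  printf '/%s%s\n' "$drive" "$rest"
}

# Prints /d/workspace/IDEA2017/gitlab/spark-2.3.0
win_to_msys 'D:\workspace\IDEA2017\gitlab\spark-2.3.0'
```

Usage: `cd "$(win_to_msys 'D:\workspace\IDEA2017\gitlab\spark-2.3.0')"`, or simply `cd /d/workspace/IDEA2017/gitlab/spark-2.3.0`.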

2.2 Maven cannot be found

./build/mvn: line 143: /d/workspace/IDEA2017/gitlab/spark-2.3.0/build/zinc-0.3.15/bin/zinc: No such file or directory

./build/mvn: line 145: /d/workspace/IDEA2017/gitlab/spark-2.3.0/build/zinc-0.3.15/bin/zinc: No such file or directory

Using `mvn` from path: /d/Program Files/Java/apache-maven-3.6.0/bin/mvn

./build/mvn: line 157: /d/Program: No such file or directory

Solution

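The error shows that the Maven installation path contains a space (/d/Program Files/...), so the script splits it and tries to run /d/Program. Move Maven to a directory whose path has no spaces and update the environment variables accordingly.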
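A minimal pre-build check for this problem, assuming Git Bash finds `mvn` through PATH; `has_space` is a hypothetical helper, and the variable names mentioned in the warning (PATH, MAVEN_HOME) are the usual ones rather than anything this post prescribes.

```shell
#!/bin/sh
# Hypothetical helper: succeed if the given path contains a space.
has_space() {
  case "$1" in
    *" "*) return 0 ;;
    *) return 1 ;;
  esac
}

# Check the mvn that Git Bash will pick up from PATH (if any).
mvn_path=$(command -v mvn 2>/dev/null || true)
if [ -n "$mvn_path" ] && has_space "$mvn_path"; then
  echo "warning: mvn resolves to a path with spaces: $mvn_path" >&2
  echo "move Maven to a path without spaces and update PATH (and MAVEN_HOME if set)" >&2
fi
```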

2.3 Maven runs out of memory

[ERROR] Java heap space -> [Help 1]

Solution

Give Maven more memory through MAVEN_OPTS (run this in Git Bash before building):

export MAVEN_OPTS="-Xmx2g -XX:ReservedCodeCacheSize=512m"
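`export` only applies to the current Git Bash session. A minimal sketch that appends the same line to a startup file so later sessions inherit it, assuming your Git Bash setup sources `~/.bashrc`; `persist_maven_opts` is hypothetical, takes the target file as an optional first argument, and skips the write if the exact line is already present.

```shell
#!/bin/sh
# Hypothetical helper: append the MAVEN_OPTS export to a startup file
# (default: ~/.bashrc) unless the exact line is already there.
persist_maven_opts() {
  rc=${1:-"$HOME/.bashrc"}
  line='export MAVEN_OPTS="-Xmx2g -XX:ReservedCodeCacheSize=512m"'
  touch "$rc"
  # -x: match the whole line, -F: treat it as a fixed string, -q: no output
  if ! grep -qxF "$line" "$rc"; then
    printf '%s\n' "$line" >> "$rc"
  fi
}
```

Run `persist_maven_opts` once, then open a new Git Bash window (or source `~/.bashrc`).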

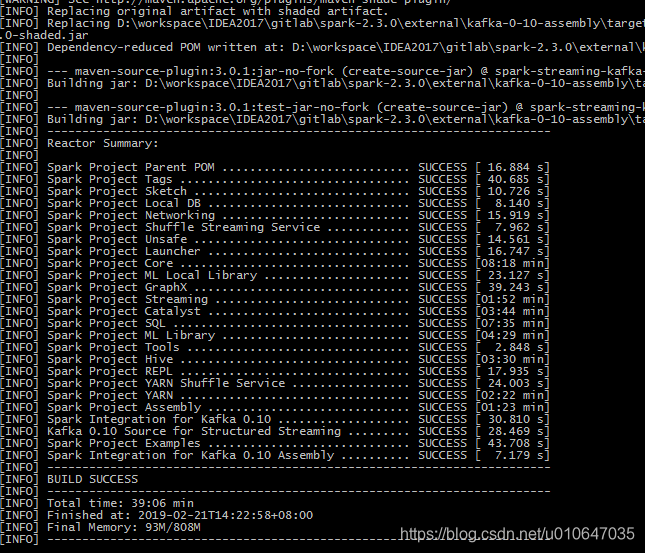

3. Build methods

3.1 Using build/mvn

./build/mvn -Pyarn -Phadoop-2.7 -Dhadoop.version=2.7.0 -DskipTests clean package
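`build/mvn` is a relative path, so the command above only works from the Spark source root. A minimal sketch of a wrapper that checks this first; `spark_build` is a hypothetical name, and any extra arguments are appended to the Maven command.

```shell
#!/bin/sh
# Hypothetical wrapper: run the build from section 3.1, but only from the
# Spark source root, where build/mvn and the top-level pom.xml live.
spark_build() {
  if [ ! -f ./build/mvn ] || [ ! -f ./pom.xml ]; then
    echo "error: not in the Spark source root (build/mvn or pom.xml missing)" >&2
    return 1
  fi
  ./build/mvn -Pyarn -Phadoop-2.7 -Dhadoop.version=2.7.0 -DskipTests clean package "$@"
}
```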

3.2 Using dev/make-distribution.sh

This builds a runnable distribution that can be deployed to a cluster. With the command below, the final package is named spark-2.3.0-bin-my_spark_2.3.0.tgz.

./dev/make-distribution.sh --name my_spark_2.3.0 --tgz -Pyarn -Phive -Phive-thriftserver -Phadoop-2.7 -Dhadoop.version=2.7.0 -DskipTests -X

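--name: custom name, which appears in the package file name

--tgz: package the distribution as a .tgz archive

-Pyarn: enable YARN support

-Phive -Phive-thriftserver: enable Hive support and the JDBC (Thrift) server

-Phadoop-2.7 -Dhadoop.version=2.7.0: build against Hadoop 2.7.0

-DskipTests: skip running the tests

-X: enable Maven's full debug logging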
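The Hadoop profile, Hadoop version and package name above are related. A minimal sketch that derives them, assuming (as in this post) that the Hadoop profile is named after the major.minor part of the Hadoop version and that the package follows the spark-<version>-bin-<name>.tgz pattern described above; `hadoop_profile` and `dist_tarball_name` are hypothetical helpers, and only profiles that actually exist in Spark's pom.xml are valid. The script only prints the command (a dry run).

```shell
#!/bin/sh
# Hypothetical helper: Maven profile for a Hadoop version, assuming the
# profile is named after major.minor (2.7.0 -> hadoop-2.7).
hadoop_profile() {
  printf 'hadoop-%s\n' "$(printf '%s' "$1" | cut -d. -f1,2)"
}

# Hypothetical helper: package name for --name NAME --tgz, following the
# spark-<version>-bin-<name>.tgz pattern reported in this post.
dist_tarball_name() {
  printf 'spark-%s-bin-%s.tgz\n' "$1" "$2"
}

hadoop_version=2.7.0
name=my_spark_2.3.0
# Dry run: print the command instead of executing it.
echo "./dev/make-distribution.sh --name $name --tgz -Pyarn -Phive -Phive-thriftserver -P$(hadoop_profile "$hadoop_version") -Dhadoop.version=$hadoop_version -DskipTests"
echo "expected package: $(dist_tarball_name 2.3.0 "$name")"
```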

3.3 Building a single submodule

Individual Spark submodules can be built with Maven's -pl option.

For example, the Spark Streaming module can be built with:

./build/mvn -pl :spark-streaming_2.11 clean install
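The `:spark-streaming_2.11` selector is the module's artifactId; `_2.11` is the Scala binary version Spark 2.3.0 builds with by default. A minimal sketch that composes such selectors, assuming the artifactId follows spark-<module>_<scala version> (check the module's pom.xml to be sure); `spark_module_id` is a hypothetical helper.

```shell
#!/bin/sh
# Hypothetical helper: build the Maven -pl selector for a Spark module.
# Assumes artifactIds of the form spark-<module>_<scala binary version>,
# defaulting to 2.11, the default Scala version for Spark 2.3.0.
spark_module_id() {
  printf ':spark-%s_%s\n' "$1" "${2:-2.11}"
}

# Prints :spark-streaming_2.11
spark_module_id streaming
```

Usage: `./build/mvn -pl "$(spark_module_id streaming)" clean install`. If the module's in-repository dependencies are not yet in the local Maven repository, adding Maven's `-am` (also-make) option builds them as well.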

Notes:

Compile or package the Spark source from Git Bash.

Make sure the local Maven path in your environment variables contains no spaces.

References:

http://spark.apache.org/docs/latest/building-spark.html#change-scala-version