I. Overview

What is a checkpoint?

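In production, Spark applications often involve a very large number of RDDs produced by transformations (for example, a single job containing 10,000 RDDs), or a transformation whose resulting RDD is itself extremely complex and slow to compute (for example, taking upwards of 1 to 5 hours). In such cases we must think about persisting the computed data. Keeping it in memory with persist is the fastest option but also the least reliable. Putting it on disk is not fully reliable either, because disks can fail. Without a fault-tolerance mechanism, a later transformation that needs the RDD again finds the cached data gone: the cache lookup, handled by CacheManager in older Spark versions, comes up empty. The data may then have to be recomputed from scratch. Checkpointing is the tool for this lack of fault tolerance in complex Spark applications like these.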

What checkpointing does

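Checkpointing exists to persist data more reliably than persist does. You choose where the checkpoint data goes, and it could be a local directory. In a normal production environment, though, it goes on HDFS, which stores multiple replicas, so the checkpoint inherits HDFS's high reliability and the data is persisted as reliably as possible.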

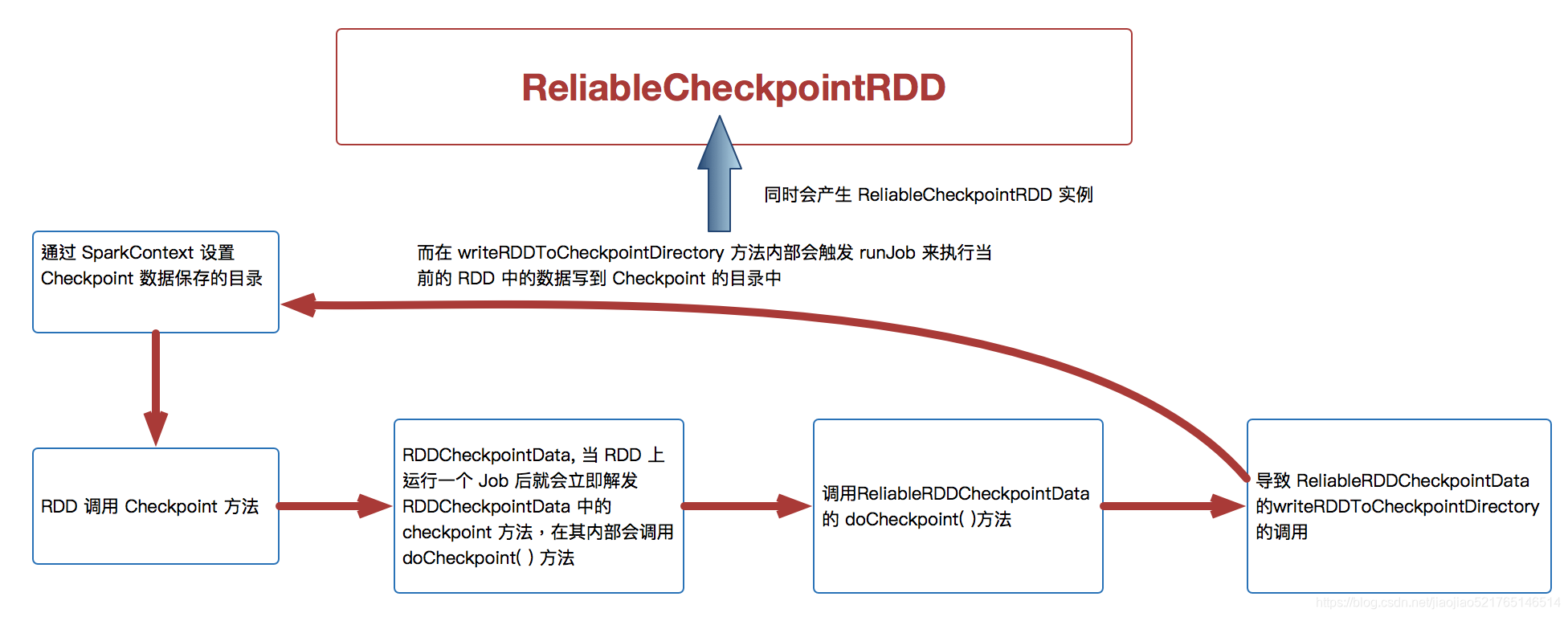

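To use checkpointing, first call SparkContext's setCheckpointDir() method to set a directory on a fault-tolerant file system such as HDFS, then call checkpoint() on the RDD. After the job that uses the RDD finishes, Spark launches a separate job. That job writes the checkpointed RDD's data to the file system set earlier, giving a highly available, fault-tolerant form of persistence.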

Checkpoint execution flow

Differences between checkpoint and persist:

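- Persisting saves the data in the BlockManager and leaves the RDD's lineage (its chain of dependencies) unchanged. Once a checkpoint completes, the RDD no longer has its original dependencies: its parent becomes a CheckpointRDD, so the lineage changes.

- Persisted data lives in memory or on local disk, where it is comparatively easy to lose. A checkpoint is normally written to a highly fault-tolerant file system such as HDFS, so the data is much safer.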
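The lineage difference can be sketched with a toy model in plain Scala, with no Spark dependency. ToyRDD, persist and checkpoint below are hypothetical stand-ins, not Spark's classes. Persisting only records a flag and keeps the dependency list. Checkpointing replaces the dependencies with a single checkpoint parent, which is roughly the shape of the lineage after a real checkpoint completes.

```scala
// Toy model of RDD lineage; ToyRDD is a hypothetical class, not Spark's RDD.
final class ToyRDD(val name: String, private var deps: List[ToyRDD]) {
  private var persisted = false

  def dependencies: List[ToyRDD] = deps
  def isPersisted: Boolean = persisted

  // persist only records a storage flag; the lineage is left untouched
  def persist(): this.type = { persisted = true; this }

  // checkpoint (once the data is saved) swaps the whole lineage for a
  // single parent that would read the saved files
  def checkpoint(): this.type = {
    deps = List(new ToyRDD(s"CheckpointRDD[$name]", Nil))
    this
  }

  // Render the lineage as nested names, similar in spirit to toDebugString
  def lineage: String =
    if (deps.isEmpty) name
    else s"$name <- (${deps.map(_.lineage).mkString(", ")})"
}

object LineageDemo {
  // textFile -> map -> filter, built fresh on every call
  def build(): ToyRDD = {
    val source = new ToyRDD("textFile", Nil)
    val mapped = new ToyRDD("map", List(source))
    new ToyRDD("filter", List(mapped))
  }
}
```

For example, LineageDemo.build().persist().lineage is "filter <- (map <- (textFile))". LineageDemo.build().checkpoint().lineage is "filter <- (CheckpointRDD[filter])".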

Note:

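If an RDD is checkpointed without being persisted, things can go badly. The job has already finished, but the intermediate RDD was not persisted, so the checkpoint job has to recompute it from scratch just to save its result. You should therefore normally persist an RDD before checkpointing it. For an RDD on which you will call checkpoint(), the recommended call is

rdd.persist(StorageLevel.DISK_ONLY). Once the RDD has been computed, it is persisted straight to disk. The subsequent checkpoint then reads the data from disk and writes it to the external file system, with no need to recompute it.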
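The cost of skipping persist can be illustrated with a toy counter (plain Scala, not Spark code). RecomputeDemo is hypothetical, and its mutable map stands in for the BlockManager. The two passes model the user's action job and the separate checkpoint job. In a real application the equivalent sequence would be sc.setCheckpointDir(...), rdd.persist(StorageLevel.DISK_ONLY), rdd.checkpoint(), and then an action.

```scala
import scala.collection.mutable

// Toy model (not Spark code): counts how often an expensive partition
// computation runs when a checkpoint job follows the user's action.
object RecomputeDemo {
  def run(persistFirst: Boolean, partitions: Int): Int = {
    var computeCalls = 0
    val cache = mutable.Map.empty[Int, Seq[Int]] // stands in for the BlockManager

    // The "expensive" computation of one partition
    def expensive(p: Int): Seq[Int] = { computeCalls += 1; Seq(p, p * 10) }

    // Mirrors the iterator() branch: use the cache only if the RDD was persisted
    def iterator(p: Int): Seq[Int] =
      if (persistFirst) cache.getOrElseUpdate(p, expensive(p)) else expensive(p)

    // Job 1: the user's action, e.g. count()
    (0 until partitions).foreach(p => iterator(p))
    // Job 2: the checkpoint job, started after job 1 finishes
    val written = (0 until partitions).map(p => iterator(p))
    require(written.length == partitions)
    computeCalls
  }
}
```

With 4 partitions, run(persistFirst = false, partitions = 4) counts 8 computations and run(persistFirst = true, partitions = 4) counts 4. Without persistence, every partition is computed twice.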

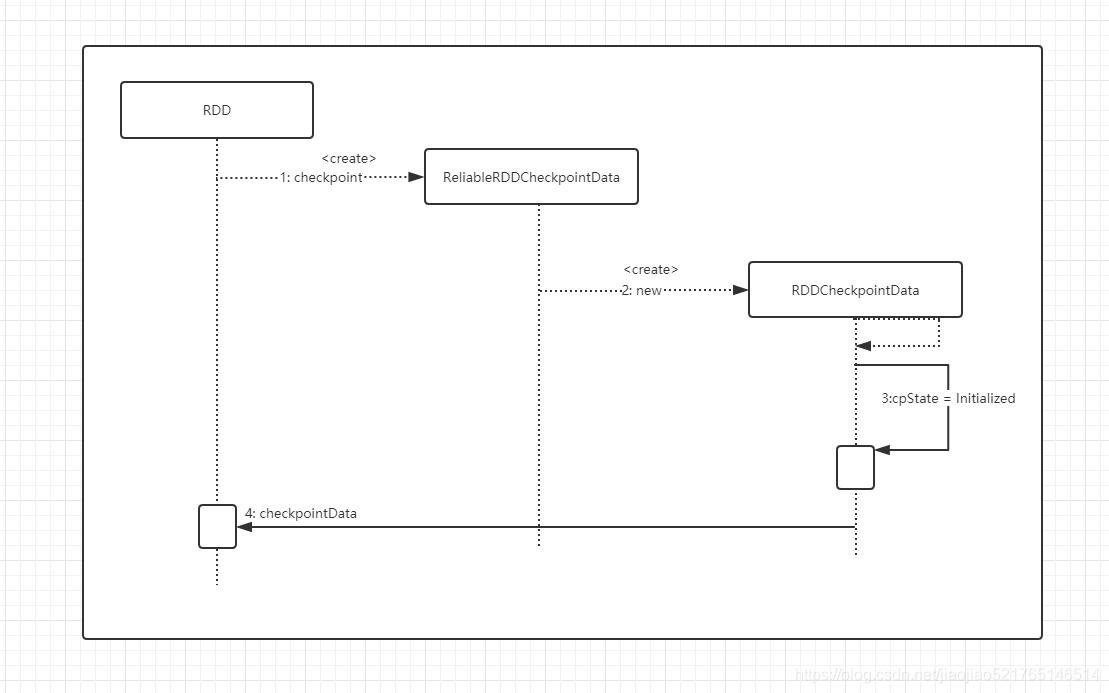

Checkpoint initialization

Source code analysis

Step 1: SparkContext.setCheckpointDir sets a checkpoint directory

Source: org.apache.spark.SparkContext.scala

```scala
def setCheckpointDir(directory: String) {
  // If we are running on a cluster, log a warning if the directory is local.
  // Otherwise, the driver may attempt to reconstruct the checkpointed RDD from
  // its own local file system, which is incorrect because the checkpoint files
  // are actually on the executor machines.
  if (!isLocal && Utils.nonLocalPaths(directory).isEmpty) {
    logWarning("Spark is not running in local mode, therefore the checkpoint directory " +
      s"must not be on the local filesystem. Directory '$directory' " +
      "appears to be on the local filesystem.")
  }

  // Use the Hadoop FileSystem API to create a randomly named (UUID) subdirectory
  // under the given directory (an HDFS directory in production)
  checkpointDir = Option(directory).map { dir =>
    val path = new Path(dir, UUID.randomUUID().toString)
    val fs = path.getFileSystem(hadoopConfiguration)
    fs.mkdirs(path)
    fs.getFileStatus(path).getPath.toString
  }
}
```
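The effect of the mkdirs call above can be reproduced on the local file system. ToyCheckpointDir is a hypothetical helper, and java.nio stands in for the Hadoop FileSystem API. Each call creates a new UUID-named subdirectory under the directory you pass, so the actual checkpoint root differs from the string given to setCheckpointDir.

```scala
import java.nio.file.{Files, Paths}
import java.util.UUID

// Local-filesystem sketch of setCheckpointDir's directory handling:
// every call creates a fresh, randomly named subdirectory under `directory`.
object ToyCheckpointDir {
  def set(directory: String): String = {
    val path = Paths.get(directory, UUID.randomUUID().toString)
    Files.createDirectories(path) // plays the role of fs.mkdirs(path)
    path.toAbsolutePath.toString  // plays the role of fs.getFileStatus(path).getPath.toString
  }
}
```

Two calls with the same root return two different subdirectories. That is why two applications can share one checkpoint root without overwriting each other's files.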

Step 2: rdd.checkpoint(), the core checkpoint method of RDD

Source: org.apache.spark.rdd.RDD.scala

```scala
def checkpoint(): Unit = RDDCheckpointData.synchronized {
  // NOTE: we use a global lock here due to complexities downstream with ensuring
  // children RDD partitions point to the correct parent partitions. In the future
  // we should revisit this consideration.
  if (context.checkpointDir.isEmpty) {
    throw new SparkException("Checkpoint directory has not been set in the SparkContext")
  } else if (checkpointData.isEmpty) {
    // Create a ReliableRDDCheckpointData (a subclass of RDDCheckpointData);
    // this only marks the RDD, no data is written yet
    checkpointData = Some(new ReliableRDDCheckpointData(this))
  }
}
```
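The guard in checkpoint() can be modeled as a toy sketch. ToyContext, ToyMarkedRDD and ToyCheckpointLock are hypothetical names, and IllegalStateException replaces SparkException. The sketch shows two properties of the real method: it fails if no directory has been set, and repeated calls create the checkpoint data only once.

```scala
// Toy version of the guard in RDD.checkpoint(); all names are hypothetical.
final class ToyContext(var checkpointDir: Option[String] = None)

// One global lock, like RDDCheckpointData.synchronized in the source
object ToyCheckpointLock

final class ToyMarkedRDD(ctx: ToyContext) {
  // Stands in for checkpointData: Option[RDDCheckpointData[T]]
  private var checkpointData: Option[String] = None
  private var created = 0

  def checkpoint(): Unit = ToyCheckpointLock.synchronized {
    if (ctx.checkpointDir.isEmpty) {
      throw new IllegalStateException("Checkpoint directory has not been set")
    } else if (checkpointData.isEmpty) {
      created += 1 // only the first successful call creates the data
      checkpointData = Some(s"ReliableRDDCheckpointData#$created")
    }
  }

  def marked: Boolean = checkpointData.isDefined
  def createdCount: Int = created
}
```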

Step 3: a ReliableRDDCheckpointData is created

```scala
// The parent class of ReliableRDDCheckpointData is RDDCheckpointData
private[spark] class ReliableRDDCheckpointData[T: ClassTag](@transient private val rdd: RDD[T])
  extends RDDCheckpointData[T](rdd) with Logging {
  ......
}
```

Step 4: the parent class RDDCheckpointData

```scala
/**
 * An RDD must pass through the stages
 *
 *   [ Initialized --> CheckpointingInProgress --> Checkpointed ]
 *
 * before it is checkpointed.
 */
private[spark] abstract class RDDCheckpointData[T: ClassTag](@transient private val rdd: RDD[T])
  extends Serializable {

  import CheckpointState._

  // The checkpoint state of the associated RDD.
  // It starts out as Initialized.
  protected var cpState = Initialized
  ......
}
```
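The three stages in the doc comment form a simple one-way state machine. The sketch below is a hypothetical stand-in for CheckpointState, which is imported in the class above. Each state has at most one successor, and Checkpointed is terminal.

```scala
// Hypothetical stand-in for Spark's CheckpointState values.
sealed trait ToyCheckpointState

object ToyCheckpointState {
  case object Initialized extends ToyCheckpointState
  case object CheckpointingInProgress extends ToyCheckpointState
  case object Checkpointed extends ToyCheckpointState

  // Each state has at most one successor; Checkpointed is terminal
  def next(s: ToyCheckpointState): Option[ToyCheckpointState] = s match {
    case Initialized             => Some(CheckpointingInProgress)
    case CheckpointingInProgress => Some(Checkpointed)
    case Checkpointed            => None
  }

  // Every state reachable from `s`, in order, starting with `s` itself
  def pathFrom(s: ToyCheckpointState): List[ToyCheckpointState] =
    s :: next(s).map(pathFrom).getOrElse(Nil)
}
```

pathFrom(Initialized) yields the three states in the order shown in the doc comment.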

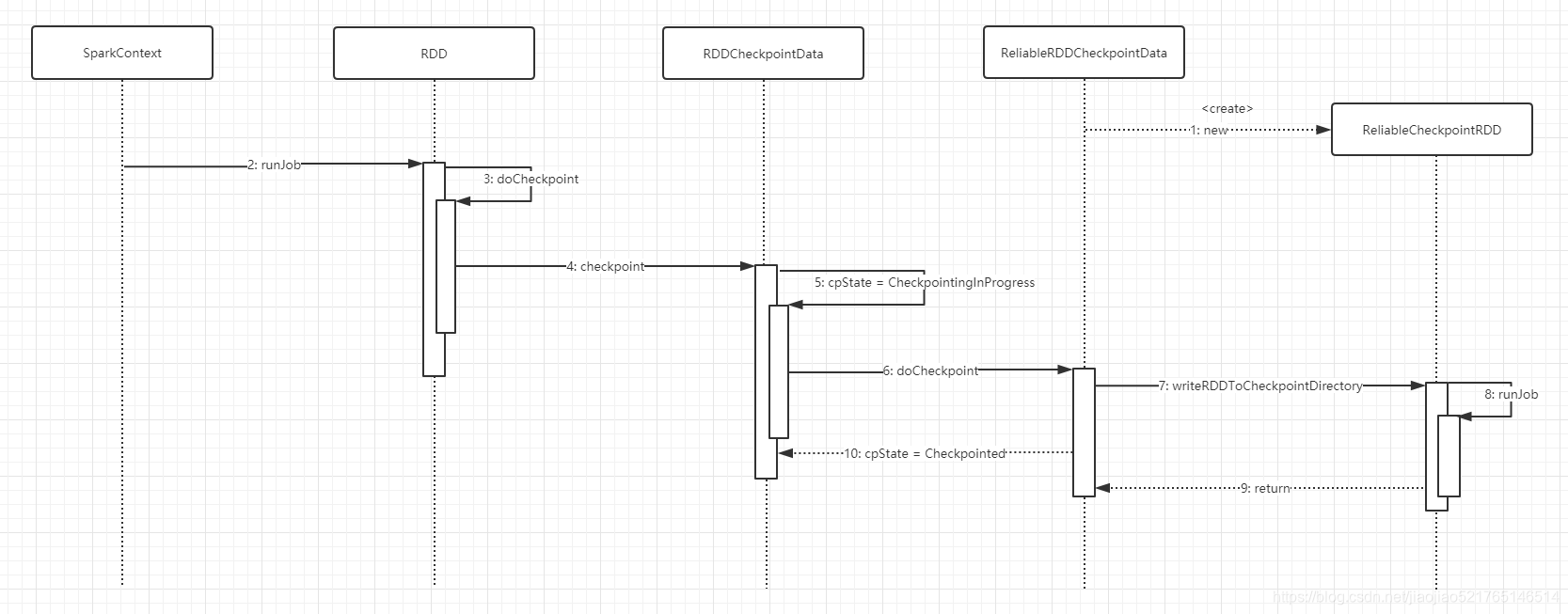

Writing checkpoint data

Step 1: running a Spark job ultimately calls SparkContext's runJob method, which submits the tasks to the executors for execution

```scala
def runJob[T, U: ClassTag](
    rdd: RDD[T],
    func: (TaskContext, Iterator[T]) => U,
    partitions: Seq[Int],
    resultHandler: (Int, U) => Unit): Unit = {
  if (stopped.get()) {
    throw new IllegalStateException("SparkContext has been shutdown")
  }
  val callSite = getCallSite
  val cleanedFunc = clean(func)
  logInfo("Starting job: " + callSite.shortForm)
  if (conf.getBoolean("spark.logLineage", false)) {
    logInfo("RDD's recursive dependencies:\n" + rdd.toDebugString)
  }
  dagScheduler.runJob(rdd, cleanedFunc, partitions, callSite, resultHandler, localProperties.get)
  progressBar.foreach(_.finishAll())
  // Only after the job has finished: walk the lineage and checkpoint any marked RDDs
  // (for reliable checkpoints this ends in ReliableRDDCheckpointData.doCheckpoint)
  rdd.doCheckpoint()
}
```

Step 2: the rdd.doCheckpoint() method

```scala
private[spark] def doCheckpoint(): Unit = {
  RDDOperationScope.withScope(sc, "checkpoint", allowNesting = false, ignoreParent = true) {
    if (!doCheckpointCalled) {
      doCheckpointCalled = true
      if (checkpointData.isDefined) {
        if (checkpointAllMarkedAncestors) {
          // TODO We can collect all the RDDs that needs to be checkpointed, and then checkpoint
          // them in parallel.
          // Checkpoint parents first because our lineage will be truncated after we
          // checkpoint ourselves
          dependencies.foreach(_.rdd.doCheckpoint())
        }
        checkpointData.get.checkpoint()
      } else {
        // This RDD is not marked: recurse into each parent RDD and call its doCheckpoint
        dependencies.foreach(_.rdd.doCheckpoint())
      }
    }
  }
}
```
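How doCheckpoint() walks the lineage after runJob finishes can be traced with a toy graph. ToyNode and CheckpointTraversal are hypothetical. The marked flag plays the role of checkpointData.isDefined, and the log records the nodes on which checkpointData.get.checkpoint() would be called. The graph is a <- b <- c <- d, with b and d marked, and the job runs on d.

```scala
import scala.collection.mutable.ListBuffer

// Toy traversal mirroring RDD.doCheckpoint(); ToyNode is hypothetical.
final class ToyNode(val name: String, val deps: List[ToyNode], val marked: Boolean) {
  private var doCheckpointCalled = false

  def doCheckpoint(allMarkedAncestors: Boolean, log: ListBuffer[String]): Unit =
    if (!doCheckpointCalled) {
      doCheckpointCalled = true
      if (marked) {
        // Parents first, since this node's lineage is truncated afterwards
        if (allMarkedAncestors) deps.foreach(_.doCheckpoint(allMarkedAncestors, log))
        log += name // stands in for checkpointData.get.checkpoint()
      } else {
        // Not marked: keep looking for marked RDDs among the parents
        deps.foreach(_.doCheckpoint(allMarkedAncestors, log))
      }
    }
}

object CheckpointTraversal {
  def runJobThenCheckpoint(allMarkedAncestors: Boolean): List[String] = {
    val a = new ToyNode("a", Nil, marked = false)
    val b = new ToyNode("b", List(a), marked = true)
    val c = new ToyNode("c", List(b), marked = false)
    val d = new ToyNode("d", List(c), marked = true)
    val log = ListBuffer.empty[String]
    // (the job on d would run here, as in SparkContext.runJob)
    d.doCheckpoint(allMarkedAncestors, log)
    log.toList
  }
}
```

By default the result is List("d"): the recursion stops at the first marked RDD on each path. With checkpointAllMarkedAncestors enabled, the result is List("b", "d"), parents first.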

Step 3: checkpointData is an Option[RDDCheckpointData[T]], so checkpointData.get.checkpoint() calls the checkpoint() method defined in RDDCheckpointData

```scala
final def checkpoint(): Unit = {
  // Guard against multiple threads checkpointing the same RDD by
  // atomically flipping the state of this RDDCheckpointData
  RDDCheckpointData.synchronized {
    if (cpState == Initialized) {
      // 1. Mark the state as checkpointing in progress
      cpState = CheckpointingInProgress
    } else {
      return
    }
  }
  // 2. Call the subclass's doCheckpoint()
  val newRDD = doCheckpoint()
  // Update our state and truncate the RDD lineage
  // 3. Mark the checkpoint as complete and clear the RDD's dependencies
  RDDCheckpointData.synchronized {
    cpRDD = Some(newRDD)
    cpState = Checkpointed
    rdd.markCheckpointed()
  }
}
```
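The synchronized state flip keeps two threads from checkpointing the same RDD twice. The toy below uses hypothetical names and plain java.util.concurrent. It starts several threads at once against a single ToyCheckpointData, and exactly one of them wins the Initialized to CheckpointingInProgress transition and performs the write. For simplicity it locks per instance, whereas Spark uses the global RDDCheckpointData lock.

```scala
import java.util.concurrent.{CountDownLatch, Executors, TimeUnit}
import java.util.concurrent.atomic.AtomicInteger

// Toy model of RDDCheckpointData.checkpoint(); all names are hypothetical.
object RaceState {
  sealed trait State
  case object Init extends State
  case object InProgress extends State
  case object Done extends State
}

final class ToyCheckpointData {
  import RaceState._
  private val lock = new Object
  private var state: State = Init
  val writes = new AtomicInteger(0)

  def checkpoint(): Unit = {
    // Atomically flip Init -> InProgress; losing threads do no work
    val won = lock.synchronized {
      if (state == Init) { state = InProgress; true } else false
    }
    if (won) {
      writes.incrementAndGet()           // stands in for the subclass doCheckpoint()
      lock.synchronized { state = Done } // then mark as Checkpointed
    }
  }

  def isDone: Boolean = lock.synchronized(state == Done)
}

object CheckpointRace {
  // Release `threads` threads at once; returns (number of writes, finished?)
  def race(threads: Int): (Int, Boolean) = {
    val data = new ToyCheckpointData
    val start = new CountDownLatch(1)
    val pool = Executors.newFixedThreadPool(threads)
    (1 to threads).foreach { _ =>
      pool.execute(new Runnable {
        def run(): Unit = { start.await(); data.checkpoint() }
      })
    }
    start.countDown()
    pool.shutdown()
    pool.awaitTermination(10, TimeUnit.SECONDS)
    (data.writes.get(), data.isDone)
  }
}
```

CheckpointRace.race(8) returns (1, true): one write, and the final state is the terminal one.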

Step 4: doCheckpoint in the subclass ReliableRDDCheckpointData

```scala
protected override def doCheckpoint(): CheckpointRDD[T] = {
  /**
   * Write the data and create a CheckpointRDD that becomes this RDD's new parent.
   * That CheckpointRDD later reads the checkpoint files from the file system
   * to produce this RDD's partitions.
   */
  val newRDD = ReliableCheckpointRDD.writeRDDToCheckpointDirectory(rdd, cpDir)
  // Optionally clean our checkpoint files if the reference is out of scope
  if (rdd.conf.getBoolean("spark.cleaner.referenceTracking.cleanCheckpoints", false)) {
    rdd.context.cleaner.foreach { cleaner =>
      cleaner.registerRDDCheckpointDataForCleanup(newRDD, rdd.id)
    }
  }
  logInfo(s"Done checkpointing RDD ${rdd.id} to $cpDir, new parent is RDD ${newRDD.id}")
  // Return the newly created RDD to the parent class
  newRDD
}
```

Step 5: the ReliableCheckpointRDD.writeRDDToCheckpointDirectory(rdd, cpDir) method

```scala
/**
 * Write RDD to checkpoint files and return a ReliableCheckpointRDD representing the RDD.
 *
 * Triggers runJob to write the current RDD's data into the checkpoint directory
 * and creates a ReliableCheckpointRDD instance.
 */
def writeRDDToCheckpointDirectory[T: ClassTag](
    originalRDD: RDD[T],
    checkpointDir: String,
    blockSize: Int = -1): ReliableCheckpointRDD[T] = {
  val checkpointStartTimeNs = System.nanoTime()
  val sc = originalRDD.sparkContext

  // Create the output path for the checkpoint
  val checkpointDirPath = new Path(checkpointDir)
  // Get the (HDFS) FileSystem for that path
  val fs = checkpointDirPath.getFileSystem(sc.hadoopConfiguration)
  // Create the directory
  if (!fs.mkdirs(checkpointDirPath)) {
    throw new SparkException(s"Failed to create checkpoint path $checkpointDirPath")
  }

  // Save to file, and reload it as an RDD
  // Broadcast the Hadoop configuration to all nodes
  val broadcastedConf = sc.broadcast(
    new SerializableConfiguration(sc.hadoopConfiguration))
  // TODO: This is expensive because it computes the RDD again unnecessarily (SPARK-8582)
  // Core step: this submits a new job that computes the RDD again. If the original
  // RDD has been cached, most of that recomputation is avoided, which is why caching
  // before checkpointing is strongly recommended.
  sc.runJob(originalRDD,
    writePartitionToCheckpointFile[T](checkpointDirPath.toString, broadcastedConf) _)

  // If the RDD has a partitioner, also write it to the checkpoint directory
  if (originalRDD.partitioner.nonEmpty) {
    writePartitionerToCheckpointDir(sc, originalRDD.partitioner.get, checkpointDirPath)
  }

  val checkpointDurationMs =
    TimeUnit.NANOSECONDS.toMillis(System.nanoTime() - checkpointStartTimeNs)
  logInfo(s"Checkpointing took $checkpointDurationMs ms.")

  // Create a ReliableCheckpointRDD; its partition count must match the original RDD's
  val newRDD = new ReliableCheckpointRDD[T](
    sc, checkpointDirPath.toString, originalRDD.partitioner)
  if (newRDD.partitions.length != originalRDD.partitions.length) {
    throw new SparkException(
      "Checkpoint RDD has a different number of partitions from original RDD. Original " +
        s"RDD [ID: ${originalRDD.id}, num of partitions: ${originalRDD.partitions.length}]; " +
        s"Checkpoint RDD [ID: ${newRDD.id}, num of partitions: " +
        s"${newRDD.partitions.length}].")
  }
  newRDD
}
```
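The write path can be sketched locally: one file per partition, followed by the same partition-count check as in the source. This is a hypothetical toy. The partitions are in-memory Seq[Int]s written one after another with DataOutputStream, instead of Spark's serializer running in a distributed job. The part-%05d file names are an assumption modeled on ReliableCheckpointRDD.checkpointFileName.

```scala
import java.io.{DataOutputStream, File, FileOutputStream}

// Local sketch of writeRDDToCheckpointDirectory; all names are hypothetical.
object ToyCheckpointWriter {
  // Assumed naming scheme: part-00000, part-00001, ...
  def fileName(index: Int): String = f"part-$index%05d"

  // One "task": write a single partition (element count, then the Ints)
  private def writePartition(dir: File, index: Int, data: Seq[Int]): Unit = {
    val out = new DataOutputStream(new FileOutputStream(new File(dir, fileName(index))))
    try { out.writeInt(data.size); data.foreach(x => out.writeInt(x)) } finally out.close()
  }

  // Write every partition, then verify the file count matches the input
  def write(dir: File, partitions: IndexedSeq[Seq[Int]]): Int = {
    if (!dir.isDirectory && !dir.mkdirs())
      throw new IllegalStateException(s"Failed to create checkpoint path $dir")
    partitions.zipWithIndex.foreach { case (data, i) => writePartition(dir, i, data) }
    val written = Option(dir.listFiles()).getOrElse(Array.empty[File])
      .count(_.getName.startsWith("part-"))
    if (written != partitions.length)
      throw new IllegalStateException(
        s"Checkpoint has $written partitions, original has ${partitions.length}")
    written
  }
}
```

Writing Vector(Seq(1, 2), Seq(3), Seq.empty[Int]) into an empty directory produces part-00000 through part-00002 and returns 3.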

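Finally, the new CheckpointRDD is returned. The parent class assigns it to the member variable cpRDD, sets the state to Checkpointed, and clears the RDD's dependency chain. At this point the checkpoint data has been serialized to HDFS.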

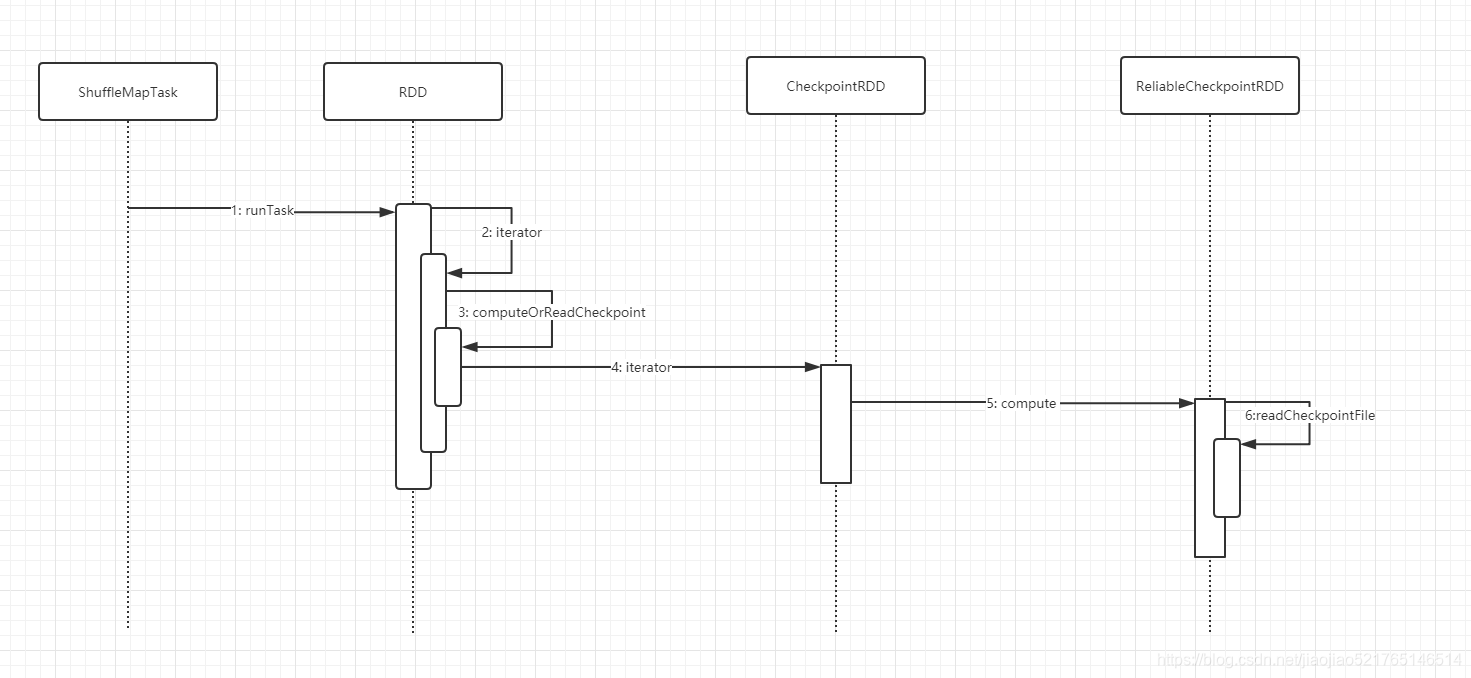

Reading checkpoint data

When Spark actually runs a computation, runTask calls rdd.iterator() to compute the RDD's partition.

Step 1: RDD.iterator()

Source: org.apache.spark.rdd.RDD.scala

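```scala
/**
 * Suppose persist() is called first and then checkpoint().
 * The first time iterator() runs, storageLevel != StorageLevel.NONE, so the data is
 * fetched through getOrCompute (backed by the BlockManager; older Spark versions used
 * CacheManager). The BlockManager has no data yet, so the partition is computed for
 * the first time and then persisted through the BlockManager.
 *
 * When the RDD's job finishes, a separate job is started to perform the checkpoint.
 * The next time iterator() runs, the storage level is still set, so the persisted
 * data is normally read from the BlockManager.
 *
 * If the cached block is missing, getOrCompute falls back to computeOrReadCheckpoint.
 * If isCheckpointedAndMaterialized is true, that calls the parent RDD's iterator(),
 * which reads the data from the external file system.
 */
final def iterator(split: Partition, context: TaskContext): Iterator[T] = {
  if (storageLevel != StorageLevel.NONE) {
    getOrCompute(split, context)
  } else {
    // Compute the partition, or read it from the checkpoint
    computeOrReadCheckpoint(split, context)
  }
}
```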

Step 2: iterator() then calls computeOrReadCheckpoint

```scala
private[spark] def computeOrReadCheckpoint(split: Partition, context: TaskContext): Iterator[T] = {
  if (isCheckpointedAndMaterialized) {
    firstParent[T].iterator(split, context)
  } else {
    // Abstract method; see the concrete implementations, e.g. MapPartitionsRDD
    compute(split, context)
  }
}
```

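When rdd.iterator() computes a partition and the data is not served from the cache, it calls computeOrReadCheckpoint(split, context) to check whether the RDD has been checkpointed. If it has, Spark calls iterator() on the RDD's parent, which is the CheckpointRDD. Otherwise, it calls the RDD's own compute() directly.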
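The branching described above can be summarized as a small decision function. IteratorDispatch and its Boolean flags are hypothetical, and the strings only name where a partition's data would come from. A cache miss inside getOrCompute also falls back to computeOrReadCheckpoint, and in Spark the result is then stored in the BlockManager.

```scala
// Toy dispatch mirroring RDD.iterator / computeOrReadCheckpoint; hypothetical names.
object IteratorDispatch {
  // persisted: storageLevel != NONE; blockCached: the BlockManager has the block;
  // checkpointed: isCheckpointedAndMaterialized
  def iterator(persisted: Boolean, blockCached: Boolean, checkpointed: Boolean): String =
    if (persisted && blockCached) "BlockManager" // getOrCompute hit
    else computeOrReadCheckpoint(checkpointed)   // not persisted, or a cache miss

  def computeOrReadCheckpoint(checkpointed: Boolean): String =
    if (checkpointed) "CheckpointRDD" // firstParent[T].iterator(split, context)
    else "compute"                    // the RDD's own compute(split, context)
}
```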

Step 3: compute() of the CheckpointRDD (ReliableCheckpointRDD)

Source: org.apache.spark.rdd.ReliableCheckpointRDD.scala

```scala
// Read our checkpoint data from the path
override def compute(split: Partition, context: TaskContext): Iterator[T] = {
  val file = new Path(checkpointPath, ReliableCheckpointRDD.checkpointFileName(split.index))
  ReliableCheckpointRDD.readCheckpointFile(file, broadcastedConf, context)
}
```

Step 4: the readCheckpointFile method

```scala
def readCheckpointFile[T](
    path: Path,
    broadcastedConf: Broadcast[SerializableConfiguration],
    context: TaskContext): Iterator[T] = {
  val env = SparkEnv.get
  // Use the Hadoop API to read the data from HDFS
  val fs = path.getFileSystem(broadcastedConf.value.value)
  val bufferSize = env.conf.getInt("spark.buffer.size", 65536)
  val fileInputStream = {
    val fileStream = fs.open(path, bufferSize)
    if (env.conf.get(CHECKPOINT_COMPRESS)) {
      CompressionCodec.createCodec(env.conf).compressedInputStream(fileStream)
    } else {
      fileStream
    }
  }
  val serializer = env.serializer.newInstance()
  val deserializeStream = serializer.deserializeStream(fileInputStream)

  // Register an on-task-completion callback to close the input stream.
  context.addTaskCompletionListener[Unit](context => deserializeStream.close())

  // Deserialize the data and expose it as an Iterator
  deserializeStream.asIterator.asInstanceOf[Iterator[T]]
}
```
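readCheckpointFile comes down to three steps. It opens the file with a buffer, optionally wraps it in a decompressing stream, and exposes the deserialized records as an Iterator. The sketch below reproduces those steps with plain java.io, with several assumptions:

- ToyCheckpointReader is hypothetical.
- GZIP stands in for Spark's CompressionCodec.
- The file layout (a count followed by Ints) belongs to this sketch, not to Spark's format.
- There is no TaskContext, so the close callback is returned to the caller instead of being registered as a task-completion listener.

```scala
import java.io.{BufferedInputStream, DataInputStream, DataOutputStream, File,
  FileInputStream, FileOutputStream, InputStream, OutputStream}
import java.util.zip.{GZIPInputStream, GZIPOutputStream}

// Local sketch of readCheckpointFile; names and file layout are hypothetical.
object ToyCheckpointReader {
  // Helper that produces a file to read: element count, then the Ints
  def write(file: File, data: Seq[Int], compress: Boolean): Unit = {
    val fos = new FileOutputStream(file)
    val sink: OutputStream = if (compress) new GZIPOutputStream(fos) else fos
    val out = new DataOutputStream(sink)
    try { out.writeInt(data.size); data.foreach(x => out.writeInt(x)) } finally out.close()
  }

  // Open with a buffer, optionally decompress, then read lazily through an Iterator.
  // Returns the iterator together with a function that closes the stream.
  def read(file: File, compress: Boolean, bufferSize: Int = 65536): (Iterator[Int], () => Unit) = {
    val raw: InputStream = new BufferedInputStream(new FileInputStream(file), bufferSize)
    val in = new DataInputStream(if (compress) new GZIPInputStream(raw) else raw)
    val count = in.readInt()
    (Iterator.fill(count)(in.readInt()), () => in.close())
  }
}
```

The iterator reads from the open stream, so consume it fully before calling the returned close function. For example: val (it, close) = ToyCheckpointReader.read(f, compress = true); try it.toList finally close().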