A Python Crawler for Train Information

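I've written plenty of Python crawlers by now, and much of the work is repetitive. So this post, and probably future ones, won't walk through a crawler from start to finish; it will only highlight the problems I hadn't run into before.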

1. Task Analysis

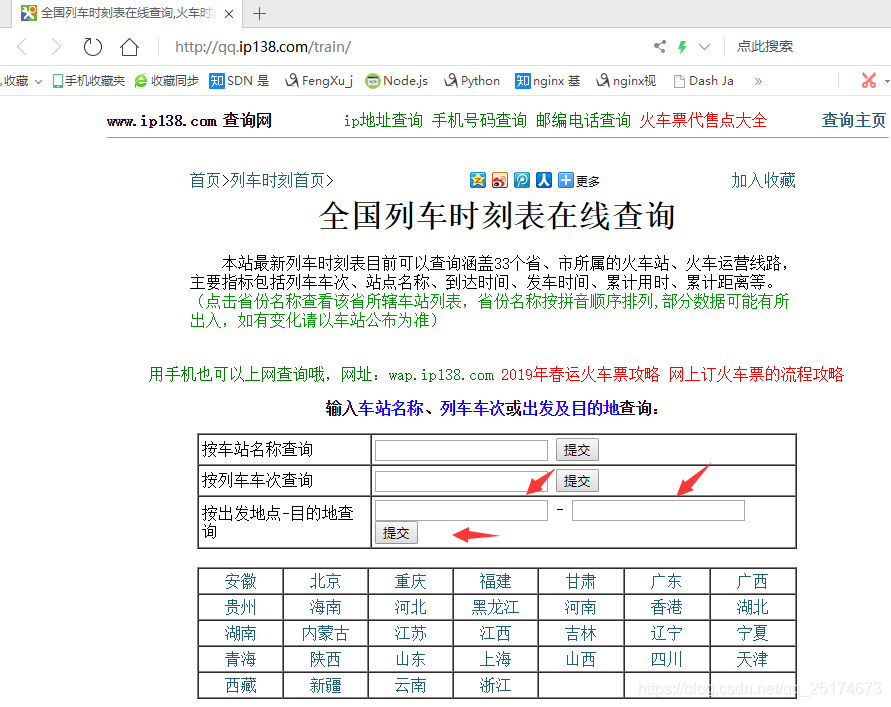

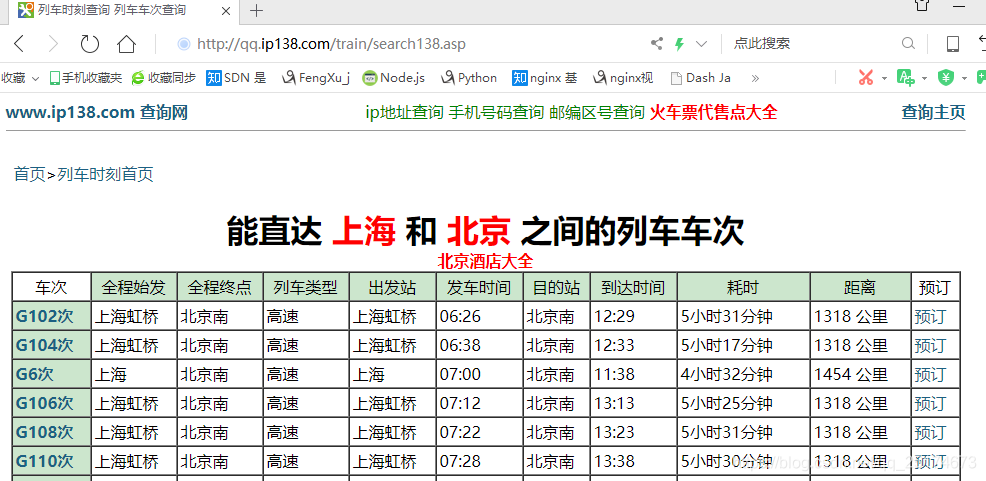

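This is the site we want to crawl. All we have to do is enter a departure city and a destination, then click the submit button. For example, entering 上海 (Shanghai) and 北京 (Beijing) and clicking submit brings up the following page.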

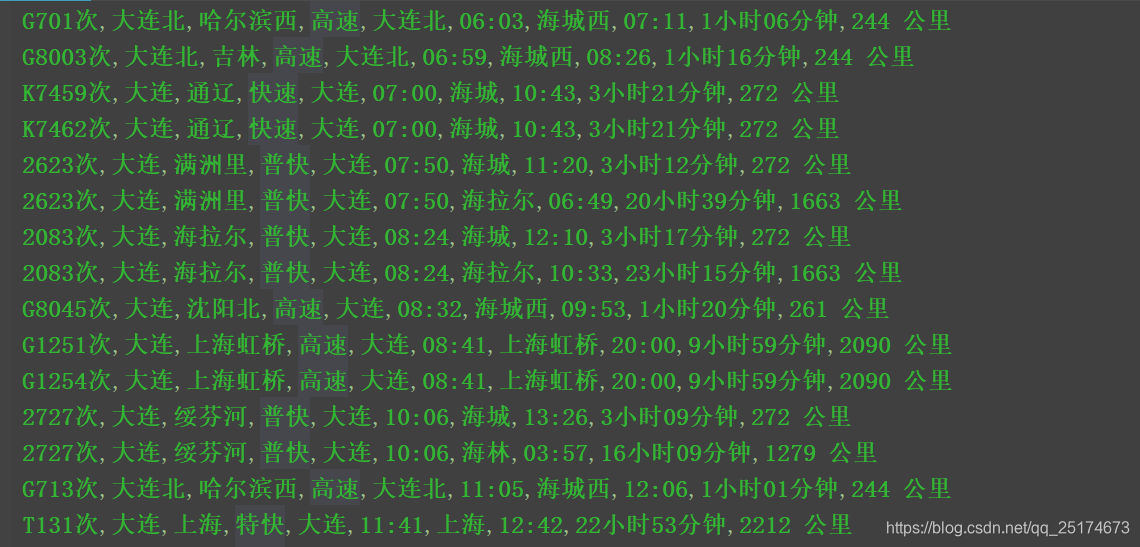

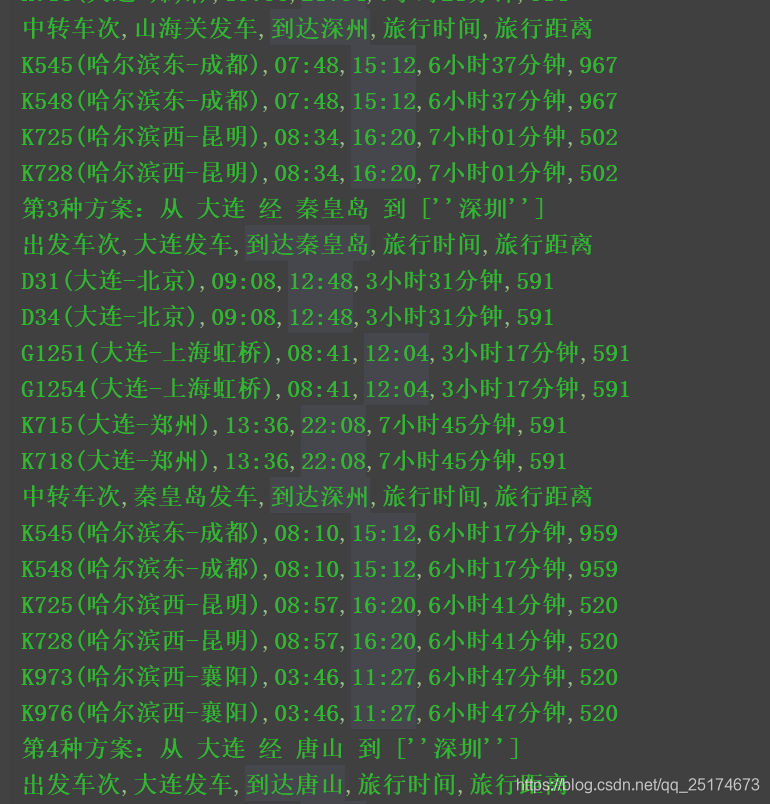

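That is the case where direct trains exist. There are also routes with no direct train, for example 大连 (Dalian) to 东莞 (Dongguan).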

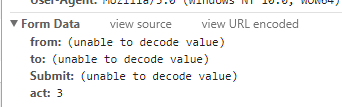

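So when extracting the data we need to handle both cases. We also notice that this is a POST request, so we open the developer tools and look at the request parameters. Parameters that look like this are most likely the result of Chinese text being encoded. Based on past experience, my guess is that from and to are the departure and destination I typed in, and Submit is the submit button.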
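To check that guess, the encoded parameters can be decoded by hand. The sketch below is only an illustration: `captured_body` is a hypothetical form body written out to resemble what the developer tools show for 大连 to 上海, not a string copied from the real site, and the charset is assumed to be gb2312.

```python
from urllib.parse import parse_qs

# Hypothetical captured form body (hand-written for illustration, not taken
# from the real site), assuming the page submits its form as gb2312.
captured_body = "from=%B4%F3%C1%AC&to=%C9%CF%BA%A3&Submit=%CC%E1%BD%BB&act=3"

# Decoding with the wrong charset produces replacement characters ...
as_utf8 = parse_qs(captured_body, encoding="utf-8")
# ... while gb2312 recovers the text that was typed into the form.
as_gb2312 = parse_qs(captured_body, encoding="gb2312")

print(as_utf8["from"])
print(as_gb2312["from"], as_gb2312["to"], as_gb2312["Submit"])
```

If the gb2312 decoding gives readable city names while the UTF-8 decoding does not, that is a strong hint about which charset the form expects.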

2. Constructing the POST Request

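    import requests

    post_data = {
        'from': '大连',  # departure city; set your own
        'to': '上海',
        'Submit': '提交',
        'act': '3',
    }
    post_url = 'http://qq.ip138.com/train/search138.asp'
    # headers is not shown in this post; it is assumed to be a dict defined elsewhere
    response = requests.post(post_url, data=post_data, headers=headers)
    response.encoding = 'gb2312'  # set the character encoding of the response
    html = response.text

This is how we would normally construct a POST request, but this time the request never returned any data. After some testing, it turned out that the POST body we send must also be gb2312-encoded. The code therefore has to be changed so that the form data is encoded as gb2312 before it is sent.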
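To make the difference concrete, here is a small offline sketch comparing the two encodings of the same form. It uses only the standard library's `urlencode`; the variable names `form`, `utf8_body` and `gb_body` are just for illustration.

```python
from urllib.parse import urlencode

form = {"from": "大连", "to": "上海", "Submit": "提交", "act": "3"}

# Without an explicit encoding, urlencode percent-encodes the UTF-8 bytes.
utf8_body = urlencode(form)
# With encoding="gb2312", the same characters become different bytes.
gb_body = urlencode(form, encoding="gb2312")

print(utf8_body)
print(gb_body)
```

Each Chinese character takes three percent-escapes in the UTF-8 version and two in the gb2312 version, so a server that decodes the body as gb2312 will not see the intended city names in the first one. One thing to keep in mind, as far as I know: when `data` is a pre-encoded string rather than a dict, requests does not add a `Content-Type: application/x-www-form-urlencoded` header by itself, so it may need to be included in `headers`.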

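    import requests
    from urllib.parse import urlencode

    post_data = {
        'from': '大连',  # departure city; set your own
        'to': '上海',
        'Submit': '提交',
        'act': '3',
    }
    # Encode the form body as gb2312 ourselves instead of letting requests use UTF-8
    post_data = urlencode(post_data, encoding='gb2312')
    post_url = 'http://qq.ip138.com/train/search138.asp'
    # headers is not shown in this post; it is assumed to be a dict defined elsewhere
    response = requests.post(post_url, data=post_data, headers=headers)
    response.encoding = 'gb2312'  # set the character encoding of the response
    html = response.text

3. Data Extraction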

As mentioned above, there are two cases to handle: direct trains and transfers.

Direct-train data is easy to extract. Transfers require some extra logic, but since none of it is technically difficult I won't go into detail.
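As a rough sketch of that two-branch logic, the idea is to try the direct-train pattern first and fall back to the transfer plans only when it finds nothing. Everything in this example is hypothetical: the markup in `DIRECT_HTML` and `TRANSFER_HTML` is heavily simplified and does not match the real page, and the helper names `extract_rows` and `to_csv` are made up for illustration.

```python
import csv
import io
import re

# Hypothetical, simplified markup; the real page's tables look different.
DIRECT_HTML = """
<tr class="direct"><td>G1251</td><td>08:41</td><td>20:00</td></tr>
<tr class="direct"><td>G1253</td><td>09:10</td><td>20:35</td></tr>
"""
TRANSFER_HTML = """
<table class="plan"><tr><td>D35</td><td>10:34</td><td>13:22</td></tr>
<tr><td>G105</td><td>14:05</td><td>19:40</td></tr></table>
"""

DIRECT_ROW = re.compile(
    r'<tr class="direct"><td>(.*?)</td><td>(.*?)</td><td>(.*?)</td></tr>', re.S)
PLAN = re.compile(r'<table class="plan">(.*?)</table>', re.S)
LEG = re.compile(r'<tr><td>(.*?)</td><td>(.*?)</td><td>(.*?)</td></tr>', re.S)


def extract_rows(html):
    """Return CSV-ready rows: direct trains if any, otherwise transfer legs."""
    direct = DIRECT_ROW.findall(html)
    if direct:
        return [list(row) for row in direct]
    rows = []
    for number, plan in enumerate(PLAN.findall(html), start=1):
        rows.append([f"plan {number}"])  # one heading row per transfer plan
        rows.extend(list(leg) for leg in LEG.findall(plan))
    return rows


def to_csv(rows):
    """Serialize rows to CSV text in memory (a file would work the same way)."""
    buf = io.StringIO(newline="")
    csv.writer(buf).writerows(rows)
    return buf.getvalue()


print(to_csv(extract_rows(DIRECT_HTML)))
print(to_csv(extract_rows(TRANSFER_HTML)))
```

The full function for the real page follows the same shape, only with longer patterns and two legs per transfer plan.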

    import csv
    import re


    def parse_html(url, post_data):
        # get_html() is not shown in this post; it is assumed to send the
        # POST request above and return the decoded page text
        html = get_html(url, post_data)

        # Case 1: direct trains, one match per train
        info_pattern = re.compile(
            '<td bgcolor="#CCE6CD">.*?<b>(.*?)</b>'  # e.g. G1251
            '.*?<td>(.*?)</td>'  # e.g. 大连 (Dalian)
            '<td>(.*?)</td>'  # e.g. 上海虹桥 (Shanghai Hongqiao)
            '<td>(.*?)</td>'  # e.g. 高速 (high-speed)
            '.*?<td>(.*?)</td>'  # e.g. 大连 (Dalian)
            '.*?<td>(.*?)</td>'  # e.g. 08:41
            '.*?<td>(.*?)</td>'  # e.g. 上海虹桥 (Shanghai Hongqiao)
            '.*?<td>(.*?)</td>'  # e.g. 20:00
            '.*?<td>(.*?)</td>'  # duration
            '.*?<td>(.*?)</td>',  # distance in km
            re.S)
        info_list = re.findall(info_pattern, html)
        with open('info.csv', 'a', newline='', encoding='utf-8-sig') as f:
            writer = csv.writer(f)
            for info in info_list:
                writer.writerow(info)

        # Case 2: no direct train found, so parse the transfer plans instead
        if len(info_list) == 0:
            counts_pattern = re.compile('table cellpadding="3"(.*?)ble>', re.S)
            counts = re.findall(counts_pattern, html)
            way_list = []
            first_title = []
            second_title = []
            first_detail_info_list = []
            second_detail_info_list = []
            # The same row pattern is used for both legs of a transfer
            detail_info_pattern = re.compile(
                'onmouseover=.*?blank">(.*?)</a>'  # e.g. D35 (大连北-北京)
                '.*?<td>(.*?)</td>'  # e.g. 10:34
                '.*?<td>(.*?)</td>'  # e.g. 13:22
                '.*?<td>(.*?)</td>'  # e.g. 2 h 29 min
                '.*?<td>(.*?)</td>',  # e.g. 436
                re.S)
            for count in counts:
                # Heading of this transfer plan
                way_pattern = re.compile('<th colspan="5">(.*?)</td>', re.S)
                way = re.findall(way_pattern, count)
                # Column headers; they appear once per leg
                title_pattern = re.compile(
                    'bgcolor="#CCE6CD"><td>(.*?)</td>'  # departing train number
                    '<td width="120">(.*?)</td>'  # e.g. departs 大连北 (Dalian North)
                    '<td width="120">(.*?)</td>'  # e.g. arrives 葫芦岛北 (Huludao North)
                    '<td width="120">(.*?)</td>'  # travel time
                    '<td width="80">(.*?)</td>',  # travel distance
                    re.S)
                title = re.findall(title_pattern, count)
                # First leg: everything between the first "旅行距离" (travel
                # distance) header and the next header row
                first_info_pattern = re.compile('旅行距离(.*?)td width="80">', re.S)
                first_info = re.findall(first_info_pattern, count)
                first_detail_info = re.findall(detail_info_pattern, first_info[0])
                # Second leg: everything after the second "旅行距离" header
                second_info_pattern = re.compile('旅行距离.*?旅行距离(.*?)</ta', re.S)
                second_info = re.findall(second_info_pattern, count)
                second_detail_info = re.findall(detail_info_pattern, second_info[0])
                way_list.append(way)
                first_title.append(title[0])
                second_title.append(title[1])
                first_detail_info_list.append(first_detail_info)
                second_detail_info_list.append(second_detail_info)

            with open('info.csv', 'a', newline='', encoding='utf-8-sig') as f:
                writer = csv.writer(f)
                for i in range(len(way_list)):
                    writer.writerow(way_list[i])
                    writer.writerow(first_title[i])
                    for j in range(len(first_detail_info_list[i])):
                        writer.writerow(first_detail_info_list[i][j])
                    writer.writerow(second_title[i])
                    for j in range(len(second_detail_info_list[i])):
                        writer.writerow(second_detail_info_list[i][j])

4. Displaying the Data