Copyright notice: this is the author's original article; please credit the source when reposting. https://blog.csdn.net/kXYOnA63Ag9zqtXx0/article/details/82954503 https://blog.csdn.net/forever428/article/details/85757353 </div>

<div id="content_views" class="markdown_views prism-github-gist">



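1 Operator summary

1.1 Differences between map and mapPartitions

map applies a function to each element of an RDD, whereas mapPartitions applies a function once to each partition of the RDD, receiving that partition's elements as an iterator.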
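To make the difference in function shape concrete, here is a minimal plain-Scala sketch that needs no Spark. It models an RDD as a sequence of partitions; `MapVsMapPartitionsSketch`, `mapElements`, and the toy `mapPartitions` are hypothetical names for illustration, not Spark's implementation.

```scala
object MapVsMapPartitionsSketch {
  // A toy "RDD": each inner Seq stands for one partition (an assumption of this sketch).
  type Partitions[A] = Seq[Seq[A]]

  // map-style: the function sees one element at a time.
  def mapElements[A, B](data: Partitions[A])(f: A => B): Partitions[B] =
    data.map(partition => partition.map(f))

  // mapPartitions-style: the function sees a whole partition as an iterator
  // and returns an iterator, so it can also aggregate within the partition.
  def mapPartitions[A, B](data: Partitions[A])(f: Iterator[A] => Iterator[B]): Partitions[B] =
    data.map(partition => f(partition.iterator).toList)

  def main(args: Array[String]): Unit = {
    val data: Partitions[Int] = Seq(Seq(1, 2, 3), Seq(4, 5))
    // Element-wise transformation: every element is multiplied by 10.
    println(mapElements(data)(_ * 10))
    // Partition-wise transformation: one sum per partition.
    println(mapPartitions(data)(it => Iterator(it.sum)))
  }
}
```

The per-partition sum is something an element-wise function cannot express, which is why the iterator-based shape matters.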

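1.2 Differences between map and foreach

- map returns a value (a new RDD); foreach returns nothing.

- map is typically used to transform the elements of an RDD, while foreach is typically used to write results out to another storage system.

- map is a transformation operator, whereas foreach is an action operator.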
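The transformation/action distinction can be sketched with a plain Scala `Iterator`, whose `map` is lazy in a way that loosely resembles an RDD transformation. This is only an analogy under that assumption; `LazyVsEagerSketch` is a hypothetical name and no Spark is involved.

```scala
object LazyVsEagerSketch {
  // Returns (calls made before traversal, calls made after traversal).
  def run(): (Int, Int) = {
    var calls = 0
    // Like a transformation: describing the map does not run the function yet.
    val mapped = Iterator(1, 2, 3).map { x => calls += 1; x * 2 }
    val callsBeforeTraversal = calls
    // Like an action: foreach traverses the data, returns Unit,
    // and forces the pending map to run for every element.
    mapped.foreach(_ => ())
    (callsBeforeTraversal, calls)
  }

  def main(args: Array[String]): Unit = println(run())
}
```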

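1.3 Differences between foreach and foreachPartition

- foreach operates on each element of an RDD; foreachPartition operates on each partition of an RDD.

- From an optimization standpoint, foreachPartition is commonly used when writing out large amounts of result data: one database connection can serve a whole partition, which greatly reduces the number of connections opened.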

    rdd.foreachPartition(part => {
      // val conn = ...                           // obtain one database connection for this partition
      part.foreach(elem => { /* operate on elem */ }) // iterate over the elements and operate on each one
    })
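The saving in connections can be counted with a small plain-Scala sketch. `FakeConnection` is a hypothetical stand-in that only counts how many connections were opened, and partitions are modeled as nested sequences; none of this is Spark API.

```scala
import java.util.concurrent.atomic.AtomicInteger

object ConnectionPerPartitionSketch {
  // Hypothetical stand-in for a database connection; constructing one bumps the counter.
  final class FakeConnection(counter: AtomicInteger) {
    counter.incrementAndGet()
    def write(record: String): Unit = ()
    def close(): Unit = ()
  }

  // foreach-style: a connection is opened for every single element.
  def connectionsPerElement(partitions: Seq[Seq[String]]): Int = {
    val opened = new AtomicInteger(0)
    partitions.foreach(_.foreach { record =>
      val conn = new FakeConnection(opened)
      try conn.write(record) finally conn.close()
    })
    opened.get()
  }

  // foreachPartition-style: one connection is shared by all elements of a partition.
  def connectionsPerPartition(partitions: Seq[Seq[String]]): Int = {
    val opened = new AtomicInteger(0)
    partitions.foreach { part =>
      val conn = new FakeConnection(opened)
      try part.foreach(conn.write) finally conn.close()
    }
    opened.get()
  }

  def main(args: Array[String]): Unit = {
    val data = Seq(Seq("a", "b", "c"), Seq("d", "e"))
    println(connectionsPerElement(data))   // one connection per element
    println(connectionsPerPartition(data)) // one connection per partition
  }
}
```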

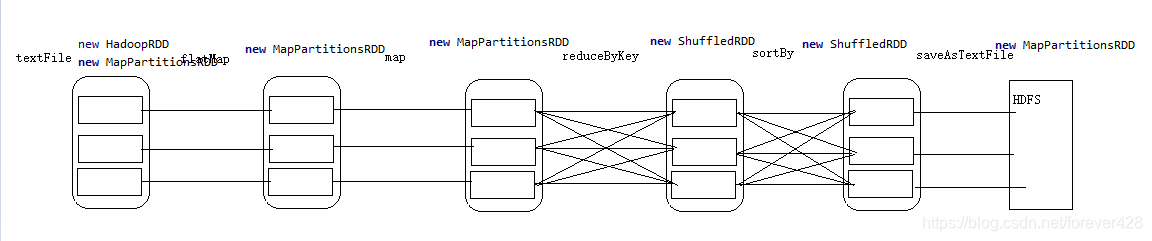

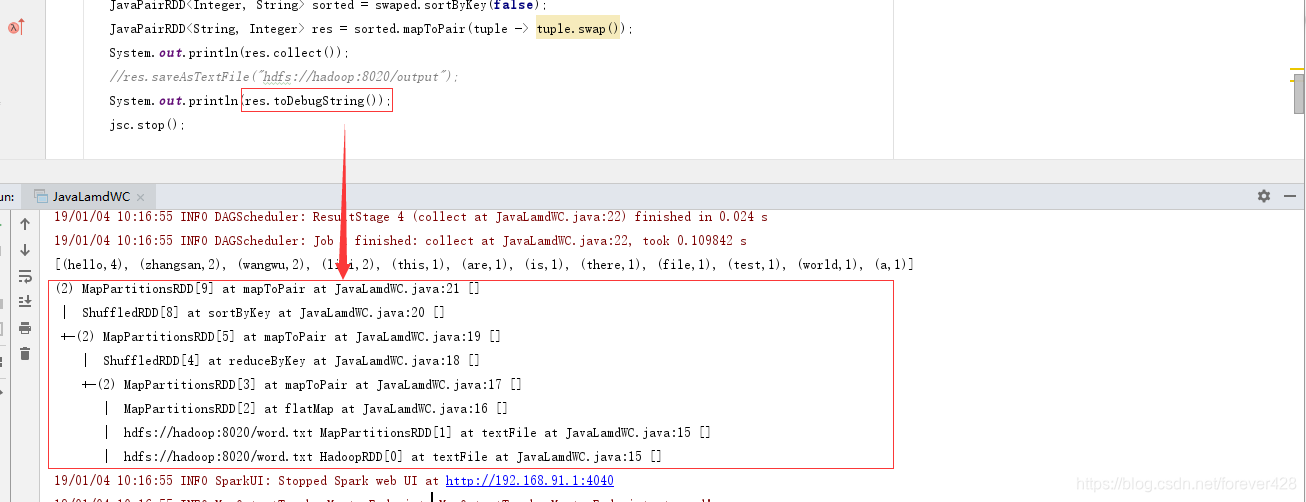

2 RDD types

To see the types of the RDDs behind a result, print its lineage with toDebugString:

    System.out.println(res.toDebugString());

三 RDD依赖关系

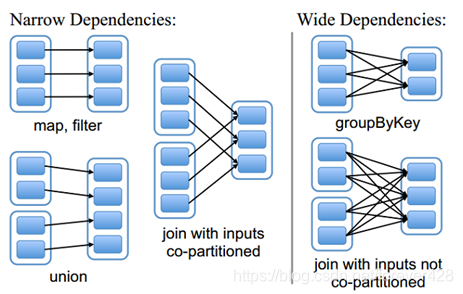

RDD和它依赖的父RDD(s)的关系有两种不同的类型,即窄依赖(narrow dependency)和宽依赖(wide dependency)。

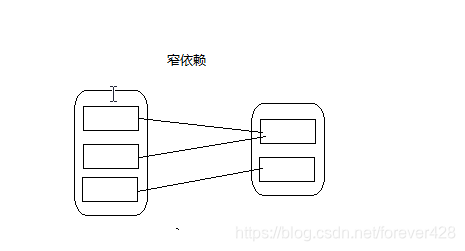

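3.1 Narrow dependencies

In a narrow dependency, each partition of the parent RDD is used by at most one partition of the child RDD.

Summary: a narrow dependency can be pictured as an only child.

3.2 Wide dependencies

In a wide dependency, multiple partitions of the child RDD depend on the same partition of the parent RDD.

Summary: a wide dependency can be pictured as a parent with many children.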
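The two definitions above reduce to a simple check on how many child partitions read each parent partition. The following plain-Scala sketch encodes that check; the `usedBy` map and the `DependencyKindSketch` name are assumptions made for illustration and do not come from Spark.

```scala
object DependencyKindSketch {
  // usedBy(p) = the set of child partitions that read parent partition p.
  // Narrow: every parent partition is used by at most one child partition.
  def isNarrow(usedBy: Map[Int, Set[Int]]): Boolean =
    usedBy.values.forall(_.size <= 1)

  def main(args: Array[String]): Unit = {
    // map-like: parent partition i feeds only child partition i.
    val oneToOne = Map(0 -> Set(0), 1 -> Set(1))
    // shuffle-like: every parent partition feeds every child partition.
    val allToAll = Map(0 -> Set(0, 1), 1 -> Set(0, 1))
    println(isNarrow(oneToOne)) // narrow
    println(isNarrow(allToAll)) // wide
  }
}
```

Note that several parent partitions feeding one child partition still counts as narrow under this definition, because each parent partition has only one consumer.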

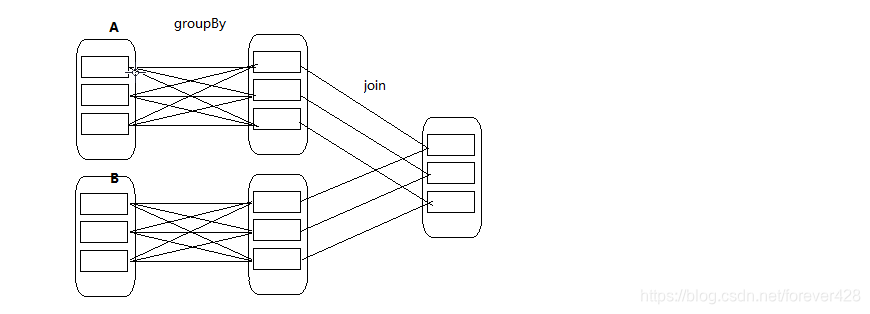

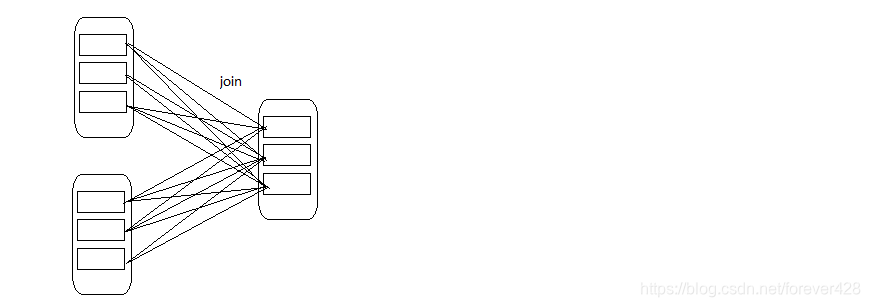

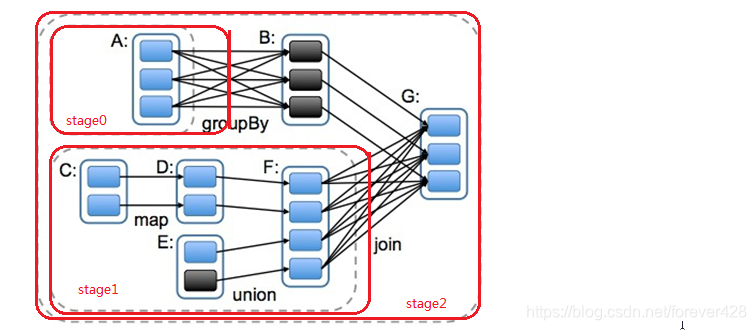

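3.3 join is sometimes a wide dependency and sometimes a narrow one

- If a groupBy-style operation ran before the join, so that the data is already partitioned by key, the join does not shuffle again and is a narrow dependency.

- If no groupBy-style operation ran before the join, joining directly generally triggers a shuffle. This is a spot worth optimizing in practice.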

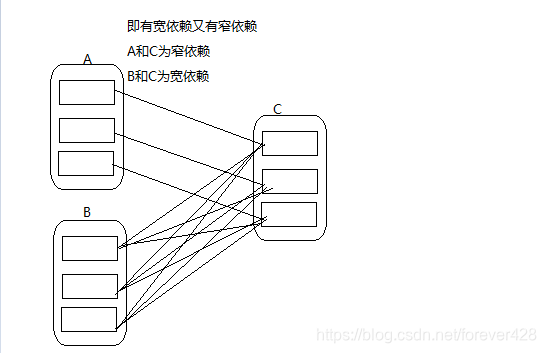

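3.4 Telling wide and narrow dependencies apart

- A single lineage can contain both wide and narrow dependencies.

- A wide dependency shows up where the partitioning before and after an operation differs.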

四 案例一:学科访问量统计_1

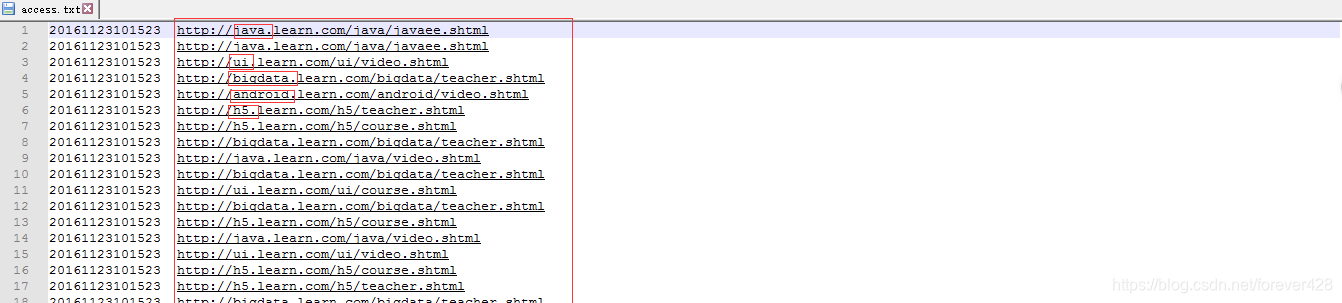

4.1 数据

4.2 需求

- 求各个学科各个模块的访问量

4.3 实现思路

- 计算出每个学科各个模块(url)的访问量

- 按照学科进行分组

- 组内排序, 取top(n)
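Before turning to Spark, the three steps above can be tried on an in-memory list with plain Scala collections. This is a minimal sketch: the `TopModulesSketch` name and the sample URL paths used in `main` are hypothetical, and only the host names follow the subjects that appear later in the article.

```scala
import java.net.URL

object TopModulesSketch {
  def topN(urls: Seq[String], n: Int): Map[String, List[(String, Int)]] = {
    // Step 1: count the visits to each module URL.
    val counts: Map[String, Int] =
      urls.groupBy(identity).map { case (url, hits) => (url, hits.size) }
    // Step 2: group the (url, count) pairs by subject, i.e. by host name.
    val bySubject: Map[String, List[(String, Int)]] =
      counts.toList.groupBy { case (url, _) => new URL(url).getHost }
    // Step 3: sort each group by count in descending order and keep the first n.
    bySubject.map { case (subject, pairs) =>
      (subject, pairs.sortBy { case (_, count) => -count }.take(n))
    }
  }

  def main(args: Array[String]): Unit = {
    // Hypothetical sample data; the real input is the access log.
    val sample = Seq(
      "http://java.learn.com/java/course.shtml",
      "http://java.learn.com/java/course.shtml",
      "http://java.learn.com/java/video.shtml",
      "http://bigdata.learn.com/bigdata/teacher.shtml"
    )
    topN(sample, 1).foreach(println)
  }
}
```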

4.4 Implementation

    import java.net.URL

    import org.apache.spark.rdd.RDD
    import org.apache.spark.{SparkConf, SparkContext}

    object SubjectAccessCount {
      def main(args: Array[String]): Unit = {
        val conf = new SparkConf().setMaster("local").setAppName("SubjectAccessCount")
        val sc = new SparkContext(conf)

        // Load the data
        val logs: RDD[String] = sc.textFile("G:\\06-Spark\\sparkcoursesinfo\\spark\\data\\subjectaccess\\access.txt")

        // Split each access-log line and keep the URL
        val url: RDD[String] = logs.map(line => line.split("\t")(1))

        // Pair each URL with 1 so the pairs can be aggregated
        val tupUrl: RDD[(String, Int)] = url.map((_, 1))

        // Count the visits to each module of each subject
        val reducedUrl: RDD[(String, Int)] = tupUrl.reduceByKey(_ + _)

        // Derive the subject from each URL
        val subjectAndUrlInfo: RDD[(String, (String, Int))] = reducedUrl.map(tup => {
          val url: String = tup._1 // the requested URL
          val count: Int = tup._2  // the page views (PV) of that URL
          val subject: String = new URL(url).getHost
          (subject, (url, count))
        })
        // println(subjectAndUrlInfo.collect.toBuffer)

        // Group by subject
        val grouped: RDD[(String, Iterable[(String, Int)])] = subjectAndUrlInfo.groupByKey

        // Sort each group in descending order of visits
        val sorted: RDD[(String, List[(String, Int)])] = grouped.mapValues(_.toList.sortBy(_._2).reverse)

        // Take the top 3
        val res: RDD[(String, List[(String, Int)])] = sorted.mapValues(_.take(3))

        println(res.collect.toBuffer)

        sc.stop()
      }
    }


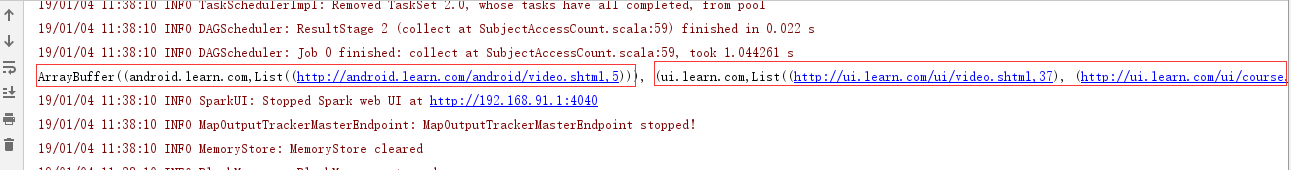

4.5 Results

5 Case 2: subject visit statistics_2 (caching)

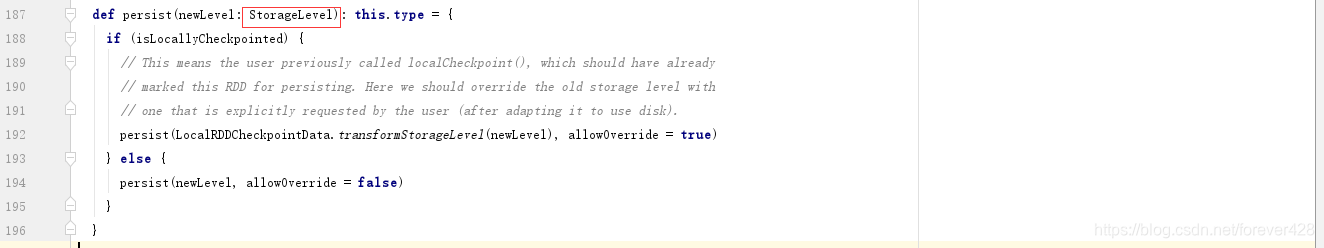

5.1 解读缓存源码

缓存有两个方法cache和persist, 通过源码可以看出cache调用了persist, 所以这两个方法运行的效率可以看做是一样的

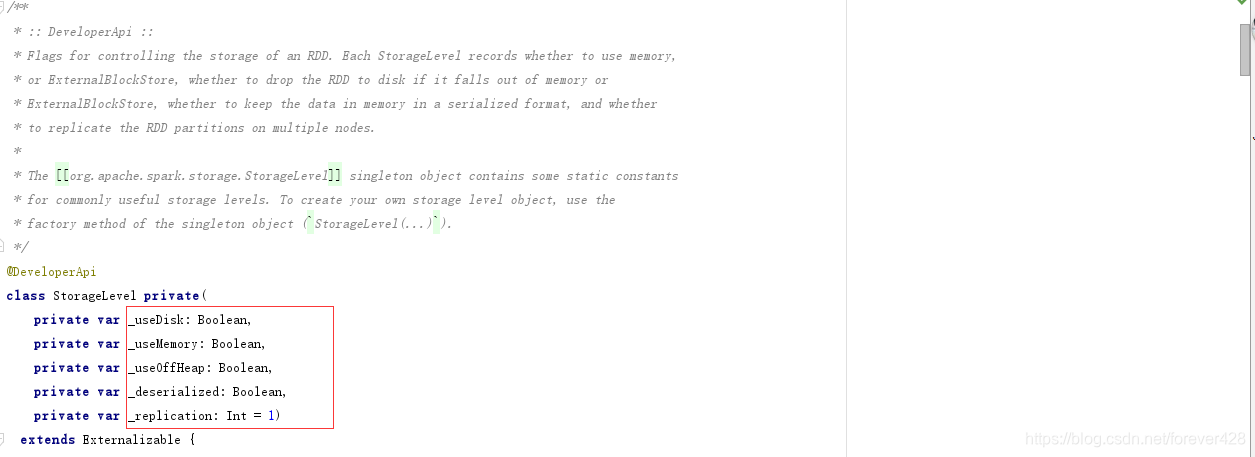

在persist方法中需要传入StorageLevel这个对象

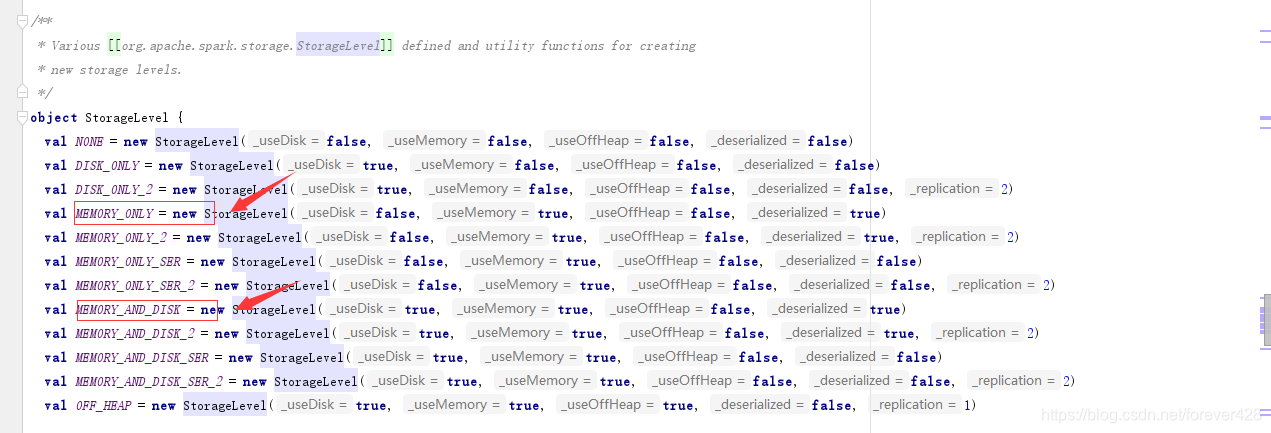

StorageLevel对象中可以指定缓存的数据存入到内存中, 磁盘中, 堆外缓存中, 是否关闭序列化, 以及副本数量

StorageLevel有一个伴生类, 在这个伴生类中给定了一些常量(层级划分), 其中有两个比较常用的, 一个是仅内存(MEMORY_ONLY), 另一个是磁盘和内存(MEMORY_AND_DISK). 这里强调一下, MEMORY_AND_DISK并不是在内存和磁盘中各存一份, 而是优先存储到内存中, 当内存不足时, 缓存的数据会存储到磁盘中.

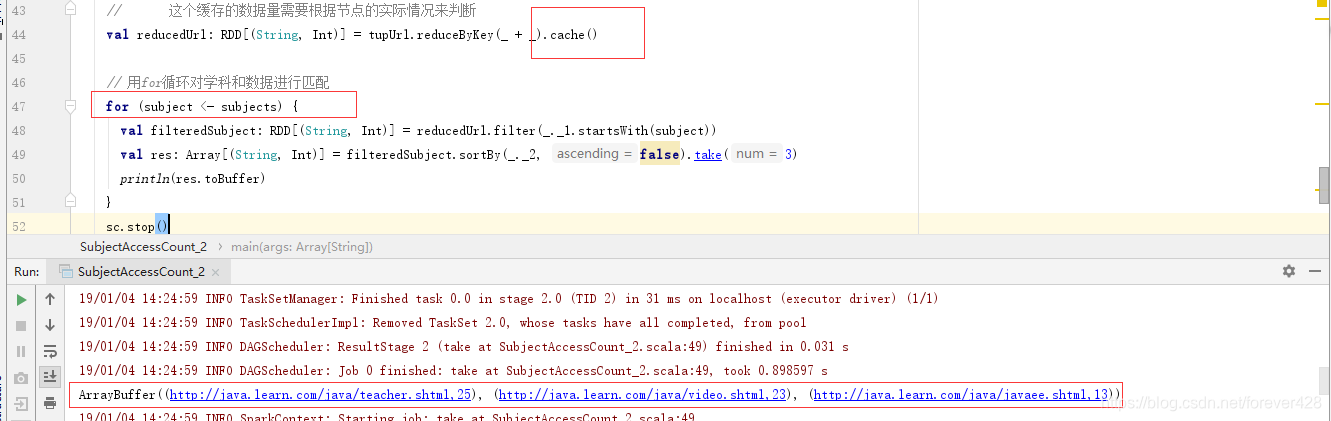

5.2 Using the cache

    import java.net.URL

    import org.apache.spark.{SparkConf, SparkContext}
    import org.apache.spark.rdd.RDD

    object SubjectAccessCount_2 {
      def main(args: Array[String]): Unit = {
        val conf = new SparkConf().setMaster("local").setAppName("SubjectAccessCount")
        val sc = new SparkContext(conf)

        // Load the data
        val logs: RDD[String] = sc.textFile("G:\\06-Spark\\sparkcoursesinfo\\spark\\data\\subjectaccess\\access.txt")

        // Subject information
        val subjects = Array("http://java.learn.com", "http://ui.learn.com", "http://bigdata.learn.com", "http://android.learn.com", "http://h5.learn.com")

        // Split each access-log line and keep the URL
        val url: RDD[String] = logs.map(line => line.split("\t")(1))

        // Pair each URL with 1 so the pairs can be aggregated
        val tupUrl: RDD[(String, Int)] = url.map((_, 1))

        // Count the visits to each module of each subject
        // Note: do not cache too much data; an oversized cache hurts performance.
        // How much can be cached depends on the actual resources of the nodes.
        val reducedUrl: RDD[(String, Int)] = tupUrl.reduceByKey(_ + _).cache()

        // Match each subject against the data in a for loop
        for (subject <- subjects) {
          val filteredSubject: RDD[(String, Int)] = reducedUrl.filter(_._1.startsWith(subject))
          val res: Array[(String, Int)] = filteredSubject.sortBy(_._2, false).take(3)
          println(res.toBuffer)
        }

        sc.stop()
      }
    }
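The loop in the listing above reuses reducedUrl once per subject, which is where caching pays off. The following plain-Scala sketch counts how often an expensive step runs with and without reuse. It is only a model of the idea: `CacheSketch`, `computations`, and `expensive` are hypothetical names, and Spark's actual cache is filled lazily by the first job that needs it.

```scala
object CacheSketch {
  // Returns how many times the expensive step ran across `jobs` uses of its result.
  def computations(jobs: Int, cached: Boolean): Int = {
    var runs = 0
    // Stand-in for the split / map / reduceByKey chain that produces reducedUrl.
    def expensive(): Map[String, Int] = { runs += 1; Map("a" -> 1) }

    if (cached) {
      val result = expensive()                   // computed once, then reused
      (1 to jobs).foreach(_ => result.size)
    } else {
      (1 to jobs).foreach(_ => expensive().size) // recomputed for every job
    }
    runs
  }

  def main(args: Array[String]): Unit = {
    println(computations(jobs = 5, cached = false))
    println(computations(jobs = 5, cached = true))
  }
}
```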


5.3 Results

6 Case 3: subject visit statistics_3 (custom partitioner)

6.1 A custom partitioner that partitions the data by subject

    import java.net.URL

    import org.apache.spark.{HashPartitioner, Partitioner, SparkConf, SparkContext}
    import org.apache.spark.rdd.RDD

    import scala.collection.mutable

    /**
     * Custom partitioning: partition the data by subject.
     */
    object SubjectAccessCount_3 {
      def main(args: Array[String]): Unit = {
        val conf = new SparkConf().setMaster("local").setAppName("SubjectAccessCount")
        val sc = new SparkContext(conf)

        // Load the data
        val logs: RDD[String] = sc.textFile("G:\\06-Spark\\sparkcoursesinfo\\spark\\data\\subjectaccess\\access.txt")

        // Subject information
        // val subjects = Array("http://java.learn.com", "http://ui.learn.com", "http://bigdata.learn.com", "http://android.learn.com", "http://h5.learn.com")

        // Split each access-log line and keep the URL
        val url: RDD[String] = logs.map(line => line.split("\t")(1))

        // Pair each URL with 1 so the pairs can be aggregated
        val tupUrl: RDD[(String, Int)] = url.map((_, 1))

        // Count the visits to each module of each subject
        // Note: do not cache too much data; an oversized cache hurts performance.
        // How much can be cached depends on the actual resources of the nodes.
        val reducedUrl: RDD[(String, Int)] = tupUrl.reduceByKey(_ + _).cache()

        val subjectInfo: RDD[(String, (String, Int))] = reducedUrl.map(tup => {
          val url: String = tup._1
          val count: Int = tup._2
          val subject: String = new URL(url).getHost
          (subject, (url, count))
        }).cache()

        // With HashPartitioner the data becomes skewed
        // val partitioned: RDD[(String, (String, Int))] = subjectInfo.partitionBy(new HashPartitioner(3))
        // partitioned.saveAsTextFile("output")

        // Collect the distinct subjects
        val subjects: Array[String] = subjectInfo.keys.distinct().collect()

        // Create the custom partitioner
        val partitioner = new SubjectPartitioner(subjects)

        // Repartition the data with it
        val partitionered: RDD[(String, (String, Int))] = subjectInfo.partitionBy(partitioner)

        // Each partition now holds one subject: sort it in descending order and keep the top 3
        val res: RDD[(String, (String, Int))] = partitionered.mapPartitions(it => {
          it.toList.sortBy(_._2._2).reverse.take(3).iterator
        })

        res.saveAsTextFile("output2")

        sc.stop()
      }
    }

    // Custom partitioner
    class SubjectPartitioner(subjects: Array[String]) extends Partitioner {
      // A Map that stores the partition number of each subject
      private val subjectAndPartition = new mutable.HashMap[String, Int]()

      // Assign each subject its own partition number
      var i = 0
      for (subject <- subjects) {
        subjectAndPartition += (subject -> i)
        i += 1
      }

      /**
       * Number of partitions
       */
      override def numPartitions: Int = subjects.length

      /**
       * Partition number for a key
       */
      override def getPartition(key: Any): Int = {
        // Look up the partition number; unknown keys go to partition 0
        subjectAndPartition.getOrElse(key.toString, 0)
      }
    }
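The lookup table inside the partitioner above can also be built without a mutable map and a counter. The following plain-Scala sketch shows that variant in isolation; `SubjectIndexSketch`, `partitionTable`, and `partitionOf` are hypothetical names, and the sketch deliberately leaves out the Spark `Partitioner` base class so it runs on its own.

```scala
object SubjectIndexSketch {
  // Each subject gets its own index, in the order the subjects are listed.
  def partitionTable(subjects: Array[String]): Map[String, Int] =
    subjects.zipWithIndex.toMap

  // Same fallback as in the partitioner above: unknown keys go to partition 0.
  def partitionOf(table: Map[String, Int], key: Any): Int =
    table.getOrElse(key.toString, 0)

  def main(args: Array[String]): Unit = {
    val table = partitionTable(Array("java.learn.com", "ui.learn.com", "bigdata.learn.com"))
    println(partitionOf(table, "ui.learn.com"))
    println(partitionOf(table, "unknown.learn.com"))
  }
}
```

Because every subject maps to a distinct index, no two subjects can land in the same partition, which is the property the hash-based approach cannot guarantee.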


6.2 Checking for hash collisions

    package Day04

    /*
     * @Description: check for hash collisions
     * ClassName HashTest01
     * @Author: WCH
     * @CreateDate: 2019/1/4 15:01
     * @Version: 1.0
     */
    object HashTest01 {
      def main(args: Array[String]): Unit = {
        val key = "ui.learn.com"
        val numPartitions = 3

        // Modulo of the hash code, shifted into the range [0, numPartitions) if negative
        val rawMod: Int = key.hashCode % numPartitions
        val num: Int = rawMod + (if (rawMod < 0) numPartitions else 0)

        println(num)
      }
    }
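The same arithmetic can be applied to several keys at once to see why collisions are unavoidable here. This plain-Scala sketch assumes the five subject host names used earlier in the article and three partitions; `HashCollisionSketch`, `nonNegativeMod`, and `assign` are names chosen for illustration.

```scala
object HashCollisionSketch {
  // Same arithmetic as the check above: a modulo that never returns a negative number.
  def nonNegativeMod(hash: Int, numPartitions: Int): Int = {
    val rawMod = hash % numPartitions
    rawMod + (if (rawMod < 0) numPartitions else 0)
  }

  // Groups the keys by the partition number their hash code maps to.
  def assign(keys: Seq[String], numPartitions: Int): Map[Int, Seq[String]] =
    keys.groupBy(key => nonNegativeMod(key.hashCode, numPartitions))

  def main(args: Array[String]): Unit = {
    val hosts = Seq("java.learn.com", "ui.learn.com", "bigdata.learn.com",
      "android.learn.com", "h5.learn.com")
    // Five keys cannot fit into three partitions without at least two sharing one.
    assign(hosts, 3).foreach { case (partition, keys) => println(s"$partition -> $keys") }
  }
}
```

With more keys than partitions, at least one partition must hold several subjects, so a per-partition top 3 would mix subjects. That is the motivation for the custom partitioner in 6.1.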


7 DAG

7.1 The DAG concept

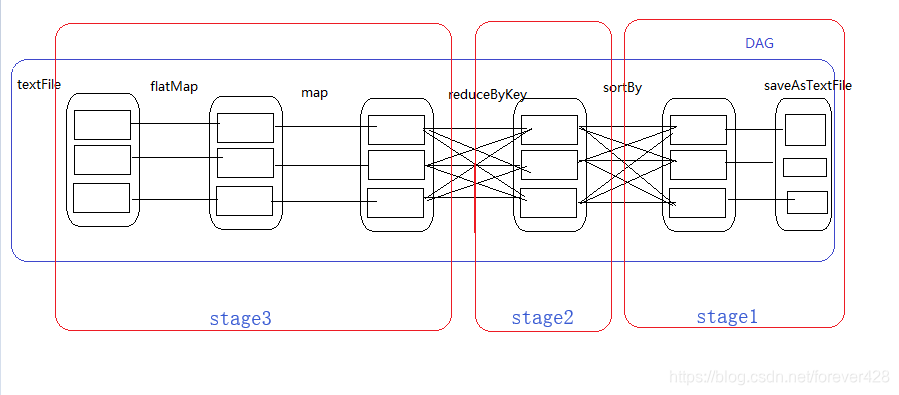

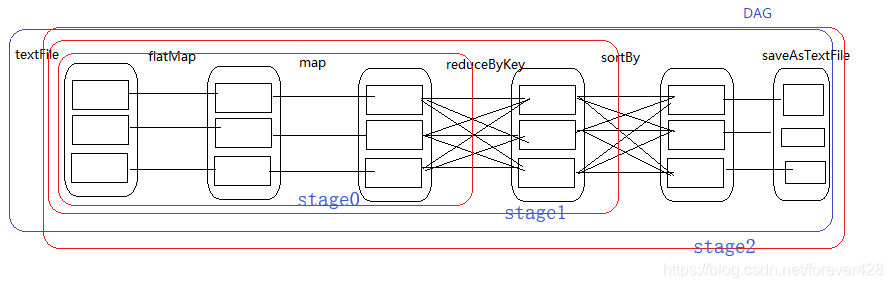

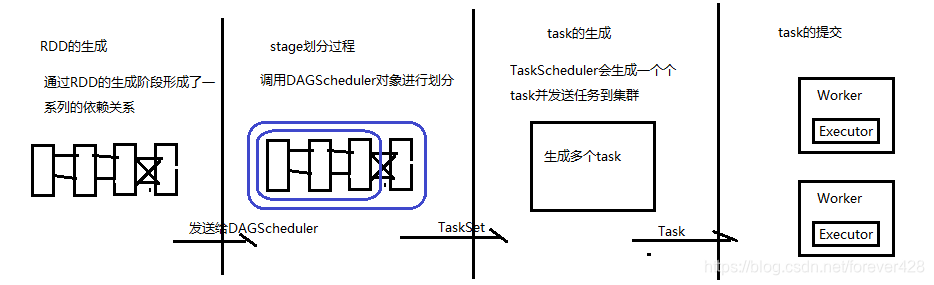

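A DAG (Directed Acyclic Graph) is formed when the original RDD goes through a series of transformations. The DAG is divided into Stages according to the dependencies between RDDs. For narrow dependencies, the partition transformations are computed within a single Stage. For wide dependencies, the Shuffle means the next computation can start only after the parent RDD has been fully processed, so wide dependencies are the basis for dividing Stages. The purpose of dividing Stages is to generate Tasks.

7.2 How the DAG is divided

The division is recursive. Starting from the final RDD, look up its parent RDDs and check the dependency between them. If the dependency is wide, cut there: the RDDs visited so far form one stage, and the parent belongs to an earlier stage. If the dependency is narrow, keep going to the parent's own parents. The recursion ends when an RDD has no parent, and the RDDs that remain are grouped into one last stage.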
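The recursion can be sketched on a toy lineage in plain Scala. This is a simplified model, not the DAGScheduler's code: `Rdd`, `Narrow`, `Wide`, and `splitStages` are hypothetical types and names, the example lineage mirrors the variable names of Case 1, and shared parents (diamond-shaped graphs) are not deduplicated.

```scala
object StageSplitSketch {
  sealed trait Dep { def parent: Rdd }
  final case class Narrow(parent: Rdd) extends Dep
  final case class Wide(parent: Rdd) extends Dep
  final case class Rdd(name: String, deps: List[Dep] = Nil)

  // Returns the stages as lists of RDD names; earlier stages come first.
  def splitStages(finalRdd: Rdd): List[List[String]] = {
    // Walks back from `rdd`, collecting the members of the current stage
    // and the parents that sit behind a wide dependency.
    def collect(rdd: Rdd): (List[String], List[Rdd]) =
      rdd.deps.foldLeft((List(rdd.name), List.empty[Rdd])) {
        case ((members, boundaries), Narrow(p)) =>
          val (m, b) = collect(p) // narrow: the parent joins the current stage
          (members ++ m, boundaries ++ b)
        case ((members, boundaries), Wide(p)) =>
          (members, boundaries :+ p) // wide: cut here, the parent starts another stage
      }

    val (members, boundaries) = collect(finalRdd)
    boundaries.flatMap(splitStages) :+ members
  }

  // Lineage shaped like Case 1: two shuffles (reduceByKey and groupByKey).
  def exampleLineage: Rdd = {
    val logs = Rdd("logs")
    val url = Rdd("url", List(Narrow(logs)))
    val tupUrl = Rdd("tupUrl", List(Narrow(url)))
    val reducedUrl = Rdd("reducedUrl", List(Wide(tupUrl)))
    val subjectAndUrlInfo = Rdd("subjectAndUrlInfo", List(Narrow(reducedUrl)))
    val grouped = Rdd("grouped", List(Wide(subjectAndUrlInfo)))
    val sorted = Rdd("sorted", List(Narrow(grouped)))
    Rdd("res", List(Narrow(sorted)))
  }

  def main(args: Array[String]): Unit =
    splitStages(exampleLineage).zipWithIndex.foreach {
      case (stage, index) => println(s"stage $index: ${stage.mkString(", ")}")
    }
}
```

Two wide dependencies yield three stages in this model, and each RDD produced by a shuffle lands in the stage after the cut.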

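- RDD generation: building the RDDs produces a chain of dependencies, which is handed to the DAGScheduler.

- Stage division: the DAGScheduler divides the DAG into stages and sends each stage on as a TaskSet.

- Task generation: the TaskScheduler generates the individual tasks and sends them to the cluster.

- Task submission: the Executors on the workers receive the tasks one by one and process them.

- RDD generation happens on the Driver.

- Stage division happens on the Driver.

- Task generation happens on the Driver.

- Task submission happens on the Driver.

- Task execution happens on the Executors.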

8 Creating objects and serialization when running tasks

Suppose we have the following rules and need to use them inside an operator:

    class Rules extends Serializable {
      val rulesMap = Map("xiaoli" -> 25, "xiaofang" -> 27)
    }


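8.1 Creating the object inside the operator

When the object is created inside the operator, it does not need to be serializable: every thread on every node creates a new object for each element it processes. Printing the objects shows that each one has a different address. The object is created where it is used and never has to be transferred, so no serialization is needed.

    import java.net.InetAddress

    import org.apache.spark.{SparkConf, SparkContext}

    /**
     * One object is created per record, which is extremely inefficient;
     * creating objects inside an operator is not recommended.
     */
    object SerializeTest_1 {
      def main(args: Array[String]): Unit = {
        val conf = new SparkConf().setAppName("SerTest").setMaster("local[2]")
        val sc = new SparkContext(conf)

        val lines = sc.parallelize(Array("xiaoli", "xiaofang", "xiaolin"))

        // The function passed to map runs inside a Task on an Executor
        val res = lines.map(x => {
          // This object is created on the Executor
          val rules = new Rules
          // Host name of the task, i.e. the node the task runs on
          val hostname = InetAddress.getLocalHost.getHostName
          // Name of the current thread
          val threadName = Thread.currentThread().getName
          // rules is used on the Executor
          (hostname, threadName, rules.rulesMap.getOrElse(x, 0), rules.toString)
        })

        println(res.collect.toBuffer)

        sc.stop()
      }
    }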


8.2 Creating the object outside the operator

When the object is created outside the operator, it is created once on the Driver. When the job runs, the Driver serializes the object and sends it to the Executors, which deserialize it, so each task works on its own copy. The Rules class must therefore extend the Serializable trait.

    import java.net.InetAddress

    import org.apache.spark.{SparkConf, SparkContext}

    /**
     * The serialization approach
     */
    object SerializeTest_2 {
      def main(args: Array[String]): Unit = {
        val conf = new SparkConf().setAppName("SerTest").setMaster("local[2]")
        val sc = new SparkContext(conf)

        val lines = sc.parallelize(Array("xiaoli", "xiaofang", "xiaolin"))

        // This object is created on the Driver
        val rules = new Rules
        println("Hash value on the Driver: " + rules.toString)

        // The function passed to map runs inside a Task on an Executor
        val res = lines.map(x => {
          // Host name of the task, i.e. the node the task runs on
          val hostname = InetAddress.getLocalHost.getHostName
          // Name of the current thread
          val threadName = Thread.currentThread().getName
          // rules is used on the Executor
          (hostname, threadName, rules.rulesMap.getOrElse(x, 0), rules.toString)
        })

        println(res.collect.toBuffer)

        sc.stop()
      }
    }
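What the Driver-to-Executor hand-off does to an object can be imitated locally with plain Java serialization. The sketch below assumes nothing about Spark's internals: `SerializationRoundTripSketch` and `roundTrip` are hypothetical names, and the nested `Rules` class simply repeats the definition from the start of this section.

```scala
import java.io.{ByteArrayInputStream, ByteArrayOutputStream, ObjectInputStream, ObjectOutputStream}

object SerializationRoundTripSketch {
  // Same shape as the Rules class defined at the start of this section.
  class Rules extends Serializable {
    val rulesMap = Map("xiaoli" -> 25, "xiaofang" -> 27)
  }

  // Serializes a value to bytes and reads it back, as a sender and a receiver would.
  def roundTrip[T <: AnyRef](value: T): T = {
    val buffer = new ByteArrayOutputStream()
    val out = new ObjectOutputStream(buffer)
    try out.writeObject(value) finally out.close()

    val in = new ObjectInputStream(new ByteArrayInputStream(buffer.toByteArray))
    try in.readObject().asInstanceOf[T] finally in.close()
  }

  def main(args: Array[String]): Unit = {
    val original = new Rules
    val copy = roundTrip(original)
    println(copy eq original)                   // the copy is a different instance
    println(copy.rulesMap == original.rulesMap) // but it carries the same data
  }
}
```

If `Rules` did not extend `Serializable`, `writeObject` would throw a `java.io.NotSerializableException`, which is the local counterpart of the requirement described above.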


8.3 Using a singleton

With a singleton, the object can be shared globally when running locally: multiple threads share one object, so there is only one object address.

    import java.net.InetAddress

    /**
     * Third approach
     */
    // object ObjectRules extends Serializable {
    //   val rulesMap = Map("xiaoli" -> 25, "xiaofang" -> 27)
    // }

    /**
     * Fourth approach
     */
    object ObjectRules {
      val rulesMap = Map("xiaoli" -> 25, "xiaofang" -> 27)

      println("Hostname: " + InetAddress.getLocalHost.getHostName)
    }
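Two properties of a Scala `object` matter here: its body runs only once, on first access, and every reference yields the same instance. The following plain-Scala sketch checks both on a single thread; `SingletonInitSketch`, `SharedRules`, and `initCount` are hypothetical names used only for illustration.

```scala
object SingletonInitSketch {
  // Counts how many times the body of SharedRules has run.
  var initCount = 0

  object SharedRules {
    initCount += 1 // runs once, the first time SharedRules is accessed
    val rulesMap = Map("xiaoli" -> 25, "xiaofang" -> 27)
  }

  def main(args: Array[String]): Unit = {
    val a = SharedRules
    val b = SharedRules
    println(a eq b)    // both names refer to the same instance
    println(initCount) // the initializer ran a single time
  }
}
```

This is also why the println inside ObjectRules above is useful: it prints on whichever machine first touches the object.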


8.3.1 Singleton that extends the Serializable trait

    import java.net.InetAddress

    import org.apache.spark.{SparkConf, SparkContext}

    /**
     * The singleton-object approach
     */
    object SerializeTest_3 {
      def main(args: Array[String]): Unit = {
        val conf = new SparkConf().setAppName("SerTest").setMaster("local[2]")
        val sc = new SparkContext(conf)

        val lines = sc.parallelize(Array("xiaoli", "xiaofang", "xiaolin"))

        // This object is created on the Driver
        val rules = ObjectRules
        println("Hash value on the Driver: " + rules.toString)

        // The function passed to map runs inside a Task on an Executor
        val res = lines.map(x => {
          // Host name of the task, i.e. the node the task runs on
          val hostname = InetAddress.getLocalHost.getHostName
          // Name of the current thread
          val threadName = Thread.currentThread().getName
          // rules is used on the Executor
          (hostname, threadName, rules.rulesMap.getOrElse(x, 0), rules.toString)
        })

        println(res.collect.toBuffer)
        // res.saveAsTextFile("hdfs://node01:9000/out-20181128-1")

        sc.stop()
      }
    }


8.3.2 Singleton that does not extend the Serializable trait

    import java.net.InetAddress

    import org.apache.spark.{SparkConf, SparkContext}

    /**
     * The singleton-object approach
     */
    object SerializeTest_3 {
      def main(args: Array[String]): Unit = {
        val conf = new SparkConf().setAppName("SerTest").setMaster("local[2]")
        val sc = new SparkContext(conf)

        val lines = sc.parallelize(Array("xiaoli", "xiaofang", "xiaolin"))

        println("Hash value on the Driver: " + ObjectRules.toString)

        // The function passed to map runs inside a Task on an Executor
        val res = lines.map(x => {
          // Host name of the task, i.e. the node the task runs on
          val hostname = InetAddress.getLocalHost.getHostName
          // Name of the current thread
          val threadName = Thread.currentThread().getName
          // ObjectRules is referenced directly, so it is initialized on the Executor.
          // Capturing a Driver-side `val rules = ObjectRules` in this closure would
          // require serializing it, which fails when ObjectRules is not Serializable.
          (hostname, threadName, ObjectRules.rulesMap.getOrElse(x, 0), ObjectRules.toString)
        })

        println(res.collect.toBuffer)
        // res.saveAsTextFile("hdfs://node01:9000/out-20181128-1")

        sc.stop()
      }
    }


</div>

<link href="https://csdnimg.cn/release/phoenix/mdeditor/markdown_views-2011a91181.css" rel="stylesheet">

</div>

</div>

<div id="content_views" class="markdown_views prism-github-gist">
