A. System:

| Component | Version | Download |
| --- | --- | --- |
| OS | centos7.2 | |
| Hadoop | hadoop-2.6.0-cdh5.15.1 | http://archive.cloudera.com/cdh5/cdh/5/hadoop-2.6.0-cdh5.15.1.tar.gz |

B. Role assignment (edit /etc/hostname and /etc/hosts on every node):

192.168.2.199 bigdata000.tfpay.com bigdata000

192.168.2.201 bigdata01.tfpay.com bigdata01

192.168.2.202 bigdata02.tfpay.com bigdata02

bigdata000 NameNode DataNode ResourceManager Master

bigdata01 DataNode NodeManager

bigdata02 DataNode NodeManager
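Before continuing, it can help to confirm that each name really resolves to the address listed above. The following is a minimal sketch, not part of the original procedure: `check_host` is a hypothetical helper, and it assumes `getent` and `awk` are available (they normally are on CentOS).

```shell
# check_host <expected-ip> <name>
# Succeeds only if <name> resolves to <expected-ip>.
check_host() {
  local expected="$1" name="$2" actual
  # getent consults /etc/hosts (and DNS) the way ordinary programs do;
  # the first column of its output is the resolved address.
  actual="$(getent hosts "$name" | awk '{print $1; exit}')"
  if [ "$actual" = "$expected" ]; then
    echo "OK   $name -> $actual"
  else
    echo "FAIL $name -> ${actual:-unresolved} (expected $expected)"
    return 1
  fi
}

# Example (run on every node):
#   check_host 192.168.2.199 bigdata000
#   check_host 192.168.2.201 bigdata01
#   check_host 192.168.2.202 bigdata02
```

A name that resolves to 127.0.0.1 on its own host is reported as a failure, which is usually the case worth catching before starting the daemons.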

Required files:

CDH-5.15.1-1.cdh5.15.1.p0.4-el7.parcel

CDH-5.15.1-1.cdh5.15.1.p0.4-el7.parcel.sha1

cloudera-manager-centos7-cm5.15.1_x86_64.tar.gz

creat_sh.sh

hadoop-2.6.0-cdh5.15.1.tar.gz

hadoop-native-64-2.6.0.tar

jdk-8u191-linux-x64.rpm

manifest.json

MySQL-5.6.26-1.linux_glibc2.5.x86_64.rpm-bundle.tar

mysql-connector-java-5.1.47-bin.jar

mysql-connector-java-5.1.47.zip

mysql-connector-java-6.0.2.jar

setup.sh

C. Environment setup

1. Passwordless SSH

ssh-keygen -t rsa   # run on every node

ssh-copy-id -i ~/.ssh/id_rsa.pub bigdata01

ssh-copy-id -i ~/.ssh/id_rsa.pub bigdata02

ssh-copy-id -i ~/.ssh/id_rsa.pub bigdata000

Verify (each login should succeed without a password prompt):

ssh bigdata000

ssh bigdata01

ssh bigdata02
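The manual logins above can also be checked non-interactively. This is a sketch under assumptions: `check_passwordless_ssh` is a hypothetical helper name, and it relies on the standard OpenSSH options `BatchMode` and `ConnectTimeout`. In batch mode ssh never prompts, so a host whose key has not yet been accepted into known_hosts will also show up as a failure.

```shell
# check_passwordless_ssh <host>...
# Prints OK/FAIL per host; returns non-zero if any host failed.
check_passwordless_ssh() {
  local host failed=0
  for host in "$@"; do
    # BatchMode=yes disables password prompts, so the command fails
    # instead of hanging when key-based login is not set up.
    if ssh -o BatchMode=yes -o ConnectTimeout=5 "$host" true; then
      echo "OK   $host"
    else
      echo "FAIL $host"
      failed=1
    fi
  done
  return "$failed"
}

# Example:
#   check_passwordless_ssh bigdata000 bigdata01 bigdata02
```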

2. JDK installation:

rpm -ivh --prefix=/app/ ./jdk-8u191-linux-x64.rpm

Configure the environment variables:

Add to ~/.bash_profile:

export JAVA_HOME=/app/jdk1.8.0_191-amd64/

export PATH=$PATH:$JAVA_HOME/bin

Apply the environment variables:

source ~/.bash_profile
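If these steps are re-run, the same export lines can pile up in ~/.bash_profile. A small sketch of one way to avoid that follows; `append_once` is a hypothetical helper, not something the original notes use.

```shell
# append_once <file> <line>
# Appends <line> to <file> unless an identical line is already there.
append_once() {
  local file="$1" line="$2"
  # -x matches the whole line, -F treats the pattern as a fixed string,
  # so characters such as $ and / are compared literally.
  grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# Example (single quotes keep $PATH and $JAVA_HOME unexpanded in the file):
#   append_once ~/.bash_profile 'export JAVA_HOME=/app/jdk1.8.0_191-amd64/'
#   append_once ~/.bash_profile 'export PATH=$PATH:$JAVA_HOME/bin'
```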

Verify:

java -version

java version "1.8.0_191"

Java(TM) SE Runtime Environment (build 1.8.0_191-b12)

Java HotSpot(TM) 64-Bit Server VM (build 25.191-b12, mixed mode)

javac -version

javac 1.8.0_191

3. Cluster setup

Unpack Hadoop

mkdir -p /app

chmod 777 /app

tar -zxvf ./hadoop-2.6.0-cdh5.15.1.tar.gz -C /app/

tar -xvf /mnt/bi/hadoop-native-64-2.6.0.tar -C /app/hadoop-2.6.0-cdh5.15.1/lib/native/

Configure the environment variables:

Add to ~/.bash_profile:

export HADOOP_HOME=/app/hadoop-2.6.0-cdh5.15.1

export PATH=$PATH:$HADOOP_HOME/bin

Apply the environment variables:

source ~/.bash_profile

Verify:

hadoop

Usage: hadoop [--config confdir] COMMAND

where COMMAND is one of:

fs run a generic filesystem user client

.....................

Configure hadoop-env.sh and the site XML files (paths below are relative to $HADOOP_HOME)

etc/hadoop/hadoop-env.sh

Add:

export JAVA_HOME=/app/jdk1.8.0_191-amd64/

etc/hadoop/core-site.xml:

<configuration>

<property>

<name>fs.defaultFS</name>

<!--<name>fs.default.name</name>-->

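<!-- Appending the port (hdfs://bigdata000:8020) did not work here, for reasons unknown; with no port given, the default NameNode RPC port 8020 is used -->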

<value>hdfs://bigdata000</value>

</property>

<!-- Use a dedicated temp directory that is not wiped on reboot -->

<property>

<name>hadoop.tmp.dir</name>

<value>/app/hadoop-2.6.0-cdh5.15.1/tmp</value>

</property>

</configuration>

etc/hadoop/hdfs-site.xml:

<configuration>

<!--

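The replication factor defaults to 3. With three DataNodes the default works, so the override below stays commented out (a single-node, pseudo-distributed setup would set it to 1).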

-->

<!--

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

-->

<!--

NameNode metadata directory

-->

<property>

<name>dfs.namenode.name.dir</name>

<value>/app/hadoop-2.6.0-cdh5.15.1/tmp/dfs/name</value>

</property>

<!--

DataNode block storage directory

-->

<property>

<name>dfs.datanode.data.dir</name>

<value>/app/hadoop-2.6.0-cdh5.15.1/tmp/dfs/data</value>

</property>

</configuration>
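Once core-site.xml and hdfs-site.xml are saved, the values Hadoop actually picks up can be printed with `hdfs getconf -confKey`. The wrapper below is only a sketch (`print_effective_conf` is a hypothetical name); it assumes `hdfs` is on the PATH, and it is limited to keys from core-site.xml and hdfs-site.xml.

```shell
# print_effective_conf <key>...
# Prints key=value for each configuration key as resolved by Hadoop.
print_effective_conf() {
  local key
  for key in "$@"; do
    printf '%s=%s\n' "$key" "$(hdfs getconf -confKey "$key")"
  done
}

# Example:
#   print_effective_conf fs.defaultFS hadoop.tmp.dir \
#       dfs.namenode.name.dir dfs.datanode.data.dir
```

A typo in a property name tends to show up here as an unexpected default value rather than as an error, which is why comparing the output against the files is useful.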

etc/hadoop/yarn-site.xml:

<!--

Use MapReduce

-->

<configuration>

<!-- Site specific YARN configuration properties -->

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.resourcemanager.hostname</name>

<value>bigdata000</value>

</property>

</configuration>

etc/hadoop/mapred-site.xml (copy it from etc/hadoop/mapred-site.xml.template):

<configuration>

<!--

Framework that MapReduce jobs run on

-->

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

</configuration>

etc/hadoop/slaves (list the hostname of each slave node):

bigdata000

bigdata01

bigdata02

4. Distribute from the master to the slaves

scp -r /app root@bigdata02:/

scp -r /root/.bash_profile root@bigdata02:/root

scp -r /app root@bigdata01:/

scp -r /root/.bash_profile root@bigdata01:/root
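With more than a couple of slaves, the same copy can be driven from the slaves file. This is a sketch only: `remote_slaves` and `distribute_to_slaves` are hypothetical helpers, and the scp targets mirror the commands above. Note that copying /app after the cluster has already run also copies tmp/dfs/data, so this is best done before the first format and start.

```shell
# remote_slaves <slaves-file> <master-host>
# Prints every host in the slaves file except the master,
# skipping blank lines and comment lines.
remote_slaves() {
  local slaves_file="$1" master="$2"
  grep -v -e '^[[:space:]]*$' -e '^[[:space:]]*#' "$slaves_file" \
    | grep -vxF -- "$master"
}

# distribute_to_slaves <slaves-file> <master-host>
# Copies the install tree and the profile to each remote slave.
distribute_to_slaves() {
  local slaves_file="$1" master="$2" host
  for host in $(remote_slaves "$slaves_file" "$master"); do
    scp -r /app "root@${host}:/"
    scp /root/.bash_profile "root@${host}:/root"
  done
}

# Example (run on bigdata000):
#   distribute_to_slaves /app/hadoop-2.6.0-cdh5.15.1/etc/hadoop/slaves bigdata000
```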

5. Format the NameNode (on bigdata000, once only)

hdfs namenode -format
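Because formatting a second time generates a new clusterID, a guard around the command can prevent accidental re-formatting. The sketch below is an illustration, not part of the original steps: `format_namenode_once` is a hypothetical helper, and it assumes the name directory is the one set in dfs.namenode.name.dir.

```shell
# format_namenode_once <name-dir>
# Runs `hdfs namenode -format` only if <name-dir> holds no VERSION file yet.
format_namenode_once() {
  local name_dir="$1"
  if [ -f "$name_dir/current/VERSION" ]; then
    # A VERSION file means the directory was formatted before; leave it alone.
    echo "NameNode already formatted: $name_dir" >&2
    return 0
  fi
  hdfs namenode -format
}

# Example:
#   format_namenode_once /app/hadoop-2.6.0-cdh5.15.1/tmp/dfs/name
```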

6. Start and stop

/app/hadoop-2.6.0-cdh5.15.1/sbin/stop-all.sh

/app/hadoop-2.6.0-cdh5.15.1/sbin/start-all.sh

Verify with jps:

bigdata000:

3620 ResourceManager

3717 NodeManager

5461 Jps

3450 SecondaryNameNode

3197 NameNode

3294 DataNode

bigdata01:

1923 NodeManager

1819 DataNode

2253 Jps

bigdata02:

1639 DataNode

2071 Jps

1743 NodeManager
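Comparing the jps listings by eye gets tedious, so here is a sketch that reports which expected daemons are absent. `missing_daemons` is a hypothetical helper; it reads jps output on stdin and compares the class-name column exactly.

```shell
# missing_daemons <daemon>...
# Reads `jps` output on stdin and prints each expected daemon that is absent.
# Returns non-zero if anything is missing.
missing_daemons() {
  local running name missing=0
  # Column 2 of jps output is the main class name. It is compared as a
  # whole line so that "NameNode" does not match "SecondaryNameNode".
  running="$(awk '{print $2}')"
  for name in "$@"; do
    if ! printf '%s\n' "$running" | grep -qxF -- "$name"; then
      echo "$name"
      missing=1
    fi
  done
  return "$missing"
}

# Example (on bigdata000):
#   jps | missing_daemons NameNode SecondaryNameNode DataNode ResourceManager NodeManager
# Example (on bigdata01 or bigdata02):
#   jps | missing_daemons DataNode NodeManager
```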

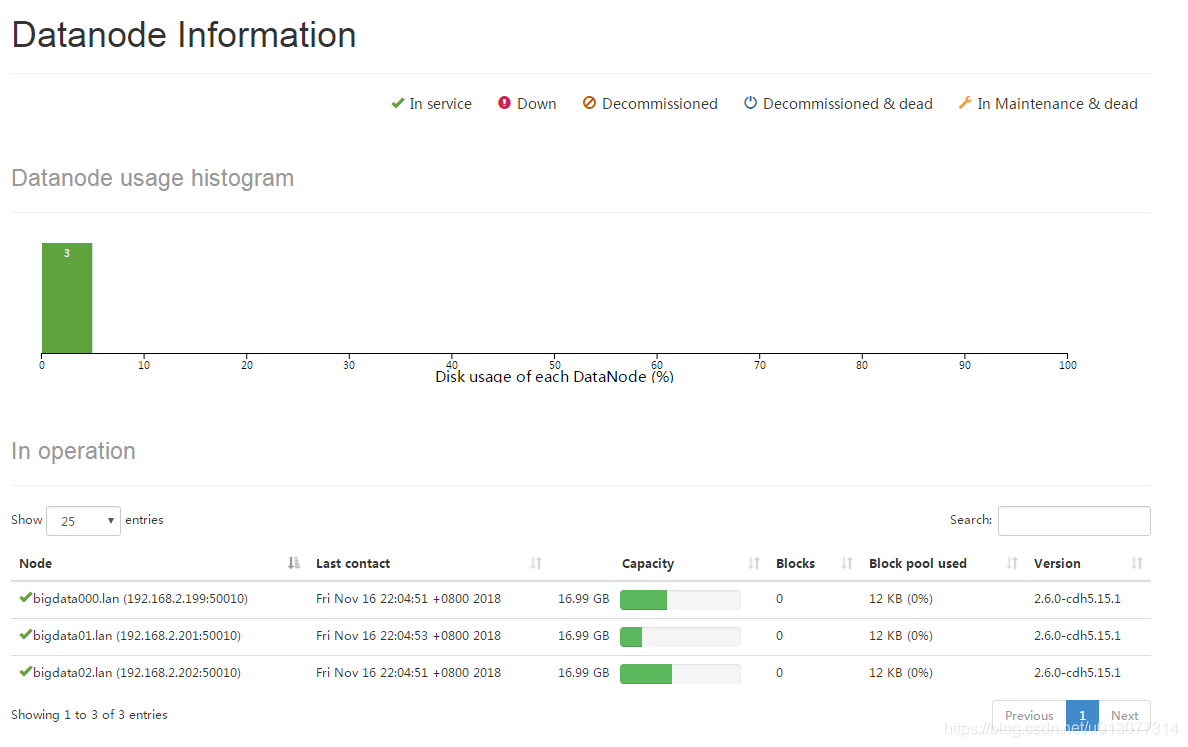

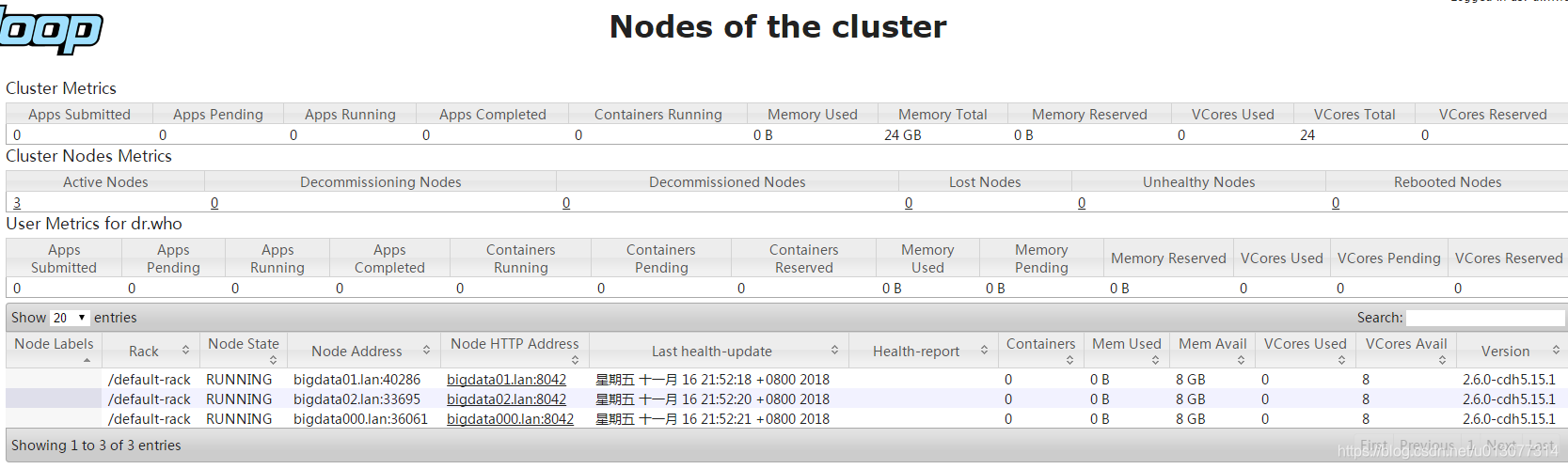

使用Web Interfaces验证:

使用命令行验证:

[root@bigdata000 ~]# hadoop fs -put /tmp/yarn-root-nodemanager.pid /

[root@bigdata000 ~]# hadoop fs -ls /

Found 1 items

-rw-r--r-- 3 root supergroup 5 2018-11-17 01:56 /yarn-root-nodemanager.pid

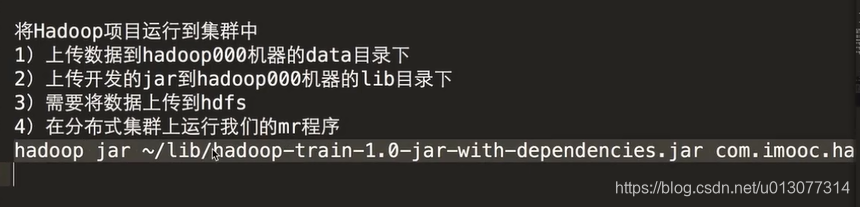

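7. Using the Hadoop cluster

8. Troubleshooting

Running the format command (hdfs namenode -format) more than once causes errors:

a. DataNodes fail to start

After reformatting, the NameNode gets a new clusterID that no longer matches the one stored on the DataNodes.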

Fix: read the clusterID (here clusterID=CID-c043fc46-adf6-4ad9-ab73-a66e75e32567) from /app/hadoop-2.6.0-cdh5.15.1/tmp/dfs/name/current/VERSION, write it into /app/hadoop-2.6.0-cdh5.15.1/tmp/dfs/data/current/VERSION on every DataNode, then restart the cluster.
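The manual edit can be scripted. The following is a sketch under assumptions: `version_value` and `set_cluster_id` are hypothetical helpers, the VERSION files are treated as plain key=value text, and the cluster is assumed to be stopped while the files are edited. A .bak copy is kept next to each edited file.

```shell
# version_value <VERSION-file> <key>
# Prints the value of <key> from a key=value VERSION file.
version_value() {
  local file="$1" key="$2"
  sed -n "s/^${key}=//p" "$file"
}

# set_cluster_id <datanode-VERSION-file> <clusterID>
# Rewrites the clusterID line, keeping the original as <file>.bak.
set_cluster_id() {
  local data_version="$1" cid="$2"
  [ -n "$cid" ] || { echo "empty clusterID" >&2; return 1; }
  cp "$data_version" "${data_version}.bak" || return 1
  # Only the clusterID line changes; every other line is copied through.
  sed "s/^clusterID=.*/clusterID=${cid}/" "${data_version}.bak" > "$data_version"
}

# Example:
#   on bigdata000:
#     cid="$(version_value /app/hadoop-2.6.0-cdh5.15.1/tmp/dfs/name/current/VERSION clusterID)"
#   on each DataNode, with the value obtained above:
#     set_cluster_id /app/hadoop-2.6.0-cdh5.15.1/tmp/dfs/data/current/VERSION "$cid"
```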

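b. The web UI shows only one DataNode

When the slaves were populated by scp from the master, /app/hadoop-2.6.0-cdh5.15.1/tmp/dfs/data/current/VERSION was copied along with everything else, so every DataNode carries the same storageID and only one of them is shown. Give each DataNode a distinct storageID, then restart the cluster.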
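To confirm that this is the problem, the VERSION files from all DataNodes can be compared for repeated values. This is a sketch: `duplicate_version_values` is a hypothetical helper, and it assumes each node's VERSION file has first been copied to a local path. If the files also contain a datanodeUuid line, the same check can be run with that key.

```shell
# duplicate_version_values <key> <VERSION-file>...
# Prints every value of <key> that occurs in more than one of the files.
# Prints nothing when all values are distinct.
duplicate_version_values() {
  local key="$1" file
  shift
  for file in "$@"; do
    sed -n "s/^${key}=//p" "$file"
  done | sort | uniq -d
}

# Example (run on bigdata000; the local file names are arbitrary):
#   for h in bigdata000 bigdata01 bigdata02; do
#     ssh "$h" cat /app/hadoop-2.6.0-cdh5.15.1/tmp/dfs/data/current/VERSION > "VERSION.$h"
#   done
#   duplicate_version_values storageID VERSION.bigdata000 VERSION.bigdata01 VERSION.bigdata02
```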