Copyright notice: this is an original article by the blogger. Please credit the source when reposting: http://blog.csdn.net/liudongdong0909. https://blog.csdn.net/liudongdong0909/article/details/78380779

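Talk without practice gets you nowhere, so let's roll up our sleeves and get to work! This article is a learning exercise ... using hadoop-2.4.0.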

Step 1: Prepare the required environment

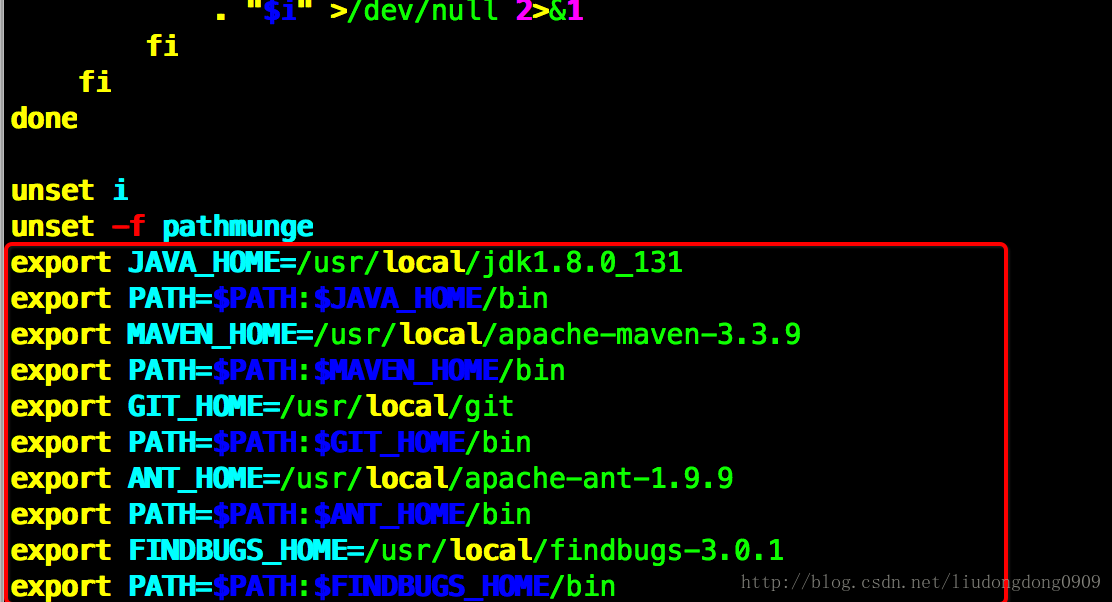

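yum install cmake lzo-devel zlib-devel gcc gcc-c++ autoconf automake libtool ncurses-devel openssl-devel libXtst

Items 1 to 4 in the table below only need to be extracted and then added to /etc/profile, which is routine and not covered in detail here.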

| No. | Name | Version | Download URL | Install path |
|---|---|---|---|---|
| 1 | java | 1.8.0 | http://download.oracle.com/otn-pub/java/jdk/8u151-b12/e758a0de34e24606bca991d704f6dcbf/jdk-8u151-linux-x64.tar.gz | /usr/local/jdk1.8.0 |
| 2 | maven | 3.3.9 | https://archive.apache.org/dist/maven/maven-3/3.3.9/binaries/apache-maven-3.3.9-bin.tar.gz | /usr/local/apache-maven-3.3.9 |
| 3 | apache-ant | 1.9.9 | https://archive.apache.org/dist/ant/binaries/apache-ant-1.9.9-bin.tar.gz | /usr/local/apache-ant-1.9.9 |
| 4 | findbugs | 3.0.1 | http://prdownloads.sourceforge.net/findbugs/findbugs-3.0.1.tar.gz?download | /usr/local/findbugs-3.0.1 |
| 5 | protobuf | 2.5.0 | https://github.com/google/protobuf/releases/download/v2.5.0/protobuf-2.5.0.tar.gz | must be compiled and installed |
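For the /etc/profile step, a minimal sketch of the lines that could be appended is shown here. The install paths are taken from the table; the variable names (MAVEN_HOME, ANT_HOME, FINDBUGS_HOME) are common conventions and an assumption on my part, not something the original setup is known to have used.

```shell
# Sketch of lines to append to /etc/profile (paths from the table above).
# The variable names are conventional choices, assumed for illustration.
export JAVA_HOME=/usr/local/jdk1.8.0
export MAVEN_HOME=/usr/local/apache-maven-3.3.9
export ANT_HOME=/usr/local/apache-ant-1.9.9
export FINDBUGS_HOME=/usr/local/findbugs-3.0.1

# Put each tool's bin directory ahead of the existing PATH.
export PATH="$JAVA_HOME/bin:$MAVEN_HOME/bin:$ANT_HOME/bin:$FINDBUGS_HOME/bin:$PATH"
```

After editing the file, `source /etc/profile` (or a new login shell) makes the settings take effect in the current session.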

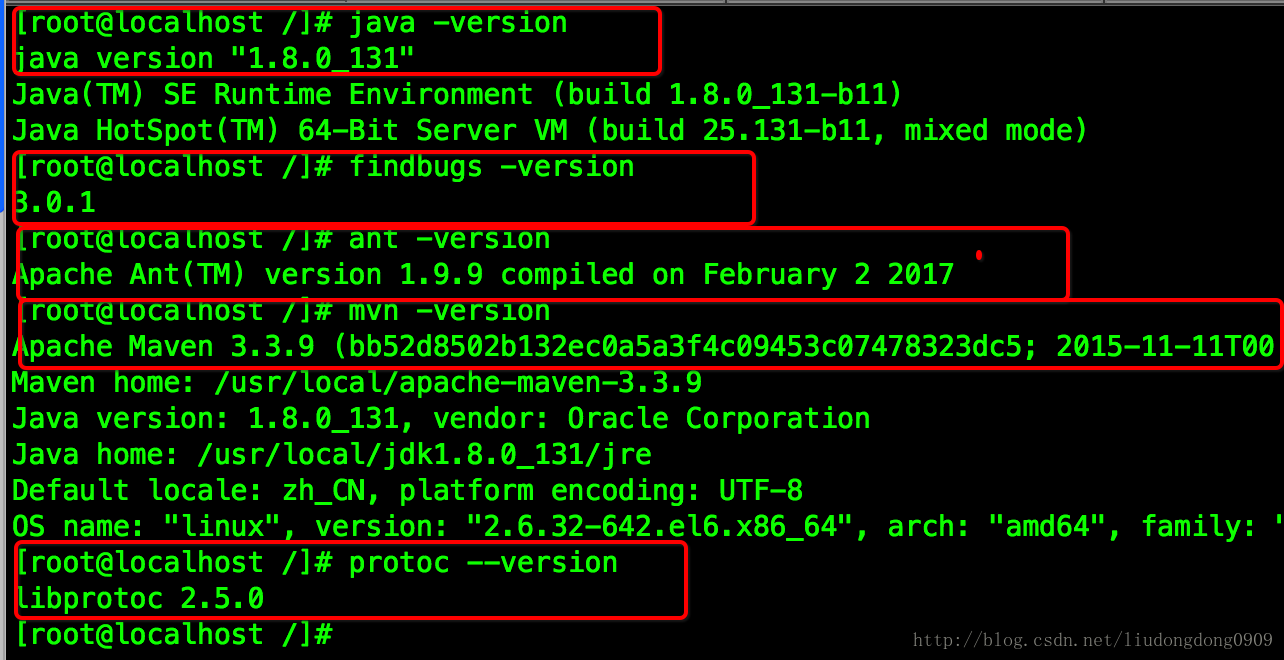

Check that the installation succeeded:
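One way to run this check from the shell is sketched below. The helper name `check_tool` and the list of tools are my own choices for illustration; the loop only reports whether each command can be found on PATH and does not verify versions.

```shell
# check_tool is a hypothetical helper: it reports whether a command is on PATH.
check_tool() {
    if command -v "$1" >/dev/null 2>&1; then
        echo "found:   $1 -> $(command -v "$1")"
        return 0
    fi
    echo "missing: $1"
    return 1
}

# Tools from the table plus two of the yum packages; adjust the list as needed.
missing=0
for tool in java mvn ant findbugs cmake gcc; do
    check_tool "$tool" || missing=$((missing + 1))
done
echo "$missing tool(s) missing"
```

For the tools that are found, `java -version`, `mvn -version` and `ant -version` then show which versions are actually being picked up.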

1.1 Installing protobuf:

1. Extract:

tar -zxvf protobuf-2.5.0.tar.gz

2. Compile and install:

Change into the extracted protobuf-2.5.0 directory:

[root@localhost protobuf-2.5.0]# ./configure

[root@localhost protobuf-2.5.0]# make

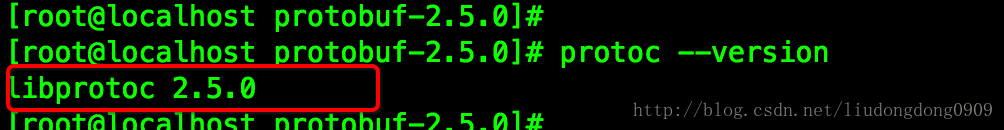

[root@localhost protobuf-2.5.0]# make install

3. Check that the installation succeeded:

[root@localhost protobuf-2.5.0]# protoc --version

Step 2: Rebuild the 32-bit native library as 64-bit
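Before starting the build, it may be worth confirming that the protoc found on PATH really is the 2.5.0 release installed in 1.1. The sketch below assumes that `protoc --version` prints a line of the form `libprotoc 2.5.0`; the helper name `protoc_version_ok` is hypothetical.

```shell
# protoc_version_ok is a hypothetical helper.
# $1: the line printed by `protoc --version`, $2: the required version.
protoc_version_ok() {
    [ "$1" = "libprotoc $2" ]
}

if command -v protoc >/dev/null 2>&1; then
    if protoc_version_ok "$(protoc --version)" "2.5.0"; then
        echo "protoc 2.5.0 is installed"
    else
        echo "unexpected protoc version: $(protoc --version)"
    fi
else
    echo "protoc not found on PATH"
fi
```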

2.1 Extract the tar.gz

[root@localhost ~]# tar -zxvf hadoop-2.4.0-src.tar.gz

2.2 Change into the extracted directory

[root@localhost ~]# cd hadoop-2.4.0-src

[root@localhost hadoop-2.4.0-src]# pwd

/root/hadoop-2.4.0-src

[root@localhost hadoop-2.4.0-src]#

2.3 Run the maven build command

[root@localhost hadoop-2.4.0-src]# mvn clean install -Dmaven.test.skip=true

2.4 Run the maven package command

[root@localhost hadoop-2.4.0-src]# mvn package -Pdist,native -DskipTests -Dtar

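The build fails. The cause is JDK 1.8: its javadoc tool checks comments more strictly and rejects malformed HTML in the source (here a stray </ul>):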

[INFO] BUILD FAILURE

[INFO] ------------------------------------------------------------------------

[INFO] Total time: 01:57 min

[INFO] Finished at: 2017-09-11T20:27:15+08:00

[INFO] Final Memory: 42M/99M

[INFO] ------------------------------------------------------------------------

[ERROR] Failed to execute goal org.apache.maven.plugins:maven-javadoc-plugin:2.8.1:jar (module-javadocs) on project hadoop-annotations: MavenReportException: Error while creating archive:

[ERROR] Exit code: 1 - /root/hadoop-2.4.0-src/hadoop-common-project/hadoop-annotations/src/main/java/org/apache/hadoop/classification/InterfaceStability.java:27: error: unexpected end tag: </ul>

[ERROR] * </ul>

[ERROR] ^

[ERROR]

[ERROR] Command line was: /usr/local/jdk1.8.0_131/jre/../bin/javadoc @options @packages

[ERROR]

2.5 Run the revised package command:
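Because the stricter javadoc checks arrived with JDK 8, one option is to add the skip flag only when the JDK is version 8 or newer. The sketch below illustrates that idea; `java_major` and `mvn_package_args` are hypothetical helper names, and the version string is hard-coded rather than parsed from `java -version`. The command the author actually ran follows after it.

```shell
# java_major is a hypothetical helper: it extracts the major version from a
# Java version string such as "1.8.0_131" (legacy scheme) or "11.0.2".
java_major() {
    ver=$1
    case "$ver" in
        1.*) ver=${ver#1.} ;;        # legacy scheme: 1.8.0_131 -> 8.0_131
    esac
    echo "${ver%%[._-]*}"            # keep only the leading number
}

# mvn_package_args (also hypothetical) builds the argument list for the
# package step and skips javadoc generation on JDK 8 or newer.
mvn_package_args() {
    args="package -Pdist,native -DskipTests -Dtar"
    if [ "$(java_major "$1")" -ge 8 ]; then
        args="$args -Dmaven.javadoc.skip=true"
    fi
    echo "$args"
}

# Print the command that would be run for the JDK seen in the error log.
echo "mvn $(mvn_package_args "1.8.0_131")"
```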

[root@localhost hadoop-2.4.0-src]# mvn package -Pdist,native -DskipTests -Dtar -Dmaven.javadoc.skip=true

Output when the command succeeds:

[INFO]

[INFO] --- maven-javadoc-plugin:2.8.1:jar (module-javadocs) @ hadoop-dist ---

[INFO] Skipping javadoc generation

[INFO] ------------------------------------------------------------------------

[INFO] Reactor Summary:

[INFO]

[INFO] Apache Hadoop Main ................................. SUCCESS [ 1.510 s]

[INFO] Apache Hadoop Project POM .......................... SUCCESS [ 1.206 s]

[INFO] Apache Hadoop Annotations .......................... SUCCESS [ 0.947 s]

[INFO] Apache Hadoop Assemblies ........................... SUCCESS [ 0.224 s]

[INFO] Apache Hadoop Project Dist POM ..................... SUCCESS [ 1.847 s]

[INFO] Apache Hadoop Maven Plugins ........................ SUCCESS [ 1.587 s]

[INFO] Apache Hadoop MiniKDC .............................. SUCCESS [ 0.576 s]

[INFO] Apache Hadoop Auth ................................. SUCCESS [ 0.595 s]

[INFO] Apache Hadoop Auth Examples ........................ SUCCESS [ 0.820 s]

[INFO] Apache Hadoop Common ............................... SUCCESS [ 22.098 s]

[INFO] Apache Hadoop NFS .................................. SUCCESS [ 1.230 s]

[INFO] Apache Hadoop Common Project ....................... SUCCESS [ 0.036 s]

[INFO] Apache Hadoop HDFS ................................. SUCCESS [ 41.151 s]

[INFO] Apache Hadoop HttpFS ............................... SUCCESS [02:01 min]

[INFO] Apache Hadoop HDFS BookKeeper Journal .............. SUCCESS [ 24.726 s]

[INFO] Apache Hadoop HDFS-NFS ............................. SUCCESS [ 0.966 s]

[INFO] Apache Hadoop HDFS Project ......................... SUCCESS [ 0.053 s]

[INFO] hadoop-yarn ........................................ SUCCESS [ 0.041 s]

[INFO] hadoop-yarn-api .................................... SUCCESS [ 6.211 s]

[INFO] hadoop-yarn-common ................................. SUCCESS [ 2.666 s]

[INFO] hadoop-yarn-server ................................. SUCCESS [ 0.036 s]

[INFO] hadoop-yarn-server-common .......................... SUCCESS [ 1.162 s]

[INFO] hadoop-yarn-server-nodemanager ..................... SUCCESS [ 4.972 s]

[INFO] hadoop-yarn-server-web-proxy ....................... SUCCESS [ 0.457 s]

[INFO] hadoop-yarn-server-applicationhistoryservice ....... SUCCESS [ 0.561 s]

[INFO] hadoop-yarn-server-resourcemanager ................. SUCCESS [ 3.124 s]

[INFO] hadoop-yarn-server-tests ........................... SUCCESS [ 0.524 s]

[INFO] hadoop-yarn-client ................................. SUCCESS [ 0.829 s]

[INFO] hadoop-yarn-applications ........................... SUCCESS [ 0.032 s]

[INFO] hadoop-yarn-applications-distributedshell .......... SUCCESS [ 0.344 s]

[INFO] hadoop-yarn-applications-unmanaged-am-launcher ..... SUCCESS [ 0.318 s]

[INFO] hadoop-yarn-site ................................... SUCCESS [ 0.029 s]

[INFO] hadoop-yarn-project ................................ SUCCESS [ 2.916 s]

[INFO] hadoop-mapreduce-client ............................ SUCCESS [ 0.067 s]

[INFO] hadoop-mapreduce-client-core ....................... SUCCESS [ 2.171 s]

[INFO] hadoop-mapreduce-client-common ..................... SUCCESS [ 2.463 s]

[INFO] hadoop-mapreduce-client-shuffle .................... SUCCESS [ 0.383 s]

[INFO] hadoop-mapreduce-client-app ........................ SUCCESS [ 2.328 s]

[INFO] hadoop-mapreduce-client-hs ......................... SUCCESS [ 1.443 s]

[INFO] hadoop-mapreduce-client-jobclient .................. SUCCESS [ 4.531 s]

[INFO] hadoop-mapreduce-client-hs-plugins ................. SUCCESS [ 0.240 s]

[INFO] Apache Hadoop MapReduce Examples ................... SUCCESS [ 0.545 s]

[INFO] hadoop-mapreduce ................................... SUCCESS [ 2.755 s]

[INFO] Apache Hadoop MapReduce Streaming .................. SUCCESS [ 0.701 s]

[INFO] Apache Hadoop Distributed Copy ..................... SUCCESS [ 1.138 s]

[INFO] Apache Hadoop Archives ............................. SUCCESS [ 0.333 s]

[INFO] Apache Hadoop Rumen ................................ SUCCESS [ 0.784 s]

[INFO] Apache Hadoop Gridmix .............................. SUCCESS [ 0.798 s]

[INFO] Apache Hadoop Data Join ............................ SUCCESS [ 0.368 s]

[INFO] Apache Hadoop Extras ............................... SUCCESS [ 0.480 s]

[INFO] Apache Hadoop Pipes ................................ SUCCESS [ 5.452 s]

[INFO] Apache Hadoop OpenStack support .................... SUCCESS [ 0.612 s]

[INFO] Apache Hadoop Client ............................... SUCCESS [ 4.039 s]

[INFO] Apache Hadoop Mini-Cluster ......................... SUCCESS [ 0.082 s]

[INFO] Apache Hadoop Scheduler Load Simulator ............. SUCCESS [ 0.523 s]

[INFO] Apache Hadoop Tools Dist ........................... SUCCESS [ 3.091 s]

[INFO] Apache Hadoop Tools ................................ SUCCESS [ 0.030 s]

[INFO] Apache Hadoop Distribution ......................... SUCCESS [ 7.470 s]

[INFO] ------------------------------------------------------------------------

[INFO] BUILD SUCCESS

[INFO] ------------------------------------------------------------------------

[INFO] Total time: 04:50 min

[INFO] Finished at: 2017-09-11T20:56:56+08:00

[INFO] Final Memory: 121M/237M

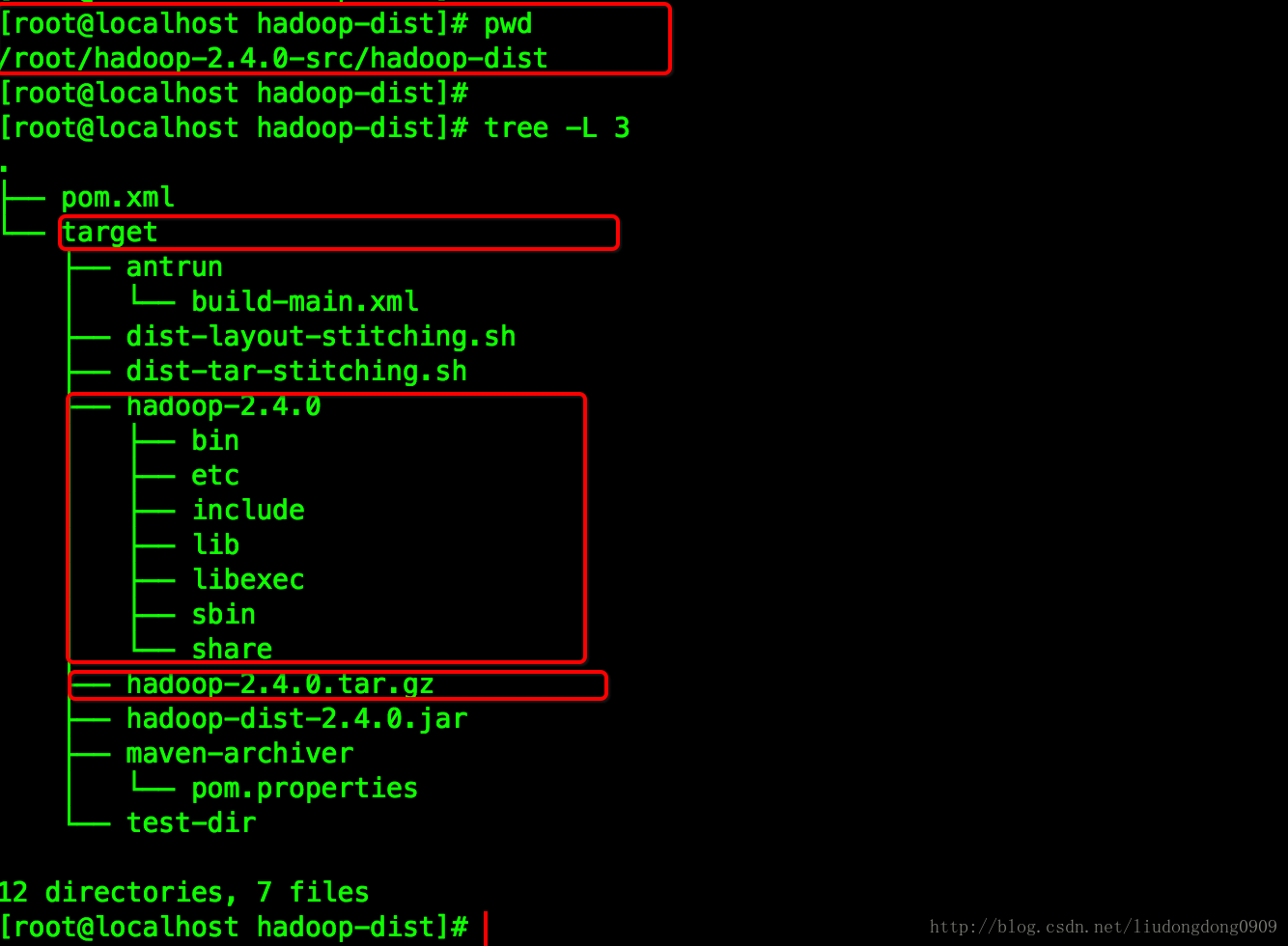

[INFO] ------------------------------------------------------------------------

Step 3: Check the bitness of the compiled hadoop

[root@localhost hadoop-2.4.0-src]# cd hadoop-dist/target/hadoop-2.4.0/lib/native/

[root@localhost native]#

[root@localhost native]# pwd

/root/hadoop-2.4.0-src/hadoop-dist/target/hadoop-2.4.0/lib/native

[root@localhost native]#

[root@localhost native]# ll

total 4052

-rw-r--r--. 1 root root 982778 Sep 11 20:56 libhadoop.a

-rw-r--r--. 1 root root 1487220 Sep 11 20:56 libhadooppipes.a

lrwxrwxrwx. 1 root root 18 Sep 11 20:56 libhadoop.so -> libhadoop.so.1.0.0

-rwxr-xr-x. 1 root root 586504 Sep 11 20:56 libhadoop.so.1.0.0

-rw-r--r--. 1 root root 581952 Sep 11 20:56 libhadooputils.a

-rw-r--r--. 1 root root 298154 Sep 11 20:56 libhdfs.a

lrwxrwxrwx. 1 root root 16 Sep 11 20:56 libhdfs.so -> libhdfs.so.0.0.0

-rwxr-xr-x. 1 root root 200018 Sep 11 20:56 libhdfs.so.0.0.0

[root@localhost native]#

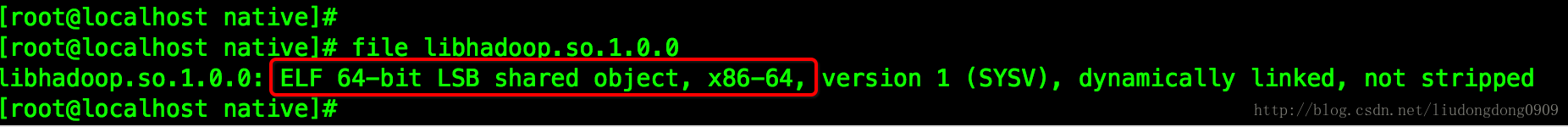

[root@localhost native]# file libhadoop.so.1.0.0

libhadoop.so.1.0.0: ELF 64-bit LSB shared object, x86-64, version 1 (SYSV), dynamically linked, not stripped

[root@localhost native]#

As the output above shows, the hadoop-2.4.0 native library has been successfully compiled as 64-bit.
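The same check can be applied to every shared library in the directory rather than just one. This is a minimal sketch: the helper name `is_elf64` and the NATIVE_DIR override are assumptions for illustration, and the default path is the one shown above, relative to the hadoop-2.4.0-src directory.

```shell
# is_elf64 is a hypothetical helper: it succeeds when a line of `file`
# output describes a 64-bit ELF object.
is_elf64() {
    case "$1" in
        *"ELF 64-bit"*) return 0 ;;
        *) return 1 ;;
    esac
}

# Default path is relative to hadoop-2.4.0-src; NATIVE_DIR is an assumed override.
native_dir="${NATIVE_DIR:-hadoop-dist/target/hadoop-2.4.0/lib/native}"

if [ -d "$native_dir" ]; then
    for lib in "$native_dir"/*.so*; do
        [ -e "$lib" ] || continue            # no match: skip the literal pattern
        # -L makes `file` follow symlinks such as libhadoop.so
        if is_elf64 "$(file -L "$lib")"; then
            echo "64-bit:     $lib"
        else
            echo "NOT 64-bit: $lib"
        fi
    done
else
    echo "directory not found: $native_dir"
fi
```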

That is everything for this article. My knowledge is limited, so it is bound to contain mistakes; corrections are welcome. Thanks!