Target page: https://hr.tencent.com/position.php

This crawler uses the Scrapy framework. The setup steps are not repeated here, since the previous Scrapy posts already cover them.

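Because the crawl has to page through the results, the code below builds each page URL by string concatenation. This approach is useful when the next-page link cannot be extracted from the page.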
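As a minimal sketch of the concatenation approach, the helper below lists the URLs produced by stepping the `start` offset. The name `page_urls` and its parameters are illustrative and not part of the project; the step of 10 assumes each page shows 10 postings, as in the spider in this post.

```python
def page_urls(base_url, last_offset, step=10):
    """Return the page URLs for offsets 0, step, ..., last_offset."""
    return [base_url + str(offset) for offset in range(0, last_offset + 1, step)]


base_url = "https://hr.tencent.com/position.php?&start="
urls = page_urls(base_url, last_offset=30)
for url in urls:
    print(url)
```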

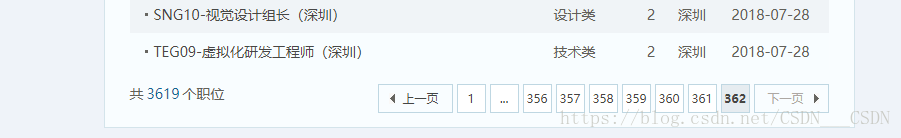

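Hard-coding `if self.offset < 3610:` is fragile, because the number of postings may change later. The better approach is to extract the next-page URL from the page and send the request to it.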
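A next-page `href` is often relative, so it has to be joined with the site address before it can be requested. Here is a minimal sketch using the standard library's `urllib.parse.urljoin`; the `next_href` value is a made-up example, not copied from the site.

```python
from urllib.parse import urljoin

page_url = "https://hr.tencent.com/position.php?&start=0"
next_href = "position.php?&start=10"  # hypothetical value of the next link's @href

# Resolve the relative link against the URL of the page it was found on.
next_url = urljoin(page_url, next_href)
print(next_url)
```

Inside a Scrapy callback, `response.urljoin(href)` performs the same join against the response URL.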

`yield` returns either an item for the pipeline to process or a request for the next page.

#tencent.py
# -*- coding: utf-8 -*-
import scrapy
from Tencent.items import TencentItem


class TencentSpider(scrapy.Spider):
    name = 'tencent'
    allowed_domains = ['tencent.com']
    baseURL = "https://hr.tencent.com/position.php?&start="
    offset = 0
    start_urls = [baseURL + str(offset)]

    def parse(self, response):
        # Each posting is a table row with class "even" or "odd"
        node_list = response.xpath("//tr[@class='even'] | //tr[@class='odd']")
        for node in node_list:
            item = TencentItem()
            item["positionName"] = node.xpath("./td[1]/a/text()").extract()[0]
            item["positionLink"] = "https://hr.tencent.com/" + node.xpath("./td[1]/a/@href").extract()[0]
            # The position type cell can be empty
            if len(node.xpath("./td[2]/text()")):
                item["positionType"] = node.xpath("./td[2]/text()").extract()[0]
            else:
                item["positionType"] = ""
            item["peopleNumber"] = node.xpath("./td[3]/text()").extract()[0]
            item["workLocation"] = node.xpath("./td[4]/text()").extract()[0]
            item["publishTime"] = node.xpath("./td[5]/text()").extract()[0]
            yield item

        # Request the next page by increasing the offset
        if self.offset < 3610:
            self.offset += 10
            url = self.baseURL + str(self.offset)
            yield scrapy.Request(url, callback=self.parse)

The following version gets the next-page link instead, so the crawl keeps working no matter how the number of postings changes. It replaces the if statement above:

        # Keep going unless the "next" link is marked "noactive" (last page)
        if len(response.xpath("//a[@class='noactive' and @id='next']")) == 0:
            url = response.xpath("//div[@class='pagenav']/a[@id='next']/@href").extract()[0]
            yield scrapy.Request("https://hr.tencent.com/" + url, callback=self.parse)

#items.py

# -*- coding: utf-8 -*-
# Define here the models for your scraped items
#
# See documentation in:
# https://doc.scrapy.org/en/latest/topics/items.html
import scrapy


class TencentItem(scrapy.Item):
    # Position name
    positionName = scrapy.Field()
    # Link to the position details
    positionLink = scrapy.Field()
    # Position type
    positionType = scrapy.Field()
    # Number of openings
    peopleNumber = scrapy.Field()
    # Work location
    workLocation = scrapy.Field()
    # Publish time
    publishTime = scrapy.Field()

#pipelines.py
# -*- coding: utf-8 -*-
# Define your item pipelines here
#
# Don't forget to add your pipeline to the ITEM_PIPELINES setting
# See: https://doc.scrapy.org/en/latest/topics/item-pipeline.html
import json


class TencentPipeline(object):
    def __init__(self):
        self.f = open("tencent.json", "w", encoding="utf-8")

    def process_item(self, item, spider):
        # Write one JSON object per line, each followed by a comma
        self.f.write(json.dumps(dict(item), ensure_ascii=False) + ",\n")
        return item

    def close_spider(self, spider):
        self.f.close()

#settings.py

# -*- coding: utf-8 -*-
BOT_NAME = 'Tencent'

SPIDER_MODULES = ['Tencent.spiders']
NEWSPIDER_MODULE = 'Tencent.spiders'

# Configure item pipelines
# See https://doc.scrapy.org/en/latest/topics/item-pipeline.html
ITEM_PIPELINES = {
    'Tencent.pipelines.TencentPipeline': 300,
}

Finally, run the following in cmd:

scrapy crawl tencent

This creates a file named "tencent.json" in the current directory.

The crawl succeeded!
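Because the pipeline writes one JSON object per line followed by a comma, the file as a whole is not a single valid JSON document. A minimal sketch of reading it back line by line follows; the helper name `load_items` is illustrative, and the sample records are made-up data written to a temporary file so the snippet runs on its own.

```python
import json
import os
import tempfile


def load_items(path):
    """Parse a file with one JSON object per line, each followed by a comma."""
    items = []
    with open(path, encoding="utf-8") as f:
        for line in f:
            # Drop the newline and the trailing comma added by the pipeline.
            line = line.strip().rstrip(",")
            if line:
                items.append(json.loads(line))
    return items


# Write two made-up records in the same format the pipeline uses.
sample = [{"positionName": "Example A"}, {"positionName": "Example B"}]
path = os.path.join(tempfile.mkdtemp(), "tencent.json")
with open(path, "w", encoding="utf-8") as f:
    for record in sample:
        f.write(json.dumps(record, ensure_ascii=False) + ",\n")

items = load_items(path)
print(items)
```

To read the real output, call `load_items("tencent.json")` from the directory where the crawl was run.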