前言

鉴于caffe2和pytorch要合并的消息,再加之pytorch实现模型的开发效率优势,虽然PyTorch 1.0 Stable版本预计还有一段时间才能面试,不过现在可以基于PyTorch0.4.0版本进行学习。本系列主要记载一些常见的PyTorch问题和功能,并不是对PyTorch教程的全篇实现,有需要的朋友可以自行学习PyTorch官方文档

① requires_grad

Tensor变量的requires_grad的属性默认为False,若一个节点requires_grad被设置为True,那么所有依赖它的节点的requires_grad都为True。

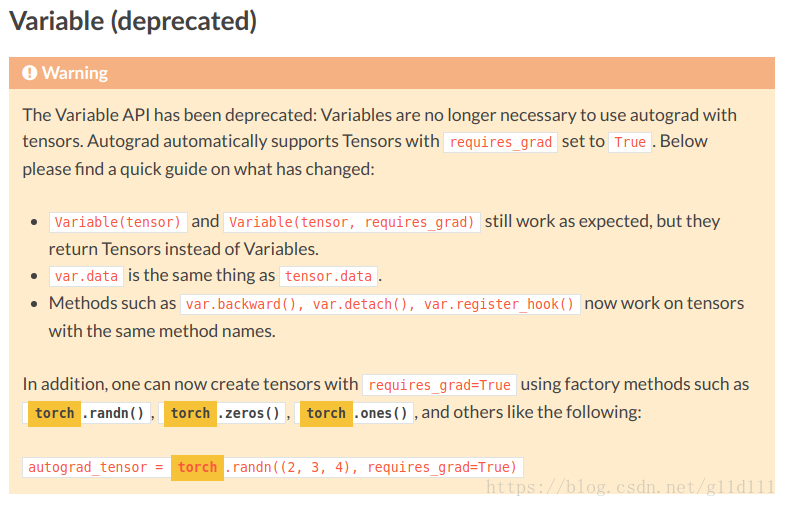

(注意:0.4.0版本将 Variable 和 Tensor合并,统称为 Tensor,在过去的版本中,requires_grad属性是Variable封装的属性)

Variable被废弃的说明

0 import torch

1 x=torch.ones(1)

2 w=torch.ones(1,requires_grad=True)

3 y=x*w

4 x.requires_grad,w.requires_grad,y.requires_grad

5 output:

6 (False, True, True)

y依赖于w,w的requires_grad=True,因此y的requires_grad=True (类似or操作)

② Variable和Tensor合并后,Variable的.data方法变化

关于.data方法,在新版本中保留功能, 但建议替代为x.detach()。

旧版中的.data方法是什么?

在Variable中将封装的Tensor数据存储在.data里

新版本中的.detach()方法

.data方法,本质上是给当前Tensor加一个新引用, 它们指向的内存都是一样的, 因此不安全 。比如y = x.data(), 而x参与了计算图的运算, 那么, 如果你不小心修改了y的data, x的data也会跟着变, 然而反向传播是监听不到x的data变化的, 因此造成梯度计算错误。

y = x.detach()正如其名, 从当前计算图中返回一个Tensor y(从计算图中隔离), 这个Tensor y是不需要梯度的。一旦试图改变修改Tensor y(比如做in-place操作), 会被语法检查和python解释器监测到, 并抛出错误.因为返回的 Tensor y 和 被 detach 的Tensor x 指向同一个Tensor.

③ autograd.no_grad和volatile

在以前的版本中,volatile在Variable中的属性,用于标志一个Variable是否要被计算图隔离出去。在Variable和Tensor合并之后,我们将使用torch.autograd里面的几个类torch.autograd.no_grad、torch.autograd.enable_grad、torch.autograd.set_grad_enabled(mode)来完成类似的工作。

volatile的功能

volatile的作用很简单,volatile=True表面对应的节点不会求导,即使requires_grad=True,也不会进行反向传播,对于不需要反向传播的情景(inference,测试推断)等能节省显存,提高速度——因为无需保存梯度。torch.autograd

这里以torch.autograd.no_grad为例进行说明,剩下两个的说明详见PyTorch——Locally disabling gradient computation

由下面代码可看出:torch.autograd.no_grad的作用是在上下文环境中切断梯度计算,在此模式下,每一步的计算结果中requires_grad都是False,即使input设置为requires_grad=True。因此可以用此功能替代之前的volatile功能。

0 import torch

1 x = torch.tensor([1], requires_grad=True)

2 with torch.no_grad():

3 y = x ** 2

4 print(y.requires_grad)

5 output:

6 False可能这样还不够清晰,举一个官方例子如下:

import torch

# N is batch size; D_in is input dimension;

# H is hidden dimension; D_out is output dimension.

N, D_in, H, D_out = 64, 1000, 100, 10

# Create random Tensors to hold inputs and outputs

x = torch.randn(N, D_in)

y = torch.randn(N, D_out)

# Use the nn package to define our model as a sequence of layers. nn.Sequential

# is a Module which contains other Modules, and applies them in sequence to

# produce its output. Each Linear Module computes output from input using a

# linear function, and holds internal Tensors for its weight and bias.

# 最简单的只有1层隐藏层的序惯模型

model = torch.nn.Sequential(

torch.nn.Linear(D_in, H),

torch.nn.ReLU(),

# 最后一层后面也要加逗号——————,

torch.nn.Linear(H, D_out),

)

# loss_function定义 size_average的说明见上面,也就是只算每个batch size的总loss。

loss_fn = torch.nn.MSELoss(size_average=False)

learning_rate = 1e-4

# 相当于将batchsize为64的数据喂入模型500次,即epoch=500

# 这里的权值更新很直观,torch.no_grad()每个参数直接执行 param -= learning_rate * param.grad

for t in range(500):

# Forward pass: compute predicted y by passing x to the model. Module objects

# override the __call__ operator so you can call them like functions. When

# doing so you pass a Tensor of input data to the Module and it produces

# a Tensor of output data.

# 0.4.0版本已经将 Variable 和 Tensor合并,统称为 Tensor

y_pred = model(x)

# Compute and print loss. We pass Tensors containing the predicted and true

# values of y, and the loss function returns a Tensor containing the

# loss.

loss = loss_fn(y_pred, y)

print('round', t+1, loss.item())

# Zero the gradients before running the backward pass.

model.zero_grad()

# Backward pass: compute gradient of the loss with respect to all the learnable

# parameters of the model. Internally, the parameters of each Module are stored

# in Tensors with requires_grad=True, so this call will compute gradients for

# all learnable parameters in the model.

loss.backward()

# 手动调整模型参数 当然,我们可以用optim包去定义Optimizer并自动帮助我们更新权值。optim包里包括了SGD+momentum, RMSProp, Adam等常见的深度学习优化算法.

with torch.no_grad():

for param in model.parameters():

param -= learning_rate * param.grad 下面的代码采用optim包的优化器更新权值的方法。

import torch

# N is batch size; D_in is input dimension;

# H is hidden dimension; D_out is output dimension.

N, D_in, H, D_out = 64, 1000, 100, 10

# Create random Tensors to hold inputs and outputs

x = torch.randn(N, D_in)

y = torch.randn(N, D_out)

# Use the nn package to define our model and loss function.

model = torch.nn.Sequential(

torch.nn.Linear(D_in, H),

torch.nn.ReLU(),

torch.nn.Linear(H, D_out),

)

loss_fn = torch.nn.MSELoss(size_average=False)

# Use the optim package to define an Optimizer that will update the weights of

# the model for us. Here we will use Adam; the optim package contains many other

# optimization algoriths. The first argument to the Adam constructor tells the

# optimizer which Tensors it should update.

learning_rate = 1e-4

# 优化器,AdamOptimizer

optimizer = torch.optim.Adam(model.parameters(), lr=learning_rate)

for t in range(500):

# Forward pass: compute predicted y by passing x to the model.

y_pred = model(x)

# Compute and print loss.

loss = loss_fn(y_pred, y)

print(t, loss.item())

# Before the backward pass, use the optimizer object to zero all of the

# gradients for the variables it will update (which are the learnable

# weights of the model). This is because by default, gradients are

# accumulated in buffers( i.e, not overwritten) whenever .backward()

# is called. Checkout docs of torch.autograd.backward for more details.

# 原来是model.zero_grad()

optimizer.zero_grad()

# Backward pass: compute gradient of the loss with respect to model

# parameters

loss.backward()

# 调用Optimizer的step function来更新Model的权值。

optimizer.step()