    import requests
    from bs4 import BeautifulSoup
    import traceback
    import re


    def getHTMLText(url):
        try:
            r = requests.get(url)
            r.raise_for_status()
            r.encoding = r.apparent_encoding
            return r.text
        except:
            return ""


    def getStockList(lst, stockURL):
        html = getHTMLText(stockURL)
        soup = BeautifulSoup(html, 'html.parser')
        a = soup.find_all('a')
        for i in a:
            try:
                href = i.attrs['href']
                lst.append(re.findall(r"[s][hz]\d{6}", href)[0])
            except:
                continue


    def getStockInfo(lst, stockURL, fpath):
        for stock in lst:
            url = stockURL + stock + ".html"
            html = getHTMLText(url)
            try:
                if html == "":
                    continue
                infoDict = {}
                soup = BeautifulSoup(html, 'html.parser')
                stockInfo = soup.find('div', attrs={'class': 'stock-bets'})

                name = stockInfo.find_all(attrs={'class': 'bets-name'})[0]
                infoDict.update({'股票名称': name.text.split()[0]})

                keyList = stockInfo.find_all('dt')
                valueList = stockInfo.find_all('dd')
                for i in range(len(keyList)):
                    key = keyList[i].text
                    val = valueList[i].text
                    infoDict[key] = val

                with open(fpath, 'a', encoding='utf-8') as f:
                    f.write(str(infoDict) + '\n')
            except:
                traceback.print_exc()
                continue


    def main():
        stock_list_url = 'http://quote.eastmoney.com/stocklist.html'
        stock_info_url = 'http://gupiao.baidu.com/stock/'
        output_file = 'D:/BaiduStockInfo.txt'
        slist = []
        getStockList(slist, stock_list_url)
        getStockInfo(slist, stock_info_url, output_file)


    main()

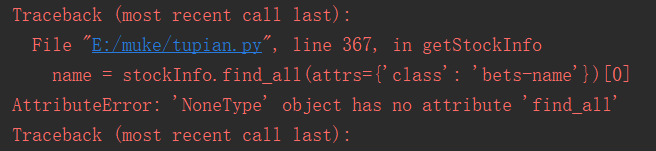

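This is the source code from the MOOC, pasted in directly. I don't know why it keeps raising errors when I run it. The errors are shown below. If anyone has solved this, please let me know. Thanks!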

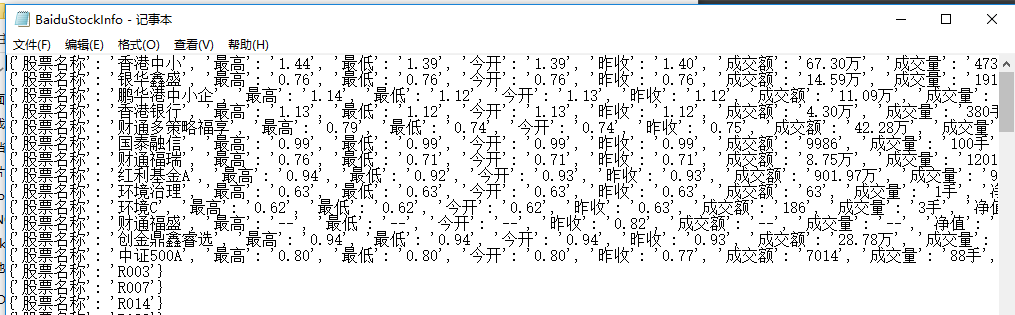

Below is the revised code from the MOOC. It is also the course's source code, pasted in directly, but this version does run.

    import requests
    from bs4 import BeautifulSoup
    import traceback
    import re


    def getHTMLText(url, code="utf-8"):
        try:
            r = requests.get(url)
            r.raise_for_status()
            r.encoding = code
            return r.text
        except:
            return ""


    def getStockList(lst, stockURL):
        html = getHTMLText(stockURL, "GB2312")
        soup = BeautifulSoup(html, 'html.parser')
        a = soup.find_all('a')
        for i in a:
            try:
                href = i.attrs['href']
                lst.append(re.findall(r"[s][hz]\d{6}", href)[0])
            except:
                continue


    def getStockInfo(lst, stockURL, fpath):
        count = 0
        for stock in lst:
            url = stockURL + stock + ".html"
            html = getHTMLText(url)
            try:
                if html == "":
                    continue
                infoDict = {}
                soup = BeautifulSoup(html, 'html.parser')
                stockInfo = soup.find('div', attrs={'class': 'stock-bets'})

                name = stockInfo.find_all(attrs={'class': 'bets-name'})[0]
                infoDict.update({'股票名称': name.text.split()[0]})

                keyList = stockInfo.find_all('dt')
                valueList = stockInfo.find_all('dd')
                for i in range(len(keyList)):
                    key = keyList[i].text
                    val = valueList[i].text
                    infoDict[key] = val

                with open(fpath, 'a', encoding='utf-8') as f:
                    f.write(str(infoDict) + '\n')
                    count = count + 1
                    print("\r当前进度: {:.2f}%".format(count * 100 / len(lst)), end="")
            except:
                count = count + 1
                print("\r当前进度: {:.2f}%".format(count * 100 / len(lst)), end="")
                continue


    def main():
        stock_list_url = 'http://quote.eastmoney.com/stocklist.html'
        stock_info_url = 'http://gupiao.baidu.com/stock/'
        output_file = 'D:/BaiduStockInfo.txt'
        slist = []
        getStockList(slist, stock_list_url)
        getStockInfo(slist, stock_info_url, output_file)


    main()
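Apart from the exception handler, the main change in the listing above is that `getHTMLText` takes an explicit `code` argument (`"GB2312"` for the stock list page) instead of using `r.apparent_encoding`. Here is a minimal sketch of what the choice of encoding does to the decoded text. It uses only the standard library and a made-up sample string rather than a real response, so treat it as an illustration, not as what the two sites actually return. `requests` builds `r.text` by decoding with replacement of invalid bytes, which `errors="replace"` imitates here.

```python
import re

# Sample bytes as a GB2312-encoded page might send them.
# The text is invented for illustration; it is not scraped data.
raw = "股票代码 sh600000".encode("gb2312")

# Decoding with the matching codec recovers the original text.
correct = raw.decode("gb2312")

# Decoding with the wrong codec garbles the Chinese characters,
# but the ASCII part of the text survives unchanged.
garbled = raw.decode("utf-8", errors="replace")

# The regex from getStockList only looks at ASCII characters,
# so it still finds the stock code in the garbled text.
codes = re.findall(r"[s][hz]\d{6}", garbled)

print(correct)
print(garbled)
print(codes)
```

If this holds for the real pages, the stock codes in the `href` attributes would be extracted correctly whichever encoding is set, and only the Chinese text would depend on it.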

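As for why, I'm not really sure. The main body of the code is the same in both versions, and I don't know the exact cause. I removed the encoding argument so that part matched the original, and the program still ran. I don't understand why adding the progress bar made the program work.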
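One possible explanation, offered only as a guess: the two versions also differ in their `except` blocks. The first calls `traceback.print_exc()`, while the second only updates the counter and prints the progress. `soup.find(...)` returns `None` when no matching element exists, so `stockInfo.find_all(...)` can raise an `AttributeError` in both versions. Assuming the error in the screenshot is a traceback of that kind (I can't confirm this from the image), the second version may be hitting the same exception and just not showing it. The sketch below uses hypothetical helper names and `None` as a stand-in for a failed `soup.find`, so it runs without any network access.

```python
import contextlib
import io
import traceback


def parse_verbose(stock_info):
    """Mimics the first listing: print the traceback, then carry on."""
    try:
        return stock_info.find_all("dt")
    except Exception:
        traceback.print_exc()
        return None


def parse_silent(stock_info):
    """Mimics the second listing: swallow the exception without output."""
    try:
        return stock_info.find_all("dt")
    except Exception:
        return None


def captured_stderr(func, arg):
    """Run func(arg) and return whatever it wrote to stderr."""
    buffer = io.StringIO()
    with contextlib.redirect_stderr(buffer):
        func(arg)
    return buffer.getvalue()


# None stands in for soup.find(...) when the 'stock-bets' div is absent.
verbose_output = captured_stderr(parse_verbose, None)
silent_output = captured_stderr(parse_silent, None)

print("AttributeError" in verbose_output)  # the first style reports the error
print(repr(silent_output))                 # the second style prints nothing
```

If this is what is happening, checking `if stockInfo is None: continue` before calling `find_all` would avoid the exception in either version.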

Here is someone else's analysis of the code, which I think is good and very thorough: https://segmentfault.com/a/1190000010520835