We want to represent a word with a vector in NLP. There are many methods.

1 one-hot Vector

Represent every word as an

2 SVD Based Methods

2.1 Window based Co-occurrence Matrix

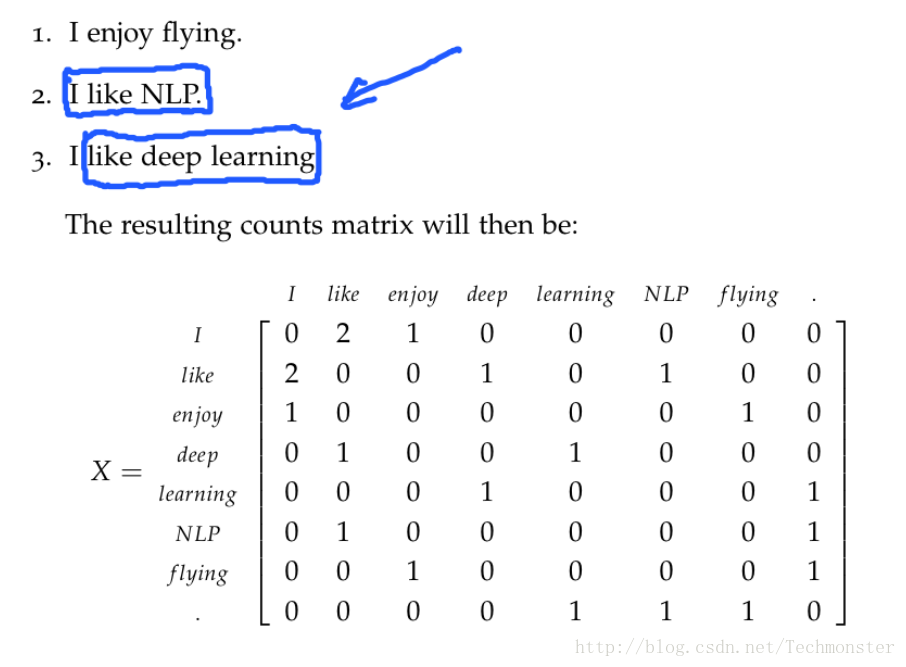

Representing a word by means of its neighbors.

In this method we count the number of times each word appears inside a window of a particular size around the word of interest.

For example:

The matrix is too large. We should make it smaller with SVD.

- Generate

|V|∗|V| co-occurrence matrix,X . - Apply SVD on

X to getX=USVT . - Select the first

k columns ofU to get ak -dimensional word vectors. -

∑ki=1σi∑|V|i=1σi indicates the amount of variance captured by the firstk dimensions.

2.2 shortage

SVD based methods do not scale well for big matrices and it is hard to incorporate new words or documents. Computational cost for a

3 Iteration Based Methods - Word2Vec

3.1 Language Models (Unigrams, Bigrams, etc.)

We need to create such a model that will assign a probability to a sequence of tokens.

For example

* The cat jumped over the puddle. —high probability

* Stock boil fish is toy. —low probability

Unigrams:

We can take the unary language model approach and break apart this probability by assuming the word occurrences are completely independent:

However, we know the next word is highly contingent upon the previous sequence of words. This model is bad.

Bigrams:

We let the probability of the sequence depend on the pairwise probability of a word in the sequence and the word next to it.

3.2 Continuous Bag of words Model (CBOW)

Example Sequence:

“The cat jumped over the puddle.”

What is Continuous Bag of words Model?

We treat {“the”, “cat” , “over”, “puddle”} as a context. And the word “jumped” is the center word. Context should be able to predict the center world. This type of model we call a Continuous Bag of words Model.

Known parameters:

If the index of center word is

The input of the model is the one-hot vector of context. We represent it with

And the outputs is the one-hot vector of center word.We represent it with

Parameters we need to learn:

Where

How does it work:

1. We get our embedded word vectors for the context:

2. Average these vectors:

3. Generate a score vector

4. Turn the scores into probabilities

5. We desire our probabilities generated

How to learn

learn them with stochastic gradient descent. So we need a loss function.

We use cross-entropy to measure the distance between two distributions:

Consider

We formulate our optimization objective as:

We use stochastic gradient descent to update