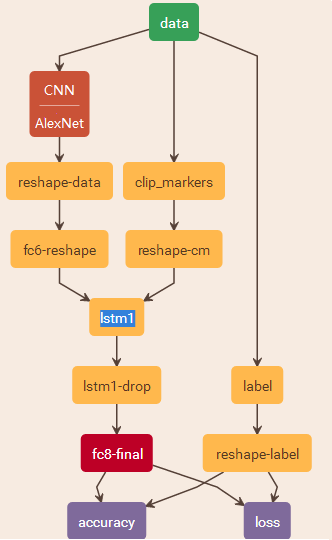

2.2 Network Model

The structure of the network used for training in Caffe is as follows:

2.3 Related Terms and Variables

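N is the number of independent streams the LSTM processes simultaneously. In this experiment, it is the number of mutually independent videos fed into the LSTM. For the test network of this experiment, N = 3.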

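T is the total number of time steps processed by the LSTM layer. In this experiment, it is the number of frames in each independent video fed into the LSTM. For the test network of this experiment, T = 16.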

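The output of the fc-reshape layer therefore has dimensions T×N×4096. Here 4096 is the dimension of AlexNet's fully connected layer, i.e., the dimension of the CNN features.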

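The output of reshape-cm has dimensions T×N. Each frame has one flag that marks whether it continues the current sequence: 0 for the first frame and 1 for every subsequent frame.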

The output of reshape-label likewise has dimensions T×N.
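The following C++ sketch makes the cont convention concrete. It builds the T×N cont blob in time-major order, so element (t, n) sits at index t·N + n. It then runs a toy one-unit LSTM over a single stream. This is an illustration under stated assumptions, not Caffe's code:

- `make_cont` and `ToyLSTM` are hypothetical names, and the weights are arbitrary.
- The gating is modelled on how Caffe's LSTM layer consumes its second (cont) input: the previous hidden and cell states are multiplied by cont_t before the update.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Build the T x N cont blob in time-major order: element (t, n) is stored at
// index t * N + n. Every stream starts a new sequence at t = 0 (value 0) and
// continues it at every later step (value 1).
std::vector<float> make_cont(int T, int N) {
    assert(T > 0 && N > 0);
    std::vector<float> cont(static_cast<std::size_t>(T) * N, 1.0f);
    for (int n = 0; n < N; ++n) cont[n] = 0.0f;  // first time step of every stream
    return cont;
}

// Toy LSTM with one input and one hidden unit (hypothetical weights). Before each
// update the previous hidden and cell states are multiplied by cont_t, so a step
// with cont_t = 0 behaves like the first step of a brand-new sequence.
struct ToyLSTM {
    // Weights for the input (i), forget (f), output (o) gates and the candidate (g).
    float wx[4] = {0.5f, 0.4f, 0.3f, 0.6f};
    float wh[4] = {-0.3f, 0.2f, 0.1f, -0.2f};
    float b[4] = {0.1f, 1.0f, 0.0f, 0.0f};

    static float sigmoid(float v) { return 1.0f / (1.0f + std::exp(-v)); }

    // Runs a single stream and returns the hidden state h_t at every step.
    std::vector<float> run(const std::vector<float>& xs,
                           const std::vector<float>& cont) const {
        assert(xs.size() == cont.size());
        float h = 0.0f, c = 0.0f;
        std::vector<float> hs;
        for (std::size_t t = 0; t < xs.size(); ++t) {
            const float h_prev = cont[t] * h;  // hidden state is reset when cont_t == 0
            const float c_prev = cont[t] * c;  // cell state is reset when cont_t == 0
            float a[4];
            for (int k = 0; k < 4; ++k) a[k] = wx[k] * xs[t] + wh[k] * h_prev + b[k];
            const float i = sigmoid(a[0]), f = sigmoid(a[1]), o = sigmoid(a[2]);
            const float g = std::tanh(a[3]);
            c = f * c_prev + i * g;
            h = o * std::tanh(c);
            hs.push_back(h);
        }
        return hs;
    }
};
```

For the test network (T = 16, N = 3), `make_cont(16, 3)` yields 48 values: the first 3 (one per video at t = 0) are 0, and the rest are 1. Because cont only scales the carried-over state, the same weights can process several independent videos in one T×N batch without state leaking from one sequence into the next.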

```
name: "LeNet"
layer {
  name: "mnist"
  type: "Data"
  top: "data"
  top: "label"
  include {
    phase: TRAIN
  }
  transform_param {
    scale: 0.00390625
  }
  data_param {
    source: "lmdb/mnist_train_lmdb"
    batch_size: 1
    backend: LMDB
  }
}
layer {
  name: "mnist"
  type: "Data"
  top: "data"
  top: "label"
  include {
    phase: TEST
  }
  transform_param {
    scale: 0.00390625
  }
  data_param {
    source: "lmdb/mnist_test_lmdb"
    batch_size: 1
    backend: LMDB
  }
}
# Reshape data from [1, 1, 28, 28] to [T, batch, H] = [28, 1, 28]
layer {
  name: "reshape_data"
  type: "Reshape"
  bottom: "data"
  top: "reshape1"
  reshape_param {
    shape { dim: 28 dim: 1 dim: 28 }
  }
}
layer {
  name: "cont"
  type: "HDF5Data"
  top: "cont"
  include {
    phase: TRAIN
  }
  hdf5_data_param {
    source: "train_conv_h5.txt"
    batch_size: 1
  }
}
layer {
  name: "cont"
  type: "HDF5Data"
  top: "cont"
  include {
    phase: TEST
  }
  hdf5_data_param {
    source: "test_conv_h5.txt"
    batch_size: 1
  }
}
# Reshape cont from [1, 28] to [T, batch] = [28, 1]
layer {
  name: "reshape_cont"
  type: "Reshape"
  bottom: "cont"
  top: "cont1"
  reshape_param {
    shape { dim: 28 dim: 1 }
  }
}
# num_output: the LSTM outputs 10 values (one per digit class) at each time step;
# only the output of the 28th (last) time step is used
layer {
  name: "lstm1"
  type: "LSTM"
  bottom: "reshape1"
  bottom: "cont1"
  top: "lstm1"
  recurrent_param {
    num_output: 10
    weight_filler {
      type: "uniform"
      min: -0.08
      max: 0.08
    }
    bias_filler {
      type: "constant"
      value: 0
    }
  }
}
# lstm1 holds the outputs of all 28 time steps, [T, batch, 10]; split it along the
# time axis so that output2 holds only the 28th time step
layer {
  name: "slice"
  type: "Slice"
  bottom: "lstm1"
  top: "output1"
  top: "output2"
  slice_param {
    axis: 0
    slice_point: 27
  }
}
# output1 would otherwise be printed as a net output and clutter the log; this layer
# only consumes it and has no effect on training (Caffe's "Silence" layer does the
# same without producing an output)
layer {
  name: "reduction"
  type: "Reduction"
  bottom: "output1"
  top: "output11"
  reduction_param {
    axis: 0
  }
}
layer {
  name: "reshape_output"
  type: "Reshape"
  bottom: "output2"
  top: "output22"
  reshape_param {
    shape { dim: 1 dim: -1 }
  }
}
layer {
  name: "accuracy"
  type: "Accuracy"
  bottom: "output22"
  bottom: "label"
  top: "accuracy"
  include {
    phase: TEST
  }
}
layer {
  name: "loss"
  type: "SoftmaxWithLoss"
  bottom: "output22"
  bottom: "label"
  top: "loss"
}
```
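The Slice/Reshape pair at the end of the network keeps only the last LSTM output. The C++ sketch below mimics it, under two assumptions:

- The LSTM top is a time-major [T, N, D] buffer.
- `slice_time` is a hypothetical helper imitating a Slice layer with `axis: 0` and a single `slice_point`. It is not Caffe's implementation.

```cpp
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

// Split a time-major [T, N, D] buffer along axis 0 at slice_point, like a Slice
// layer with axis: 0 and one slice_point: the first part holds time steps
// [0, slice_point) and the second part holds [slice_point, T).
std::pair<std::vector<float>, std::vector<float>>
slice_time(const std::vector<float>& y, int T, int N, int D, int slice_point) {
    assert(y.size() == static_cast<std::size_t>(T) * N * D);
    assert(0 < slice_point && slice_point < T);
    const auto cut = static_cast<std::ptrdiff_t>(slice_point) * N * D;
    std::vector<float> first(y.begin(), y.begin() + cut);
    std::vector<float> second(y.begin() + cut, y.end());
    return {first, second};
}
```

With T = 28, N = 1, D = 10 and slice_point = 27, the first part (output1) has 27 × 10 values and the second part (output2) has the 10 values of the last time step. The following Reshape to [1, -1] only relabels those 10 values as one 1×10 row for SoftmaxWithLoss and Accuracy; it does not move any data.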

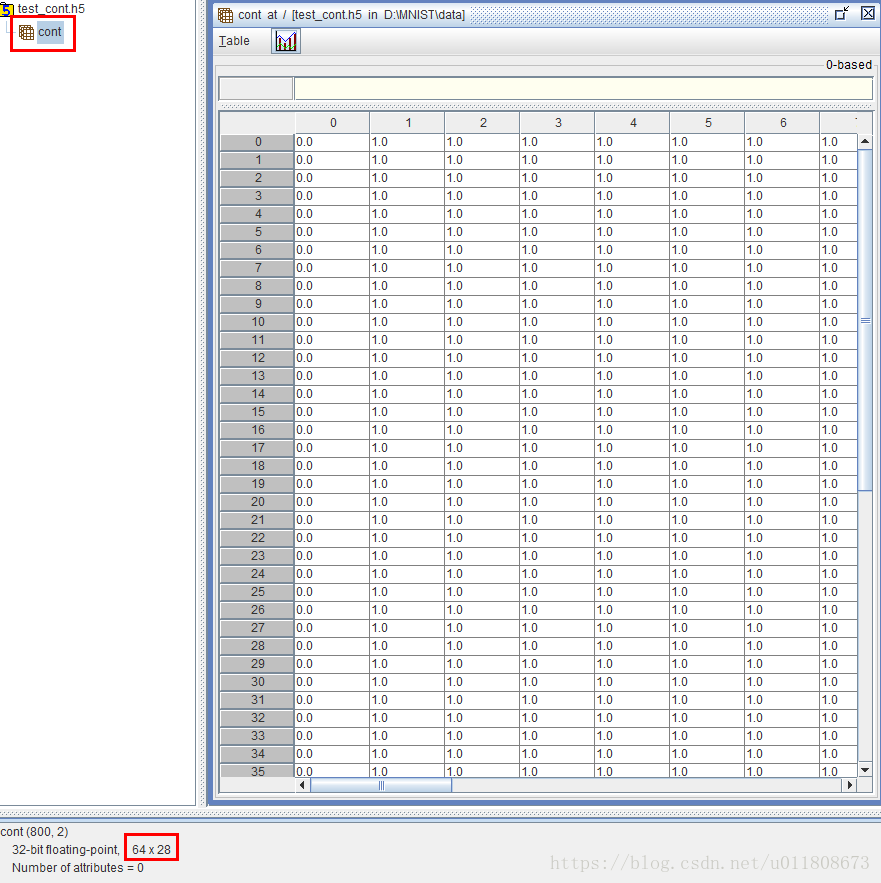

Generating the cont data

```cpp
#include <opencv2/core.hpp>
#include <hdf5.h>
#include <cstdlib>
#include <string>

using namespace cv;
using std::string;

// Write a single-channel 8-bit Mat to an HDF5 file as a 2-D float dataset
// (rows x cols) named dataset1.
void mat2hdf5(Mat &data, const char *filepath, string dataset1)
{
    int data_cols = data.cols;
    int data_rows = data.rows;
    hid_t file_id = H5Fcreate(filepath, H5F_ACC_TRUNC, H5P_DEFAULT, H5P_DEFAULT);
    int rank_data = 2;
    hsize_t dims_data[2];
    dims_data[0] = data_rows;
    dims_data[1] = data_cols;
    hid_t data_id = H5Screate_simple(rank_data, dims_data, NULL);
    hid_t dataset_id = H5Dcreate2(file_id, dataset1.c_str(), H5T_NATIVE_FLOAT, data_id,
                                  H5P_DEFAULT, H5P_DEFAULT, H5P_DEFAULT);
    // Copy the uchar values into a contiguous row-major float buffer.
    float *data_mem = new float[data_rows * data_cols];
    for (int j = 0; j < data_rows; j++)
        for (int i = 0; i < data_cols; i++)
            data_mem[j * data_cols + i] = data.at<uchar>(j, i);
    H5Dwrite(dataset_id, H5T_NATIVE_FLOAT, H5S_ALL, H5S_ALL, H5P_DEFAULT, data_mem);
    // Close the dataspace, dataset and file, then free the buffer.
    H5Sclose(data_id);
    H5Dclose(dataset_id);
    H5Fclose(file_id);
    delete[] data_mem;
}

int main()
{
    // 64 sequences x 28 time steps: cont is 0 at the first step and 1 afterwards.
    Mat data_train1(64, 28, CV_8UC1), data_test1(64, 28, CV_8UC1);
    for (int i = 0; i < 64; i++)
    {
        for (int j = 0; j < 28; j++)
        {
            uchar v = (j == 0) ? 0 : 1;
            data_train1.at<uchar>(i, j) = v;
            data_test1.at<uchar>(i, j) = v;
        }
    }
    mat2hdf5(data_train1, "D:\\MNIST\\data\\train_cont.h5", "cont");
    mat2hdf5(data_test1, "D:\\MNIST\\data\\test_cont.h5", "cont");
    system("PAUSE");
    return 0;
}
```

test_cont contains the same data as train_cont: a 64×28 matrix (64 sequences of 28 time steps each). The number of rows, 64, can be changed.
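The prototxt does not read these `.h5` files directly. The `source` of Caffe's HDF5Data layer is a text file listing HDF5 file paths, one per line. Inside each file, the dataset name must match the layer's top name, which is why `mat2hdf5` is called with `"cont"`.

The following is a minimal sketch for producing such a list file. `write_hdf5_list` is a hypothetical helper. It assumes `train_conv_h5.txt` and `test_conv_h5.txt` from the prototxt should point at the files generated above.

```cpp
#include <cassert>
#include <fstream>
#include <string>
#include <vector>

// Write a list file for Caffe's HDF5Data layer: one HDF5 file path per line.
// Returns false if the list file cannot be opened or written.
bool write_hdf5_list(const std::string& list_path,
                     const std::vector<std::string>& h5_paths) {
    std::ofstream out(list_path);
    if (!out) return false;
    for (const std::string& p : h5_paths) out << p << '\n';
    return static_cast<bool>(out);
}
```

For example, `write_hdf5_list("train_conv_h5.txt", {"D:\\MNIST\\data\\train_cont.h5"})` writes a one-line list pointing at the training cont file. The test list is written the same way.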

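The input data must be converted from [batch, 1, w, h] to [T, batch, h], treating w as the time step T. I did not find a dimension-permuting (transpose) layer in Caffe, so a plain Reshape is used instead. For this to be valid, batch must be set to 1; otherwise the reshaped layout does not match the intended conversion.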
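The batch = 1 restriction can be checked directly. A Reshape layer only reinterprets the same memory, while the intended conversion is a transpose that moves the w axis in front of the batch axis.

The C++ sketch below compares the two layouts. Its helper names are hypothetical, and it assumes Caffe's row-major blob layout with a channel axis of size 1.

```cpp
#include <cassert>
#include <cstddef>
#include <numeric>
#include <vector>

// Move the W axis (treated as time) of a [B, 1, W, H] buffer to the front,
// producing the [W, B, H] layout the LSTM expects: out(w, b, h) = in(b, 0, w, h).
std::vector<float> to_time_major(const std::vector<float>& in, int B, int W, int H) {
    assert(in.size() == static_cast<std::size_t>(B) * W * H);
    std::vector<float> out(in.size());
    for (int b = 0; b < B; ++b)
        for (int w = 0; w < W; ++w)
            for (int h = 0; h < H; ++h)
                out[(static_cast<std::size_t>(w) * B + b) * H + h] =
                    in[(static_cast<std::size_t>(b) * W + w) * H + h];
    return out;
}

// A Reshape never moves data, so it gives the intended layout only when the
// transposed buffer is identical to the original one.
bool reshape_equals_transpose(int B, int W, int H) {
    std::vector<float> in(static_cast<std::size_t>(B) * W * H);
    std::iota(in.begin(), in.end(), 0.0f);  // a distinct value for every element
    return to_time_major(in, B, W, H) == in;  // Reshape would leave `in` unchanged
}
```

With B = 1 the transpose is the identity on memory, so Reshape to [28, 1, 28] is correct. With B = 2 or more, time step w of sample b ends up in the wrong slot.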

Training results

```
I0715 17:11:19.591768 10532 solver.cpp:348] Iteration 790000, Testing net (#0)
I0715 17:11:19.600821 10532 solver.cpp:415] Test net output #0: accuracy = 0.83
I0715 17:11:19.600821 10532 solver.cpp:415] Test net output #1: loss = 1.18451 (* 1 = 1.18451 loss)
I0715 17:11:19.600821 10532 solver.cpp:415] Test net output #2: output11 = -35.0393
I0715 17:11:19.600821 10532 solver.cpp:220] Iteration 790000 (6993.01 iter/s, 0.715s/5000 iters), loss = 1.00843
I0715 17:11:19.600821 10532 solver.cpp:239] Train net output #0: loss = 1.00847 (* 1 = 1.00847 loss)
I0715 17:11:19.600821 10532 solver.cpp:239] Train net output #1: output11 = -43.1359
I0715 17:11:19.600821 10532 sgd_solver.cpp:105] Iteration 790000, lr = 0.000373837
I0715 17:11:20.325745 10532 solver.cpp:348] Iteration 795000, Testing net (#0)
I0715 17:11:20.335746 10532 solver.cpp:415] Test net output #0: accuracy = 0.77
I0715 17:11:20.335746 10532 solver.cpp:415] Test net output #1: loss = 1.24829 (* 1 = 1.24829 loss)
I0715 17:11:20.335746 10532 solver.cpp:415] Test net output #2: output11 = -37.8989
I0715 17:11:20.335746 10532 solver.cpp:220] Iteration 795000 (6811.99 iter/s, 0.734s/5000 iters), loss = 0.868423
I0715 17:11:20.335746 10532 solver.cpp:239] Train net output #0: loss = 0.868455 (* 1 = 0.868455 loss)
I0715 17:11:20.335746 10532 solver.cpp:239] Train net output #1: output11 = -54.416
I0715 17:11:20.335746 10532 sgd_solver.cpp:105] Iteration 795000, lr = 0.000372094
I0715 17:11:21.056661 10532 solver.cpp:468] Snapshotting to binary proto file save_path/_iter_800000.caffemodel
I0715 17:11:21.058668 10532 sgd_solver.cpp:273] Snapshotting solver state to binary proto file save_path/_iter_800000.solverstate
I0715 17:11:21.059669 10532 solver.cpp:328] Iteration 800000, loss = 1.01535
I0715 17:11:21.059669 10532 solver.cpp:348] Iteration 800000, Testing net (#0)
I0715 17:11:21.068692 10532 solver.cpp:415] Test net output #0: accuracy = 0.75
I0715 17:11:21.068692 10532 solver.cpp:415] Test net output #1: loss = 1.32086 (* 1 = 1.32086 loss)
I0715 17:11:21.068692 10532 solver.cpp:415] Test net output #2: output11 = -33.7201
I0715 17:11:21.068692 10532 solver.cpp:333] Optimization Done.
I0715 17:11:21.068692 10532 caffe.cpp:260] Optimization Done.
```