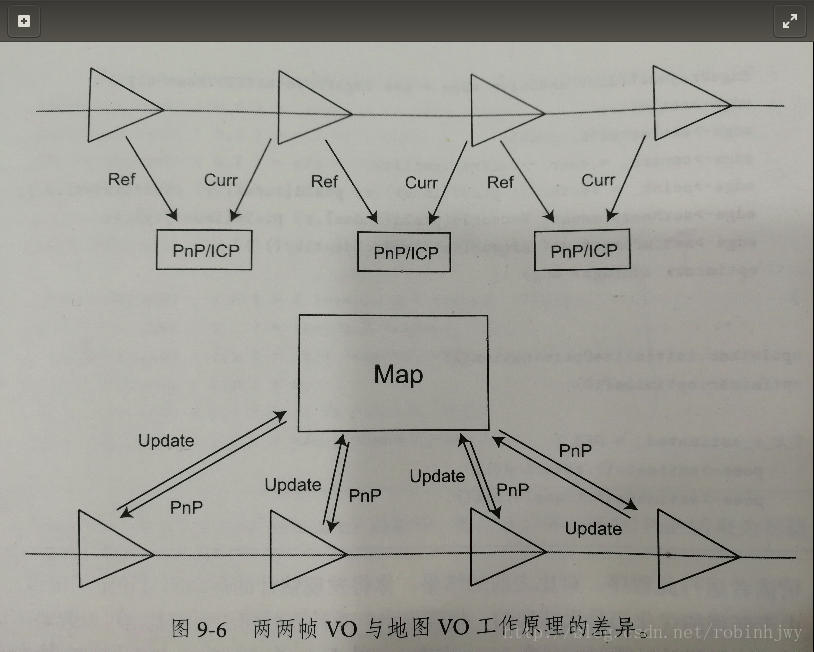

在两两帧的VO中,过于依赖参考帧,因为一旦参考帧丢失,就无法进行下去。在0.4版本,采用地图VO ,每一帧为地图贡献一些信息,比如添加新的特征点或更新旧特征点的位置估计。地图中的特征点一般都是使用世界坐标,所以当前帧到来时,匹配它和地图之间运动关系可以直接得到Tcw。上一张图:

这样做的好处就是,维护一个不断更新的地图,只要地图正确,即使中间某帧出了问题,不至于崩溃。

另外地图分为局部和全局。这里我们实时定位的需要的是局部地图,全局地图一般用于回环检测和整体建图。

首先,增加肯定就是之前没有用到的MapPoint类。

mappoint.h

#ifndef MAPPOINT_H

#define MAPPOINT_H

#include "myslam/common_include.h"

namespace myslam

{

class Frame;

class MapPoint

{

public:

typedef shared_ptr<MapPoint> Ptr;

unsigned long id_; // ID

static unsigned long factory_id_; // factory id

bool good_; // wheter a good point

Vector3d pos_; // Position in world

Vector3d norm_; // Normal of viewing direction

Mat descriptor_; // Descriptor for matching

list<Frame*> observed_frames_; // key-frames that can observe this point

int matched_times_; // being an inliner in pose estimation

int visible_times_; // being visible in current frame

MapPoint();

MapPoint(

unsigned long id,

const Vector3d& position,

const Vector3d& norm,

Frame* frame=nullptr,

const Mat& descriptor=Mat()

);

inline cv::Point3f getPositionCV() const {

return cv::Point3f( pos_(0,0), pos_(1,0), pos_(2,0) );

}

static MapPoint::Ptr createMapPoint();

static MapPoint::Ptr createMapPoint(

const Vector3d& pos_world,

const Vector3d& norm_,

const Mat& descriptor,

Frame* frame );

};

}

#endif // MAPPOINT_H

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

- 35

- 36

- 37

- 38

- 39

- 40

- 41

- 42

- 43

- 44

- 45

- 46

- 47

- 48

- 49

头文件很简单,地图点本质上就是空间中3D点,成员函数为地图点的基本信息。有一个Frame*类型的 list,用于记录观察到此地图点的帧。还有个取得地图点3维坐标的功能函数,比较简单,直接写成内联了。还有个地图点生成函数,传入成员变量所需要的信息就好了。

看一下实现 mappoint.cpp

#include "myslam/common_include.h"

#include "myslam/mappoint.h"

namespace myslam

{

//默认构造函数,设定各种默认值

MapPoint::MapPoint()

: id_(-1), pos_(Vector3d(0,0,0)), norm_(Vector3d(0,0,0)), good_(true), visible_times_(0), matched_times_(0)

{

}

//构造函数,将观察帧push_back进去

MapPoint::MapPoint ( long unsigned int id, const Vector3d& position, const Vector3d& norm, Frame* frame, const Mat& descriptor )

: id_(id), pos_(position), norm_(norm), good_(true), visible_times_(1), matched_times_(1), descriptor_(descriptor)

{

observed_frames_.push_back(frame);

}

//创建地图点时,直接在累加上ID然后构造一个就好了。返回定义的MapPoint类型指针Ptr

MapPoint::Ptr MapPoint::createMapPoint()

{

return MapPoint::Ptr(new MapPoint(factory_id_++, Vector3d(0,0,0), Vector3d(0,0,0)));

}

//同样也是

MapPoint::Ptr MapPoint::createMapPoint (

const Vector3d& pos_world,

const Vector3d& norm,

const Mat& descriptor,

Frame* frame )

{

return MapPoint::Ptr(

new MapPoint( factory_id_++, pos_world, norm, frame, descriptor )

);

}

//这个是出厂ID?做什么用?只要创建地图点对象,它的值就为0,并没有看到哪里对其更新。构造函数中也没有此成员。

unsigned long MapPoint::factory_id_ = 0;

}- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

- 35

- 36

- 37

- 38

- 39

- 40

- 41

由于工作流程的改变,所以主要的修改在VisualOdometry类。

头文件中改动不多,注释标注,主要是删除关于参考帧的东西,还有增加关于地图的一些操作:

#ifndef VISUALODOMETRY_H

#define VISUALODOMETRY_H

#include "myslam/common_include.h"

#include "myslam/map.h"

#include <opencv2/features2d/features2d.hpp>

namespace myslam

{

class VisualOdometry

{

public:

typedef shared_ptr<VisualOdometry> Ptr;

enum VOState {

INITIALIZING=-1,

OK=0,

LOST

};

VOState state_; // current VO status

Map::Ptr map_; // map with all frames and map points

Frame::Ptr ref_; // reference key-frame

Frame::Ptr curr_; // current frame

//在ORB部分去掉了关于参考帧的东西,3D点,描述子等

cv::Ptr<cv::ORB> orb_; // orb detector and computer

vector<cv::KeyPoint> keypoints_curr_; // keypoints in current frame

Mat descriptors_curr_; // descriptor in current frame

//在匹配器中,所需要的匹配变成了地图点和帧中的关键点。

cv::FlannBasedMatcher matcher_flann_; // flann matcher

vector<MapPoint::Ptr> match_3dpts_; // matched 3d points

vector<int> match_2dkp_index_; // matched 2d pixels (index of kp_curr)

//这里的T也变成了直接的cw,而不是之前的当前帧和参考帧的cr

SE3 T_c_w_estimated_; // the estimated pose of current frame

int num_inliers_; // number of inlier features in icp

int num_lost_; // number of lost times

// parameters

int num_of_features_; // number of features

double scale_factor_; // scale in image pyramid

int level_pyramid_; // number of pyramid levels

float match_ratio_; // ratio for selecting good matches

int max_num_lost_; // max number of continuous lost times

int min_inliers_; // minimum inliers

double key_frame_min_rot; // minimal rotation of two key-frames

double key_frame_min_trans; // minimal translation of two key-frames

double map_point_erase_ratio_; // remove map point ratio

public: // functions

VisualOdometry();

~VisualOdometry();

bool addFrame( Frame::Ptr frame ); // add a new frame

protected:

// inner operation

void extractKeyPoints();

void computeDescriptors();

void featureMatching();

void poseEstimationPnP();

//增加的优化地图的函数,这个函数可能实现的就是对整个后端地图的优化

void optimizeMap();

void addKeyFrame();

//增加地图点函数

void addMapPoints();

bool checkEstimatedPose();

bool checkKeyFrame();

//增加的取得视角函数??

double getViewAngle( Frame::Ptr frame, MapPoint::Ptr point );

};

}

#endif // VISUALODOMETRY_H

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

- 35

- 36

- 37

- 38

- 39

- 40

- 41

- 42

- 43

- 44

- 45

- 46

- 47

- 48

- 49

- 50

- 51

- 52

- 53

- 54

- 55

- 56

- 57

- 58

- 59

- 60

- 61

- 62

- 63

- 64

- 65

- 66

- 67

- 68

- 69

- 70

- 71

- 72

- 73

- 74

- 75

- 76

- 77

- 78

- 79

- 80

- 81

- 82

主要的更改在源文件实现上的一些函数,因为工作流程改变,所以每次循环中要对地图进行增删、统计每个地图点被观测到的次数,等等OptimizerMap()函数对地图进行优化,包括删除不在视野中的点,匹配数量减少时增加新点等操作。具体代码如下:

#include <opencv2/highgui/highgui.hpp>

#include <opencv2/imgproc/imgproc.hpp>

#include <opencv2/calib3d/calib3d.hpp>

#include <algorithm>

#include <boost/timer.hpp>

#include "myslam/config.h"

#include "myslam/visual_odometry.h"

#include "myslam/g2o_types.h"

namespace myslam

{

VisualOdometry::VisualOdometry() :

state_ ( INITIALIZING ), ref_ ( nullptr ), curr_ ( nullptr ), map_ ( new Map ), num_lost_ ( 0 ), num_inliers_ ( 0 ), matcher_flann_ ( new cv::flann::LshIndexParams ( 5,10,2 ) )

{

num_of_features_ = Config::get<int> ( "number_of_features" );

scale_factor_ = Config::get<double> ( "scale_factor" );

level_pyramid_ = Config::get<int> ( "level_pyramid" );

match_ratio_ = Config::get<float> ( "match_ratio" );

max_num_lost_ = Config::get<float> ( "max_num_lost" );

min_inliers_ = Config::get<int> ( "min_inliers" );

key_frame_min_rot = Config::get<double> ( "keyframe_rotation" );

key_frame_min_trans = Config::get<double> ( "keyframe_translation" );

map_point_erase_ratio_ = Config::get<double> ( "map_point_erase_ratio" );

orb_ = cv::ORB::create ( num_of_features_, scale_factor_, level_pyramid_ );

}

VisualOdometry::~VisualOdometry()

{

}

bool VisualOdometry::addFrame ( Frame::Ptr frame )

{

switch ( state_ )

{

case INITIALIZING:

{

state_ = OK;

curr_ = ref_ = frame;

// extract features from first frame and add them into map

extractKeyPoints();

computeDescriptors();

//之前增加关键帧需调用map类中的insertKeyFrame()函数,

// 这里第一帧的话,就直接调用自身的addKeyFrame()函数添加进地图

addKeyFrame(); // the first frame is a key-frame

break;

}

case OK:

{

//整个流程的改变就是只需要不断进行每一帧的位姿迭代,

//而不需要用到参考帧的3D点进行匹配得T了

curr_ = frame;

//说一下这一句,新的帧来了,先将其位姿赋值为参考帧的位姿,

//因为考虑到匹配失败的情况下,这一帧就定义为丢失了,所以位姿就用参考帧的了。

//如果一切正常,求得了当前帧的位姿,就进行赋值覆盖掉就好了。

curr_->T_c_w_ = ref_->T_c_w_;

extractKeyPoints();

computeDescriptors();

featureMatching();

poseEstimationPnP();

if ( checkEstimatedPose() == true ) // a good estimation

{

//正常求得位姿T,对当前帧位姿进行更新

curr_->T_c_w_ = T_c_w_estimated_;

optimizeMap();

num_lost_ = 0;

if ( checkKeyFrame() == true ) // is a key-frame

{

addKeyFrame();

}

}

//考虑到匹配失败的情况下,当前帧也不会没有位姿,只是还是前一帧的罢了。

else // bad estimation due to various reasons

{

num_lost_++;

if ( num_lost_ > max_num_lost_ )

{

state_ = LOST;

}

return false;

}

break;

}

case LOST:

{

cout<<"vo has lost."<<endl;

break;

}

}

return true;

}

void VisualOdometry::extractKeyPoints()

{

boost::timer timer;

orb_->detect ( curr_->color_, keypoints_curr_ );

cout<<"extract keypoints cost time: "<<timer.elapsed() <<endl;

}

void VisualOdometry::computeDescriptors()

{

boost::timer timer;

orb_->compute ( curr_->color_, keypoints_curr_, descriptors_curr_ );

cout<<"descriptor computation cost time: "<<timer.elapsed() <<endl;

}

void VisualOdometry::featureMatching()

{

boost::timer timer;

vector<cv::DMatch> matches;

// select the candidates in map

//建立一个目标图,承接匹配需要地图点的描述子,因为匹配是需要的参数是描述子

Mat desp_map;

//建立一个候选地图点数组,承接匹配需要的地图点

vector<MapPoint::Ptr> candidate;

//检查地图点是否为匹配需要的,逻辑就是遍历维护的局部地图中所有地图点,

//然后利用isInFrame()函数检查有哪些地图点在当前观察帧中,

//如果在则把地图点push进candidate中,描述子push进desp_map中

for ( auto& allpoints: map_->map_points_ )

{

//这里还是STL用法,allpoints为map类中定义的双模板类型类成员,此表示第二个模板类型

//总之功能上就是把地图点取出,赋值给p

MapPoint::Ptr& p = allpoints.second;

// check if p in curr frame image

//利用是否在匹配帧中来判断是否添加进去

if ( curr_->isInFrame(p->pos_) )

{

// add to candidate

//观察次数增加一次

p->visible_times_++;

//点push进candidate

candidate.push_back( p );

//描述子push进desp_map

desp_map.push_back( p->descriptor_ );

}

}

//这步匹配中,由原来的参考帧,变成了上面定义的desp_map地图,进行匹配。

matcher_flann_.match ( desp_map, descriptors_curr_, matches );

// select the best matches

float min_dis = std::min_element (

matches.begin(), matches.end(),

[] ( const cv::DMatch& m1, const cv::DMatch& m2 )

{

return m1.distance < m2.distance;

} )->distance;

match_3dpts_.clear();

match_2dkp_index_.clear();

for ( cv::DMatch& m : matches )

{

if ( m.distance < max<float> ( min_dis*match_ratio_, 30.0 ) )

{

//这里变化是不像之前直接将好的m push进feature_matches_就完了。

//这里感觉像做一个记录,candidate中存的是观察到的地图点

// 进一步,在candidate中选出好的匹配的点,push进match_3dpts_,

//这个match_3dpts_代表当前这一帧计算T时所利用到的所有好的地图点,放入其中。

//由此可见,candidate只是个中间存储,新的一帧过来会被刷新。

//同样match_2dkp_index_也是同样的道理,只不过是记录的当前帧detect出来的keypoint数组中的点的索引。

match_3dpts_.push_back( candidate[m.queryIdx] );

match_2dkp_index_.push_back( m.trainIdx );

}

}

cout<<"good matches: "<<match_3dpts_.size() <<endl;

cout<<"match cost time: "<<timer.elapsed() <<endl;

}

//这里删除了setRef3DPoints()函数,用不到了

void VisualOdometry::poseEstimationPnP()

{

// construct the 3d 2d observations

vector<cv::Point3f> pts3d;

vector<cv::Point2f> pts2d;

for ( int index:match_2dkp_index_ )

{

pts2d.push_back ( keypoints_curr_[index].pt );

}

for ( MapPoint::Ptr pt:match_3dpts_ )

{

pts3d.push_back( pt->getPositionCV() );

}

Mat K = ( cv::Mat_<double> ( 3,3 ) <<

ref_->camera_->fx_, 0, ref_->camera_->cx_,

0, ref_->camera_->fy_, ref_->camera_->cy_,

0,0,1

);

Mat rvec, tvec, inliers;

cv::solvePnPRansac ( pts3d, pts2d, K, Mat(), rvec, tvec, false, 100, 4.0, 0.99, inliers );

num_inliers_ = inliers.rows;

cout<<"pnp inliers: "<<num_inliers_<<endl;

T_c_w_estimated_ = SE3 (

SO3 ( rvec.at<double> ( 0,0 ), rvec.at<double> ( 1,0 ), rvec.at<double> ( 2,0 ) ),

Vector3d ( tvec.at<double> ( 0,0 ), tvec.at<double> ( 1,0 ), tvec.at<double> ( 2,0 ) )

);

// using bundle adjustment to optimize the pose

typedef g2o::BlockSolver<g2o::BlockSolverTraits<6,2>> Block;

Block::LinearSolverType* linearSolver = new g2o::LinearSolverDense<Block::PoseMatrixType>();

Block* solver_ptr = new Block ( std::unique_ptr<Block::LinearSolverType>(linearSolver) );

g2o::OptimizationAlgorithmLevenberg* solver = new g2o::OptimizationAlgorithmLevenberg ( std::unique_ptr<Block>(solver_ptr) );

g2o::SparseOptimizer optimizer;

optimizer.setAlgorithm ( solver );

g2o::VertexSE3Expmap* pose = new g2o::VertexSE3Expmap();

pose->setId ( 0 );

pose->setEstimate ( g2o::SE3Quat (

T_c_w_estimated_.rotation_matrix(), T_c_w_estimated_.translation()

));

optimizer.addVertex ( pose );

// edges

for ( int i=0; i<inliers.rows; i++ )

{

int index = inliers.at<int> ( i,0 );

// 3D -> 2D projection

EdgeProjectXYZ2UVPoseOnly* edge = new EdgeProjectXYZ2UVPoseOnly();

edge->setId ( i );

edge->setVertex ( 0, pose );

edge->camera_ = curr_->camera_.get();

edge->point_ = Vector3d ( pts3d[index].x, pts3d[index].y, pts3d[index].z );

edge->setMeasurement ( Vector2d ( pts2d[index].x, pts2d[index].y ) );

edge->setInformation ( Eigen::Matrix2d::Identity() );

optimizer.addEdge ( edge );

// set the inlier map points

match_3dpts_[index]->matched_times_++;

}

optimizer.initializeOptimization();

optimizer.optimize ( 10 );

T_c_w_estimated_ = SE3 (

pose->estimate().rotation(),

pose->estimate().translation()

);

cout<<"T_c_w_estimated_: "<<endl<<T_c_w_estimated_.matrix()<<endl;

}

bool VisualOdometry::checkEstimatedPose()

{

// check if the estimated pose is good

if ( num_inliers_ < min_inliers_ )

{

cout<<"reject because inlier is too small: "<<num_inliers_<<endl;

return false;

}

// if the motion is too large, it is probably wrong

SE3 T_r_c = ref_->T_c_w_ * T_c_w_estimated_.inverse();

Sophus::Vector6d d = T_r_c.log();

if ( d.norm() > 5.0 )

{

cout<<"reject because motion is too large: "<<d.norm() <<endl;

return false;

}

return true;

}

bool VisualOdometry::checkKeyFrame()

{

SE3 T_r_c = ref_->T_c_w_ * T_c_w_estimated_.inverse();

Sophus::Vector6d d = T_r_c.log();

Vector3d trans = d.head<3>();

Vector3d rot = d.tail<3>();

if ( rot.norm() >key_frame_min_rot || trans.norm() >key_frame_min_trans )

return true;

return false;

}

//增加关键帧函数多了一步在第一帧时,将其对应的地图点全部添加进地图中。

void VisualOdometry::addKeyFrame()

{

if ( map_->keyframes_.empty() )

{

// first key-frame, add all 3d points into map

for ( size_t i=0; i<keypoints_curr_.size(); i++ )

{

double d = curr_->findDepth ( keypoints_curr_[i] );

if ( d < 0 )

continue;

Vector3d p_world = ref_->camera_->pixel2world (

Vector2d ( keypoints_curr_[i].pt.x, keypoints_curr_[i].pt.y ), curr_->T_c_w_, d

);

Vector3d n = p_world - ref_->getCamCenter();

n.normalize();

//上方求出构造地图点所需所有参数,3D点、模长、描述子、帧,然后构造一个地图点

MapPoint::Ptr map_point = MapPoint::createMapPoint(

p_world, n, descriptors_curr_.row(i).clone(), curr_.get()

);

//添加进地图

map_->insertMapPoint( map_point );

}

}

//一样的,第一帧添加进关键帧

map_->insertKeyFrame ( curr_ );

ref_ = curr_;

}

//新增函数,增加地图中的点。局部地图类似于slidewindow一样,随时的增删地图中的点,来跟随运动

void VisualOdometry::addMapPoints()

{

// add the new map points into map

//创建一个bool型的数组matched,大小为当前keypoints数组大小,值全为false

vector<bool> matched(keypoints_curr_.size(), false);

//首先这个match_2dkp_index_是新来的当前帧跟地图匹配时,好的匹配到的关键点在keypoins数组中的索引

//在这里将已经匹配的keypoint索引置为true

for ( int index:match_2dkp_index_ )

matched[index] = true;

//遍历当前keypoints数组

for ( int i=0; i<keypoints_curr_.size(); i++ )

{

//如果为true,说明在地图中找到了匹配,也就意味着地图中已经有这个点了。直接continue

if ( matched[i] == true )

continue;

//如果没有continue的话,说明这个点在地图中没有找到匹配,认定为新的点,

//下一步就是找到depth数据,构造3D点,然后构造地图点,添加进地图即可。

double d = ref_->findDepth ( keypoints_curr_[i] );

if ( d<0 )

continue;

Vector3d p_world = ref_->camera_->pixel2world (

Vector2d ( keypoints_curr_[i].pt.x, keypoints_curr_[i].pt.y ),

curr_->T_c_w_, d

);

Vector3d n = p_world - ref_->getCamCenter();

n.normalize();

MapPoint::Ptr map_point = MapPoint::createMapPoint(

p_world, n, descriptors_curr_.row(i).clone(), curr_.get()

);

map_->insertMapPoint( map_point );

}

}

//新增函数:优化地图。主要是为了维护地图的规模。删除一些地图点,在点过少时增加地图点等操作。

void VisualOdometry::optimizeMap()

{

// remove the hardly seen and no visible points

//删除地图点,遍历地图中的地图点。并分几种情况进行删除。

for ( auto iter = map_->map_points_.begin(); iter != map_->map_points_.end(); )

{

//如果点在当前帧都不可见了,说明跑的比价远了,删掉

if ( !curr_->isInFrame(iter->second->pos_) )

{

iter = map_->map_points_.erase(iter);

continue;

}

//定义匹配率,用匹配次数/可见次数,匹配率过低说明经常见但是没有几次匹配。应该是一些比较难识别的点,也就是出来的描述子比价奇葩。所以删掉

float match_ratio = float(iter->second->matched_times_)/iter->second->visible_times_;

if ( match_ratio < map_point_erase_ratio_ )

{

iter = map_->map_points_.erase(iter);

continue;

}

double angle = getViewAngle( curr_, iter->second );

if ( angle > M_PI/6. )

{

iter = map_->map_points_.erase(iter);

continue;

}

//继续,可以根据一些其他条件自己添加要删除点的情况

if ( iter->second->good_ == false )

{

// TODO try triangulate this map point

}

iter++;

}

//下面说一些增加点的情况,首先当前帧去地图中匹配时,点少于100个了,

// 一般情况是运动幅度过大了,跟之前的帧没多少交集了,所以增加一下。

if ( match_2dkp_index_.size()<100 )

addMapPoints();

//如果点过多了,多于1000个,适当增加释放率,让地图处于释放点的趋势。

if ( map_->map_points_.size() > 1000 )

{

// TODO map is too large, remove some one

map_point_erase_ratio_ += 0.05;

}

//如果没有多于1000个,保持释放率在0.1,维持地图的体量为平衡态

else

map_point_erase_ratio_ = 0.1;

cout<<"map points: "<<map_->map_points_.size()<<endl;

}

//取得一个空间点在一个帧下的视角角度。返回值是double类型的角度值。

double VisualOdometry::getViewAngle ( Frame::Ptr frame, MapPoint::Ptr point )

{

//构造发方法是空间点坐标减去相机中心坐标。得到从相机中心指向指向空间点的向量。

Vector3d n = point->pos_ - frame->getCamCenter();

//单位化

n.normalize();

//返回一个角度,acos()为反余弦,

//向量*乘为:a*b=|a||b|cos<a,b>

//所以单位向量的*乘得到的是两个向量的余弦值,再用acos()即得到角度,返回

//物理意义就是得到匆匆世界坐标系下看空间点和从相机坐标系下看空间点,视角差的角度。

return acos( n.transpose()*point->norm_ );

}

}