MediaSync is an API added in Android M (API level 23). It helps applications play audio and video in sync. The official documentation describes it like this:

From Android M:

MediaSync:

class which helps applications to synchronously render audio and video streams. The audio buffers are submitted in non-blocking fashion and are returned via a callback. It also supports dynamic playback rate.

Basic usage of MediaSync

Step 1: initialize MediaSync. Set up the MediaCodec decoder and the AudioTrack, then hand the AudioTrack and the Surface to MediaSync.

```java
MediaSync sync = new MediaSync();
sync.setSurface(surface);
Surface inputSurface = sync.createInputSurface();
...
// MediaCodec videoDecoder = ...;
videoDecoder.configure(format, inputSurface, ...);
...
sync.setAudioTrack(audioTrack);
```

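Step 2: MediaSync itself only handles audio buffers directly (video frames reach it through the input Surface). There are two entry points: queueAudio, which submits an audio buffer, and the onAudioBufferConsumed callback, which fires once MediaSync has finished with the buffer:

```java
sync.setCallback(new MediaSync.Callback() {
    @Override
    public void onAudioBufferConsumed(MediaSync sync, ByteBuffer audioBuffer, int bufferId) {
        ...
    }
}, null);
```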


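Step 3: set the playback speed.

```java
// This needs to be done since sync is paused on creation.
sync.setPlaybackParams(new PlaybackParams().setSpeed(1.f));
```

Step 4: start moving audio and video buffers. This follows the usual MediaCodec loop. Each decoded audio buffer is handed to MediaSync with queueAudio; MediaSync writes it to the AudioTrack and then returns it in the callback, where the app releases it back to the decoder. Video output buffers only need a call to releaseOutputBuffer with a timestamp.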

```java
for (;;) {
    ...
    // send video frames to surface for rendering, e.g., call
    videoDecoder.releaseOutputBuffer(videoOutputBufferIx, videoPresentationTimeNs);
    ...
    sync.queueAudio(audioByteBuffer, bufferId, audioPresentationTimeUs); // non-blocking.
    // The audioByteBuffer and bufferId will be returned via callback.
}
```

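Step 5: when playback is finished:

```java
sync.setPlaybackParams(new PlaybackParams().setSpeed(0.f));
sync.release();
sync = null;
```

If you use MediaCodec in asynchronous mode, the code looks like the following. It shows more clearly how video buffers and audio buffers are handled separately: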

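```java
// MediaCodec.Callback (asynchronous mode), shared by the video and audio decoders
public void onOutputBufferAvailable(MediaCodec codec, int bufferId, MediaCodec.BufferInfo info) {
    // ...
    if (codec == videoDecoder) {
        // presentationTimeUs is in microseconds; MediaSync reads the buffer timestamp in nanoseconds
        codec.releaseOutputBuffer(bufferId, 1000 * info.presentationTimeUs);
    } else {
        ByteBuffer audioByteBuffer = codec.getOutputBuffer(bufferId);
        sync.queueAudio(audioByteBuffer, bufferId, info.presentationTimeUs);
    }
    // ...
}

// MediaSync.Callback
public void onAudioBufferConsumed(MediaSync sync, ByteBuffer buffer, int bufferId) {
    // ...
    // Give the output buffer back to the audio decoder (render = false: nothing to draw)
    audioDecoder.releaseOutputBuffer(bufferId, false);
    // ...
}
```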

Key classes and methods of MediaSync

SyncParams: an API added in Android M for controlling A/V sync.

1. How audio is handled when the playback rate changes

int AUDIO_ADJUST_MODE_DEFAULT

System will determine best handling of audio for playback rate adjustments.

Used by default. This will make audio play faster or slower as required by the sync source without changing its pitch; however, system may fall back to some other method (e.g. change the pitch, or mute the audio) if time stretching is no longer supported for the playback rate.

int AUDIO_ADJUST_MODE_RESAMPLE

Resample audio when playback rate must be adjusted.

This will make audio play faster or slower as required by the sync source by changing its pitch (making it lower to play slower, and higher to play faster.)

int AUDIO_ADJUST_MODE_STRETCH

Time stretch audio when playback rate must be adjusted.

This will make audio play faster or slower as required by the sync source without changing its pitch, as long as it is supported for the playback rate.
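To make the difference between the three modes concrete, here is a simplified C++ model of playing a clip at a given speed. It is not platform code: `AudioAdjustMode`, `AdjustResult` and `adjustAudio` are hypothetical names, and the fallback chosen for DEFAULT (resampling when stretching is unsupported) is an assumption, since the documentation only says the system may change the pitch or mute the audio.

```cpp
#include <cassert>
#include <cstdint>

// Simplified model of the three AUDIO_ADJUST_MODE_* behaviors (not platform code).
enum class AudioAdjustMode { Default, Resample, Stretch };

struct AdjustResult {
    double pitchFactor;       // 1.0 means the original pitch is kept
    int64_t outputDurationUs; // how long the clip takes to play at `speed`
};

// Plays `durationUs` of audio at `speed`. Both approaches change the duration;
// only resampling changes the pitch. Assumptions: DEFAULT stretches when that
// is supported and otherwise resamples; STRETCH is modeled as always keeping pitch.
AdjustResult adjustAudio(AudioAdjustMode mode, double speed, int64_t durationUs,
                         bool stretchSupported) {
    assert(speed > 0.0);
    bool stretch = mode == AudioAdjustMode::Stretch ||
                   (mode == AudioAdjustMode::Default && stretchSupported);
    AdjustResult r;
    r.outputDurationUs = static_cast<int64_t>(durationUs / speed);
    r.pitchFactor = stretch ? 1.0 : speed; // resampling scales pitch by the speed
    return r;
}
```

At 2x speed, for instance, both RESAMPLE and STRETCH halve the playing time, but only RESAMPLE doubles the pitch.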

2. Choosing the A/V sync source

int SYNC_SOURCE_AUDIO

Use audio track for sync source. This requires audio data and an audio track.

int SYNC_SOURCE_DEFAULT

Use the default sync source (default). If media has video, the sync renders to a surface that directly renders to a display, and tolerance is non zero (e.g. not less than 0.001) vsync source is used for clock source. Otherwise, if media has audio, audio track is used. Finally, if media has no audio, system clock is used.

int SYNC_SOURCE_SYSTEM_CLOCK

Use system monotonic clock for sync source.

int SYNC_SOURCE_VSYNC

Use vsync as the sync source. This requires video data and an output surface that directly renders to the display, e.g. SurfaceView
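The SYNC_SOURCE_DEFAULT rule quoted above is a small decision chain. The sketch below encodes that documented rule only; `SyncSource` and `resolveDefaultSyncSource` are hypothetical names, and the native MediaSync code analyzed later is not guaranteed to follow this exact order.

```cpp
#include <cassert>

enum class SyncSource { Vsync, Audio, SystemClock };

// Hypothetical helper encoding the documented SYNC_SOURCE_DEFAULT rule.
SyncSource resolveDefaultSyncSource(bool hasVideo, bool surfaceRendersToDisplay,
                                    float tolerance, bool hasAudio) {
    // Video on a surface that renders directly to the display, with a
    // non-zero tolerance (not less than 0.001): follow vsync.
    if (hasVideo && surfaceRendersToDisplay && tolerance >= 0.001f) {
        return SyncSource::Vsync;
    }
    // Otherwise, if there is audio, follow the audio track.
    if (hasAudio) {
        return SyncSource::Audio;
    }
    // No audio: fall back to the system clock.
    return SyncSource::SystemClock;
}
```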

PlaybackParams: an API added in Android M, mainly used to control playback speed.

get & setPlaybackParams (PlaybackParams params)

Gets and sets the playback rate using PlaybackParams.

MediaTimestamp: an API added in Android M.

MediaTimestamp getTimestamp ()

Get current playback position.

MediaSyncExample

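With the basic usage and key parameters of MediaSync covered, we can write a demo based on the CTS code, use it to test MediaSync's A/V sync results, and study the mechanism behind them.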


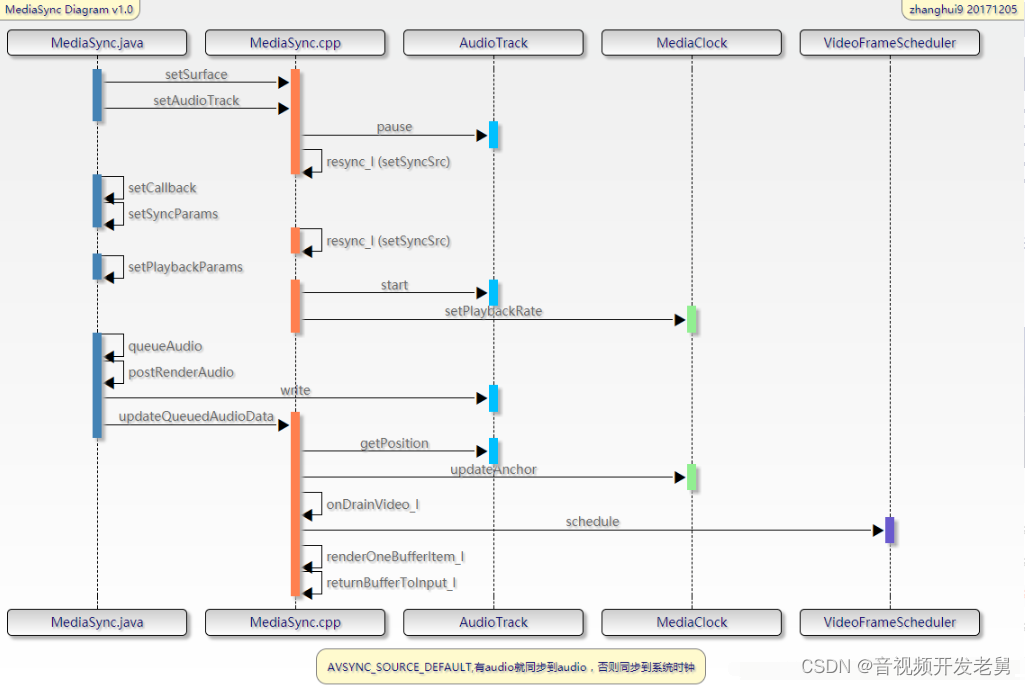

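The demo's sequence diagram is shown in the figure.

The key synchronization work starts after queueAudio. Note two familiar components here, MediaClock and VideoFrameScheduler. Both live in libstagefright, and NuPlayer's A/V sync uses them as well.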


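MediaSync's sync logic

I will first briefly introduce the key points of MediaSync's A/V sync logic, and then walk through the code in detail at the end.

Video part

1. Computing the actual display time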

```cpp
// In void MediaSync::onDrainVideo_l()
int64_t nowUs = ALooper::GetNowUs();
BufferItem *bufferItem = &*mBufferItems.begin();
int64_t itemMediaUs = bufferItem->mTimestamp / 1000;
// Calls MediaClock::getRealTimeFor to get the real time at which this video frame should be displayed
int64_t itemRealUs = getRealTime(itemMediaUs, nowUs);
```

2. Adjusting itemRealUs with the vsync signal

```cpp
// In void MediaSync::onDrainVideo_l()
itemRealUs = mFrameScheduler->schedule(itemRealUs * 1000) / 1000;
// Compute two vsync periods
int64_t twoVsyncsUs = 2 * (mFrameScheduler->getVsyncPeriod() / 1000);
```
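Steps 1 and 2 can be modeled with a few lines of arithmetic. This is a simplified sketch, not MediaClock or VideoFrameScheduler: it assumes the clock maps media time to real time through a single anchor point and the playback rate (clamping media time at the maximum passed to updateAnchor), and it replaces VideoFrameScheduler::schedule with a hypothetical `snapToVsyncUs` that rounds to the nearest edge of a fixed vsync grid. The real scheduler does considerably more, such as tracking the video frame timing.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>

// Simplified single-anchor clock (assumption: no locking, pause or start-time handling).
struct SimpleMediaClock {
    int64_t anchorMediaUs;
    int64_t anchorRealUs;
    int64_t maxMediaUs;   // media time never runs past this (cf. updateAnchor's third argument)
    double playbackRate;

    // Media time at real time realUs, clamped to maxMediaUs.
    int64_t mediaTimeAt(int64_t realUs) const {
        int64_t mediaUs = anchorMediaUs +
                static_cast<int64_t>((realUs - anchorRealUs) * playbackRate);
        return std::min(mediaUs, maxMediaUs);
    }

    // Real time at which targetMediaUs is due (the idea behind getRealTimeFor).
    int64_t realTimeFor(int64_t targetMediaUs, int64_t nowUs) const {
        assert(playbackRate > 0.0);
        int64_t nowMediaUs = mediaTimeAt(nowUs);
        return static_cast<int64_t>((targetMediaUs - nowMediaUs) / playbackRate) + nowUs;
    }
};

// Hypothetical stand-in for VideoFrameScheduler::schedule: round realUs to the
// nearest vsync edge of a fixed grid starting at vsyncTimeUs.
int64_t snapToVsyncUs(int64_t realUs, int64_t vsyncTimeUs, int64_t vsyncPeriodUs) {
    int64_t offset = realUs - vsyncTimeUs;
    int64_t half = vsyncPeriodUs / 2;
    int64_t n = (offset >= 0 ? offset + half : offset - half) / vsyncPeriodUs;
    return vsyncTimeUs + n * vsyncPeriodUs;
}
```

At 2x speed, for example, a frame 200 ms of media time ahead of the clock is due only 100 ms of real time later.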

3. Sending the frame for display two vsync periods ahead of time

```cpp
// In void MediaSync::onDrainVideo_l()
if (itemRealUs <= nowUs + twoVsyncsUs) {
    // Less than 2 * vsync period remains before itemRealUs: display the frame now
    // Set the buffer's timestamp to the corrected itemRealUs
    bufferItem->mTimestamp = itemRealUs * 1000;
    bufferItem->mIsAutoTimestamp = false;
    // queueBuffer to the output, which sends it to SurfaceFlinger for display
    renderOneBufferItem_l(*bufferItem);
} else {
    sp<AMessage> msg = new AMessage(kWhatDrainVideo, this);
    msg->post(itemRealUs - nowUs - twoVsyncsUs);
    mNextBufferItemMediaUs = itemMediaUs;
}
```

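When we analyzed NuPlayer's A/V sync earlier, we found a problem: the timestamp of the video buffer that NuPlayer actually sends to the renderer is not the one adjusted by the frame scheduler. MediaSync appears to fix this by writing the vsync-adjusted itemRealUs back into the buffer.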
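The decision in step 3 reduces to a single comparison. A minimal sketch, with `DrainDecision` and `decideDrain` as hypothetical names: a frame due within two vsync periods is rendered immediately (with its timestamp set to itemRealUs), otherwise the drain is rescheduled to run exactly two vsyncs before the due time.

```cpp
#include <cassert>
#include <cstdint>

// Outcome of one drain step for the head-of-queue video frame (simplified model).
struct DrainDecision {
    bool renderNow;   // true: hand the buffer to the output now
    int64_t delayUs;  // otherwise: re-post kWhatDrainVideo after this delay
};

// Mirrors the branch in onDrainVideo_l: render once the frame is due within
// two vsync periods, else wake up again two vsyncs before it is due.
DrainDecision decideDrain(int64_t itemRealUs, int64_t nowUs, int64_t vsyncPeriodUs) {
    int64_t twoVsyncsUs = 2 * vsyncPeriodUs;
    if (itemRealUs <= nowUs + twoVsyncsUs) {
        return {true, 0};
    }
    return {false, itemRealUs - nowUs - twoVsyncsUs};
}
```

With a 60 Hz display (a period of about 16.7 ms), a frame due 100 ms from now is checked again after about 66.7 ms.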

Audio part

1. Computing the current playback time

```cpp
// In status_t MediaSync::updateQueuedAudioData
int64_t nowUs = ALooper::GetNowUs();
int64_t nowMediaUs = presentationTimeUs
        - (getDurationIfPlayedAtNativeSampleRate_l(mNumFramesWritten)
        - getPlayedOutAudioDurationMedia_l(nowUs));
mMediaClock->updateAnchor(nowMediaUs, nowUs, maxMediaTimeUs);
```

The key method used here, getPlayedOutAudioDurationMedia_l, is also exactly the same as in NuPlayer.
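The nowMediaUs formula reads as: the new buffer starts at presentationTimeUs, and whatever was written before it has not necessarily been heard yet, so subtract the written duration and add back what has actually played out. Below is a sketch of that arithmetic on plain numbers; `AudioAnchor` and `computeAudioAnchor` are hypothetical names, and `playedOutUs` stands for the result of getPlayedOutAudioDurationMedia_l.

```cpp
#include <cassert>
#include <cstdint>

// Same formula as getDurationIfPlayedAtNativeSampleRate_l: frames -> microseconds.
int64_t durationUsForFrames(int64_t frames, int64_t sampleRate) {
    return frames * 1000000LL / sampleRate;
}

struct AudioAnchor {
    int64_t nowMediaUs;     // media time being heard at nowUs
    int64_t maxMediaTimeUs; // end of the newly queued buffer; the clock is capped here
};

// framesWrittenBefore plays the role of mNumFramesWritten before this buffer
// is added; playedOutUs stands for getPlayedOutAudioDurationMedia_l(nowUs).
AudioAnchor computeAudioAnchor(int64_t presentationTimeUs, int64_t numFrames,
                               int64_t framesWrittenBefore, int64_t playedOutUs,
                               int64_t sampleRate) {
    assert(sampleRate > 0);
    AudioAnchor a;
    a.maxMediaTimeUs = presentationTimeUs + durationUsForFrames(numFrames, sampleRate);
    // Audio written but not yet heard = written duration - played-out duration.
    a.nowMediaUs = presentationTimeUs
            - (durationUsForFrames(framesWrittenBefore, sampleRate) - playedOutUs);
    return a;
}
```

For example, with 1 s of audio already written at 48 kHz and 0.8 s played out, a new buffer stamped 1,000,000 µs puts the clock at 800,000 µs.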

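Summary

Video part:

Nearly identical to NuPlayer (the author estimates more than 95% similarity).

The difference is that MediaSync actually uses the vsync-adjusted timestamp as the display timestamp.

Audio part:

Exactly the same logic as NuPlayer!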

MediaSync's architecture

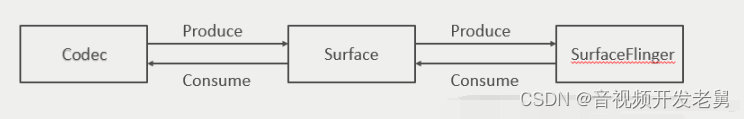

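First, the basic architecture of MediaSync: the relationships among the codec, the Surface and SurfaceFlinger are shown in the figure.

Each module in the framework has its own job: MediaCodec does the decoding, while Surface and SurfaceFlinger compose and draw the frames. Now we want a dedicated A/V sync module, MediaSync, that stays independent of both MediaCodec and Surface. How can that be done?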

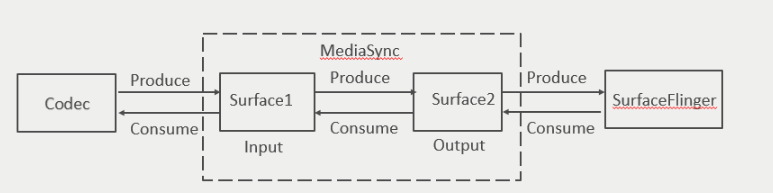

Recall the basic MediaSync call sequence. Step 1 does the following:

```java
MediaSync sync = new MediaSync();
// This first surface is the app's own Surface, obtained from surfaceHolder.getSurface()
sync.setSurface(surface);
// This second Surface is returned by MediaSync;
// it is a new Surface, different from the first one
Surface inputSurface = sync.createInputSurface();
...
// MediaCodec videoDecoder = ...;
videoDecoder.configure(format, inputSurface, ...);
```

MediaCodec.configure receives the Surface returned by MediaSync, not the app's own Surface. The A/V sync strategies we saw earlier in ExoPlayer and NuPlayer all operate between the codec and the Surface. To add a MediaSync module that works alongside MediaCodec, the design has to look like the figure.

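In the figure, Surface2 is the app's own Surface and serves as MediaSync's output; MediaSync writes into it through an IGraphicBufferProducer. Surface1 is the one MediaSync provides for MediaCodec.configure and serves as MediaSync's input: the codec writes into its producer end, while MediaSync holds the consumer end (IGraphicBufferConsumer).

MediaSync's A/V sync control uses the audio clock as its reference (when audio is present) and governs how video buffers move between Surface1 and Surface2.

Buffers flow in the following order:

1. Surface1 receives a buffer from the codec

acquire buffer from Input

2. MediaSync adjusts the buffer's timestamp and then passes the buffer to Surface2

queue buffer to Output

3. Surface2 hands the buffer to SurfaceFlinger for display; once the output releases it, MediaSync detaches it

detach buffer from Output

4. Just as in MediaCodec's normal flow, the buffer goes back to the input

release buffer to Input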
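The four steps form a cycle that every GraphicBuffer goes through. The toy state machine below only illustrates that order; the `BufState` states and the `step` function are hypothetical and do not exist in MediaSync.

```cpp
#include <cassert>
#include <stdexcept>

// Hypothetical states of one GraphicBuffer after each of the four steps.
enum class BufState {
    AcquiredFromInput,  // 1. MediaSync acquired the decoded frame from Surface1
    QueuedToOutput,     // 2. timestamp adjusted, queued to Surface2
    DetachedFromOutput, // 3. displayed, released by the output, detached by MediaSync
    ReleasedToInput     // 4. released back to Surface1, so the codec can reuse it
};

// Advances a buffer to the next step of the cycle.
BufState step(BufState s) {
    switch (s) {
        case BufState::AcquiredFromInput:  return BufState::QueuedToOutput;
        case BufState::QueuedToOutput:     return BufState::DetachedFromOutput;
        case BufState::DetachedFromOutput: return BufState::ReleasedToInput;
        case BufState::ReleasedToInput:    return BufState::AcquiredFromInput; // after the codec refills it
    }
    throw std::logic_error("unknown BufState");
}
```

A frame that arrives too late is the exception: in the code analyzed later it is returned to the input directly, skipping steps 2 and 3.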

Let us first see where these two surfaces come from.

1. The app's own Surface, i.e. Surface2 in the figure

```cpp
status_t MediaSync::setSurface(const sp<IGraphicBufferProducer> &output) {
    ...
    if (output != NULL) {
        ...
        // Try to connect to new output surface. If failed, current output surface will not
        // be changed.
        IGraphicBufferProducer::QueueBufferOutput queueBufferOutput;
        sp<OutputListener> listener(new OutputListener(this, output));
        IInterface::asBinder(output)->linkToDeath(listener);
        status_t status =
            output->connect(listener,
                            NATIVE_WINDOW_API_MEDIA,
                            true /* producerControlledByApp */,
                            &queueBufferOutput);
        ...
        if (mFrameScheduler == NULL) {
            mFrameScheduler = new VideoFrameScheduler();
            mFrameScheduler->init();
        }
    }
    ...
    // Associate MediaSync's output with the app's own surface
    mOutput = output;
    return NO_ERROR;
}
```

2. The new Surface created by MediaSync itself. The final call, android_view_Surface_createFromIGraphicBufferProducer, already tells us that a new Surface is created here.

```cpp
static jobject android_media_MediaSync_createInputSurface(
        JNIEnv* env, jobject thiz) {
    ...
    sp<JMediaSync> sync = getMediaSync(env, thiz);
    ...
    // Tell the MediaSync that we want to use a Surface as input.
    sp<IGraphicBufferProducer> bufferProducer;
    // Initializes bufferProducer; see 2.2
    status_t err = sync->createInputSurface(&bufferProducer);
    ...
    // Wrap the IGBP in a Java-language Surface.
    // Creates the new Surface from bufferProducer; see 2.1
    return android_view_Surface_createFromIGraphicBufferProducer(env,
            bufferProducer);
}
```

2.1

```cpp
jobject android_view_Surface_createFromIGraphicBufferProducer(JNIEnv* env,
        const sp<IGraphicBufferProducer>& bufferProducer) {
    ...
    sp<Surface> surface(new Surface(bufferProducer, true));
    if (surface == NULL) {
        return NULL;
    }
    ...
}
```

2.2

```cpp
status_t MediaSync::createInputSurface(
        sp<IGraphicBufferProducer> *outBufferProducer) {
    ...
    sp<IGraphicBufferProducer> bufferProducer;
    sp<IGraphicBufferConsumer> bufferConsumer;
    BufferQueue::createBufferQueue(&bufferProducer, &bufferConsumer);
    sp<InputListener> listener(new InputListener(this));
    IInterface::asBinder(bufferConsumer)->linkToDeath(listener);
    status_t status =
        bufferConsumer->consumerConnect(listener, false /* controlledByApp */);
    if (status == NO_ERROR) {
        bufferConsumer->setConsumerName(String8("MediaSync"));
        // propagate usage bits from output surface
        mUsageFlagsFromOutput = 0;
        mOutput->query(NATIVE_WINDOW_CONSUMER_USAGE_BITS, &mUsageFlagsFromOutput);
        bufferConsumer->setConsumerUsageBits(mUsageFlagsFromOutput);
        // The producer end goes to the new Surface; MediaSync keeps the consumer end as its input
        *outBufferProducer = bufferProducer;
        mInput = bufferConsumer;
        ...
    }
    ...
}
```

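MediaSync A/V sync code walkthrough

Let us start with the video part.

Video is adjusted mainly in the following method, which is nearly identical to NuPlayer's. Here, too, we can see the computation of the actual display time and the adjustment with VideoFrameScheduler.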

```cpp
void MediaSync::onDrainVideo_l() {
    ...
    while (!mBufferItems.empty()) {
        int64_t nowUs = ALooper::GetNowUs();
        BufferItem *bufferItem = &*mBufferItems.begin();
        int64_t itemMediaUs = bufferItem->mTimestamp / 1000;
        // Calls MediaClock::getRealTimeFor to get the real time at which this video frame should be displayed
        int64_t itemRealUs = getRealTime(itemMediaUs, nowUs);

        // adjust video frame PTS based on vsync
        // Adjust itemRealUs using the vsync signal
        itemRealUs = mFrameScheduler->schedule(itemRealUs * 1000) / 1000;
        // Compute two vsync periods
        int64_t twoVsyncsUs = 2 * (mFrameScheduler->getVsyncPeriod() / 1000);

        // post 2 display refreshes before rendering is due
        if (itemRealUs <= nowUs + twoVsyncsUs) {
            // Less than 2 * vsync period remains before itemRealUs: display the frame now.
            // Set the buffer's timestamp to the corrected itemRealUs. NuPlayer, by contrast,
            // does not send the frame-scheduler-adjusted timestamp to the renderer.
            bufferItem->mTimestamp = itemRealUs * 1000;
            bufferItem->mIsAutoTimestamp = false;

            if (mHasAudio) {
                // nowUs > itemRealUs means the frame is late; the default threshold
                // (kMaxAllowedVideoLateTimeUs) is 40 ms
                if (nowUs - itemRealUs <= kMaxAllowedVideoLateTimeUs) {
                    // queueBuffer to the output, which sends it to SurfaceFlinger for display
                    renderOneBufferItem_l(*bufferItem);
                } else {
                    // too late. Drop the frame
                    returnBufferToInput_l(
                            bufferItem->mGraphicBuffer, bufferItem->mFence);
                    mFrameScheduler->restart();
                }
            } else {
                // always render video buffer in video-only mode.
                renderOneBufferItem_l(*bufferItem);

                // smooth out videos >= 10fps
                mMediaClock->updateAnchor(
                        itemMediaUs, nowUs, itemMediaUs + 100000);
            }

            mBufferItems.erase(mBufferItems.begin());
            mNextBufferItemMediaUs = -1;
        } else {
            // More than 2 * vsync period remains: wait until 2 * vsync period before itemRealUs, then display
            if (mNextBufferItemMediaUs == -1
                    || mNextBufferItemMediaUs > itemMediaUs) {
                sp<AMessage> msg = new AMessage(kWhatDrainVideo, this);
                msg->post(itemRealUs - nowUs - twoVsyncsUs);
                mNextBufferItemMediaUs = itemMediaUs;
            }
            break;
        }
    }
}
```
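For a frame that is already due, the branch above chooses between rendering and dropping. A minimal sketch of that decision, assuming the 40 ms threshold stated in the comment; `FrameAction` and `actionForDueFrame` are hypothetical names.

```cpp
#include <cassert>
#include <cstdint>

// Threshold taken from the comment above: frames later than 40 ms are dropped.
const int64_t kMaxAllowedVideoLateTimeUs = 40000;

enum class FrameAction { Render, Drop };

// What onDrainVideo_l does with a frame whose display time has arrived.
FrameAction actionForDueFrame(bool hasAudio, int64_t nowUs, int64_t itemRealUs) {
    if (!hasAudio) {
        return FrameAction::Render; // video-only mode: always render
    }
    // With audio, a frame more than 40 ms late is returned to the input unrendered.
    return (nowUs - itemRealUs <= kMaxAllowedVideoLateTimeUs) ? FrameAction::Render
                                                              : FrameAction::Drop;
}
```

In video-only mode the clock is also re-anchored on the rendered frame with a ceiling of `itemMediaUs + 100000`, presumably so that the clock cannot run more than 100 ms ahead of the last frame, which is enough headroom for content at 10 fps or more.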


2. MediaSync's audio sync mechanism is interesting in that it starts in the Java layer. Even so, it is still nearly identical to NuPlayer's.

```java
public void queueAudio(
        @NonNull ByteBuffer audioData, int bufferId, long presentationTimeUs) {
    ...
    synchronized(mAudioLock) {
        mAudioBuffers.add(new AudioBuffer(audioData, bufferId, presentationTimeUs));
    }
    if (mPlaybackRate != 0.0) {
        postRenderAudio(0);
    }
}

// This method also takes a delay parameter
private void postRenderAudio(long delayMillis) {
    mAudioHandler.postDelayed(new Runnable() {
        public void run() {
            synchronized(mAudioLock) {
                ...
                AudioBuffer audioBuffer = mAudioBuffers.get(0);
                int size = audioBuffer.mByteBuffer.remaining();
                // restart audio track after flush
                if (size > 0 && mAudioTrack.getPlayState() != AudioTrack.PLAYSTATE_PLAYING) {
                    try {
                        mAudioTrack.play();
                    } catch (IllegalStateException e) {
                        Log.w(TAG, "could not start audio track");
                    }
                }
                int sizeWritten = mAudioTrack.write(
                        audioBuffer.mByteBuffer,
                        size,
                        AudioTrack.WRITE_NON_BLOCKING);
                if (sizeWritten > 0) {
                    if (audioBuffer.mPresentationTimeUs != -1) {
                        // Pass the PTS down to the native framework, which uses it to update the current position
                        native_updateQueuedAudioData(
                                size, audioBuffer.mPresentationTimeUs);
                        audioBuffer.mPresentationTimeUs = -1;
                    }
                    if (sizeWritten == size) {
                        // The whole buffer is now in the AudioTrack: return it to the app
                        // through the onAudioBufferConsumed callback
                        postReturnByteBuffer(audioBuffer);
                        mAudioBuffers.remove(0);
                        if (!mAudioBuffers.isEmpty()) {
                            postRenderAudio(0);
                        }
                        return;
                    }
                }
                // As in NuPlayer: compute the pending playback duration, then start the next round after half of it
                long pendingTimeMs = TimeUnit.MICROSECONDS.toMillis(
                        native_getPlayTimeForPendingAudioFrames());
                postRenderAudio(pendingTimeMs / 2);
            }
        }
    }, delayMillis);
}
```
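When AudioTrack.write accepts only part of a buffer, the Java code waits half of the pending play time before trying again. The sketch below assumes the pending play time is the number of written-but-unplayed frames divided by the sample rate and the playback rate; native getPlayTimeForPendingAudioFrames is not shown in this article, so that formula is an assumption, and both function names are hypothetical.

```cpp
#include <cassert>
#include <cstdint>

// Assumed model of the pending play time, in microseconds.
int64_t pendingPlayTimeUs(int64_t framesWritten, int64_t framesPlayed,
                          int64_t sampleRate, double playbackRate) {
    assert(sampleRate > 0 && playbackRate > 0.0);
    int64_t pending = framesWritten - framesPlayed;
    if (pending < 0) {
        pending = 0; // position reported ahead of what we wrote: treat as drained
    }
    return static_cast<int64_t>(pending * 1000000.0 / (sampleRate * playbackRate));
}

// Next retry delay in ms, as in the Java code: toMillis (truncating), then halve.
int64_t nextRenderDelayMs(int64_t pendingUs) {
    return (pendingUs / 1000) / 2;
}
```

Waiting only half of the pending time leaves headroom: the track should still have data queued when the next write attempt runs.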

2.1

In the native framework, this corresponds to the following method. It is essentially NuPlayer's A/V sync logic and should be easy to follow.

```cpp
status_t MediaSync::updateQueuedAudioData(
        size_t sizeInBytes, int64_t presentationTimeUs) {
    ...
    int64_t numFrames = sizeInBytes / mAudioTrack->frameSize();
    int64_t maxMediaTimeUs = presentationTimeUs
            + getDurationIfPlayedAtNativeSampleRate_l(numFrames);
    int64_t nowUs = ALooper::GetNowUs();
    int64_t nowMediaUs = presentationTimeUs
            - getDurationIfPlayedAtNativeSampleRate_l(mNumFramesWritten)
            + getPlayedOutAudioDurationMedia_l(nowUs);
    mNumFramesWritten += numFrames;

    int64_t oldRealTime = -1;
    if (mNextBufferItemMediaUs != -1) {
        oldRealTime = getRealTime(mNextBufferItemMediaUs, nowUs);
    }
    mMediaClock->updateAnchor(nowMediaUs, nowUs, maxMediaTimeUs);
    mHasAudio = true;

    if (oldRealTime != -1) {
        int64_t newRealTime = getRealTime(mNextBufferItemMediaUs, nowUs);
        if (newRealTime >= oldRealTime) {
            return OK;
        }
    }
    mNextBufferItemMediaUs = -1;
    onDrainVideo_l();
    return OK;
}
```
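The tail of updateQueuedAudioData decides whether the waiting video frame has to be re-drained after the audio anchor moves. A minimal sketch of that decision, with `shouldRedrainVideo` as a hypothetical name and -1 meaning that no frame is waiting, as with mNextBufferItemMediaUs:

```cpp
#include <cassert>
#include <cstdint>

// oldRealTimeUs: due time of the waiting frame before the anchor update (-1 if none).
// newRealTimeUs: due time of the same frame after the anchor update.
bool shouldRedrainVideo(int64_t oldRealTimeUs, int64_t newRealTimeUs) {
    if (oldRealTimeUs == -1) {
        return true; // no frame scheduled: drain now
    }
    // Only an earlier due time requires action; the already-posted
    // kWhatDrainVideo message would otherwise fire too late.
    return newRealTimeUs < oldRealTimeUs;
}
```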

The key method getPlayedOutAudioDurationMedia_l is likewise identical to NuPlayer's:

```cpp
int64_t MediaSync::getPlayedOutAudioDurationMedia_l(int64_t nowUs) {
    ...
    uint32_t numFramesPlayed;
    int64_t numFramesPlayedAt;
    AudioTimestamp ts;
    static const int64_t kStaleTimestamp100ms = 100000;

    status_t res = mAudioTrack->getTimestamp(ts);
    if (res == OK) {
        // case 1: mixing audio tracks.
        numFramesPlayed = ts.mPosition;
        numFramesPlayedAt =
            ts.mTime.tv_sec * 1000000LL + ts.mTime.tv_nsec / 1000;
        const int64_t timestampAge = nowUs - numFramesPlayedAt;
        if (timestampAge > kStaleTimestamp100ms) {
            // This is an audio FIXME.
            // getTimestamp returns a timestamp which may come from audio
            // mixing threads. After pausing, the MixerThread may go idle,
            // thus the mTime estimate may become stale. Assuming that the
            // MixerThread runs 20ms, with FastMixer at 5ms, the max latency
            // should be about 25ms with an average around 12ms (to be
            // verified). For safety we use 100ms.
            ALOGV("getTimestamp: returned stale timestamp nowUs(%lld) "
                  "numFramesPlayedAt(%lld)",
                  (long long)nowUs, (long long)numFramesPlayedAt);
            numFramesPlayedAt = nowUs - kStaleTimestamp100ms;
        }
        //ALOGD("getTimestamp: OK %d %lld",
        //      numFramesPlayed, (long long)numFramesPlayedAt);
    } else if (res == WOULD_BLOCK) {
        // case 2: transitory state on start of a new track
        numFramesPlayed = 0;
        numFramesPlayedAt = nowUs;
        //ALOGD("getTimestamp: WOULD_BLOCK %d %lld",
        //      numFramesPlayed, (long long)numFramesPlayedAt);
    } else {
        // case 3: transitory at new track or audio fast tracks.
        res = mAudioTrack->getPosition(&numFramesPlayed);
        CHECK_EQ(res, (status_t)OK);
        numFramesPlayedAt = nowUs;
        // MStar Android Patch Begin
        numFramesPlayedAt += 1000LL * mAudioTrack->latency() / 2; /* XXX */
        // MStar Android Patch End
        //ALOGD("getPosition: %d %lld", numFramesPlayed, (long long)numFramesPlayedAt);
    }

    //can't be negative until 12.4 hrs, test.
    //CHECK_EQ(numFramesPlayed & (1 << 31), 0);
    int64_t durationUs =
        getDurationIfPlayedAtNativeSampleRate_l(numFramesPlayed)
            + nowUs - numFramesPlayedAt;
    if (durationUs < 0) {
        // Occurs when numFramesPlayed position is very small and the following:
        // (1) In case 1, the time nowUs is computed before getTimestamp() is
        //     called and numFramesPlayedAt is greater than nowUs by time more
        //     than numFramesPlayed.
        // (2) In case 3, using getPosition and adding mAudioTrack->latency()
        //     to numFramesPlayedAt, by a time amount greater than
        //     numFramesPlayed.
        //
        // Both of these are transitory conditions.
        ALOGV("getPlayedOutAudioDurationMedia_l: negative duration %lld "
              "set to zero", (long long)durationUs);
        durationUs = 0;
    }
    ALOGV("getPlayedOutAudioDurationMedia_l(%lld) nowUs(%lld) frames(%u) "
          "framesAt(%lld)",
          (long long)durationUs, (long long)nowUs, numFramesPlayed,
          (long long)numFramesPlayedAt);
    return durationUs;
}
```
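Case 1 of getPlayedOutAudioDurationMedia_l boils down to "frames played at the timestamp, plus the wall-clock time elapsed since then", with two clamps. The sketch below replays that arithmetic on plain numbers instead of an AudioTrack; `playedOutDurationUs` and its parameters are hypothetical, and cases 2 and 3 (WOULD_BLOCK and getPosition) are left out.

```cpp
#include <cassert>
#include <cstdint>

// Case 1 only: framesPlayed was reported at framesPlayedAtUs (AudioTimestamp).
int64_t playedOutDurationUs(int64_t framesPlayed, int64_t framesPlayedAtUs,
                            int64_t nowUs, int64_t sampleRate) {
    const int64_t kStaleTimestamp100ms = 100000;
    assert(sampleRate > 0);
    // A timestamp older than 100 ms is treated as stale and pulled forward.
    if (nowUs - framesPlayedAtUs > kStaleTimestamp100ms) {
        framesPlayedAtUs = nowUs - kStaleTimestamp100ms;
    }
    // Duration of the frames played at the timestamp, plus time elapsed since then.
    int64_t durationUs = framesPlayed * 1000000LL / sampleRate
            + nowUs - framesPlayedAtUs;
    // Transitory negative values are clamped to zero.
    return durationUs < 0 ? 0 : durationUs;
}
```

The stale-timestamp clamp caps the extrapolated part at 100 ms, so a mixer thread that went idle after a pause cannot make the audio clock run far ahead.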

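If you are interested in audio/video development, or have your own views on anything in this article, feel free to leave a comment below and discuss.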
