1. ZooKeeper cluster

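This walkthrough builds a ZooKeeper cluster on Kubernetes, using PersistentVolumes (PV) and PersistentVolumeClaims (PVC) as the backing storage.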

2. Create the PVs

Set up NFS

## On the NFS server, create the directories and configure the export permissions

root@haproxy-1:~# mkdir /data/k8s/wework/zookeeper-datadir-{1..3}

root@haproxy-1:~# cat /etc/exports

# /etc/exports: the access control list for filesystems which may be exported

# to NFS clients. See exports(5).

#

# Example for NFSv2 and NFSv3:

# /srv/homes hostname1(rw,sync,no_subtree_check) hostname2(ro,sync,no_subtree_check)

#

# Example for NFSv4:

# /srv/nfs4 gss/krb5i(rw,sync,fsid=0,crossmnt,no_subtree_check)

# /srv/nfs4/homes gss/krb5i(rw,sync,no_subtree_check)

#

/data/k8s *(rw,sync,no_root_squash)

/data/k8s/wework/images *(rw,sync,no_root_squash)

/data/k8s/wework/zookeeper-datadir-1 *(rw,sync,no_root_squash)

/data/k8s/wework/zookeeper-datadir-2 *(rw,sync,no_root_squash)

/data/k8s/wework/zookeeper-datadir-3 *(rw,sync,no_root_squash)

root@haproxy-1:~# exportfs -rva

## From a client, check that the NFS exports are visible

root@k8s-master-01:~# showmount -e 192.168.31.109

Export list for 192.168.31.109:

/data/k8s/wework/zookeeper-datadir-3 *

/data/k8s/wework/zookeeper-datadir-2 *

/data/k8s/wework/zookeeper-datadir-1 *

/data/k8s/wework/images *

/data/k8s *
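The three ZooKeeper export entries above differ only in their trailing index, so they can be generated instead of typed by hand. A minimal sketch, assuming the same base directory and export options as above; the function name `gen_exports` is hypothetical and not part of the original setup:

```shell
#!/bin/bash
# Print one /etc/exports entry per ZooKeeper data directory.
# Usage: gen_exports BASE_DIR COUNT
gen_exports() {
  local base="$1" count="$2" i
  for ((i = 1; i <= count; i++)); do
    printf '%s/zookeeper-datadir-%d *(rw,sync,no_root_squash)\n' "$base" "$i"
  done
}

# Example (as root on the NFS server; review the output before appending):
#   gen_exports /data/k8s/wework 3 >> /etc/exports && exportfs -rva
```

The function only prints text, so it is safe to run and inspect before anything is written to /etc/exports.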

zookeeper-persistentvolume.yaml

---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: zookeeper-datadir-pv-1
spec:
  capacity:
    storage: 20Gi
  accessModes:
    - ReadWriteOnce
  nfs:
    server: 192.168.31.109
    path: /data/k8s/wework/zookeeper-datadir-1
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: zookeeper-datadir-pv-2
spec:
  capacity:
    storage: 20Gi
  accessModes:
    - ReadWriteOnce
  nfs:
    server: 192.168.31.109
    path: /data/k8s/wework/zookeeper-datadir-2
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: zookeeper-datadir-pv-3
spec:
  capacity:
    storage: 20Gi
  accessModes:
    - ReadWriteOnce
  nfs:
    server: 192.168.31.109
    path: /data/k8s/wework/zookeeper-datadir-3
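Because the three PersistentVolume manifests are identical apart from the index, one way to avoid copy-paste drift is to render them from a single template. The following is a sketch under that assumption; `gen_pv` is a hypothetical helper, and the capacity and access mode are copied from the manifests above:

```shell
#!/bin/bash
# Print a PersistentVolume manifest for one ZooKeeper data directory.
# Usage: gen_pv INDEX NFS_SERVER BASE_DIR
gen_pv() {
  local idx="$1" server="$2" base="$3"
  cat <<EOF
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: zookeeper-datadir-pv-${idx}
spec:
  capacity:
    storage: 20Gi
  accessModes:
    - ReadWriteOnce
  nfs:
    server: ${server}
    path: ${base}/zookeeper-datadir-${idx}
EOF
}

# Example: write all three manifests into one file.
#   for i in 1 2 3; do gen_pv "$i" 192.168.31.109 /data/k8s/wework; done \
#     > zookeeper-persistentvolume.yaml
```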

Create the PVs

root@k8s-master-01:/opt/k8s-data/yaml/wework/zookeeper/pv# kubectl apply -f zookeeper-persistentvolume.yaml

persistentvolume/zookeeper-datadir-pv-1 created

persistentvolume/zookeeper-datadir-pv-2 created

persistentvolume/zookeeper-datadir-pv-3 created

root@k8s-master-01:/opt/k8s-data/yaml/wework/zookeeper/pv# kubectl get pv

NAME                     CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM   STORAGECLASS   REASON   AGE
test                     1Gi        RWX            Retain           Available           nfs                     56d
zookeeper-datadir-pv-1   20Gi       RWO            Retain           Available                                   10s
zookeeper-datadir-pv-2   20Gi       RWO            Retain           Available                                   10s
zookeeper-datadir-pv-3   20Gi       RWO            Retain           Available                                   10s

3. Create the PVCs

zookeeper-persistentvolumeclaim.yaml

The storage a PVC requests must be less than or equal to the capacity of the PV it binds to.

---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: zookeeper-datadir-pvc-1
  namespace: wework
spec:
  accessModes:
    - ReadWriteOnce
  volumeName: zookeeper-datadir-pv-1
  resources:
    requests:
      storage: 10Gi
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: zookeeper-datadir-pvc-2
  namespace: wework
spec:
  accessModes:
    - ReadWriteOnce
  volumeName: zookeeper-datadir-pv-2
  resources:
    requests:
      storage: 10Gi
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: zookeeper-datadir-pvc-3
  namespace: wework
spec:
  accessModes:
    - ReadWriteOnce
  volumeName: zookeeper-datadir-pv-3
  resources:
    requests:
      storage: 10Gi

Create the PVCs. Unlike a PV, a PVC belongs to a namespace (here, wework).

root@k8s-master-01:/opt/k8s-data/yaml/wework/zookeeper/pv# kubectl apply -f zookeeper-persistentvolumeclaim.yaml

persistentvolumeclaim/zookeeper-datadir-pvc-1 created

persistentvolumeclaim/zookeeper-datadir-pvc-2 created

persistentvolumeclaim/zookeeper-datadir-pvc-3 created

root@k8s-master-01:/opt/k8s-data/yaml/wework/zookeeper/pv# kubectl get pvc -n wework

NAME                      STATUS   VOLUME                   CAPACITY   ACCESS MODES   STORAGECLASS   AGE
zookeeper-datadir-pvc-1   Bound    zookeeper-datadir-pv-1   20Gi       RWO                           8s
zookeeper-datadir-pvc-2   Bound    zookeeper-datadir-pv-2   20Gi       RWO                           8s
zookeeper-datadir-pvc-3   Bound    zookeeper-datadir-pv-3   20Gi       RWO                           8s
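Each claim requested 10Gi, yet the CAPACITY column shows 20Gi: once a claim is bound, it reports the capacity of the PV it is bound to. The "request must not exceed the PV" rule can be checked before applying the manifests. A minimal sketch, assuming quantities use only the binary suffixes Ki, Mi, Gi, or Ti; `to_bytes` and `pvc_fits` are hypothetical helper names:

```shell
#!/bin/bash
# Convert a quantity such as 10Gi (or a plain byte count) to bytes.
to_bytes() {
  local q="$1" num unit
  num="${q%%[!0-9]*}"      # leading digits
  unit="${q#"$num"}"       # whatever follows the digits
  [ -n "$num" ] || { echo "invalid quantity: $q" >&2; return 1; }
  case "$unit" in
    "")  echo "$num" ;;
    Ki)  echo $((num * 1024)) ;;
    Mi)  echo $((num * 1024 ** 2)) ;;
    Gi)  echo $((num * 1024 ** 3)) ;;
    Ti)  echo $((num * 1024 ** 4)) ;;
    *)   echo "unsupported unit: $unit" >&2; return 1 ;;
  esac
}

# Succeed when the PVC request fits within the PV capacity.
# Usage: pvc_fits REQUEST CAPACITY
pvc_fits() {
  local req cap
  req="$(to_bytes "$1")" || return 2
  cap="$(to_bytes "$2")" || return 2
  [ "$req" -le "$cap" ]
}

# Example:
#   pvc_fits 10Gi 20Gi && echo "request fits"
```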

4. Build the ZooKeeper image

Pull the base image and push it to the private Harbor registry

docker pull elevy/slim_java:8

docker tag elevy/slim_java:8 harbor.intra.com/baseimages/slim_java:8

docker push harbor.intra.com/baseimages/slim_java:8

ZooKeeper Dockerfile

Data files: /zookeeper/data

Write-ahead log: /zookeeper/wal

Runtime logs: /zookeeper/log

FROM harbor.intra.com/baseimages/slim_java:8

ENV ZK_VERSION 3.4.14

ADD repositories /etc/apk/repositories

# Copy the ZooKeeper tarball, its signature, and the KEYS file from the build context

COPY zookeeper-3.4.14.tar.gz /tmp/zk.tgz

COPY zookeeper-3.4.14.tar.gz.asc /tmp/zk.tgz.asc

COPY KEYS /tmp/KEYS

RUN apk add --no-cache --virtual .build-deps \

ca-certificates \

gnupg \

tar \

wget && \

#

# Install dependencies

apk add --no-cache \

bash && \

#

#

# Verify the signature

export GNUPGHOME="$(mktemp -d)" && \

gpg -q --batch --import /tmp/KEYS && \

gpg -q --batch --no-auto-key-retrieve --verify /tmp/zk.tgz.asc /tmp/zk.tgz && \

#

# Set up directories

#

mkdir -p /zookeeper/data /zookeeper/wal /zookeeper/log && \

#

# Install

tar -x -C /zookeeper --strip-components=1 --no-same-owner -f /tmp/zk.tgz && \

#

# Slim down

cd /zookeeper && \

cp dist-maven/zookeeper-${ZK_VERSION}.jar . && \

rm -rf \

*.txt \

*.xml \

bin/README.txt \

bin/*.cmd \

conf/* \

contrib \

dist-maven \

docs \

lib/*.txt \

lib/cobertura \

lib/jdiff \

recipes \

src \

zookeeper-*.asc \

zookeeper-*.md5 \

zookeeper-*.sha1 && \

apk del .build-deps && \

rm -rf /tmp/* "$GNUPGHOME"

COPY conf /zookeeper/conf/

COPY bin/zkReady.sh /zookeeper/bin/

COPY entrypoint.sh /

ENV PATH=/zookeeper/bin:${PATH} \

ZOO_LOG_DIR=/zookeeper/log \

ZOO_LOG4J_PROP="INFO, CONSOLE, ROLLINGFILE" \

JMXPORT=9010

ENTRYPOINT [ "/entrypoint.sh" ]

CMD [ "zkServer.sh", "start-foreground" ]

EXPOSE 2181 2888 3888 9010
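The Dockerfile copies entrypoint.sh into the image, but that script is not shown in this article. Given the MYID and SERVERS variables that the Deployments pass in later, an entrypoint along the following lines would produce the myid files seen on NFS further down. This is a hypothetical sketch, not the actual script: the function names are invented, and the `server.N=host:2888:3888` layout is assumed from the standard zoo.cfg format and the ports exposed above.

```shell
#!/bin/bash
# Hypothetical helpers; the real entrypoint.sh is not shown in this article.

# Print one "server.N=host:2888:3888" line per comma-separated host.
# 2888 is the follower port and 3888 the election port exposed by the image.
gen_server_lines() {
  local -a hosts
  local i
  IFS=',' read -r -a hosts <<< "$1"
  for i in "${!hosts[@]}"; do
    printf 'server.%d=%s:2888:3888\n' "$((i + 1))" "${hosts[$i]}"
  done
}

# Write the myid file and append the quorum members to the config file.
# Usage: configure_zk MYID SERVERS DATA_DIR CONF_FILE
configure_zk() {
  local myid="$1" servers="$2" data_dir="$3" conf="$4"
  echo "$myid" > "$data_dir/myid"
  if [ -n "$servers" ]; then
    gen_server_lines "$servers" >> "$conf"
  fi
}

# A real entrypoint would then do something like:
#   configure_zk "${MYID:-1}" "${SERVERS:-}" /zookeeper/data /zookeeper/conf/zoo.cfg
#   exec "$@"
```

With an empty SERVERS value no server lines are written, which is consistent with the standalone-mode warning shown when the image is started directly with docker run.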

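build-command.sh

#!/bin/bash
# Usage: ./build-command.sh TAG   (for example v3.4.14)
TAG=$1
# Build the image from the Dockerfile in the current directory
docker build -t harbor.intra.com/wework/zookeeper:${TAG} .
sleep 1
# Push the image to the Harbor registry
docker push harbor.intra.com/wework/zookeeper:${TAG}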

Build the ZooKeeper image and push it to Harbor

root@k8s-master-01:/opt/k8s-data/dockerfile/web/wework/zookeeper# ./build-command.sh v3.4.14

Successfully built 5ae04b2d185f

Successfully tagged harbor.intra.com/wework/zookeeper:v3.4.14

The push refers to repository [harbor.intra.com/wework/zookeeper]

0b68dce37282: Pushed

1eb2dfcd3952: Layer already exists

6151f3742918: Layer already exists

bc80b73c0131: Layer already exists

4a6aa019c220: Layer already exists

d7946f8d3eee: Layer already exists

ffc9bf08c9aa: Layer already exists

6903db7df6a5: Layer already exists

e053edd72ca6: Layer already exists

aba783efb1a4: Layer already exists

5bef08742407: Layer already exists

v3.4.14: digest: sha256:9d751752e075839c36f48787c337cbfbe5de7aeca60bbf3f81f26b00705e3de3 size: 2621

root@k8s-master-01:/opt/k8s-data/dockerfile/web/wework/zookeeper# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

harbor.intra.com/wework/zookeeper v3.4.14 5ae04b2d185f About a minute ago 183MB

Test that the image works, and confirm that the ZooKeeper service listens on port 2181


root@k8s-master-01:/opt/k8s-data/dockerfile/web/wework/zookeeper# docker run -it --rm harbor.intra.com/wework/zookeeper:v3.4.14

ZooKeeper JMX enabled by default

ZooKeeper remote JMX Port set to 9010

ZooKeeper remote JMX authenticate set to false

ZooKeeper remote JMX ssl set to false

ZooKeeper remote JMX log4j set to true

Using config: /zookeeper/bin/../conf/zoo.cfg

2022-08-10 01:57:43,154 [myid:] - INFO [main:QuorumPeerConfig@136] - Reading configuration from: /zookeeper/bin/../conf/zoo.cfg

2022-08-10 01:57:43,158 [myid:] - INFO [main:DatadirCleanupManager@78] - autopurge.snapRetainCount set to 3

2022-08-10 01:57:43,158 [myid:] - INFO [main:DatadirCleanupManager@79] - autopurge.purgeInterval set to 1

2022-08-10 01:57:43,162 [myid:] - WARN [main:QuorumPeerMain@116] - Either no config or no quorum defined in config, running in standalone mode

2022-08-10 01:57:43,163 [myid:] - INFO [PurgeTask:DatadirCleanupManager$PurgeTask@138] - Purge task started.

2022-08-10 01:57:43,165 [myid:] - INFO [main:QuorumPeerConfig@136] - Reading configuration from: /zookeeper/bin/../conf/zoo.cfg

2022-08-10 01:57:43,166 [myid:] - INFO [main:ZooKeeperServerMain@98] - Starting server

2022-08-10 01:57:43,171 [myid:] - INFO [main:Environment@100] - Server environment:zookeeper.version=3.4.14-4c25d480e66aadd371de8bd2fd8da255ac140bcf, built on 03/06/2019 16:18 GMT

2022-08-10 01:57:43,171 [myid:] - INFO [main:Environment@100] - Server environment:host.name=6b10cd66d66d

2022-08-10 01:57:43,172 [myid:] - INFO [main:Environment@100] - Server environment:java.version=1.8.0_144

2022-08-10 01:57:43,172 [myid:] - INFO [main:Environment@100] - Server environment:java.vendor=Oracle Corporation

2022-08-10 01:57:43,172 [myid:] - INFO [main:Environment@100] - Server environment:java.home=/usr/lib/jvm/java-8-oracle

2022-08-10 01:57:43,173 [myid:] - INFO [main:Environment@100] - Server environment:java.class.path=/zookeeper/bin/../zookeeper-server/target/classes:/zookeeper/bin/../build/classes:/zookeeper/bin/../zookeeper-server/target/lib/*.jar:/zookeeper/bin/../build/lib/*.jar:/zookeeper/bin/../lib/slf4j-log4j12-1.7.25.jar:/zookeeper/bin/../lib/slf4j-api-1.7.25.jar:/zookeeper/bin/../lib/netty-3.10.6.Final.jar:/zookeeper/bin/../lib/log4j-1.2.17.jar:/zookeeper/bin/../lib/jline-0.9.94.jar:/zookeeper/bin/../lib/audience-annotations-0.5.0.jar:/zookeeper/bin/../zookeeper-3.4.14.jar:/zookeeper/bin/../zookeeper-server/src/main/resources/lib/*.jar:/zookeeper/bin/../conf:

2022-08-10 01:57:43,173 [myid:] - INFO [main:Environment@100] - Server environment:java.library.path=/usr/java/packages/lib/amd64:/usr/lib64:/lib64:/lib:/usr/lib

2022-08-10 01:57:43,173 [myid:] - INFO [main:Environment@100] - Server environment:java.io.tmpdir=/tmp

2022-08-10 01:57:43,174 [myid:] - INFO [main:Environment@100] - Server environment:java.compiler=<NA>

2022-08-10 01:57:43,174 [myid:] - INFO [main:Environment@100] - Server environment:os.name=Linux

2022-08-10 01:57:43,175 [myid:] - INFO [main:Environment@100] - Server environment:os.arch=amd64

2022-08-10 01:57:43,175 [myid:] - INFO [main:Environment@100] - Server environment:os.version=4.15.0-189-generic

2022-08-10 01:57:43,175 [myid:] - INFO [main:Environment@100] - Server environment:user.name=root

2022-08-10 01:57:43,176 [myid:] - INFO [main:Environment@100] - Server environment:user.home=/root

2022-08-10 01:57:43,176 [myid:] - INFO [main:Environment@100] - Server environment:user.dir=/zookeeper

2022-08-10 01:57:43,182 [myid:] - INFO [main:ZooKeeperServer@836] - tickTime set to 2000

2022-08-10 01:57:43,183 [myid:] - INFO [main:ZooKeeperServer@845] - minSessionTimeout set to -1

2022-08-10 01:57:43,183 [myid:] - INFO [main:ZooKeeperServer@854] - maxSessionTimeout set to -1

2022-08-10 01:57:43,185 [myid:] - INFO [PurgeTask:DatadirCleanupManager$PurgeTask@144] - Purge task completed.

2022-08-10 01:57:43,189 [myid:] - INFO [main:ServerCnxnFactory@117] - Using org.apache.zookeeper.server.NIOServerCnxnFactory as server connection factory

2022-08-10 01:57:43,193 [myid:] - INFO [main:NIOServerCnxnFactory@89] - binding to port 0.0.0.0/0.0.0.0:2181

5. ZooKeeper YAML

zookeeper.yaml

apiVersion: v1
kind: Service
metadata:
  name: zookeeper
  namespace: wework
spec:
  ports:
    - name: client
      port: 2181
  selector:
    app: zookeeper
---
apiVersion: v1
kind: Service
metadata:
  name: zookeeper1
  namespace: wework
spec:
  type: NodePort
  ports:
    - name: client
      port: 2181
      nodePort: 32181
    - name: followers
      port: 2888
    - name: election
      port: 3888
  selector:
    app: zookeeper
    server-id: "1"
---
apiVersion: v1
kind: Service
metadata:
  name: zookeeper2
  namespace: wework
spec:
  type: NodePort
  ports:
    - name: client
      port: 2181
      nodePort: 32182
    - name: followers
      port: 2888
    - name: election
      port: 3888
  selector:
    app: zookeeper
    server-id: "2"
---
apiVersion: v1
kind: Service
metadata:
  name: zookeeper3
  namespace: wework
spec:
  type: NodePort
  ports:
    - name: client
      port: 2181
      nodePort: 32183
    - name: followers
      port: 2888
    - name: election
      port: 3888
  selector:
    app: zookeeper
    server-id: "3"

---

kind: Deployment
apiVersion: apps/v1
metadata:
  name: zookeeper1
  namespace: wework
spec:
  replicas: 1
  selector:
    matchLabels:
      app: zookeeper
  template:
    metadata:
      labels:
        app: zookeeper
        server-id: "1"
    spec:
      # data and wal are declared but not mounted; only the PVC volume is used.
      volumes:
        - name: data
          emptyDir: {}
        - name: wal
          emptyDir:
            medium: Memory
        - name: zookeeper-datadir-pvc-1
          persistentVolumeClaim:
            claimName: zookeeper-datadir-pvc-1
      containers:
        - name: server
          image: harbor.intra.com/wework/zookeeper:v3.4.14
          imagePullPolicy: Always
          env:
            - name: MYID
              value: "1"
            - name: SERVERS
              value: "zookeeper1,zookeeper2,zookeeper3"
            - name: JVMFLAGS
              value: "-Xmx2G"
          ports:
            - containerPort: 2181
            - containerPort: 2888
            - containerPort: 3888
          volumeMounts:
            - mountPath: "/zookeeper/data"
              name: zookeeper-datadir-pvc-1

---

kind: Deployment
apiVersion: apps/v1
metadata:
  name: zookeeper2
  namespace: wework
spec:
  replicas: 1
  selector:
    matchLabels:
      app: zookeeper
  template:
    metadata:
      labels:
        app: zookeeper
        server-id: "2"
    spec:
      # data and wal are declared but not mounted; only the PVC volume is used.
      volumes:
        - name: data
          emptyDir: {}
        - name: wal
          emptyDir:
            medium: Memory
        - name: zookeeper-datadir-pvc-2
          persistentVolumeClaim:
            claimName: zookeeper-datadir-pvc-2
      containers:
        - name: server
          image: harbor.intra.com/wework/zookeeper:v3.4.14
          imagePullPolicy: Always
          env:
            - name: MYID
              value: "2"
            - name: SERVERS
              value: "zookeeper1,zookeeper2,zookeeper3"
            - name: JVMFLAGS
              value: "-Xmx2G"
          ports:
            - containerPort: 2181
            - containerPort: 2888
            - containerPort: 3888
          volumeMounts:
            - mountPath: "/zookeeper/data"
              name: zookeeper-datadir-pvc-2

---

kind: Deployment
apiVersion: apps/v1
metadata:
  name: zookeeper3
  namespace: wework
spec:
  replicas: 1
  selector:
    matchLabels:
      app: zookeeper
  template:
    metadata:
      labels:
        app: zookeeper
        server-id: "3"
    spec:
      # data and wal are declared but not mounted; only the PVC volume is used.
      volumes:
        - name: data
          emptyDir: {}
        - name: wal
          emptyDir:
            medium: Memory
        - name: zookeeper-datadir-pvc-3
          persistentVolumeClaim:
            claimName: zookeeper-datadir-pvc-3
      containers:
        - name: server
          image: harbor.intra.com/wework/zookeeper:v3.4.14
          imagePullPolicy: Always
          env:
            - name: MYID
              value: "3"
            - name: SERVERS
              value: "zookeeper1,zookeeper2,zookeeper3"
            - name: JVMFLAGS
              value: "-Xmx2G"
          ports:
            - containerPort: 2181
            - containerPort: 2888
            - containerPort: 3888
          volumeMounts:
            - mountPath: "/zookeeper/data"
              name: zookeeper-datadir-pvc-3

Deploy ZooKeeper to Kubernetes

root@k8s-master-01:/opt/k8s-data/yaml/wework/zookeeper# kubectl apply -f zookeeper.yaml

service/zookeeper created

service/zookeeper1 created

service/zookeeper2 created

service/zookeeper3 created

deployment.apps/zookeeper1 created

deployment.apps/zookeeper2 created

deployment.apps/zookeeper3 created

root@k8s-master-01:/opt/k8s-data/yaml/wework/zookeeper# kubectl get pods -n wework

NAME                                             READY   STATUS    RESTARTS   AGE
wework-nginx-deployment-cdbb4945f-7xgx5          1/1     Running   0          92m
wework-tomcat-app1-deployment-65d8d46957-s4666   1/1     Running   0          92m
zookeeper1-699d46468c-8jq4x                      1/1     Running   0          4s
zookeeper2-7cc484778-gj45x                       1/1     Running   0          4s
zookeeper3-cdf484f7c-jh6hz                       1/1     Running   0          4s

root@k8s-master-01:/opt/k8s-data/yaml/wework/zookeeper# kubectl get pvc -n wework

NAME                      STATUS   VOLUME                   CAPACITY   ACCESS MODES   STORAGECLASS   AGE
zookeeper-datadir-pvc-1   Bound    zookeeper-datadir-pv-1   20Gi       RWO                           12h
zookeeper-datadir-pvc-2   Bound    zookeeper-datadir-pv-2   20Gi       RWO                           12h
zookeeper-datadir-pvc-3   Bound    zookeeper-datadir-pv-3   20Gi       RWO                           12h

root@k8s-master-01:/opt/k8s-data/yaml/wework/zookeeper# kubectl get svc -n wework

NAME                         TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)                                        AGE
wework-nginx-service         NodePort    10.200.89.252    <none>        80:30090/TCP,443:30091/TCP                     45h
wework-tomcat-app1-service   ClusterIP   10.200.21.158    <none>        80/TCP                                         26h
zookeeper                    ClusterIP   10.200.117.19    <none>        2181/TCP                                       41m
zookeeper1                   NodePort    10.200.167.230   <none>        2181:32181/TCP,2888:31774/TCP,3888:56670/TCP   41m
zookeeper2                   NodePort    10.200.36.129    <none>        2181:32182/TCP,2888:46321/TCP,3888:30984/TCP   41m
zookeeper3                   NodePort    10.200.190.129   <none>        2181:32183/TCP,2888:61447/TCP,3888:51393/TCP   41m
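Each NodePort Service exposes client port 2181 on a fixed node port (32181, 32182, 32183), so a client outside the cluster can list all three members in one connection string, while pods in the wework namespace can simply use zookeeper:2181 through the ClusterIP Service. A small sketch of building the external string; the node address 192.0.2.10 is a placeholder (this article does not name a worker node IP), and `zk_connect_string` is a hypothetical helper:

```shell
#!/bin/bash
# Join one host with several ports into a ZooKeeper connection string.
# Usage: zk_connect_string HOST PORT...
zk_connect_string() {
  local host="$1" port out=""
  shift
  for port in "$@"; do
    out+="${out:+,}${host}:${port}"
  done
  echo "$out"
}

# Example (192.0.2.10 is a placeholder node IP):
#   zkCli.sh -server "$(zk_connect_string 192.0.2.10 32181 32182 32183)"
```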

At this point ZooKeeper's data has been written to NFS through the PVs

root@haproxy-1:~# ls /data/k8s/wework/zookeeper-datadir-1

myid version-2

root@haproxy-1:~# ls /data/k8s/wework/zookeeper-datadir-2

myid version-2

root@haproxy-1:~# ls /data/k8s/wework/zookeeper-datadir-3

myid version-2

root@haproxy-1:~# cat /data/k8s/wework/zookeeper-datadir-1/myid

1

root@haproxy-1:~# cat /data/k8s/wework/zookeeper-datadir-2/myid

2

root@haproxy-1:~# cat /data/k8s/wework/zookeeper-datadir-3/myid

3

root@haproxy-1:~# ls -l /data/k8s/wework/zookeeper-datadir-1/version-2/

total 8

-rw-r--r-- 1 root root 1 Aug 10 10:24 acceptedEpoch

-rw-r--r-- 1 root root 1 Aug 10 10:24 currentEpoch

root@haproxy-1:~# ls -l /data/k8s/wework/zookeeper-datadir-2/version-2/

total 12

-rw-r--r-- 1 root root 1 Aug 10 10:24 acceptedEpoch

-rw-r--r-- 1 root root 1 Aug 10 10:24 currentEpoch

-rw-r--r-- 1 root root 296 Aug 10 10:24 snapshot.100000000

root@haproxy-1:~# ls -l /data/k8s/wework/zookeeper-datadir-3/version-2/

total 8

-rw-r--r-- 1 root root 1 Aug 10 10:24 acceptedEpoch

-rw-r--r-- 1 root root 1 Aug 10 10:24 currentEpoch
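Each server's myid must match its own server number, so the directory listing above doubles as a check that every PVC landed on the intended export. That manual check can be scripted. A minimal sketch, assuming the directory naming used in this article; `check_myids` is a hypothetical helper:

```shell
#!/bin/bash
# Succeed when BASE/zookeeper-datadir-N/myid contains N for N = 1..COUNT.
# Usage: check_myids BASE_DIR COUNT
check_myids() {
  local base="$1" count="$2" i file
  for ((i = 1; i <= count; i++)); do
    file="$base/zookeeper-datadir-$i/myid"
    if [ ! -f "$file" ] || [ "$(tr -d '[:space:]' < "$file")" != "$i" ]; then
      echo "mismatch: $file" >&2
      return 1
    fi
  done
}

# Example (on the NFS server):
#   check_myids /data/k8s/wework 3 && echo "myid files are consistent"
```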

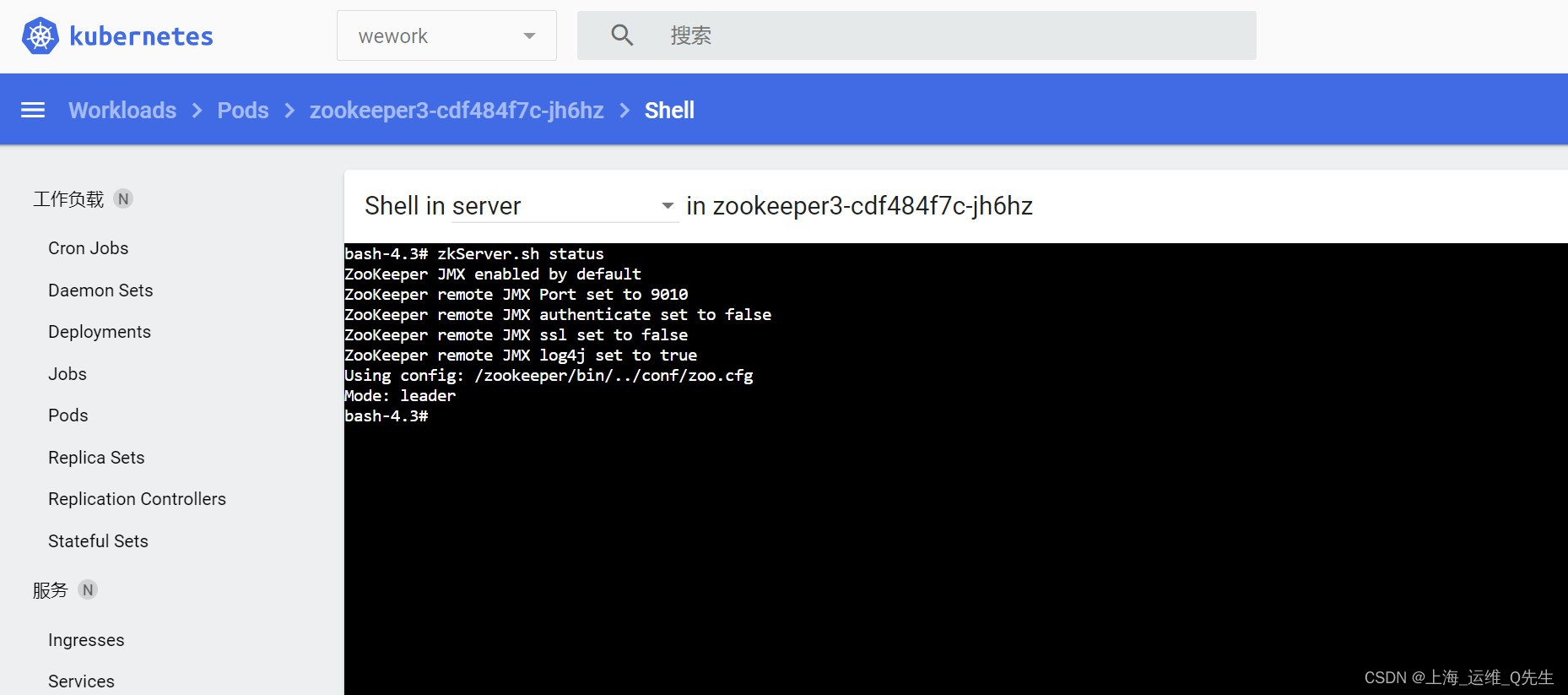

Open a shell in one of the pods and check the ZooKeeper status

bash-4.3# zkServer.sh status

ZooKeeper JMX enabled by default

ZooKeeper remote JMX Port set to 9010

ZooKeeper remote JMX authenticate set to false

ZooKeeper remote JMX ssl set to false

ZooKeeper remote JMX log4j set to true

Using config: /zookeeper/bin/../conf/zoo.cfg

Mode: leader

bash-4.3# netstat -tulnp

Active Internet connections (only servers)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 0.0.0.0:2181 0.0.0.0:* LISTEN 1/java

tcp 0 0 0.0.0.0:44677 0.0.0.0:* LISTEN 1/java

tcp 0 0 0.0.0.0:2888 0.0.0.0:* LISTEN 1/java

tcp 0 0 0.0.0.0:3888 0.0.0.0:* LISTEN 1/java

tcp 0 0 0.0.0.0:9010 0.0.0.0:* LISTEN 1/java

tcp 0 0 0.0.0.0:45529 0.0.0.0:* LISTEN 1/java
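The status output above comes from a single pod. A healthy three-node ensemble should report exactly one leader and two followers, which can be checked across all members in one pass. A sketch under the assumption that `zkServer.sh status` prints a `Mode:` line as shown above; `parse_mode` and `quorum_ok` are hypothetical helper names:

```shell
#!/bin/bash
# Extract the role from `zkServer.sh status` output read on stdin.
parse_mode() {
  sed -n 's/^Mode: *//p'
}

# Succeed when the given roles contain exactly one leader and
# every other member is a follower.
quorum_ok() {
  local leaders=0 role
  for role in "$@"; do
    case "$role" in
      leader)   leaders=$((leaders + 1)) ;;
      follower) ;;
      *)        return 1 ;;
    esac
  done
  [ "$leaders" -eq 1 ]
}

# Example against the cluster (Deployment names as in zookeeper.yaml):
#   roles=()
#   for i in 1 2 3; do
#     roles+=("$(kubectl exec -n wework "deploy/zookeeper$i" -- zkServer.sh status 2>/dev/null | parse_mode)")
#   done
#   quorum_ok "${roles[@]}" && echo "quorum healthy"
```

A member that reports standalone, or that prints no Mode line at all, makes the check fail, which is the intended behaviour for a cluster that is supposed to run as an ensemble.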

The ZooKeeper cluster is now fully configured.