Chit-chat

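- A few days ago I was riding my e-bike and got caught on camera by the Xiamen traffic police. They sent me a text message: "××××××, on ×/×, you were riding without a helmet. Please travel safely and courteously." It struck me how capable this technology has become, and I wondered whether I could build one myself. So I got right on it.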

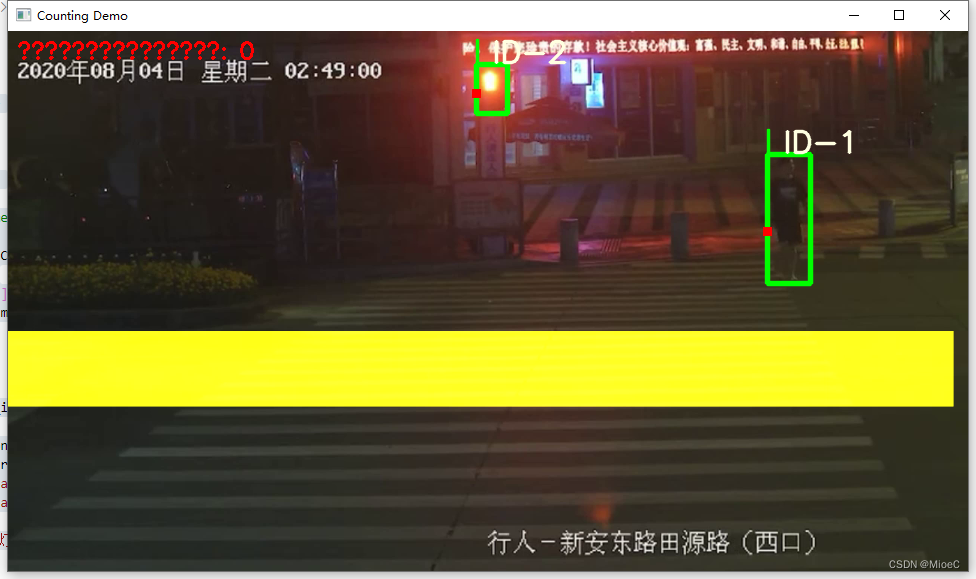

Demo

Demo video: https://www.bilibili.com/video/BV1pe4y1Q7zP?spm_id_from=333.999.0.0

Source code

https://github.com/cdmstrong/traffic-light

Approach

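- First, we need video input from an intersection surveillance camera. For now, a short clip from the internet stands in for it.

- Next, we need an object detection algorithm to detect crosswalks, pedestrians, helmets, e-bikes, and traffic lights.

- Then we need an object tracking algorithm that follows the same person from frame to frame; otherwise one pedestrian would be counted as countless different people.

- After that, we need to determine the traffic-light state, plus each pedestrian's state and position, and combine all of this to decide whether someone ran the red light.

- Finally, feed the result back to the UI so the boss can see it working, and hopefully I get a promotion and a raise.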
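The decision logic in the steps above can be sketched as one per-frame function with each stage passed in as a callable. This is a minimal sketch under stated assumptions: `process_frame`, `detect_and_track`, `light_is_red` and `in_crossing_zone` are hypothetical names, and the stubs in the usage part stand in for YOLOv5 + DeepSORT, the color check and the zone test.

```python
from typing import Callable, List, Set, Tuple

Box = Tuple[int, int, int, int]  # (x1, y1, x2, y2) in pixels
Track = Tuple[Box, int]          # (box, track_id)


def process_frame(
    frame: object,
    detect_and_track: Callable[[object], List[Track]],
    light_is_red: Callable[[object], bool],
    in_crossing_zone: Callable[[Box], bool],
    already_caught: Set[int],
) -> List[int]:
    """Return the track IDs flagged as red-light runners for the first time in this frame."""
    if not light_is_red(frame):
        return []  # no red light, so nobody in the zone is violating
    newly_caught = []
    for box, track_id in detect_and_track(frame):
        # The tracker's ID is what stops one person from being counted in every frame.
        if in_crossing_zone(box) and track_id not in already_caught:
            already_caught.add(track_id)
            newly_caught.append(track_id)
    return newly_caught


if __name__ == "__main__":
    # Hypothetical stub stages (not the project's code).
    tracks = [((10, 450, 40, 550), 1), ((900, 100, 930, 180), 2)]
    zone = lambda b: 450 <= b[1] + 0.6 * (b[3] - b[1]) <= 550
    caught: Set[int] = set()
    print(process_frame(None, lambda f: tracks, lambda f: True, zone, caught))  # [1]
    print(process_frame(None, lambda f: tracks, lambda f: True, zone, caught))  # []
```

Injecting the stages keeps the rule "red light AND in zone AND not yet counted" testable without a camera, a GPU or model weights.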

Steps

Download a short video

- Footage like this is really hard to find; it's genuinely rare.

Click the link to download it.

Detection algorithm

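- After weighing the options, YOLOv5 suited me best, mainly because I had already used it for pedestrian detection.

- This part is fairly complex and is the core of the project.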

Tracking algorithm

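- Here I picked DeepSORT without hesitation: an excellent algorithm designed by top researchers, no further explanation needed.

- Reference paper: Wojke et al., "Simple Online and Realtime Tracking with a Deep Association Metric" (2017).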
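DeepSORT combines a Kalman-filter motion model, Hungarian-algorithm matching and a deep appearance (ReID) descriptor, which is too much to reproduce here. To show only *why* a tracker keeps one pedestrian from being counted many times, here is a deliberately simplified stand-in: greedy IoU matching between consecutive frames. This is not DeepSORT, and `GreedyIoUTracker`, `iou` and the 0.3 threshold are hypothetical.

```python
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


class GreedyIoUTracker:
    """Toy tracker: each detection takes the unclaimed previous track with the highest IoU."""

    def __init__(self, iou_threshold: float = 0.3):
        self.iou_threshold = iou_threshold
        self.tracks: Dict[int, Box] = {}
        self.next_id = 1

    def update(self, detections: List[Box]) -> List[Tuple[Box, int]]:
        results = []
        unmatched = dict(self.tracks)  # previous-frame tracks not yet claimed
        new_tracks: Dict[int, Box] = {}
        for det in detections:
            best_id, best_iou = None, self.iou_threshold
            for tid, prev in unmatched.items():
                score = iou(det, prev)
                if score >= best_iou:
                    best_id, best_iou = tid, score
            if best_id is None:
                best_id = self.next_id  # no good match: start a new identity
                self.next_id += 1
            else:
                del unmatched[best_id]
            new_tracks[best_id] = det
            results.append((det, best_id))
        self.tracks = new_tracks  # unmatched tracks are dropped at once (no max_age)
        return results
```

A person who moves a little between frames keeps the same ID, which is what lets the capture step count them once. DeepSORT adds motion prediction and appearance matching so IDs also survive occlusions and crowded crossings.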

Determining the traffic-light state

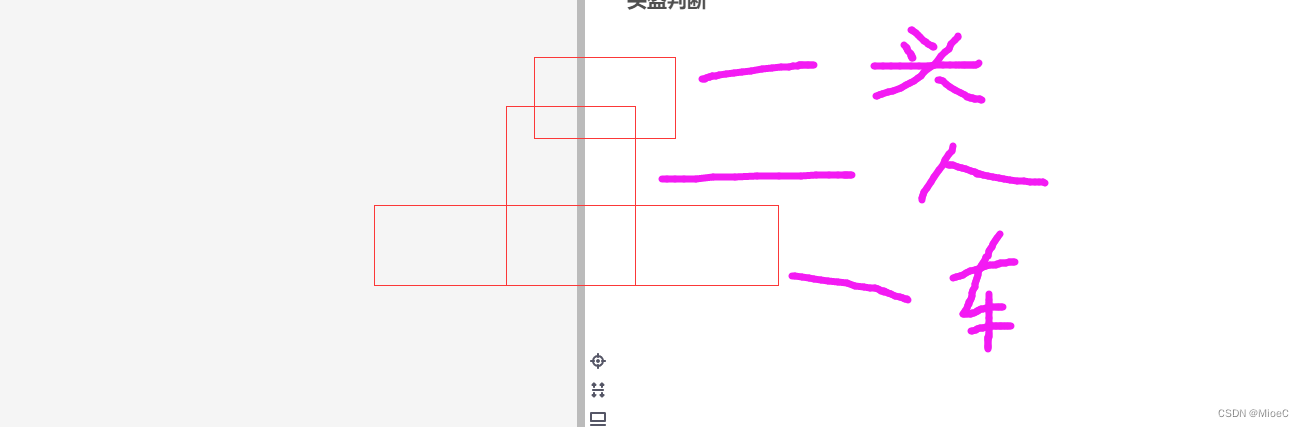

Helmet detection

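- My idea was to detect the person together with the vehicle, but YOLOv5 detects them separately: the rider and the e-bike come out as two different objects. So this part would need a model trained on person and bike together, i.e., one that recognizes a "person riding a bike", followed by a check on whether that rider is wearing a helmet.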

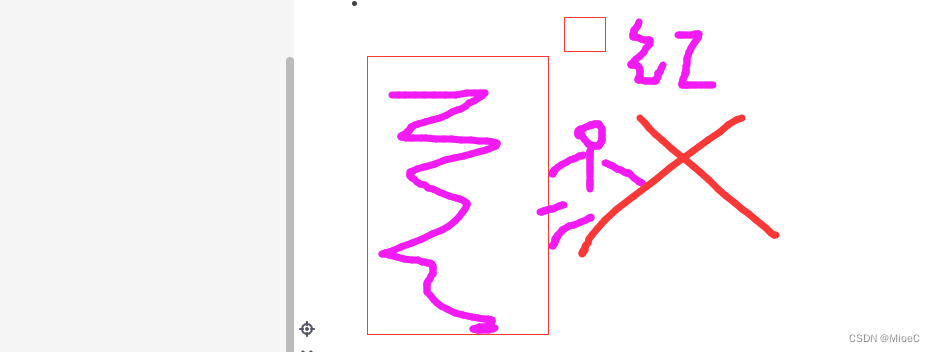

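- Another approach is to train a model that recognizes people without helmets and detect them directly. That is the better option, but with limited resources, little data, and no GPU on my machine, I couldn't implement helmet detection.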
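For the first idea above, a rough "person riding a bike" box can be approximated without training a merged model. The trick is to pair a `person` detection with an overlapping `bicycle` or `motorcycle` box, both of which are standard COCO classes in YOLOv5's stock pretrained weights. This is a heuristic sketch, not what the project does. `pair_riders`, `overlap_ratio` and the 0.3 threshold are illustrative assumptions, and whether an e-bike is labeled bicycle or motorcycle will vary.

```python
from typing import List, Tuple

Box = Tuple[int, int, int, int]  # (x1, y1, x2, y2)


def overlap_ratio(inner: Box, outer: Box) -> float:
    """Fraction of `inner`'s area that lies inside `outer`."""
    ix1, iy1 = max(inner[0], outer[0]), max(inner[1], outer[1])
    ix2, iy2 = min(inner[2], outer[2]), min(inner[3], outer[3])
    inter = max(0, ix2 - ix1) * max(0, iy2 - iy1)
    area = (inner[2] - inner[0]) * (inner[3] - inner[1])
    return inter / area if area > 0 else 0.0


def pair_riders(persons: List[Box], bikes: List[Box], min_overlap: float = 0.3) -> List[Box]:
    """Merge each bike with the person overlapping it most into one 'rider' box."""
    riders = []
    for bike in bikes:
        best = max(persons, key=lambda p: overlap_ratio(bike, p), default=None)
        if best is not None and overlap_ratio(bike, best) >= min_overlap:
            # Union of both boxes: roughly what a merged "person on bike" label would cover.
            riders.append((min(best[0], bike[0]), min(best[1], bike[1]),
                           max(best[2], bike[2]), max(best[3], bike[3])))
    return riders
```

A helmet classifier could then be run only on the top part of each rider box. Training that classifier still needs the data and GPU mentioned above.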

Red-light capture

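- This part needs no model training and is fairly simple: detect the traffic light, then check whether anyone is crossing the road.

- So first we pin down the objects to detect: traffic lights and people.

- Filter the detections down to these two classes: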

```python
# COCO class names that Detector.detect keeps; all other detections are dropped
OBJ_LIST = ['person', 'traffic light']
```
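Here is what this filter does to one batch of detections, with one design choice that differs from the project. The project's tracker wrapper keeps whichever traffic light it sees last, while this hypothetical `split_detections` helper keeps the most confident one. It assumes the `(x1, y1, x2, y2, label, conf)` tuple layout that `Detector.detect` returns.

```python
from typing import List, Optional, Tuple

OBJ_LIST = ['person', 'traffic light']

# (x1, y1, x2, y2, label, conf)
Det = Tuple[int, int, int, int, str, float]


def split_detections(dets: List[Det]) -> Tuple[List[Det], Optional[Det]]:
    """Keep only OBJ_LIST classes; return (people, most confident traffic light or None)."""
    kept = [d for d in dets if d[4] in OBJ_LIST]
    people = [d for d in kept if d[4] == 'person']
    lights = [d for d in kept if d[4] == 'traffic light']
    light = max(lights, key=lambda d: d[5], default=None)
    return people, light
```

Picking the most confident light is a hedge against small, low-confidence false positives. An intersection with several lights facing different directions would still need a rule for which light governs the crosswalk.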

- Crop the traffic-light region and check its color with OpenCV:

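```python
import cv2
import numpy as np


def isRed(img):
    # cv2.imwrite('video/' + time.strftime('%Y-%m-%d-%H-%M-%S') + '.png', img)
    img = cv2.medianBlur(img, 3)                # suppress isolated noisy pixels
    img = cv2.cvtColor(img, cv2.COLOR_BGR2HSV)
    lower = np.array([0, 43, 46])               # low end of the red hue band (H, S, V)
    upper = np.array([10, 255, 255])
    mask = cv2.inRange(img, lower, upper)       # 255 where a pixel falls in range
    return bool(np.max(mask))                   # True if any pixel is red
```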
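`isRed` returns `True` as soon as any pixel survives the median blur inside the range. In OpenCV's 8-bit HSV, red also wraps around to the top of the hue scale (H near 180), which `[0, 10]` misses. Below is a NumPy-only sketch that approximates OpenCV's `COLOR_BGR2HSV` formula for 8-bit images. It checks both hue bands (0-10 and 156-180, as given in a widely circulated HSV color table alongside the 43/46 S/V floors used above) and requires a minimum fraction of red pixels. `is_red`, `bgr_to_hsv_u8` and the 5% threshold are assumptions, not the project's code.

```python
import numpy as np


def bgr_to_hsv_u8(img: np.ndarray):
    """Approximate OpenCV's 8-bit BGR->HSV: H in [0, 180), S and V in [0, 255]."""
    b, g, r = (img[..., i].astype(np.float64) for i in range(3))
    v = np.maximum(np.maximum(r, g), b)
    delta = v - np.minimum(np.minimum(r, g), b)
    s = np.where(v > 0, delta * 255.0 / np.where(v > 0, v, 1.0), 0.0)
    d = np.where(delta > 0, delta, 1.0)  # avoid dividing by zero on gray pixels
    h = np.where(v == r, 60.0 * (g - b) / d,
                 np.where(v == g, 120.0 + 60.0 * (b - r) / d,
                          240.0 + 60.0 * (r - g) / d))
    h = np.where(delta > 0, h, 0.0)  # hue is undefined for gray; use 0
    return np.mod(h, 360.0) / 2.0, s, v


def is_red(img: np.ndarray, min_ratio: float = 0.05) -> bool:
    """True if at least `min_ratio` of the pixels are saturated, bright and red."""
    h, s, v = bgr_to_hsv_u8(img)
    vivid = (s >= 43) & (v >= 46)        # same S/V floors as isRed
    red_hue = (h <= 10) | (h >= 156)     # red sits at both ends of the hue circle
    return bool(np.mean(vivid & red_hue) >= min_ratio)
```

A ratio threshold is less sensitive to a stray reddish pixel (a car tail light, the light's housing) than "any pixel". The right value depends on how tightly the detector crops the light.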

- The capture function:

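```python
import time

import cv2

person_list = []  # track IDs already caught, so each person is saved only once


def catch_person(isLight, track_id, img, ori):
    if isLight:
        if track_id not in person_list:
            person_list.append(track_id)
            # Save the cropped person and the full frame; the track ID keeps
            # two people caught in the same second from overwriting each other
            stamp = time.strftime('%Y-%m-%d-%H-%M-%S')
            cv2.imwrite('video/catch/' + stamp + '-' + str(track_id) + '-crop.png', img)
            cv2.imwrite('video/catch/' + stamp + '-' + str(track_id) + '.png', ori)
            print('Busted! Running a red light, huh?')
```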
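The capture logic keeps caught IDs in a module-level `person_list` and writes files directly. One way to make it testable is to hold the state in a single object that only computes the output path, leaving the `cv2.imwrite` call to the caller. `cv2.imwrite` returns `False` instead of raising when the target folder is missing, so `video/catch` has to exist. `ViolationRecorder`, `record` and `count` are hypothetical names. A set replaces the list because membership checks on a set are O(1) on average.

```python
import time
from typing import Callable, Optional, Set


class ViolationRecorder:
    """Flag each track ID at most once and build a unique snapshot path for it."""

    def __init__(self, out_dir: str = 'video/catch', clock: Callable[[], float] = time.time):
        self.out_dir = out_dir
        self.clock = clock          # injectable so tests get a fixed time
        self.caught: Set[int] = set()

    def record(self, red_light: bool, track_id: int) -> Optional[str]:
        """Return the snapshot path for a new violation, or None if nothing should be saved."""
        if not red_light or track_id in self.caught:
            return None
        self.caught.add(track_id)
        stamp = time.strftime('%Y-%m-%d-%H-%M-%S', time.localtime(self.clock()))
        return f'{self.out_dir}/{stamp}-{track_id}.png'

    @property
    def count(self) -> int:
        return len(self.caught)
```

In the main loop, a non-`None` return value would be passed to `cv2.imwrite`, and `count` would feed the on-screen counter.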

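- Draw a line across the road in the middle of the crosswalk: if a pedestrian crosses it while the light is red, that counts as running the red light.

- Use `cv2.fillPoly` to paint the detection rectangle into a mask (pixels inside the zone become 1):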

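```python
# mask_image_temp is an all-zero (height, width) uint8 image; the zone is filled with 1
polygon_yellow_value_2 = cv2.fillPoly(mask_image_temp, [ndarray_pts_yellow], color=1)
polygon_yellow_value_2 = polygon_yellow_value_2[:, :, np.newaxis]  # shape (H, W, 1)
```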

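- Check for a hit: if a tracked person's check point lands inside the zone, capture them:

```python
if polygon_mask_blue_and_yellow[y, x] == 1:
    catch_person(isLight, track_id, im_ori[y1: y2, x1: x2], im_ori)
```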
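The hit test boils down to three steps: build a 0/1 mask for the zone, take one check point per tracked box, and look up the mask at that point. The NumPy-only sketch below assumes the zone is an axis-aligned rectangle, as the script's yellow polygon is. It builds the mask directly at the 960x540 working resolution instead of drawing at 1920x1080 and resizing. For a rectangle this should match `cv2.fillPoly` on the four corners up to edge pixels. `rect_zone_mask`, `check_point` and `in_zone` are hypothetical names.

```python
import numpy as np


def rect_zone_mask(height: int, width: int, x0: int, y0: int, x1: int, y1: int) -> np.ndarray:
    """uint8 mask that is 1 inside the rectangle [x0, x1] x [y0, y1] and 0 elsewhere."""
    mask = np.zeros((height, width), dtype=np.uint8)
    mask[y0:y1 + 1, x0:x1 + 1] = 1
    return mask


def check_point(box):
    """Left edge of the box, 60% of the way down: the script's hit-test point."""
    x1, y1, x2, y2 = box
    return x1, int(y1 + (y2 - y1) * 0.6)


def in_zone(mask: np.ndarray, box) -> bool:
    x, y = check_point(box)
    h, w = mask.shape
    if not (0 <= x < w and 0 <= y < h):
        return False  # guard against boxes that extend past the frame
    return bool(mask[y, x] == 1)
```

The yellow polygon `[[0, 600], [1890, 600], [1890, 750], [0, 750]]` at full resolution becomes `rect_zone_mask(540, 960, 0, 300, 945, 375)` at half resolution. The bounds check matters because indexing a NumPy array with an out-of-range `y` raises `IndexError` mid-video.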

- That completes the capture feature. The full script:

```python
import os
import time

import cv2
import numpy as np

import objtracker
from objdetector import Detector

VIDEO_PATH = './video/short.mp4'


def isRed(img):
    # cv2.imwrite('video/' + time.strftime('%Y-%m-%d-%H-%M-%S') + '.png', img)
    img = cv2.medianBlur(img, 3)
    img = cv2.cvtColor(img, cv2.COLOR_BGR2HSV)
    lower = np.array([0, 43, 46])
    upper = np.array([10, 255, 255])
    mask = cv2.inRange(img, lower, upper)
    return bool(np.max(mask))


def catch_person(isLight, track_id, img, ori):
    if isLight:
        if track_id not in person_list:
            person_list.append(track_id)
            stamp = time.strftime('%Y-%m-%d-%H-%M-%S')
            cv2.imwrite('video/catch/' + stamp + '-' + str(track_id) + '-crop.png', img)
            cv2.imwrite('video/catch/' + stamp + '-' + str(track_id) + '.png', ori)
            print('Busted! Running a red light, huh?')


if __name__ == '__main__':
    # cv2.imwrite does not create missing folders, so make sure the output folder exists
    os.makedirs('video/catch', exist_ok=True)

    # Build the polygons for the hit test, sized to the video (1920x1080)
    width = 1920
    height = 1080
    mask_image_temp = np.zeros((height, width), dtype=np.uint8)

    # First hit polygon (blue): defined but not used below
    # list_pts_blue = [[204, 305], [227, 431], [605, 522], [1101, 464], [1900, 601], [1902, 495], [1125, 379], [604, 437],
    #                  [299, 375], [267, 289]]
    list_pts_blue = [[0, 450], [1890, 450], [1890, 550], [0, 550]]
    ndarray_pts_blue = np.array(list_pts_blue, np.int32)

    # Second hit polygon (yellow): the zone that is actually checked
    mask_image_temp = np.zeros((height, width), dtype=np.uint8)
    # list_pts_yellow = [[181, 305], [207, 442], [603, 544], [1107, 485], [1898, 625], [1893, 701], [1101, 568],
    #                    [594, 637], [118, 483], [109, 303]]
    list_pts_yellow = [[0, 600], [1890, 600], [1890, 750], [0, 750]]
    ndarray_pts_yellow = np.array(list_pts_yellow, np.int32)
    polygon_yellow_value_2 = cv2.fillPoly(mask_image_temp, [ndarray_pts_yellow], color=1)
    polygon_yellow_value_2 = polygon_yellow_value_2[:, :, np.newaxis]

    # Hit-test mask. It was designed to hold two polygons (values 0, 1, 2);
    # only the yellow one (value 1) is used here
    polygon_mask_blue_and_yellow = polygon_yellow_value_2
    # Downscale 1920x1080 -> 960x540
    polygon_mask_blue_and_yellow = cv2.resize(polygon_mask_blue_and_yellow, (width//2, height//2))

    # Yellow color plate (BGR)
    yellow_color_plate = [0, 255, 255]
    # Yellow polygon image
    yellow_image = np.array(polygon_yellow_value_2 * yellow_color_plate, np.uint8)
    # Color overlay image (values 0-255)
    color_polygons_image = yellow_image
    # Downscale 1920x1080 -> 960x540
    color_polygons_image = cv2.resize(color_polygons_image, (width//2, height//2))

    # Track IDs overlapping the blue polygon
    list_overlapping_blue_polygon = []
    # Track IDs overlapping the yellow polygon
    list_overlapping_yellow_polygon = []
    # Downward count
    down_count = 0
    # Upward count
    up_count = 0

    font_draw_number = cv2.FONT_HERSHEY_SIMPLEX
    draw_text_postion = (int((width/2) * 0.01), int((height/2) * 0.05))

    # Instantiate the YOLOv5 detector
    detector = Detector()
    # Open the video
    capture = cv2.VideoCapture(VIDEO_PATH)
    person_list = []

    while True:
        # Read one frame
        _, im = capture.read()
        if im is None:
            break
        isLight = False
        # Downscale 1920x1080 -> 960x540
        im = cv2.resize(im, (width//2, height//2))
        im_ori = im.copy()
        list_bboxs = []

        # # Detect the traffic light
        # red_img, red_box = detector.detect(im, ['traffic light'])
        # if len(red_box) > 0:
        #     x1, y1, x2, y2, lbl, conf = red_box
        #     red_light = isRed(red_img[y1: y2, x1: x2])
        #     if red_light:
        #         print('Red light detected')

        # Update the tracker
        output_image_frame, list_bboxs, light = objtracker.update(detector, im)
        # Overlay the zone on the output image
        output_image_frame = cv2.add(output_image_frame, color_polygons_image)

        if light is not None:
            (x1, y1, x2, y2, label, conf) = light
            red_light = isRed(im_ori[y1: y2, x1: x2])
            if red_light:
                print('Red light detected')
                isLight = True

        if len(list_bboxs) > 0:
            # ---------------------- hit test ----------------------
            for item_bbox in list_bboxs:
                x1, y1, x2, y2, label, track_id = item_bbox
                # Hit-test point: (x1, y1) shifted down by a fraction (0.0-1.0) of the box height
                y1_offset = int(y1 + ((y2 - y1) * 0.6))
                y = y1_offset
                x = x1
                if polygon_mask_blue_and_yellow[y, x] == 1:
                    catch_person(isLight, track_id, im_ori[y1: y2, x1: x2], im_ori)
                    # If it hits the blue polygon
                    # if track_id not in list_overlapping_blue_polygon:
                    #     list_overlapping_blue_polygon.append(track_id)
                    # If the yellow list already has this track_id, the movement is UP
                    # if track_id in list_overlapping_yellow_polygon:
                    #     up_count += 1
                    #     print('up count:', up_count, ', up id:', list_overlapping_yellow_polygon)
                    #     # Remove this id from the yellow polygon list
                    #     list_overlapping_yellow_polygon.remove(track_id)
                    #     catch_person(isLight, track_id, im_ori[y1: y2, x1: x2])
                # elif polygon_mask_blue_and_yellow[y, x] == 2:
                #     # If it hits the yellow polygon
                #     if track_id not in list_overlapping_yellow_polygon:
                #         list_overlapping_yellow_polygon.append(track_id)
                #     # If the blue list already has this track_id, the movement is DOWN
                #     if track_id in list_overlapping_blue_polygon:
                #         down_count += 1
                #         print('down count:', down_count, ', down id:', list_overlapping_blue_polygon)
                #         # Remove this id from the blue polygon list
                #         list_overlapping_blue_polygon.remove(track_id)
                #         catch_person(isLight, track_id, im_ori[y1: y2, x1: x2])

            # ---------------------- remove stale IDs ----------------------
            # list_overlapping_all = list_overlapping_yellow_polygon + list_overlapping_blue_polygon
            # for id1 in list_overlapping_all:
            #     is_found = False
            #     for _, _, _, _, _, bbox_id in list_bboxs:
            #         if bbox_id == id1:
            #             is_found = True
            #     if not is_found:
            #         # Not found: drop the id
            #         if id1 in list_overlapping_yellow_polygon:
            #             list_overlapping_yellow_polygon.remove(id1)
            #         if id1 in list_overlapping_blue_polygon:
            #             list_overlapping_blue_polygon.remove(id1)
            # list_overlapping_all.clear()
            # list_bboxs.clear()  # clear the list
        else:
            # No boxes in this frame: clear the lists
            list_overlapping_blue_polygon.clear()
            list_overlapping_yellow_polygon.clear()

        # Draw the counter (OpenCV's Hershey fonts cannot render Chinese, so keep it ASCII)
        text_draw = 'Red-light runners: ' + str(len(person_list))
        output_image_frame = cv2.putText(img=output_image_frame, text=text_draw,
                                         org=draw_text_postion,
                                         fontFace=font_draw_number,
                                         fontScale=0.75, color=(0, 0, 255), thickness=2)
        cv2.imshow('Counting Demo', output_image_frame)
        # Press q to stop early
        if cv2.waitKey(1) & 0xFF == ord('q'):
            break

    capture.release()
    cv2.destroyAllWindows()
```

Extracting and wrapping the detection algorithm

- Load the model

- Preprocess the image

- Run the model on it and post-process the detections

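```python
import numpy as np
import torch

# These imports assume a YOLOv5 v5.x-era checkout on the Python path;
# module paths and attempt_load's signature differ in later releases
from models.experimental import attempt_load
from utils.datasets import letterbox
from utils.general import non_max_suppression, scale_coords
from utils.torch_utils import select_device

# baseDet (presumably providing img_size, threshold and build_config), DETECTOR_PATH
# (the weights file) and OBJ_LIST are defined elsewhere in the project


class Detector(baseDet):

    def __init__(self):
        super(Detector, self).__init__()
        self.init_model()
        self.build_config()

    def init_model(self):
        self.weights = DETECTOR_PATH
        # Use the first GPU when available, otherwise fall back to the CPU
        self.device = '0' if torch.cuda.is_available() else 'cpu'
        self.device = select_device(self.device)
        model = attempt_load(self.weights, map_location=self.device)
        model.to(self.device).eval()
        model.float()
        self.m = model
        self.names = model.module.names if hasattr(
            model, 'module') else model.names

    def preprocess(self, img):
        img0 = img.copy()
        # Resize with the aspect ratio preserved and pad to the network input size
        img = letterbox(img, new_shape=self.img_size)[0]
        # BGR -> RGB, HWC -> CHW
        img = img[:, :, ::-1].transpose(2, 0, 1)
        img = np.ascontiguousarray(img)
        img = torch.from_numpy(img).to(self.device)
        img = img.float()  # full precision (float32), not half precision
        img /= 255.0  # normalize pixel values to [0, 1]
        if img.ndimension() == 3:
            img = img.unsqueeze(0)  # add the batch dimension
        return img0, img

    def detect(self, im, red=None):
        im0, img = self.preprocess(im)
        pred = self.m(img, augment=False)[0]
        pred = pred.float()
        # Confidence threshold from the config, IoU threshold 0.4
        pred = non_max_suppression(pred, self.threshold, 0.4)
        pred_boxes = []
        for det in pred:
            if det is not None and len(det):
                # Map boxes from the letterboxed input back to the original frame
                det[:, :4] = scale_coords(
                    img.shape[2:], det[:, :4], im0.shape).round()
                for *x, conf, cls_id in det:
                    lbl = self.names[int(cls_id)]
                    if lbl not in OBJ_LIST:
                        continue  # keep only the classes we care about
                    x1, y1 = int(x[0]), int(x[1])
                    x2, y2 = int(x[2]), int(x[3])
                    pred_boxes.append(
                        (x1, y1, x2, y2, lbl, conf))
        return im, pred_boxes
```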
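`letterbox` resizes the frame with its aspect ratio preserved and pads it, and `scale_coords` maps the predicted boxes back. Here is a pure-Python sketch of that mapping for one box, following how YOLOv5's `scale_coords` derives the gain and padding from the two shapes. Treat it as an approximation; `scale_box_back` is a hypothetical name. The example numbers assume `img_size=640`: letterboxing a 960x540 frame with YOLOv5's default stride-32 minimal padding then gives a 640x384 input with 12 pixels of padding at the top and bottom.

```python
from typing import Tuple


def scale_box_back(box: Tuple[float, float, float, float],
                   lb_shape: Tuple[int, int],
                   orig_shape: Tuple[int, int]) -> Tuple[float, float, float, float]:
    """Map (x1, y1, x2, y2) from the letterboxed input back to the original frame.

    lb_shape and orig_shape are (height, width).
    """
    # One gain for both axes, because letterbox preserves the aspect ratio
    gain = min(lb_shape[0] / orig_shape[0], lb_shape[1] / orig_shape[1])
    pad_x = (lb_shape[1] - orig_shape[1] * gain) / 2  # padding on the left (and right)
    pad_y = (lb_shape[0] - orig_shape[0] * gain) / 2  # padding on the top (and bottom)
    h, w = orig_shape

    def clip(v: float, hi: float) -> float:
        return min(max(v, 0.0), hi)

    x1, y1, x2, y2 = box
    # Undo the padding, undo the scaling, then clip to the original image
    return (clip((x1 - pad_x) / gain, w), clip((y1 - pad_y) / gain, h),
            clip((x2 - pad_x) / gain, w), clip((y2 - pad_y) / gain, h))
```

For example, the box `(0, 12, 640, 372)` in the 640x384 input covers the whole 960x540 frame once the 12-pixel padding and the 2/3 gain are undone.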

Extracting the tracking algorithm

- Get the detected objects

- Update the tracks with DeepSORT

- Draw the results

```python
import cv2
import numpy as np
import torch

from deep_sort.utils.parser import get_config
from deep_sort.deep_sort import DeepSort

cfg = get_config()
cfg.merge_from_file("deep_sort/configs/deep_sort.yaml")
deepsort = DeepSort(cfg.DEEPSORT.REID_CKPT,
                    max_dist=cfg.DEEPSORT.MAX_DIST, min_confidence=cfg.DEEPSORT.MIN_CONFIDENCE,
                    nms_max_overlap=cfg.DEEPSORT.NMS_MAX_OVERLAP, max_iou_distance=cfg.DEEPSORT.MAX_IOU_DISTANCE,
                    max_age=cfg.DEEPSORT.MAX_AGE, n_init=cfg.DEEPSORT.N_INIT, nn_budget=cfg.DEEPSORT.NN_BUDGET,
                    # Only request CUDA when it exists, so a CPU-only machine can still run this
                    use_cuda=torch.cuda.is_available())


def plot_bboxes(image, bboxes, line_thickness=None):
    # Draw each tracked box with its label, track ID and hit-test point
    tl = line_thickness or round(
        0.002 * (image.shape[0] + image.shape[1]) / 2) + 1  # line/font thickness
    list_pts = []
    point_radius = 4

    for (x1, y1, x2, y2, cls_id, pos_id) in bboxes:
        if cls_id in ['smoke', 'phone', 'eat']:
            color = (0, 0, 255)
        else:
            color = (0, 255, 0)
        if cls_id == 'eat':
            cls_id = 'eat-drink'

        # Hit-test point: left edge, 60% down the box (same as in the main loop)
        check_point_x = x1
        check_point_y = int(y1 + ((y2 - y1) * 0.6))

        c1, c2 = (x1, y1), (x2, y2)
        cv2.rectangle(image, c1, c2, color, thickness=tl, lineType=cv2.LINE_AA)
        tf = max(tl - 1, 1)  # font thickness
        t_size = cv2.getTextSize(cls_id, 0, fontScale=tl / 3, thickness=tf)[0]
        c2 = c1[0] + t_size[0], c1[1] - t_size[1] - 3
        cv2.rectangle(image, c1, c2, color, -1, cv2.LINE_AA)  # filled label background
        cv2.putText(image, '{} ID-{}'.format(cls_id, pos_id), (c1[0], c1[1] - 2), 0, tl / 3,
                    [225, 255, 255], thickness=tf, lineType=cv2.LINE_AA)

        # Mark the hit-test point with a small filled square
        list_pts.append([check_point_x-point_radius, check_point_y-point_radius])
        list_pts.append([check_point_x-point_radius, check_point_y+point_radius])
        list_pts.append([check_point_x+point_radius, check_point_y+point_radius])
        list_pts.append([check_point_x+point_radius, check_point_y-point_radius])
        ndarray_pts = np.array(list_pts, np.int32)
        cv2.fillPoly(image, [ndarray_pts], color=(0, 0, 255))
        list_pts.clear()

    return image


def update(target_detector, image):
    # Get all detection boxes
    _, bboxes = target_detector.detect(image)

    bbox_xywh = []
    confs = []
    bboxes2draw = []
    light = None

    if len(bboxes):
        # Adapt detections to the DeepSORT input format: (center x, center y, width, height)
        for x1, y1, x2, y2, label, conf in bboxes:
            obj = [
                int((x1+x2)/2), int((y1+y2)/2),
                x2-x1, y2-y1
            ]
            bbox_xywh.append(obj)
            if label == 'traffic light':
                light = (x1, y1, x2, y2, label, conf)  # the last traffic light seen wins
            confs.append(conf)

        xywhs = torch.Tensor(bbox_xywh)
        confss = torch.Tensor(confs)

        # Pass detections to DeepSORT
        outputs = deepsort.update(xywhs, confss, image)
        for value in list(outputs):
            x1, y1, x2, y2, track_id = value
            bboxes2draw.append(
                (x1, y1, x2, y2, "", track_id)
            )

    image = plot_bboxes(image, bboxes2draw)
    return image, bboxes2draw, light
```
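`update` converts each YOLOv5 corner box into the center-based `[cx, cy, w, h]` layout that this DeepSORT wrapper's `update` takes as `bbox_xywh`. Below is a small sketch of that conversion and its inverse (hypothetical helper names), handy for checking that boxes come back where they went in.

```python
from typing import List, Tuple


def xyxy_to_cxcywh(box: Tuple[int, int, int, int]) -> List[int]:
    """(x1, y1, x2, y2) corners -> [center_x, center_y, width, height], as update() builds it."""
    x1, y1, x2, y2 = box
    return [int((x1 + x2) / 2), int((y1 + y2) / 2), x2 - x1, y2 - y1]


def cxcywh_to_xyxy(box: List[int]) -> Tuple[int, int, int, int]:
    """Inverse mapping; exact for non-negative integer corners, since int() then floors the center."""
    cx, cy, w, h = box
    x1, y1 = cx - w // 2, cy - h // 2
    return (x1, y1, x1 + w, y1 + h)
```

With an odd width the center is truncated, but subtracting `w // 2` undoes exactly that truncation, so the round trip still recovers the original corners.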

These two algorithms are quite complex; once I've sorted them out properly, I'll write a complete post about them.