RDD Dependencies

1. RDD Lineage

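RDDs support only coarse-grained transformations, that is, a single operation applied to a large number of records. Spark records the series of transformations that created an RDD as its Lineage so that lost partitions can be recovered. An RDD's Lineage stores the RDD's metadata and the transformations applied to it. When some partitions of the RDD are lost, Spark uses this information to recompute and restore just those partitions.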

package com.atguigu.bigdata.spark.core.rdd.dep

import org.apache.spark.{SparkConf, SparkContext}
import org.apache.spark.rdd.RDD

object Spark01_RDD_Dep {

  def main(args: Array[String]): Unit = {
    val sparkConf = new SparkConf().setMaster("local").setAppName("WordCount")
    val sc = new SparkContext(sparkConf)

    // toDebugString prints the RDD's lineage (the chain of RDDs it was derived from)
    val lines: RDD[String] = sc.textFile("datas/word.txt")
    println(lines.toDebugString)
    println("**********************************")

    val words: RDD[String] = lines.flatMap(_.split(" "))
    println(words.toDebugString)
    println("**********************************")

    val wordToOne: RDD[(String, Int)] = words.map(word => (word, 1))

    // groupBy requires a shuffle, so this lineage now spans a shuffle boundary
    val wordGroup: RDD[((String, Int), Iterable[(String, Int)])] = wordToOne.groupBy(word => word)
    println(wordGroup.toDebugString)
    println("**********************************")

    // Add up the 1s in each group to get (word, count)
    val wordToCount: RDD[(String, Int)] = wordGroup.map {
      case (_, list) => list.reduce((t1, t2) => (t1._1, t1._2 + t2._2))
    }
    println(wordToCount.toDebugString)
    println("**********************************")

    val array = wordToCount.collect()
    array.foreach(println)

    // Close the Spark connection
    sc.stop()
  }
}
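The recovery mechanism described at the start of this section can be sketched without Spark. The following is a toy model only: `LineageRecoveryDemo`, `ToyRDD`, `SourceRDD`, `FlatMappedRDD` and `MappedRDD` are hypothetical names, not Spark's API. Each toy RDD keeps a reference to its parent and the function applied to it. That means a lost partition can be rebuilt by replaying those steps for that partition alone, without recomputing the others.

```scala
import scala.collection.mutable

object LineageRecoveryDemo {
  // Toy stand-ins, NOT Spark classes: each node stores how it was derived (its lineage).
  sealed trait ToyRDD[T] {
    def numPartitions: Int
    def compute(partition: Int): Seq[T] // rebuild one partition from the lineage
  }

  final case class SourceRDD[T](data: Vector[Vector[T]]) extends ToyRDD[T] {
    def numPartitions: Int = data.size
    def compute(partition: Int): Seq[T] = data(partition)
  }

  final case class FlatMappedRDD[A, B](parent: ToyRDD[A], f: A => Seq[B]) extends ToyRDD[B] {
    def numPartitions: Int = parent.numPartitions
    def compute(partition: Int): Seq[B] = parent.compute(partition).flatMap(f)
  }

  final case class MappedRDD[A, B](parent: ToyRDD[A], f: A => B) extends ToyRDD[B] {
    def numPartitions: Int = parent.numPartitions
    def compute(partition: Int): Seq[B] = parent.compute(partition).map(f)
  }

  // A two-partition "file" and the first steps of the word count above.
  val lines = SourceRDD(Vector(Vector("a b", "b"), Vector("c a")))
  val words = FlatMappedRDD(lines, (s: String) => s.split(" ").toSeq)
  val pairs = MappedRDD(words, (w: String) => (w, 1))

  // Pretend every partition was computed and kept, then partition 1 is lost.
  val stored: mutable.Map[Int, Seq[(String, Int)]] =
    mutable.Map((0 until pairs.numPartitions).map(p => p -> pairs.compute(p)): _*)
  stored.remove(1)

  // Recovery replays the recorded transformations for the lost partition only.
  val recovered: Seq[(String, Int)] = pairs.compute(1)
  stored(1) = recovered

  def main(args: Array[String]): Unit =
    println(s"recovered partition 1: $recovered")
}
```

Partition 0 is never touched during recovery. Only the chain of functions leading to partition 1 is re-run.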

2. RDD Dependencies

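A dependency is simply the relationship between two adjacent RDDs: a child RDD and its direct parent. toDebugString prints the whole lineage, whereas rdd.dependencies returns only these direct dependencies.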

package com.atguigu.bigdata.spark.core.rdd.dep

import org.apache.spark.{SparkConf, SparkContext}
import org.apache.spark.rdd.RDD

// Named Spark02 so it does not clash with Spark01_RDD_Dep in the same package
object Spark02_RDD_Dep {

  def main(args: Array[String]): Unit = {
    val sparkConf = new SparkConf().setMaster("local").setAppName("WordCount")
    val sc = new SparkContext(sparkConf)

    // dependencies lists only the RDD's direct dependencies on its parent RDD(s)
    val lines: RDD[String] = sc.textFile("datas/word.txt")
    println(lines.dependencies)
    println("**********************************")

    val words: RDD[String] = lines.flatMap(_.split(" "))
    println(words.dependencies) // a OneToOneDependency (narrow)
    println("**********************************")

    val wordToOne: RDD[(String, Int)] = words.map(word => (word, 1))

    val wordGroup: RDD[((String, Int), Iterable[(String, Int)])] = wordToOne.groupBy(word => word)
    println(wordGroup.dependencies) // a ShuffleDependency (wide)
    println("**********************************")

    // Add up the 1s in each group to get (word, count)
    val wordToCount: RDD[(String, Int)] = wordGroup.map {
      case (_, list) => list.reduce((t1, t2) => (t1._1, t1._2 + t2._2))
    }
    println(wordToCount.dependencies)
    println("**********************************")

    val array = wordToCount.collect()
    array.foreach(println)

    // Close the Spark connection
    sc.stop()
  }
}
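Here is a toy model that makes the "adjacent RDDs" idea concrete for the word-count pipeline above. `AdjacentDependencyDemo`, `Dep`, `NarrowDep`, `ShuffleDep` and `Node` are hypothetical types, not Spark classes. Each node lists only its dependencies on its direct parents, which is the role `rdd.dependencies` plays. Following those edges one hop at a time recovers the whole lineage.

The model is simplified. In real Spark, `textFile` and `groupBy` insert extra internal RDDs (for example, `textFile` is backed by a `HadoopRDD`), and these are omitted here.

```scala
object AdjacentDependencyDemo {
  // Toy stand-ins, NOT Spark classes.
  sealed trait Dep { def parent: Node }
  final case class NarrowDep(parent: Node) extends Dep
  final case class ShuffleDep(parent: Node) extends Dep
  final case class Node(name: String, deps: List[Dep] = Nil)

  // The word-count pipeline, one node per named RDD in the code above.
  val lines = Node("lines")
  val words = Node("words", List(NarrowDep(lines)))
  val wordToOne = Node("wordToOne", List(NarrowDep(words)))
  val wordGroup = Node("wordGroup", List(ShuffleDep(wordToOne)))
  val wordToCount = Node("wordToCount", List(NarrowDep(wordGroup)))

  // Each string describes one dependency, i.e. one child/direct-parent pair;
  // recursing into the parent walks the rest of the lineage.
  def edges(node: Node): List[String] =
    node.deps.flatMap { d =>
      val kind = d match {
        case _: NarrowDep  => "narrow"
        case _: ShuffleDep => "shuffle"
      }
      s"${node.name} <-$kind- ${d.parent.name}" :: edges(d.parent)
    }

  def main(args: Array[String]): Unit =
    edges(wordToCount).foreach(println)
}
```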

3. RDD Narrow Dependencies

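A narrow dependency means that each partition of the parent (upstream) RDD is used by at most one partition of the child (downstream) RDD. Figuratively, a narrow dependency is like an only child. OneToOneDependency is one kind of narrow dependency in the Spark source: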

class OneToOneDependency[T](rdd: RDD[T]) extends NarrowDependency[T](rdd)
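The "at most one child partition" rule can be checked mechanically. Spark's `NarrowDependency` exposes `getParents(partitionId)`, and `OneToOneDependency` implements it as `List(partitionId)`. The sketch below copies that one-line mapping into a plain function and inverts it, showing which child partitions read each parent partition. The names `NarrowDependencyDemo`, `childrenOf` and `isNarrow` are hypothetical.

```scala
object NarrowDependencyDemo {
  // Copy of OneToOneDependency's mapping: child partition i reads parent partition i.
  def oneToOneParents(childPartition: Int): List[Int] = List(childPartition)

  // Invert a getParents-style mapping: parent partition -> child partitions using it.
  def childrenOf(numChildPartitions: Int, getParents: Int => List[Int]): Map[Int, List[Int]] =
    (0 until numChildPartitions).toList
      .flatMap(child => getParents(child).map(parent => parent -> child))
      .groupBy(_._1)
      .map { case (parent, pairs) => parent -> pairs.map(_._2) }

  // Narrow: no parent partition is read by more than one child partition.
  def isNarrow(usage: Map[Int, List[Int]]): Boolean = usage.values.forall(_.size <= 1)

  def main(args: Array[String]): Unit = {
    val usage = childrenOf(3, oneToOneParents)
    println(s"$usage narrow=${isNarrow(usage)}")
  }
}
```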

4. RDD Wide Dependencies

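A wide dependency means that one partition of the parent (upstream) RDD is depended on by multiple partitions of the child (downstream) RDD, which triggers a shuffle. Figuratively, a wide dependency is like a family with several children. The Spark source represents it as ShuffleDependency: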

class ShuffleDependency[K: ClassTag, V: ClassTag, C: ClassTag](
    @transient private val _rdd: RDD[_ <: Product2[K, V]],
    val partitioner: Partitioner,
    val serializer: Serializer = SparkEnv.get.serializer,
    val keyOrdering: Option[Ordering[K]] = None,
    val aggregator: Option[Aggregator[K, V, C]] = None,
    val mapSideCombine: Boolean = false)
  extends Dependency[Product2[K, V]]

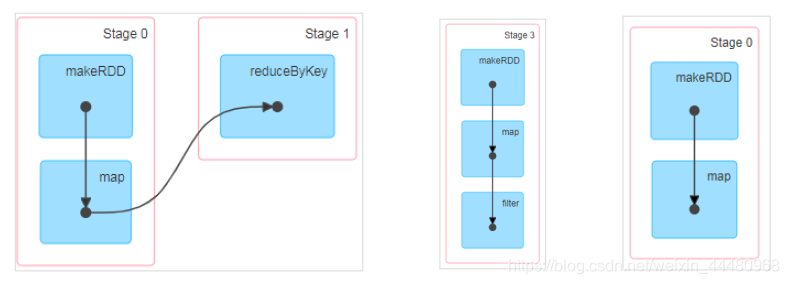
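Hash partitioning shows why a wide dependency fans out. The function below follows the rule used by Spark's `HashPartitioner`: a non-negative `key.hashCode` modulo the number of partitions. It is a standalone sketch, and `ShuffleDemo`, `partitionFor` and `fanOut` are hypothetical names.

With two child partitions, `"a"` (hashCode 97) goes to partition 1 and `"b"` (hashCode 98) goes to partition 0. So the parent partition holding both keys sends records to two child partitions, which is the one-to-many pattern that forces a shuffle.

```scala
object ShuffleDemo {
  // Same rule as HashPartitioner: non-negative key.hashCode modulo numPartitions.
  def partitionFor(key: Any, numPartitions: Int): Int = {
    val raw = key.hashCode % numPartitions
    if (raw < 0) raw + numPartitions else raw
  }

  // For each parent partition, the set of child partitions its records go to.
  def fanOut(parent: Vector[Seq[String]], numChild: Int): Vector[Set[Int]] =
    parent.map(records => records.map(key => partitionFor(key, numChild)).toSet)

  def main(args: Array[String]): Unit = {
    val parent = Vector(Seq("a", "b"), Seq("a", "c"))
    // Parent partition 0 feeds two child partitions, so the dependency is wide.
    println(fanOut(parent, 2))
  }
}
```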

5. RDD Stage Division

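A DAG (Directed Acyclic Graph) is a topological graph made of vertices and edges. Its edges have a direction, and it contains no cycles. In Spark, the DAG records the sequence of RDD transformations and the stages of a job.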

RDD stage-division source code (excerpts from Spark's DAGScheduler):

try {
  // New stage creation may throw an exception if, for example, jobs are run on a
  // HadoopRDD whose underlying HDFS files have been deleted.
  finalStage = createResultStage(finalRDD, func, partitions, jobId, callSite)
} catch {
  case e: Exception =>
    logWarning("Creating new stage failed due to exception - job: " + jobId, e)
    listener.jobFailed(e)
    return
}

……

private def createResultStage(
    rdd: RDD[_],
    func: (TaskContext, Iterator[_]) => _,
    partitions: Array[Int],
    jobId: Int,
    callSite: CallSite): ResultStage = {
  val parents = getOrCreateParentStages(rdd, jobId)
  val id = nextStageId.getAndIncrement()
  val stage = new ResultStage(id, rdd, func, partitions, parents, jobId, callSite)
  stageIdToStage(id) = stage
  updateJobIdStageIdMaps(jobId, stage)
  stage
}

……

private def getOrCreateParentStages(rdd: RDD[_], firstJobId: Int): List[Stage] = {
  getShuffleDependencies(rdd).map { shuffleDep =>
    getOrCreateShuffleMapStage(shuffleDep, firstJobId)
  }.toList
}

……

private[scheduler] def getShuffleDependencies(
    rdd: RDD[_]): HashSet[ShuffleDependency[_, _, _]] = {
  val parents = new HashSet[ShuffleDependency[_, _, _]]
  val visited = new HashSet[RDD[_]]
  val waitingForVisit = new Stack[RDD[_]]
  waitingForVisit.push(rdd)
  while (waitingForVisit.nonEmpty) {
    val toVisit = waitingForVisit.pop()
    if (!visited(toVisit)) {
      visited += toVisit
      toVisit.dependencies.foreach {
        case shuffleDep: ShuffleDependency[_, _, _] =>
          parents += shuffleDep
        case dependency =>
          waitingForVisit.push(dependency.rdd)
      }
    }
  }
  parents
}

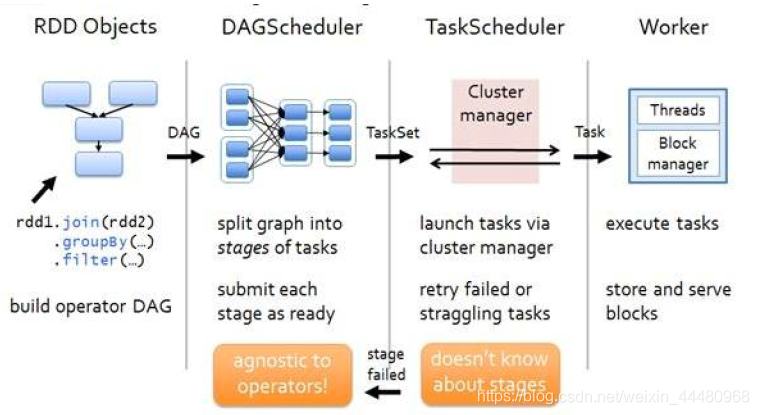
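The traversal in getShuffleDependencies above can be replayed on a toy graph. The sketch below keeps the same visited set and stack of nodes to visit, and stops at each shuffle dependency. It then recurses past those shuffles to count one ShuffleMapStage per distinct shuffle dependency, plus one final ResultStage. `StageCountDemo` and its `Node`/`Dep` types are hypothetical, not Spark classes. The sketch ignores stage reuse across jobs and everything else the real DAGScheduler tracks.

```scala
import scala.collection.mutable

object StageCountDemo {
  // Toy stand-ins, NOT Spark classes.
  sealed trait Dep { def rdd: Node }
  final case class NarrowDep(rdd: Node) extends Dep
  final case class ShuffleDep(shuffleId: Int, rdd: Node) extends Dep
  final case class Node(name: String, deps: List[Dep] = Nil)

  // Mirrors getShuffleDependencies: walk back through narrow dependencies and
  // collect the nearest shuffle dependency on every path.
  def shuffleDependencies(rdd: Node): Set[ShuffleDep] = {
    val parents = mutable.Set.empty[ShuffleDep]
    val visited = mutable.Set.empty[Node]
    var waitingForVisit: List[Node] = List(rdd) // a List used as a stack
    while (waitingForVisit.nonEmpty) {
      val toVisit = waitingForVisit.head
      waitingForVisit = waitingForVisit.tail
      if (!visited(toVisit)) {
        visited += toVisit
        toVisit.deps.foreach {
          case shuffleDep: ShuffleDep => parents += shuffleDep
          case dependency             => waitingForVisit = dependency.rdd :: waitingForVisit
        }
      }
    }
    parents.toSet
  }

  // One ShuffleMapStage per distinct shuffle dependency reachable from the
  // final RDD, plus one ResultStage.
  def countStages(finalRdd: Node): Int = {
    def collect(rdd: Node, seen: Set[ShuffleDep]): Set[ShuffleDep] =
      shuffleDependencies(rdd).foldLeft(seen) { (acc, dep) =>
        if (acc(dep)) acc else collect(dep.rdd, acc + dep)
      }
    collect(finalRdd, Set.empty).size + 1
  }

  // The word-count lineage: a single shuffle, introduced by groupBy.
  val wordToOne = Node("wordToOne", List(NarrowDep(Node("words", List(NarrowDep(Node("lines")))))))
  val wordToCount = Node("wordToCount", List(NarrowDep(Node("wordGroup", List(ShuffleDep(0, wordToOne))))))

  def main(args: Array[String]): Unit =
    println(s"stages for word count: ${countStages(wordToCount)}")
}
```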

6. RDD Task Division

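RDD task division has four levels: Application, Job, Stage, and Task.

- Application: initializing one SparkContext creates one Application;

- Job: each action operator generates one Job;

- Stage: the number of Stages in a Job equals the number of wide dependencies (ShuffleDependency) plus 1, the extra one being the final ResultStage;

- Task: within a Stage, the number of partitions of the last RDD equals the number of Tasks.

Note: in Application -> Job -> Stage -> Task, each level has a one-to-many (1:n) relationship with the next.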
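The counting rules in the list above can be turned into a small worked example. `TaskDivisionDemo`, `JobShape` and `AppShape` are hypothetical descriptions, not Spark classes, and the partition counts are assumed values. Each job is described only by the partition count of the last RDD in each of its stages.

```scala
object TaskDivisionDemo {
  // Hypothetical job description (not a Spark class): the partition count of the
  // last RDD in each stage, ShuffleMapStages first and the ResultStage last.
  final case class JobShape(lastRddPartitionsPerStage: List[Int]) {
    def numStages: Int = lastRddPartitionsPerStage.size // = shuffle dependencies + 1
    def numTasks: Int = lastRddPartitionsPerStage.sum   // one task per partition
  }

  // Hypothetical application description: one SparkContext, one JobShape per action.
  final case class AppShape(jobs: List[JobShape]) {
    def numJobs: Int = jobs.size
    def numStages: Int = jobs.map(_.numStages).sum
    def numTasks: Int = jobs.map(_.numTasks).sum
  }

  // Assumed counts: a word-count job (one shuffle, 2 partitions on each side)
  // and a second action on an RDD with 2 partitions and no shuffle.
  val app = AppShape(List(JobShape(List(2, 2)), JobShape(List(2))))

  def main(args: Array[String]): Unit =
    println(s"jobs=${app.numJobs} stages=${app.numStages} tasks=${app.numTasks}")
}
```

Under these assumptions, the two actions give 2 Jobs, the one shuffle adds a Stage to the first Job for 3 Stages in total, and the partition counts add up to 6 Tasks.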

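RDD task-division source code (excerpts from DAGScheduler.submitMissingTasks and ShuffleMapStage.findMissingPartitions):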

val tasks: Seq[Task[_]] = try {
  stage match {
    case stage: ShuffleMapStage =>
      partitionsToCompute.map { id =>
        val locs = taskIdToLocations(id)
        val part = stage.rdd.partitions(id)
        new ShuffleMapTask(stage.id, stage.latestInfo.attemptId,
          taskBinary, part, locs, stage.latestInfo.taskMetrics, properties,
          Option(jobId), Option(sc.applicationId), sc.applicationAttemptId)
      }

    case stage: ResultStage =>
      partitionsToCompute.map { id =>
        val p: Int = stage.partitions(id)
        val part = stage.rdd.partitions(p)
        val locs = taskIdToLocations(id)
        new ResultTask(stage.id, stage.latestInfo.attemptId,
          taskBinary, part, locs, id, properties, stage.latestInfo.taskMetrics,
          Option(jobId), Option(sc.applicationId), sc.applicationAttemptId)
      }
  }

……

val partitionsToCompute: Seq[Int] = stage.findMissingPartitions()

……

override def findMissingPartitions(): Seq[Int] = {
  mapOutputTrackerMaster
    .findMissingPartitions(shuffleDep.shuffleId)
    .getOrElse(0 until numPartitions)
}
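The `getOrElse` in findMissingPartitions decides what gets recomputed. If the tracker knows the shuffle, only the map partitions without registered output are resubmitted. Otherwise every partition is. The sketch below imitates that decision using `ToyOutputTracker`, a hypothetical stand-in for MapOutputTrackerMaster that does not model its real internals. `MissingPartitionsDemo` and `missingFor` are likewise hypothetical names.

```scala
object MissingPartitionsDemo {
  // Hypothetical stand-in for MapOutputTrackerMaster: for each shuffleId, whether
  // each map partition has registered its output.
  final class ToyOutputTracker(outputs: Map[Int, Vector[Boolean]]) {
    // None means the shuffle is unknown to the tracker.
    def findMissingPartitions(shuffleId: Int): Option[Seq[Int]] =
      outputs.get(shuffleId).map(done => done.indices.filterNot(i => done(i)))
  }

  // Same decision as ShuffleMapStage.findMissingPartitions above.
  def missingFor(tracker: ToyOutputTracker, shuffleId: Int, numPartitions: Int): Seq[Int] =
    tracker.findMissingPartitions(shuffleId).getOrElse(0 until numPartitions)

  def main(args: Array[String]): Unit = {
    val tracker = new ToyOutputTracker(Map(0 -> Vector(true, false, true)))
    println(missingFor(tracker, 0, 3)) // known shuffle: only partition 1 is missing
    println(missingFor(tracker, 7, 3)) // unknown shuffle: every partition is computed
  }
}
```

Each returned partition id then becomes one ShuffleMapTask, following the `partitionsToCompute.map` in the excerpt above.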