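I. Concepts

1. Heartbeat

On a long-lived TCP connection, a "heartbeat packet" is a special packet that the client and server exchange at regular intervals. It tells each side that the other is still online and confirms that the TCP connection is still usable.

2. Why heartbeats are needed

- The client process crashes or the network drops, so one side abandons the TCP connection without the other being told.

- A firewall kills the TCP connection.

Firewalls usually sit between two machines, and a firewall policy can drop a socket connection at any moment. When it does, the four-way close handshake may never happen, so neither the server nor the client is notified. Each side still believes the connection is usable and only finds out that it is not when it next tries to send data.

These unpredictable causes of a broken long-lived connection (client-side causes are the most common) are why heartbeat packets are used to confirm that both sides are online. When the server learns that a client has dropped the connection, it can promptly release the memory and other resources tied to it. When the client learns that the connection is gone, it can decide whether to reconnect.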
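The bookkeeping behind "confirm the peer is online, otherwise release its resources" can be sketched in plain Java without any networking. `HeartbeatMonitor`, its method names, and the string peer IDs are hypothetical and exist only for illustration; timestamps are passed in explicitly so the logic is easy to follow.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

/** Hypothetical helper: tracks when each peer was last heard from. */
final class HeartbeatMonitor {
    private final long timeoutMillis;
    private final Map<String, Long> lastSeen = new ConcurrentHashMap<>();

    HeartbeatMonitor(long timeoutMillis) {
        this.timeoutMillis = timeoutMillis;
    }

    /** Record a heartbeat (or any other data) from the given peer. */
    void onMessage(String peerId, long nowMillis) {
        lastSeen.put(peerId, nowMillis);
    }

    /** A peer counts as alive only if it was heard from within the timeout. */
    boolean isAlive(String peerId, long nowMillis) {
        Long seen = lastSeen.get(peerId);
        return seen != null && nowMillis - seen <= timeoutMillis;
    }

    /** Drop the records of peers that have been silent too long; returns how many were dropped. */
    int evictDead(long nowMillis) {
        int before = lastSeen.size();
        lastSeen.values().removeIf(seen -> nowMillis - seen > timeoutMillis);
        return before - lastSeen.size();
    }
}
```

In a real server the eviction step is where the connection would be closed and its buffers freed; here it only removes the timestamp.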

II. Annotated demo

import io.netty.bootstrap.ServerBootstrap;
import io.netty.channel.ChannelFuture;
import io.netty.channel.ChannelInitializer;
import io.netty.channel.ChannelPipeline;
import io.netty.channel.EventLoopGroup;
import io.netty.channel.nio.NioEventLoopGroup;
import io.netty.channel.socket.SocketChannel;
import io.netty.channel.socket.nio.NioServerSocketChannel;
import io.netty.handler.codec.string.StringDecoder;
import io.netty.handler.codec.string.StringEncoder;
import io.netty.handler.timeout.IdleStateHandler;

public class HeartBeatServer {

    public static void main(String[] args) throws Exception {
        EventLoopGroup boss = new NioEventLoopGroup();
        EventLoopGroup worker = new NioEventLoopGroup();
        try {
            ServerBootstrap bootstrap = new ServerBootstrap();
            bootstrap.group(boss, worker)
                    .channel(NioServerSocketChannel.class)
                    .childHandler(new ChannelInitializer<SocketChannel>() {
                        @Override
                        protected void initChannel(SocketChannel ch) throws Exception {
                            ChannelPipeline pipeline = ch.pipeline();
                            // No frame decoder is installed, so this demo assumes that each
                            // write arrives as exactly one decoded String.
                            pipeline.addLast("decoder", new StringDecoder());
                            pipeline.addLast("encoder", new StringEncoder());
                            // readerIdleTime = 3: if no data is read from the client for 3 seconds,
                            // IdleStateHandler fires an IdleStateEvent to the next handler, which
                            // must override userEventTriggered() to handle it.
                            pipeline.addLast(new IdleStateHandler(3, 0, 0));
                            pipeline.addLast(new HeartBeatServerHandler());
                        }
                    });
            System.out.println("netty server start ...");
            ChannelFuture future = bootstrap.bind(9000).sync();
            future.channel().closeFuture().sync();
        } catch (Exception e) {
            e.printStackTrace();
        } finally {
            worker.shutdownGracefully();
            boss.shutdownGracefully();
        }
    }
}

import io.netty.channel.ChannelFutureListener;
import io.netty.channel.ChannelHandlerContext;
import io.netty.channel.SimpleChannelInboundHandler;
import io.netty.handler.timeout.IdleStateEvent;

public class HeartBeatServerHandler extends SimpleChannelInboundHandler<String> {

    // Number of read-idle timeouts seen on this connection.
    // It is cumulative: this demo never resets it when data arrives.
    int readIdleTimes;

    @Override
    protected void channelRead0(ChannelHandlerContext ctx, String s) throws Exception {
        System.out.println(" ====== > [server] message received : " + s);
        if ("Heartbeat Packet".equals(s)) {
            // Heartbeat packet: reply "ok" to the client
            ctx.channel().writeAndFlush("ok");
        } else {
            System.out.println("handling other messages ... ");
        }
    }

    @Override
    public void userEventTriggered(ChannelHandlerContext ctx, Object evt) throws Exception {
        // Other user events may pass through the pipeline; only handle idle events here.
        if (!(evt instanceof IdleStateEvent)) {
            super.userEventTriggered(ctx, evt);
            return;
        }
        IdleStateEvent event = (IdleStateEvent) evt;

        String eventType = null;
        switch (event.state()) {
            case READER_IDLE:
                eventType = "read idle";
                readIdleTimes++; // one more read-idle timeout
                break;
            case WRITER_IDLE:
                eventType = "write idle";
                // not handled
                break;
            case ALL_IDLE:
                eventType = "read/write idle";
                // not handled
                break;
        }

        System.out.println(ctx.channel().remoteAddress() + " timeout event: " + eventType);
        if (readIdleTimes > 3) {
            // Even after more than 3 timeouts the TCP connection is not necessarily dead.
            // Network congestion in transit can also make the server wrongly conclude that it is.
            System.out.println(" [server] read idle more than 3 times, closing the connection to free resources");
            // Try to send a closing message first, then close once the write has completed
            ctx.channel().writeAndFlush("idle close").addListener(ChannelFutureListener.CLOSE);
        }
    }

    @Override
    public void channelActive(ChannelHandlerContext ctx) throws Exception {
        System.err.println("=== " + ctx.channel().remoteAddress() + " is active ===");
        // Once the channel is ready, start the read-idle count at 0
        readIdleTimes = 0;
    }
}
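The handler above counts every read-idle event over the lifetime of the connection. A stricter variant counts only consecutive misses and resets whenever data arrives. The sketch below is a hypothetical helper in plain Java, not part of Netty or of the demo; note that with the demo client, whose pauses never exceed 9 seconds, such a rule would never reach four consecutive 3-second timeouts.

```java
/** Hypothetical helper, not part of Netty: counts consecutive read-idle events. */
final class MissedHeartbeatCounter {
    private final int maxMissed;
    private int missed;

    MissedHeartbeatCounter(int maxMissed) {
        this.maxMissed = maxMissed;
    }

    /** Intended to be called when data is read: any inbound data proves the peer is alive. */
    void onRead() {
        missed = 0;
    }

    /** Intended to be called on each READER_IDLE event; returns true once the limit is exceeded. */
    boolean onReaderIdle() {
        missed++;
        return missed > maxMissed;
    }
}
```

No synchronization is used because the sketch assumes one counter per connection, touched by a single thread, which is how a per-channel handler field would typically be accessed.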

import io.netty.bootstrap.Bootstrap;
import io.netty.channel.*;
import io.netty.channel.nio.NioEventLoopGroup;
import io.netty.channel.socket.SocketChannel;
import io.netty.channel.socket.nio.NioSocketChannel;
import io.netty.handler.codec.string.StringDecoder;
import io.netty.handler.codec.string.StringEncoder;

import java.util.Random;

public class HeartBeatClient {

    public static void main(String[] args) throws Exception {
        EventLoopGroup eventLoopGroup = new NioEventLoopGroup();
        try {
            Bootstrap bootstrap = new Bootstrap();
            bootstrap.group(eventLoopGroup).channel(NioSocketChannel.class)
                    .handler(new ChannelInitializer<SocketChannel>() {
                        @Override
                        protected void initChannel(SocketChannel ch) throws Exception {
                            ChannelPipeline pipeline = ch.pipeline();
                            pipeline.addLast("decoder", new StringDecoder());
                            pipeline.addLast("encoder", new StringEncoder());
                            pipeline.addLast(new HeartBeatClientHandler());
                        }
                    });

            System.out.println("netty client start ...");
            Channel channel = bootstrap.connect("127.0.0.1", 9000).sync().channel();
            String text = "Heartbeat Packet";
            Random random = new Random();
            while (channel.isActive()) {
                // Sleep 0 to 9 seconds, so some gaps exceed the server's 3-second read timeout
                int num = random.nextInt(10);
                Thread.sleep(num * 1000);
                channel.writeAndFlush(text); // simulate sending a heartbeat packet
            }
        } catch (Exception e) {
            e.printStackTrace();
        } finally {
            eventLoopGroup.shutdownGracefully();
        }
    }

    static class HeartBeatClientHandler extends SimpleChannelInboundHandler<String> {

        @Override
        protected void channelRead0(ChannelHandlerContext ctx, String msg) throws Exception {
            System.out.println("client received :" + msg);
            if ("idle close".equals(msg)) {
                System.out.println(" server closed the connection, closing the client too");
                // close() actually closes the channel; closeFuture() only returns a future
                ctx.channel().close();
            }
        }
    }
}

III. Key source code behind Netty's heartbeat mechanism

1. The IdleStateHandler constructor

public IdleStateHandler(int readerIdleTimeSeconds, int writerIdleTimeSeconds, int allIdleTimeSeconds) {
    this((long)readerIdleTimeSeconds, (long)writerIdleTimeSeconds, (long)allIdleTimeSeconds, TimeUnit.SECONDS);
}

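- readerIdleTimeSeconds: read timeout. If no data is read from the Channel within this interval, an IdleStateEvent with state READER_IDLE is fired.

- writerIdleTimeSeconds: write timeout. If no data is written to the Channel within this interval, an IdleStateEvent with state WRITER_IDLE is fired.

- allIdleTimeSeconds: read/write timeout. If neither a read nor a write happens within this interval, an IdleStateEvent with state ALL_IDLE is fired.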
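The three parameters can be read as three independent rules. The following plain-Java sketch restates them as a single function; `IdleRules`, its `Idle` enum, and the plain `long` timestamps are hypothetical names for illustration and are not Netty types. It assumes that a value of 0 switches a rule off, which matches the `> 0` checks in IdleStateHandler's initialize() and the demo's (3, 0, 0).

```java
import java.util.EnumSet;
import java.util.Set;

/** Hypothetical restatement of the three idle rules; not Netty code. */
final class IdleRules {
    enum Idle { READER_IDLE, WRITER_IDLE, ALL_IDLE }

    /** All arguments use the same time unit. A threshold of 0 disables that rule. */
    static Set<Idle> check(long now, long lastRead, long lastWrite,
                           long readerIdle, long writerIdle, long allIdle) {
        Set<Idle> result = EnumSet.noneOf(Idle.class);
        if (readerIdle > 0 && now - lastRead >= readerIdle) {
            result.add(Idle.READER_IDLE);
        }
        if (writerIdle > 0 && now - lastWrite >= writerIdle) {
            result.add(Idle.WRITER_IDLE);
        }
        // "All idle" is measured from the most recent read or write, whichever is later.
        if (allIdle > 0 && now - Math.max(lastRead, lastWrite) >= allIdle) {
            result.add(Idle.ALL_IDLE);
        }
        return result;
    }
}
```

With the demo's settings, a connection whose last read was 4 seconds ago reports only READER_IDLE, no matter how recently the server wrote to it.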

2. A bold guess

Before reading the source, let's make a bold guess.

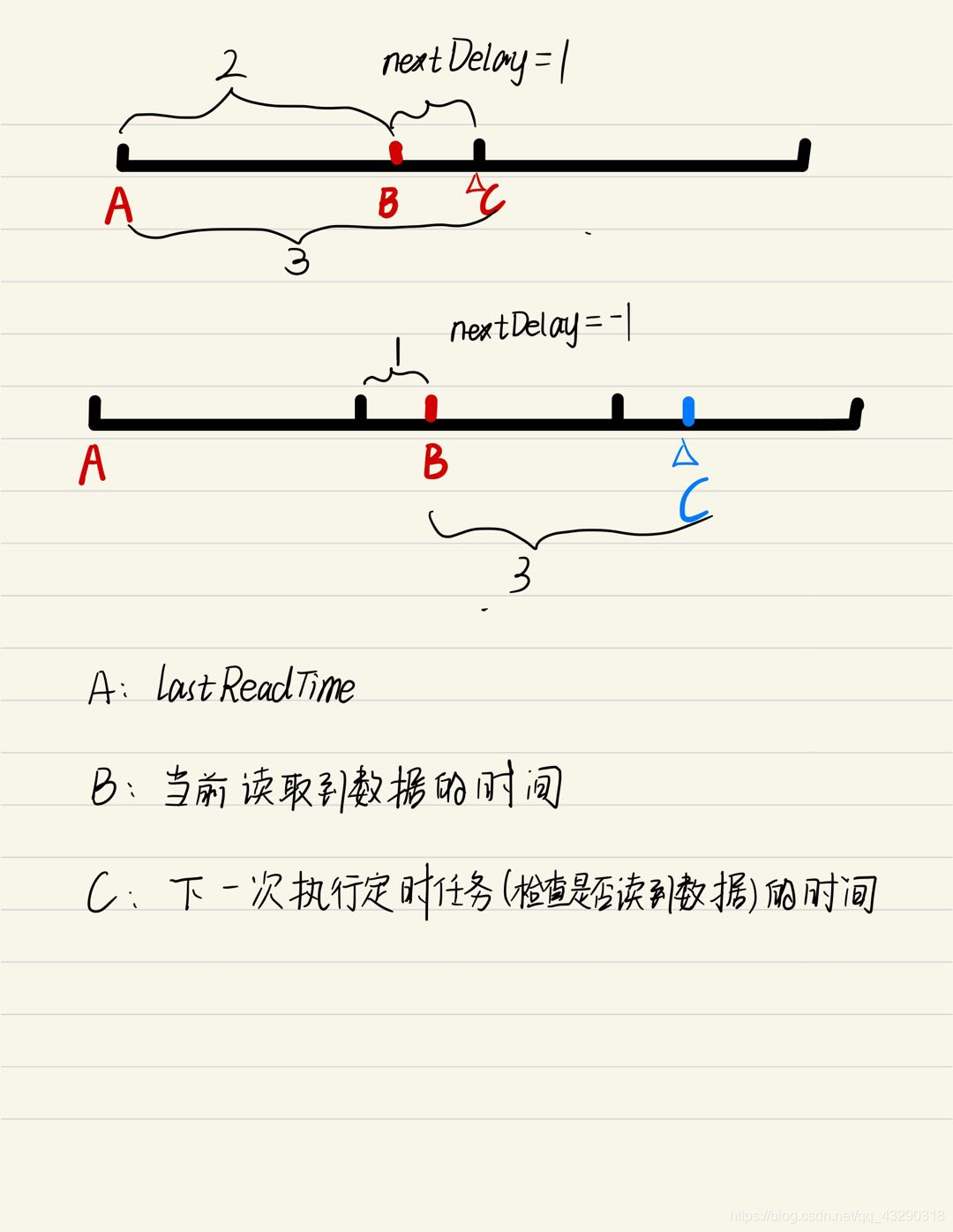

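The demo sets the read timeout to 3 seconds, so Netty has to check roughly every 3 seconds whether any data (a heartbeat packet or business data) has been read from the channel. Clearly that calls for some kind of timer, and it is easy to guess that the timer must be set up once the channel is ready. So we look at channelActive() in IdleStateHandler, and there we find the core call: initialize().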

@Override
public void channelActive(ChannelHandlerContext ctx) throws Exception {
    // This method will be invoked only if this handler was added
    // before channelActive() event is fired. If a user adds this handler
    // after the channelActive() event, initialize() will be called by beforeAdd().
    initialize(ctx); // the key call
    super.channelActive(ctx);
}

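3. initialize() in IdleStateHandler

The source shows that Netty does not use a fixed-period timer. Instead it uses one-shot delayed tasks that schedule themselves again each time they run. This looks like recursion, but each run is a fresh scheduled call rather than a nested one, and it lets every run choose the delay for the next, which makes the timing more flexible.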

private void initialize(ChannelHandlerContext ctx) {
    // Avoid the case where destroy() is called before scheduling timeouts.
    // See: https://github.com/netty/netty/issues/143
    switch (state) {
    case 1:
    case 2:
        return;
    }

    state = 1;
    initOutputChanged(ctx);

    lastReadTime = lastWriteTime = ticksInNanos();
    if (readerIdleTimeNanos > 0) {
        // The key call: schedule the first read-idle check
        readerIdleTimeout = schedule(ctx, new ReaderIdleTimeoutTask(ctx),
                readerIdleTimeNanos, TimeUnit.NANOSECONDS);
    }
    if (writerIdleTimeNanos > 0) {
        writerIdleTimeout = schedule(ctx, new WriterIdleTimeoutTask(ctx),
                writerIdleTimeNanos, TimeUnit.NANOSECONDS);
    }
    if (allIdleTimeNanos > 0) {
        allIdleTimeout = schedule(ctx, new AllIdleTimeoutTask(ctx),
                allIdleTimeNanos, TimeUnit.NANOSECONDS);
    }
}

/**
 * This method is visible for testing!
 */
ScheduledFuture<?> schedule(ChannelHandlerContext ctx, Runnable task, long delay, TimeUnit unit) {
    return ctx.executor().schedule(task, delay, unit);
}
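The "one-shot task that re-arms itself" pattern can be tried outside Netty with the JDK's ScheduledExecutorService. This is only a sketch of the pattern, not Netty's implementation: `SelfReschedulingTask`, its fixed delay, and the run counter are hypothetical, and the counter exists only so that the example terminates.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;

/** Hypothetical illustration: a one-shot task that re-arms itself instead of a fixed-rate timer. */
final class SelfReschedulingTask implements Runnable {
    private final ScheduledExecutorService executor;
    private final long delayMillis;
    private final CountDownLatch remainingRuns;

    SelfReschedulingTask(ScheduledExecutorService executor, long delayMillis, int runs) {
        this.executor = executor;
        this.delayMillis = delayMillis;
        this.remainingRuns = new CountDownLatch(runs);
    }

    /** Schedule the first run; every later run is scheduled by the task itself. */
    void start() {
        executor.schedule(this, delayMillis, TimeUnit.MILLISECONDS);
    }

    @Override
    public void run() {
        remainingRuns.countDown();
        if (remainingRuns.getCount() > 0) {
            // Each run decides when the next one happens, so the delay could differ per run.
            executor.schedule(this, delayMillis, TimeUnit.MILLISECONDS);
        }
    }

    /** Wait until all runs have happened; returns false on timeout or interruption. */
    boolean awaitCompletion(long timeout, TimeUnit unit) {
        try {
            return remainingRuns.await(timeout, unit);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        }
    }
}
```

A caller would create the task with an executor such as `Executors.newSingleThreadScheduledExecutor()`, call `start()`, and shut the executor down once `awaitCompletion` returns.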

4. The read-timeout task: ReaderIdleTimeoutTask

private final class ReaderIdleTimeoutTask extends AbstractIdleTask {

    ReaderIdleTimeoutTask(ChannelHandlerContext ctx) {
        super(ctx);
    }

    @Override
    protected void run(ChannelHandlerContext ctx) {
        // nextDelay: how long (in nanoseconds) to wait before this task should run again
        // lastReadTime: the time at which data was last read from the channel
        long nextDelay = readerIdleTimeNanos;
        if (!reading) {
            // Equivalent to: nextDelay = nextDelay - (ticksInNanos() - lastReadTime);
            // Take the demo's 3-second read timeout (values below are in seconds for readability).
            //
            // If (ticksInNanos() - lastReadTime) is 2, the last read happened 2 seconds ago.
            // That is less than the configured 3 seconds, so the 3-second deadline has not
            // been reached yet. The task should run again in 3 - 2 = 1 second: nextDelay = 1.
            //
            // If (ticksInNanos() - lastReadTime) is 4, the last read happened 4 seconds ago.
            // That is more than 3 seconds, so the deadline has already passed and nextDelay
            // is -1. The reader is idle: an event is fired and a new 3-second task is scheduled.
            nextDelay -= ticksInNanos() - lastReadTime;
        }

        if (nextDelay <= 0) {
            // Reader is idle - set a new timeout and notify the callback.
            // Re-arm the task with the full timeout (3 seconds in the demo)
            readerIdleTimeout = schedule(ctx, this, readerIdleTimeNanos, TimeUnit.NANOSECONDS);

            boolean first = firstReaderIdleEvent;
            firstReaderIdleEvent = false;

            try {
                IdleStateEvent event = newIdleStateEvent(IdleState.READER_IDLE, first);
                // Pass the read-idle event to userEventTriggered() of the handler
                // that follows IdleStateHandler in the pipeline
                channelIdle(ctx, event);
            } catch (Throwable t) {
                ctx.fireExceptionCaught(t);
            }
        } else {
            // Read occurred before the timeout - set a new timeout with shorter delay.
            // Run the task again after nextDelay nanoseconds
            readerIdleTimeout = schedule(ctx, this, nextDelay, TimeUnit.NANOSECONDS);
        }
    }
}

/**
 * Is called when an {@link IdleStateEvent} should be fired. This implementation calls
 * {@link ChannelHandlerContext#fireUserEventTriggered(Object)}.
 */
protected void channelIdle(ChannelHandlerContext ctx, IdleStateEvent evt) throws Exception {
    // Every fireXxx() method invokes Xxx() on the next handler in the pipeline
    // after the current one, so that it can continue processing the event
    ctx.fireUserEventTriggered(evt);
}
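The arithmetic inside ReaderIdleTimeoutTask can be checked in isolation. The sketch below pulls the `nextDelay` calculation into a hypothetical `ReaderIdleMath` class (not a Netty class) and ignores the `reading` flag, so it covers only the case where no read is in progress.

```java
/** Hypothetical extraction of the delay arithmetic; not Netty code. */
final class ReaderIdleMath {

    /** Time left until the read-idle deadline; zero or negative means the deadline has passed. */
    static long nextDelayNanos(long readerIdleTimeNanos, long nowNanos, long lastReadTimeNanos) {
        return readerIdleTimeNanos - (nowNanos - lastReadTimeNanos);
    }

    /** The reader is idle once the remaining delay has dropped to zero or below. */
    static boolean isIdle(long nextDelayNanos) {
        return nextDelayNanos <= 0;
    }

    /** Delay for re-arming: the full timeout after an idle event, else the time remaining. */
    static long rescheduleDelayNanos(long readerIdleTimeNanos, long nextDelayNanos) {
        return nextDelayNanos <= 0 ? readerIdleTimeNanos : nextDelayNanos;
    }
}
```

With a 3-second timeout, a last read 2 seconds ago leaves 1 second before the next check, while a last read 4 seconds ago gives -1 second, which counts as idle and re-arms the task for a full 3 seconds.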