Demo: http://wx0725.top/%E5%8E%86%E5%8F%B2%E4%B8%8A%E7%9A%84%E4%BB%8A%E5%A4%A9/

Data source, 历史上的今天 (Today in History): http://www.todayonhistory.com/

Main goal: learning how to write a web scraper.

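The code below isn't difficult (it was written by a beginner, after all). The time-consuming part was analyzing how the site serves its data.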

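The script below encodes what that analysis found: two request URLs per date. The first is the date's HTML page, which carries the first batch of events. The second is a JSON endpoint (`a=json_event`) that returns the remaining events page by page with `pagesize=40`. The minimal sketch below only builds both URLs. The helper names `day_page_url` and `json_page_url` are hypothetical; only the URL formats are taken from the script.

```python
# Hypothetical helpers: only the URL formats come from the scraper script.
BASE = 'http://www.todayonhistory.com'


def day_page_url(month, day):
    """HTML page holding the first batch of events for a date."""
    return '%s/%d/%d/' % (BASE, month, day)


def json_page_url(month, day, page, pagesize=40):
    """JSON endpoint returning further events for a date, one page at a time."""
    return ('%s/index.php?m=content&c=index&a=json_event'
            '&page=%d&pagesize=%d&month=%d&day=%d'
            % (BASE, page, pagesize, month, day))


print(day_page_url(4, 1))           # http://www.todayonhistory.com/4/1/
print(json_page_url(4, 1, page=2))
```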
```python
# encoding=utf8
# Environment: Python 3.5
import json
import os
import random

import requests
from bs4 import BeautifulSoup

host = 'www.todayonhistory.com'
Referer = 'http://www.todayonhistory.com'
accept = 'text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.9'

# A few browser User-Agent strings; every request picks one set of headers at random
user_agents = [
    'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/80.0.3987.163 Safari/537.36',
    'Mozilla/5.0 (Macintosh; U; Intel Mac OS X 10_6_8; en-us) AppleWebKit/534.50 (KHTML, like Gecko) Version/5.1 Safari/534.50',
    'Mozilla/5.0 (Windows; U; Windows NT 6.1; en-us) AppleWebKit/534.50 (KHTML, like Gecko) Version/5.1 Safari/534.50',
    'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_7_0) AppleWebKit/535.11 (KHTML, like Gecko) Chrome/17.0.963.56 Safari/535.11',
]
headers = [{'Referer': Referer, 'Host': host, 'User-Agent': ua, 'Accept': accept}
           for ua in user_agents]


def DownloadFile(content, save_url, file_name):
    """Write content to save_url/file_name, creating the folder if needed."""
    try:
        os.makedirs(save_url, exist_ok=True)
        file_path = os.path.join(save_url, file_name)
        with open(file_path, 'w', encoding='utf-8') as fd:
            fd.write(content + "\n")
    except OSError as e:
        print("Failed to write %s: %s" % (file_name, e))


def one(url):  # First, fetch the events shown on the date's own page
    response = requests.get(url, headers=random.choice(headers), timeout=10).content
    html = BeautifulSoup(response, 'html.parser')
    ul = html.find('ul', {'id': 'container'})
    lists = []
    for lis in ul.find_all('li'):
        if lis.find('span', {'class': 'moh'}) is None:  # not an event entry
            continue
        solaryear = lis.find('span', {'class': 'poh'}).find('b').text
        if lis.find('img') is None:  # entry without an image
            text = lis.find('div', {'class': 'text'})
            link = text.find('a')
            item = {'id': "", "url": link.attrs['href'], "title": link.attrs['title'], 'thumb': "",
                    'solaryear': solaryear, "description": text.find('p').text}
        else:  # entry with a thumbnail
            pica = lis.find('a', {'class': 'pica'})
            img = pica.find('img').attrs['src']
            if img.startswith('/'):  # turn a site-relative image path into a full URL
                img = Referer + img
            item = {'id': "", "url": pica.attrs['href'], "title": pica.attrs['title'], 'thumb': img,
                    'solaryear': solaryear, "description": pica.attrs['title']}
        lists.append(item)
    return lists


def more(url):  # Fetch the events beyond the date's page from the JSON API
    response = requests.get(url, headers=random.choice(headers), timeout=10)
    # .json() decodes the body; json.loads() on raw bytes only works from Python 3.6
    return response.json()


def get_FileSize(filePath):  # File size in bytes; only used while debugging
    fsize = os.path.getsize(filePath)
    # fsize = fsize / float(1024 * 1024)  # size in MB
    # print(fsize)
    return fsize
```

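`DownloadFile()` leaves one file per date, `./days/<month>/<day>.txt`, containing the JSON list that `main()` assembles for that date. The sketch below reads such a file back, for instance to feed a page like the demo. `load_day` is a hypothetical helper. The demo writes a placeholder record into a temporary directory rather than using real scraped data.

```python
import json
import os
import tempfile


def load_day(root, month, day):
    """Return the events saved for one date, or [] if that file does not exist.

    Assumes the layout written by main(): <root>/<month>/<day>.txt holding one JSON list.
    """
    path = os.path.join(root, str(month), '%d.txt' % day)
    if not os.path.exists(path):
        return []
    with open(path, encoding='utf-8') as fd:
        return json.load(fd)


if __name__ == '__main__':
    # Demo on a throwaway directory with a placeholder record (not real scraped data)
    with tempfile.TemporaryDirectory() as root:
        os.makedirs(os.path.join(root, '4'))
        with open(os.path.join(root, '4', '1.txt'), 'w', encoding='utf-8') as fd:
            fd.write(json.dumps([{'title': 'placeholder'}]) + '\n')
        print(load_day(root, 4, 1))  # [{'title': 'placeholder'}]
        print(load_day(root, 4, 2))  # []
```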
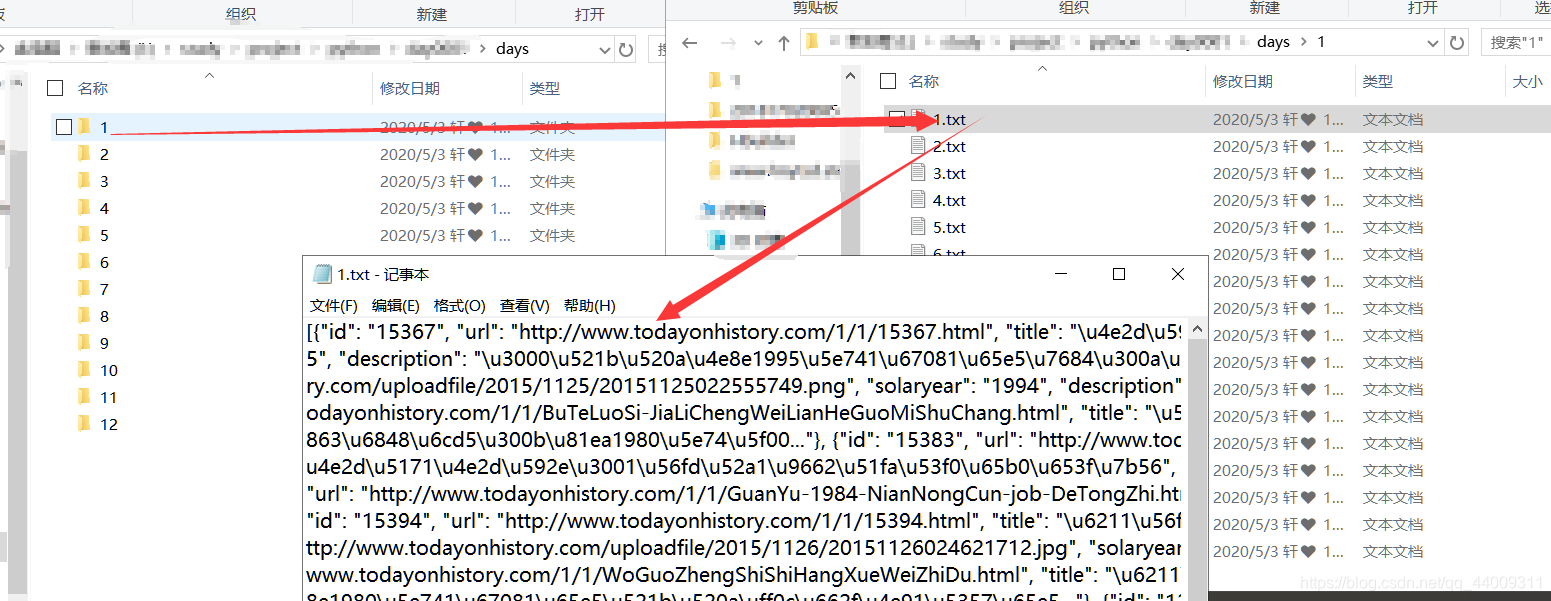

```python
def main():
    # Days per month, with 29 for February so that Feb 29 is scraped too
    arr = [31, 29, 31, 30, 31, 30, 31, 31, 30, 31, 30, 31]
    for m in range(1, 13):
        for d in range(1, arr[m - 1] + 1):
            print(m, d)
            # Resume check used while debugging: skip dates that are already saved
            # path = "./days/%d/%d.txt" % (m, d)
            # if os.path.exists(path) and get_FileSize(path) >= 20:
            #     continue
            url = 'http://www.todayonhistory.com/%d/%d/' % (m, d)
            result = one(url)  # events on the date's own page
            page = 1
            while True:  # then page through the JSON API until it returns 0
                url = "http://www.todayonhistory.com/index.php?m=content&c=index&a=json_event&page=%d&pagesize=40&month=%d&day=%d" % (page, m, d)
                thisday_more = more(url)
                if thisday_more == 0:
                    break
                result.extend(thisday_more)
                page += 1
            print(result)
            # ensure_ascii=False keeps the Chinese text readable in the UTF-8 file
            DownloadFile(json.dumps(result, ensure_ascii=False), "./days/%d" % m, "%d.txt" % d)


if __name__ == '__main__':
    main()  # start
```
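The paging loop in `main()` keeps requesting `json_event` pages until the endpoint answers `0`. The sketch below pulls that loop out into a generator so it can be exercised without the network. `iter_json_pages`, `fetch` and `fake_fetch` are hypothetical names, and `fake_fetch` stands in for `more()`. Stopping on an empty list as well as on `0` is my own assumption. The original only checks for `0`, so a page that came back as `[]` would make it keep requesting pages indefinitely.

```python
def iter_json_pages(fetch, month, day, start_page=1):
    """Yield the events for one date, page by page, until the API runs dry.

    fetch(month, day, page) must return the decoded JSON body for that page.
    main() stops when the body is 0; this sketch also stops on [] or None
    (an assumption, to avoid requesting pages forever).
    """
    page = start_page
    while True:
        batch = fetch(month, day, page)
        if not batch:  # 0, [] or None all mean "no more pages"
            return
        yield from batch
        page += 1


# Stand-in for more(): two pages of placeholder events, then the end marker 0
FAKE_PAGES = {1: [{'title': 'a'}, {'title': 'b'}], 2: [{'title': 'c'}]}


def fake_fetch(month, day, page):
    return FAKE_PAGES.get(page, 0)


print([e['title'] for e in iter_json_pages(fake_fetch, 4, 1)])  # ['a', 'b', 'c']
```

In the scraper, `fetch` would format the `json_event` URL for `(month, day, page)` and return `more(url)`. The events for a date would then be `one(...)` on the date's page URL plus `list(iter_json_pages(fetch, m, d))`.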

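`main()` hard-codes the month lengths and gives February 29 days so that Feb 29 is scraped too. As an alternative sketch, the standard `calendar` module can produce the same 366 (month, day) pairs from any leap year. The year 2000 is an arbitrary choice, and `all_month_days` is a hypothetical name.

```python
import calendar


def all_month_days(leap_year=2000):
    """Yield every (month, day) pair of the calendar, Feb 29 included.

    Any leap year works here; 2000 is an arbitrary choice.
    """
    for month in range(1, 13):
        # monthrange() returns (weekday of day 1, number of days in the month)
        _, ndays = calendar.monthrange(leap_year, month)
        for day in range(1, ndays + 1):
            yield month, day


dates = list(all_month_days())
print(len(dates), dates[59])  # 366 (2, 29)
```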
The scraped data itself isn't really of any practical use to me.

So learning this still takes patience.

This scraper is for learning only; no commercial use.

If you find that this article violates the robots protocol (https://baike.baidu.com/item/robots协议/2483797?fr=aladdin#2), please contact me and it will be removed promptly.
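
Python's standard library can check the robots protocol directly with `urllib.robotparser`. The minimal sketch below tests a URL against a set of robots.txt rules before scraping it. The rules in `SAMPLE_ROBOTS` are invented for illustration and are not the site's actual robots.txt, and `allowed` is a hypothetical helper.

```python
from urllib import robotparser


def allowed(robots_lines, url, user_agent='*'):
    """Return True if the robots.txt rules in robots_lines let user_agent fetch url."""
    rp = robotparser.RobotFileParser()
    rp.parse(robots_lines)  # parse() takes the file's lines; read() would download it
    return rp.can_fetch(user_agent, url)


# Made-up rules for illustration only, not the site's real robots.txt
SAMPLE_ROBOTS = [
    'User-agent: *',
    'Disallow: /private/',
]

print(allowed(SAMPLE_ROBOTS, 'http://www.todayonhistory.com/4/1/'))       # True
print(allowed(SAMPLE_ROBOTS, 'http://www.todayonhistory.com/private/x'))  # False
```

Against the live site, the parser would instead be pointed at `http://www.todayonhistory.com/robots.txt` with `set_url()` and loaded with `read()` before calling `can_fetch()`.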