Preface

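In everyday life we often face classification and prediction problems. A target variable may depend on many factors, and each factor carries a different weight: some matter more, some less. We usually try to predict the target from the factors we can observe.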

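House prices are a good example. They depend on many factors, such as the home's location, how convenient nearby transport is, and whether it is close to a school or hospital. Because price is a continuous value, predicting it is a regression problem. Below we use deep learning to predict Boston house prices.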

Exploring the data

The Boston housing dataset ships with `keras.datasets`, so we can load it directly (the first load downloads it from the internet).

In the Boston housing dataset, each house is described by thirteen features:

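- CRIM: per capita crime rate by town
- ZN: proportion of residential land zoned for lots over 25,000 sq. ft.
- INDUS: proportion of non-retail business acres per town
- CHAS: Charles River dummy variable (1 if the tract bounds the river, 0 otherwise)
- NOX: nitric oxides concentration (parts per 10 million)
- RM: average number of rooms per dwelling
- AGE: proportion of owner-occupied units built before 1940
- DIS: weighted distances to five Boston employment centres
- RAD: index of accessibility to radial highways
- TAX: full-value property-tax rate per $10,000
- PTRATIO: pupil-teacher ratio by town
- B: 1000(Bk − 0.63)², where Bk is the proportion of Black residents by town
- LSTAT: percentage of the population with lower socioeconomic status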

From these thirteen features we predict the target:

- MEDV: median value of owner-occupied homes, in thousands of dollars
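The arrays returned by `boston_housing.load_data()` are plain NumPy arrays with no column names, so it can help to keep the names alongside. A minimal sketch, assuming the columns follow the order listed above (the order documented for the original dataset); `FEATURE_NAMES` and `label_row` are hypothetical helpers, not part of Keras, and the example row is made up.

```python
# Column order of the 13 features, assumed to match the list above
# and the column order returned by boston_housing.load_data().
FEATURE_NAMES = [
    "CRIM", "ZN", "INDUS", "CHAS", "NOX", "RM", "AGE",
    "DIS", "RAD", "TAX", "PTRATIO", "B", "LSTAT",
]


def label_row(row):
    """Pair each value in a 13-element feature row with its column name."""
    if len(row) != len(FEATURE_NAMES):
        raise ValueError(f"expected {len(FEATURE_NAMES)} values, got {len(row)}")
    return dict(zip(FEATURE_NAMES, row))


# Illustrative row with made-up values (not taken from the dataset).
example = label_row([0.1, 0.0, 8.0, 0, 0.5, 6.2, 65.0, 4.0, 5, 300.0, 18.0, 390.0, 12.0])
print(example["RM"], example["TAX"])
```

With the real data, `label_row(x_train[0])` would label the first training house.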

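Loading the data

```python
from keras.datasets import boston_housing

# The first call downloads the dataset; later calls read the local cache.
# Returns 404 training samples and 102 test samples, each with 13 features.
(x_train, y_train), (x_test, y_test) = boston_housing.load_data()
```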

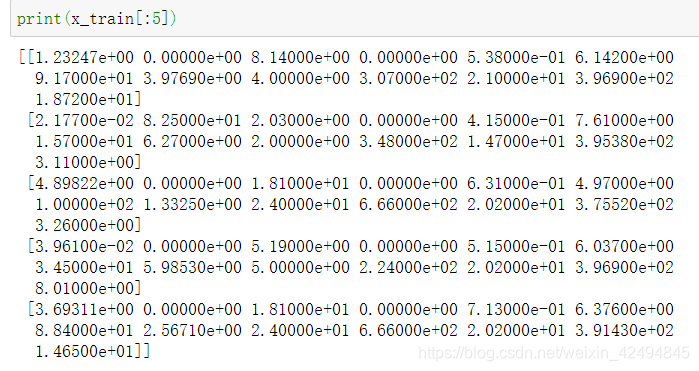

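Inspect the features of the first five houses (`x_train[:5]`):

Inspect the prices of the first five houses (`y_train[:5]`):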
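Applied to `x_train`, a per-feature summary shows how differently the features are scaled: NOX values are below 1, for example, while TAX values are in the hundreds. That difference is what the standardization step addresses. A small NumPy sketch; `column_summary` is a hypothetical helper, and the two-column demo array is made up for illustration.

```python
import numpy as np


def column_summary(x):
    """Return per-column (min, mean, max) arrays for a 2-D feature array."""
    x = np.asarray(x, dtype=float)
    return x.min(axis=0), x.mean(axis=0), x.max(axis=0)


# Made-up two-column example: a NOX-like column (< 1) next to a TAX-like column (hundreds).
demo = np.array([[0.45, 250.0],
                 [0.55, 400.0],
                 [0.65, 700.0]])
mins, means, maxs = column_summary(demo)
print("min: ", mins)
print("mean:", means)
print("max: ", maxs)
```

On the real data, `column_summary(x_train)` returns three arrays of length 13, one entry per feature.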

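Standardizing the features

The features live on very different scales, so we standardize each one. The scaler is fitted on the training set only, and the same transform is then applied to both the training and the test set.

```python
from sklearn.preprocessing import StandardScaler

# Learn each feature's mean and standard deviation from the training set only
standar = StandardScaler()
standar.fit(x_train)

# Apply the same transform (training statistics) to both sets
x_train = standar.transform(x_train)
x_test = standar.transform(x_test)
```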

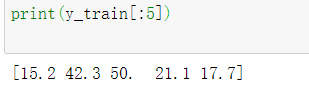

View the standardized feature data:

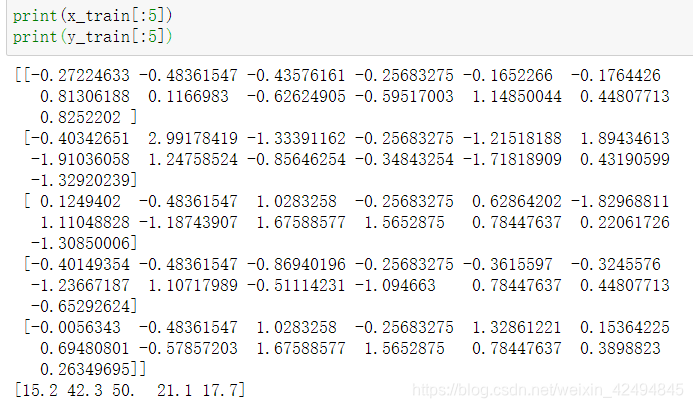
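Per feature, `StandardScaler` computes z = (x − μ) / σ, where μ and σ are the mean and (population) standard deviation learned from the training set. The sketch below reproduces that arithmetic with plain NumPy on synthetic random data (not the Boston dataset), to show that the training columns end up with mean 0 and standard deviation 1 while the test set reuses the training statistics.

```python
import numpy as np

rng = np.random.default_rng(0)
# Synthetic stand-ins for x_train / x_test (random data, not the Boston dataset).
x_train_demo = rng.normal(loc=[0.5, 400.0], scale=[0.1, 150.0], size=(100, 2))
x_test_demo = rng.normal(loc=[0.5, 400.0], scale=[0.1, 150.0], size=(20, 2))

# Statistics come from the training set only, as with standar.fit(x_train).
mu = x_train_demo.mean(axis=0)
sigma = x_train_demo.std(axis=0)  # population std (ddof=0), as StandardScaler uses

z_train = (x_train_demo - mu) / sigma
z_test = (x_test_demo - mu) / sigma  # reuse the training mean/std, never refit on test

print("train mean:", z_train.mean(axis=0).round(6))
print("train std: ", z_train.std(axis=0).round(6))
```

The standardized test columns will be close to, but not exactly, mean 0 and std 1, because they are scaled with the training statistics.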

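Defining the model

The network is fully connected: four hidden ReLU layers followed by a single output unit with no activation, as usual for regression.

```python
from keras.models import Sequential
from keras.layers import Dense

model = Sequential([
    Dense(64, activation="relu", input_shape=(13,), name="dense1"),  # 13 input features
    Dense(64, activation="relu", name="dense2"),
    Dense(32, activation="relu", name="dense3"),
    Dense(16, activation="relu", name="dense4"),
    Dense(1, name="dense5"),  # linear output: predicted MEDV (in $1000s)
])

# Adam optimizer, mean squared error loss, mean absolute error as an extra metric
model.compile(optimizer="adam", loss="mse", metrics=["mae"])
```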
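The `"mse"` loss and `"mae"` metric are simple averages over the samples. A NumPy sketch of both, on made-up prices, to show the arithmetic; these functions are illustrative re-implementations, not the Keras ones.

```python
import numpy as np


def mse(y_true, y_pred):
    """Mean squared error: the 'mse' loss passed to compile()."""
    y_true, y_pred = np.asarray(y_true, float), np.asarray(y_pred, float)
    return np.mean((y_true - y_pred) ** 2)


def mae(y_true, y_pred):
    """Mean absolute error: the 'mae' metric passed to compile()."""
    y_true, y_pred = np.asarray(y_true, float), np.asarray(y_pred, float)
    return np.mean(np.abs(y_true - y_pred))


# Made-up prices (in $1000s) and predictions, just to show the arithmetic.
y_true = [20.0, 30.0, 15.0]
y_pred = [21.0, 30.0, 13.0]
print(mse(y_true, y_pred))  # (1 + 0 + 4) / 3 ≈ 1.667
print(mae(y_true, y_pred))  # (1 + 0 + 2) / 3 = 1.0
```

MAE is in the same unit as the target ($1000s), while MSE is in squared units, which is why `mae` is the easier number to read in the training log.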

Inspecting the model
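`model.summary()` lists each layer with its parameter count. A `Dense` layer with n_in inputs and n_out units has n_in × n_out weights plus n_out biases, so the counts for the network above can be checked by hand. A short sketch of that arithmetic in plain Python; the layer widths are copied from the model definition.

```python
# Layer widths from the Sequential model above: 13 inputs -> 64 -> 64 -> 32 -> 16 -> 1.
layer_sizes = [13, 64, 64, 32, 16, 1]


def dense_param_counts(sizes):
    """Weights (n_in * n_out) plus biases (n_out) for each consecutive Dense layer."""
    return [n_in * n_out + n_out for n_in, n_out in zip(sizes[:-1], sizes[1:])]


counts = dense_param_counts(layer_sizes)
for i, c in enumerate(counts, start=1):
    print(f"dense{i}: {c}")
print("total:", sum(counts))
```

These should match the "Param #" column printed by `model.summary()` (896, 4160, 2080, 528 and 17, for 7,681 trainable parameters in total).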

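Training the model

```python
# Train for 50 epochs with mini-batches of 10 samples.
# Note: the test set doubles as validation data here, so val_loss/val_mae are test-set scores.
model.fit(x_train, y_train, batch_size=10, validation_data=(x_test, y_test), epochs=50)
```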

The training output is as follows:

```
Train on 404 samples, validate on 102 samples
Epoch 1/50
404/404 [==============================] - 0s 754us/step - loss: 446.0820 - mae: 18.6781 - val_loss: 225.8196 - val_mae: 12.6960
Epoch 2/50
404/404 [==============================] - 0s 212us/step - loss: 102.1950 - mae: 7.7815 - val_loss: 62.4751 - val_mae: 5.9926
Epoch 3/50
404/404 [==============================] - 0s 224us/step - loss: 40.3086 - mae: 4.6010 - val_loss: 31.5359 - val_mae: 4.4687
Epoch 4/50
404/404 [==============================] - 0s 225us/step - loss: 25.2902 - mae: 3.5951 - val_loss: 23.8020 - val_mae: 3.8547
Epoch 5/50
404/404 [==============================] - 0s 200us/step - loss: 19.6817 - mae: 3.0821 - val_loss: 21.9666 - val_mae: 3.4767
Epoch 6/50
404/404 [==============================] - 0s 207us/step - loss: 16.4824 - mae: 2.8223 - val_loss: 20.5904 - val_mae: 3.3732
Epoch 7/50
404/404 [==============================] - 0s 207us/step - loss: 14.0943 - mae: 2.5889 - val_loss: 22.1427 - val_mae: 3.4350
Epoch 8/50
404/404 [==============================] - 0s 219us/step - loss: 13.0704 - mae: 2.5115 - val_loss: 21.5184 - val_mae: 3.2644
Epoch 9/50
404/404 [==============================] - 0s 216us/step - loss: 11.6217 - mae: 2.4122 - val_loss: 20.3703 - val_mae: 3.0965
Epoch 10/50
404/404 [==============================] - 0s 202us/step - loss: 11.5130 - mae: 2.3841 - val_loss: 23.2939 - val_mae: 3.4119
Epoch 11/50
404/404 [==============================] - 0s 212us/step - loss: 10.6873 - mae: 2.2870 - val_loss: 22.7500 - val_mae: 3.2360
Epoch 12/50
404/404 [==============================] - 0s 213us/step - loss: 10.0525 - mae: 2.2667 - val_loss: 21.2671 - val_mae: 3.0888
Epoch 13/50
404/404 [==============================] - 0s 216us/step - loss: 10.3001 - mae: 2.3162 - val_loss: 21.8721 - val_mae: 3.2844
Epoch 14/50
404/404 [==============================] - 0s 197us/step - loss: 9.1856 - mae: 2.2060 - val_loss: 22.6189 - val_mae: 3.1511
Epoch 15/50
404/404 [==============================] - 0s 223us/step - loss: 9.9570 - mae: 2.2369 - val_loss: 20.1566 - val_mae: 2.8972
Epoch 16/50
404/404 [==============================] - 0s 225us/step - loss: 8.6319 - mae: 2.0927 - val_loss: 21.8881 - val_mae: 3.1486
Epoch 17/50
404/404 [==============================] - 0s 230us/step - loss: 9.1622 - mae: 2.1976 - val_loss: 22.3696 - val_mae: 3.1611
Epoch 18/50
404/404 [==============================] - 0s 223us/step - loss: 9.0051 - mae: 2.1883 - val_loss: 18.4506 - val_mae: 2.8980
Epoch 19/50
404/404 [==============================] - 0s 225us/step - loss: 9.0376 - mae: 2.1770 - val_loss: 19.8351 - val_mae: 2.9734
Epoch 20/50
404/404 [==============================] - 0s 211us/step - loss: 7.9863 - mae: 2.0248 - val_loss: 21.2624 - val_mae: 3.0110
Epoch 21/50
404/404 [==============================] - 0s 200us/step - loss: 8.8075 - mae: 2.1881 - val_loss: 19.8362 - val_mae: 2.8055
Epoch 22/50
404/404 [==============================] - 0s 203us/step - loss: 7.8023 - mae: 2.0390 - val_loss: 18.8211 - val_mae: 2.8119
Epoch 23/50
404/404 [==============================] - 0s 195us/step - loss: 8.0621 - mae: 2.0509 - val_loss: 22.4199 - val_mae: 3.0033
Epoch 24/50
404/404 [==============================] - 0s 180us/step - loss: 7.6770 - mae: 2.0011 - val_loss: 19.2629 - val_mae: 2.8199
Epoch 25/50
404/404 [==============================] - 0s 176us/step - loss: 7.1079 - mae: 1.9867 - val_loss: 20.9281 - val_mae: 2.9590
Epoch 26/50
404/404 [==============================] - 0s 197us/step - loss: 7.4668 - mae: 1.9623 - val_loss: 20.7744 - val_mae: 3.1235
Epoch 27/50
404/404 [==============================] - 0s 193us/step - loss: 7.2771 - mae: 1.9568 - val_loss: 17.6985 - val_mae: 2.6940
Epoch 28/50
404/404 [==============================] - 0s 177us/step - loss: 6.8489 - mae: 1.9287 - val_loss: 16.4952 - val_mae: 2.6697
Epoch 29/50
404/404 [==============================] - 0s 170us/step - loss: 7.0103 - mae: 1.9162 - val_loss: 18.3884 - val_mae: 2.8031
Epoch 30/50
404/404 [==============================] - 0s 170us/step - loss: 6.5881 - mae: 1.8746 - val_loss: 16.1180 - val_mae: 2.5900
Epoch 31/50
404/404 [==============================] - 0s 172us/step - loss: 6.6325 - mae: 1.8997 - val_loss: 17.6262 - val_mae: 2.7079
Epoch 32/50
404/404 [==============================] - 0s 172us/step - loss: 6.3085 - mae: 1.8548 - val_loss: 15.9697 - val_mae: 2.5988
Epoch 33/50
404/404 [==============================] - 0s 167us/step - loss: 6.3754 - mae: 1.8258 - val_loss: 14.9632 - val_mae: 2.5001
Epoch 34/50
404/404 [==============================] - 0s 165us/step - loss: 6.1542 - mae: 1.8103 - val_loss: 16.9977 - val_mae: 2.6670
Epoch 35/50
404/404 [==============================] - 0s 165us/step - loss: 6.0618 - mae: 1.8184 - val_loss: 15.5738 - val_mae: 2.6117
Epoch 36/50
404/404 [==============================] - 0s 168us/step - loss: 5.9557 - mae: 1.7907 - val_loss: 13.9688 - val_mae: 2.4483
Epoch 37/50
404/404 [==============================] - 0s 168us/step - loss: 5.6048 - mae: 1.7577 - val_loss: 18.0699 - val_mae: 2.8068
Epoch 38/50
404/404 [==============================] - 0s 173us/step - loss: 5.8400 - mae: 1.7863 - val_loss: 16.1574 - val_mae: 2.6192
Epoch 39/50
404/404 [==============================] - 0s 167us/step - loss: 5.7057 - mae: 1.7473 - val_loss: 14.3446 - val_mae: 2.5343
Epoch 40/50
404/404 [==============================] - 0s 167us/step - loss: 5.3335 - mae: 1.6728 - val_loss: 14.0671 - val_mae: 2.5500
Epoch 41/50
404/404 [==============================] - 0s 168us/step - loss: 5.4731 - mae: 1.7181 - val_loss: 13.6885 - val_mae: 2.4550
Epoch 42/50
404/404 [==============================] - 0s 168us/step - loss: 5.3591 - mae: 1.7077 - val_loss: 13.9039 - val_mae: 2.4630
Epoch 43/50
404/404 [==============================] - 0s 169us/step - loss: 5.1396 - mae: 1.6695 - val_loss: 14.8980 - val_mae: 2.5772
Epoch 44/50
404/404 [==============================] - 0s 170us/step - loss: 4.9212 - mae: 1.6375 - val_loss: 14.3894 - val_mae: 2.5253
Epoch 45/50
404/404 [==============================] - 0s 168us/step - loss: 4.8216 - mae: 1.6455 - val_loss: 15.0759 - val_mae: 2.7389
Epoch 46/50
404/404 [==============================] - 0s 170us/step - loss: 5.1621 - mae: 1.6988 - val_loss: 12.4840 - val_mae: 2.4142
Epoch 47/50
404/404 [==============================] - 0s 172us/step - loss: 4.9492 - mae: 1.6380 - val_loss: 14.9770 - val_mae: 2.6219
Epoch 48/50
404/404 [==============================] - 0s 167us/step - loss: 4.7333 - mae: 1.5874 - val_loss: 13.5644 - val_mae: 2.5041
Epoch 49/50
404/404 [==============================] - 0s 166us/step - loss: 4.7236 - mae: 1.5884 - val_loss: 12.6027 - val_mae: 2.4299
Epoch 50/50
404/404 [==============================] - 0s 167us/step - loss: 4.4954 - mae: 1.5606 - val_loss: 13.7365 - val_mae: 2.5092
<keras.callbacks.callbacks.History at 0x7f0922779a90>
```

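As training proceeds, the training loss (MSE) falls steadily, from 446.08 in epoch 1 to 4.50 in epoch 50. The validation loss drops quickly over the first few epochs and then fluctuates, ending at 13.74 (val_mae 2.51). The gap between the two suggests the model fits the training set noticeably better than unseen data.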
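Because the validation loss fluctuates, the last epoch is not necessarily the best one. The sketch below copies the `val_loss` column from the log above into a list, finds the epoch with the lowest value, and converts the final MSE to RMSE, which is back in thousands of dollars. With a live run you would read the same list from `history.history["val_loss"]` after `history = model.fit(...)`.

```python
import math

# val_loss (validation MSE) for epochs 1-50, copied from the training log above.
val_loss = [
    225.8196, 62.4751, 31.5359, 23.8020, 21.9666, 20.5904, 22.1427, 21.5184, 20.3703, 23.2939,
    22.7500, 21.2671, 21.8721, 22.6189, 20.1566, 21.8881, 22.3696, 18.4506, 19.8351, 21.2624,
    19.8362, 18.8211, 22.4199, 19.2629, 20.9281, 20.7744, 17.6985, 16.4952, 18.3884, 16.1180,
    17.6262, 15.9697, 14.9632, 16.9977, 15.5738, 13.9688, 18.0699, 16.1574, 14.3446, 14.0671,
    13.6885, 13.9039, 14.8980, 14.3894, 15.0759, 12.4840, 14.9770, 13.5644, 12.6027, 13.7365,
]

# Index of the smallest validation loss, converted to a 1-based epoch number.
best_epoch = min(range(len(val_loss)), key=val_loss.__getitem__) + 1
print("best epoch:", best_epoch, "val_loss:", val_loss[best_epoch - 1])

# MSE is in squared $1000s; its square root (RMSE) is back in $1000s.
print("final-epoch RMSE (in $1000s): %.2f" % math.sqrt(val_loss[-1]))
```

Keras can automate this with `keras.callbacks.EarlyStopping(monitor="val_loss", patience=..., restore_best_weights=True)`. Note, though, that here the validation data is the test set, so choosing an epoch this way tunes on the test set; holding out part of the training data instead (for example `model.fit(..., validation_split=0.2)`) keeps the test score unbiased.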

Predicting prices

Let's predict the prices of the first five houses in the test set.


```python
import numpy as np

# Standardized features of five test houses (13 columns each). These appear to be the
# first five rows of the scaled x_test, so model.predict(x_test[:5]) should be equivalent.
test = np.array([
    [ 1.55369355e+00, -4.83615471e-01,  1.02832580e+00, -2.56832748e-01,
      1.03838067e+00,  2.35458154e-01,  1.11048828e+00, -9.39769356e-01,
      1.67588577e+00,  1.56528750e+00,  7.84476371e-01, -3.48459553e+00,
      2.25092074e+00],
    [-3.92426750e-01, -4.83615471e-01, -1.60877730e-01, -2.56832748e-01,
     -8.84006055e-02, -4.99474361e-01,  8.56063288e-01, -6.83962347e-01,
     -3.96035570e-01,  1.57078413e-01, -3.07595832e-01,  4.27331262e-01,
      4.78801191e-01],
    [-3.99829269e-01, -4.83615471e-01, -8.69401957e-01, -2.56832748e-01,
     -3.61559702e-01, -3.97909790e-01, -8.46075750e-01,  5.28642770e-01,
     -5.11142311e-01, -1.09466300e+00,  7.84476371e-01,  4.48077135e-01,
     -4.14159358e-01],
    [-2.67805040e-01, -4.83615471e-01,  1.24588095e+00,  3.89358447e+00,
      4.06700257e-01, -2.40957473e-02,  8.45312937e-01, -9.57671408e-01,
     -5.11142311e-01, -1.74432263e-02, -1.71818909e+00, -1.68766802e-01,
     -9.99345252e-01],
    [-3.98037149e-01, -4.83615471e-01, -9.72299666e-01, -2.56832748e-01,
     -9.24950339e-01, -2.06065602e-01, -4.37562381e-01,  3.61454166e-03,
     -7.41355794e-01, -9.56249284e-01,  1.09252273e-02,  4.29459044e-01,
     -5.93579561e-01],
])

# Returns an array of shape (5, 1): one predicted MEDV (in $1000s) per house
model.predict(test)
```

The predictions are:

```
array([[ 9.144175],
       [16.844572],
       [20.86806 ],
       [30.631687],
       [23.005634]], dtype=float32)
```

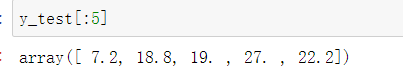

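The actual prices of these five houses (`y_test[:5]`) are as follows:

The predictions are fairly close to the actual prices. Over the whole test set, the final val_mae of 2.51 means the predictions are off by roughly $2,500 on average, since prices are in thousands of dollars.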
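`model.predict` returns a column of shape `(n_samples, 1)` in units of $1000s. A small NumPy sketch that flattens the five predictions printed above and converts them to dollar amounts; the array is copied from the output, nothing else is assumed.

```python
import numpy as np

# The prediction array printed above: model.predict returns shape (n_samples, 1).
preds = np.array([[9.144175], [16.844572], [20.86806], [30.631687], [23.005634]],
                 dtype=np.float32)

# Flatten to 1-D, then convert from thousands of dollars to whole dollars.
prices_usd = np.rint(preds.ravel().astype(np.float64) * 1000).astype(int)
for i, p in enumerate(prices_usd, start=1):
    print(f"house {i}: ${int(p):,}")
```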

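Saving the model

```python
# Save architecture, weights and optimizer state to an HDF5 file (requires h5py;
# newer Keras versions prefer the native ".keras" format)
model.save("boston_model.h5")
```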
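The saved model expects standardized inputs, so predicting on a new, unscaled house later also needs the training mean and standard deviation. A minimal NumPy sketch, assuming you persist the fitted scaler's `mean_` and `scale_` attributes; the file name `boston_scaler.npz` is hypothetical, and the three-column numbers are made up for brevity (the real arrays have 13 entries). Pickling the whole scaler, for example with `joblib.dump`, is another common option.

```python
import os
import tempfile

import numpy as np

# Made-up stand-ins for the fitted scaler's statistics; with the real scaler these
# would be standar.mean_ and standar.scale_ (arrays of length 13).
mean_ = np.array([3.7, 11.5, 11.1])
scale_ = np.array([9.2, 23.7, 6.8])

path = os.path.join(tempfile.mkdtemp(), "boston_scaler.npz")  # hypothetical file name
np.savez(path, mean=mean_, scale=scale_)

# Later, e.g. in another process: reload the statistics saved next to the model.
stats = np.load(path)
raw_house = np.array([[0.5, 20.0, 8.0]])  # made-up raw feature values
standardized = (raw_house - stats["mean"]) / stats["scale"]
print(standardized)
```

The reloaded model, for example `keras.models.load_model("boston_model.h5")`, can then be given `standardized` instead of the raw values.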

That wraps up today's deep learning example. I hope you found it useful and can apply it to your own problems.