Paper Reading @ 23/50

-- original : https://arxiv.org/abs/1812.06203

Formatted Citation:

@misc{dai2018tan,

title={TAN: Temporal Aggregation Network for Dense Multi-label Action Recognition},

author={Xiyang Dai and Bharat Singh and Joe Yue-Hei Ng and Larry S. Davis},

year={2018},

eprint={1812.06203},

archivePrefix={arXiv},

primaryClass={cs.CV}

}

Abstract

Temporal Aggregation Network TAN decompose 3D convolutions into spatial and temporal aggregation blocks.

Reduce complexity : Only apply temporal aggregation blocks once after each spatial down-sampling layer in the network.

Dilated convolutions at different resolutions of the network helps in aggregating multi-scale spatial-temporal information.

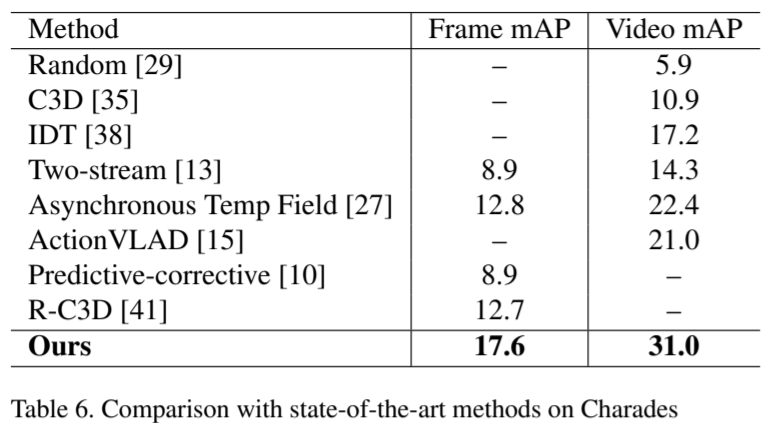

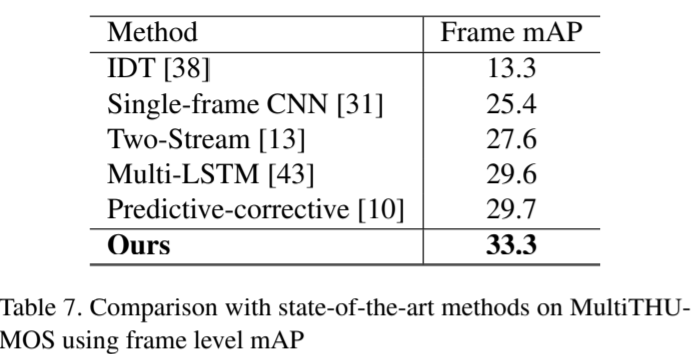

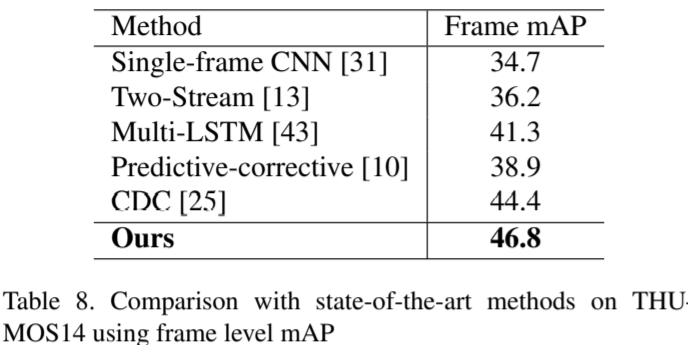

TAN model is well suited for dense multi-label action recognition.

Difficulties:

- In a video, multiple frames aggregated together represent a semantic label which caused more computation compared to image recognition.

- Actions can span multiple temporal and spatial scales in videos.

Related Work

- Action recognition

- Two-stream network comprising two parallel

CNNs, one trained onRGBimages and another trained on stacked optical flow fields. C3Doperate on a sequence of image and perform3Dconvolution (\(3 \times 3 \times 3\)).

- Two-stream network comprising two parallel

- Multi-label prediction

- Temporal action localization

- Making dense predictions

- Predict temporal boundaries

Model

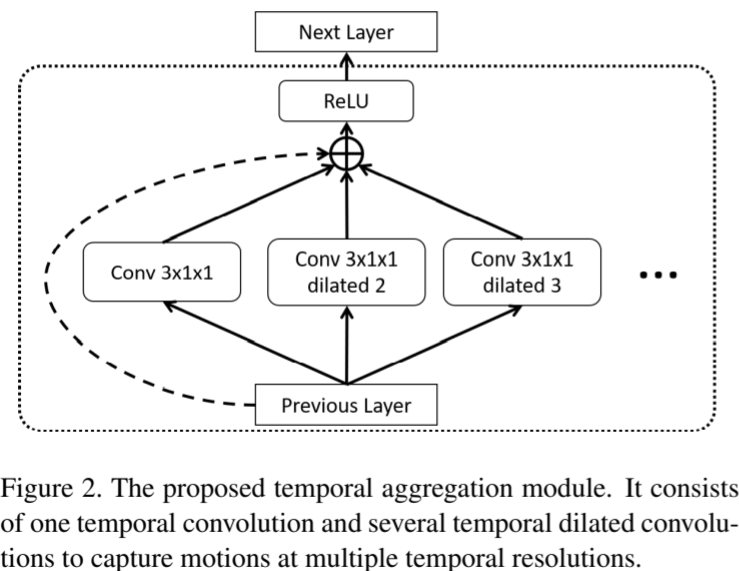

- Proposed Temporal Aggregation Module

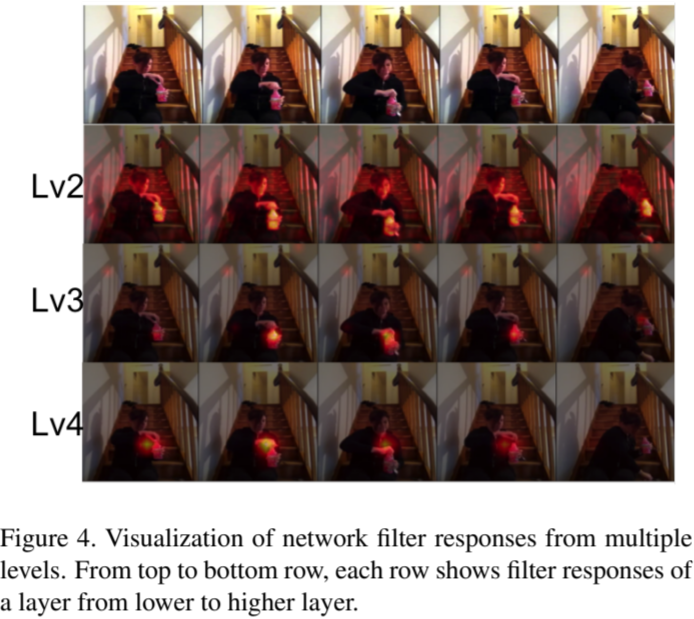

- A temporal aggregation module combines multiple convolutions with different dilation factors (in the temporal domain) and stacks them across the entire network.

- Temporal convolution is a simple

1Ddilated convolution - Add a residual identity connection from previous layers

- Spatial information

- The bottleneck structure from residual networks.

- A bottleneck block: \(1\times1 \to 3\times3 \to 1 \times 1\). 3 convolution layers.

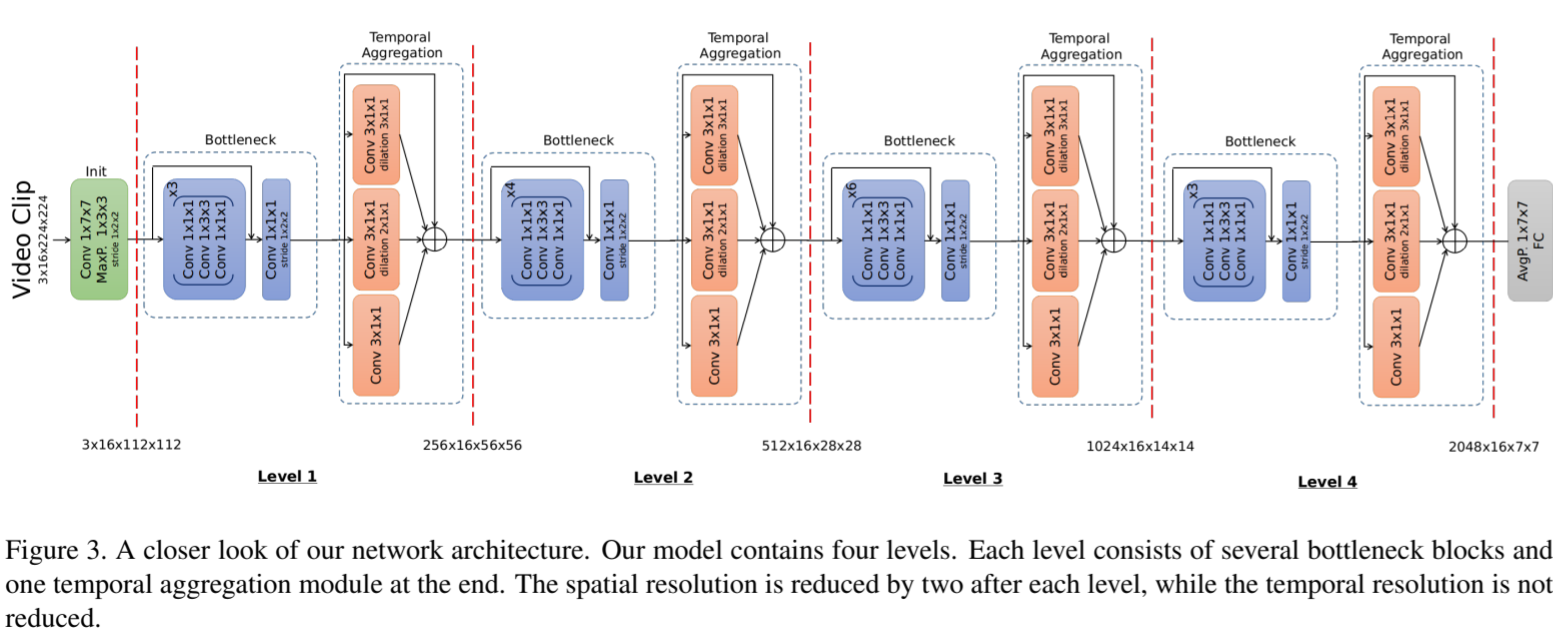

- Full Model

- Spatial and temporal blocks stacking

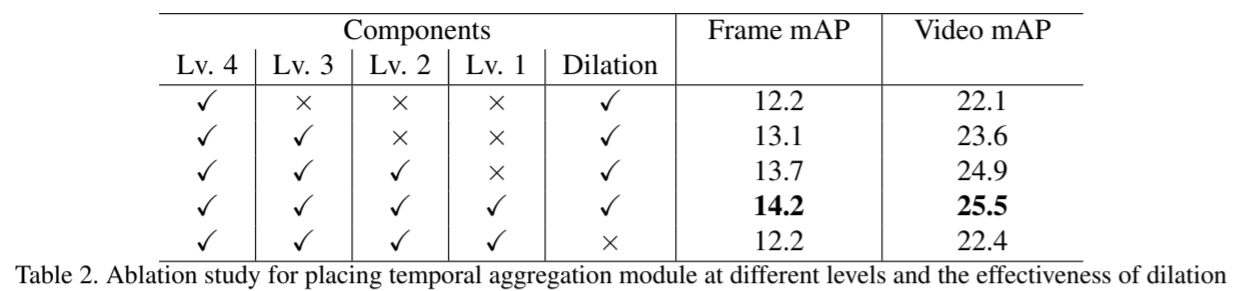

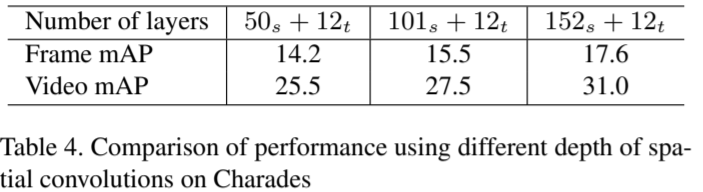

- The final architecture consists of four levels of bottleneck blocks and temporal aggregation blocks.

- One temporal aggregation block follows after multiple bottleneck blocks

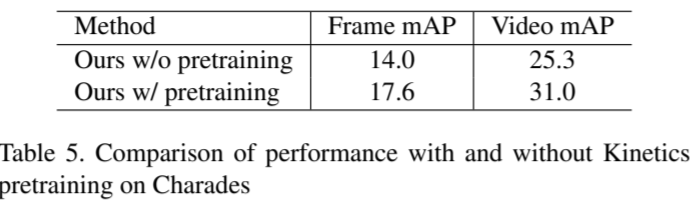

- The weights of bottleneck blocks can be initialized using pre-trained ImageNet models.

- Spatial resolution is reduced : 1. initial convolution and pooling layers; 2. max pooling after every level

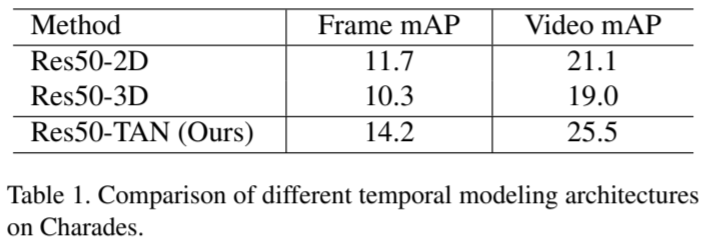

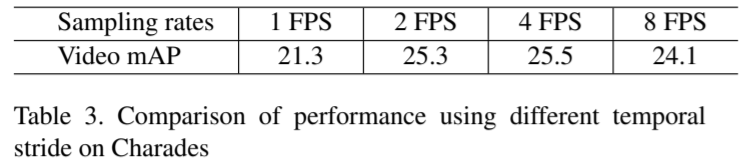

Experiment