爬取网站 http://www.fishc.com

import urllib.request

response = urllib.request.urlopen("http://www.fishc.com")

html = response.read() response是一个对象,要把对象读出来

print(html) 打印html

html = html.decode("utf-8") 给html重新编码

print(html)

爬有道翻译

import urllib.request

import urllib.parse

url = "http://fanyi.youdao.com/translate?smartresult=dict&smartresult=rule"

data = {}

data['type'] = 'AUTO'data['i'] = "I love fishc.com"

data['doctype'] = 'json'

data['version'] = '2.1'

data['keyfrom'] = 'fanyi.web'

data['typoResult'] = 'false'

data = urllib.parse.urlencode(data).encode("utf-8")

response = urllib.request.urlopen(url,data)

html = response.read().decode('utf-8')

print(html)

结果如下:

{"type":"EN2ZH_CN","errorCode":0,"elapsedTime":0,"translateResult":[[{"src":"I love fishc.com","tgt":"我爱fishc.com"}]]}

这个结果是 JSON格式的字符串,所以需要解析这个JSON格式的字符串

import urllib.request

import urllib.parse

import json

content = input("请输入需要翻译的内容")

url = "http://fanyi.youdao.com/translate?smartresult=dict&smartresult=rule"

data = {}

data['type'] = 'AUTO'

data['i'] = content

data['doctype'] = 'json'

data['version'] = '2.1'

data['keyfrom'] = 'fanyi.web'

data['typoResult'] = 'false'

data = urllib.parse.urlencode(data).encode("utf-8")

response = urllib.request.urlopen(url,data)

html = response.read().decode('utf-8')

target = json.loads(html)

print("翻译结果:%s" % (target['translateResult'][0][0]['tgt'])

隐藏

Request 有个headers参数,通过设置这个参数,你可以伪造成浏览器访问

设置这个参数有两种途径

1.实例化Request对象的时候将headers参数传进去

2.通过add_header()方法往Request对象添加headers

第一种:

import urllib.request

import urllib.parse

import json

content = input("请输入需要翻译的内容")

url = "http://fanyi.youdao.com/translate?smartresult=dict&smartresult=rule"

head = {} 要求headers必须是字典的形式

head['User-Agent'] = "Mozilla/5.0 (Windows NT 6.3; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/62.0.3202.75 Safari/537.36"

data = {}

data['type'] = 'AUTO'

data['i'] = content

data['doctype'] = 'json'

data['version'] = '2.1'

data['keyfrom'] = 'fanyi.web'

data['typoResult'] = 'false'

data = urllib.parse.urlencode(data).encode("utf-8")

req = urllib.request.Request(url,data,head) 实例化Request对象 的同时 将head参数加进去

response = urllib.request.urlopen(url,data)

html = response.read().decode('utf-8')

target = json.loads(html)

print("翻译结果:%s" % (target['translateResult'][0][0]['tgt']))

第二种:

通过add_header()方法往Request对象添加headers

req = urllib.request.Request(url,data)

req.add_header('User-Agent','Mozilla/5.0 (Windows NT 6.3; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/62.0.3202.75 Safari/537.36')

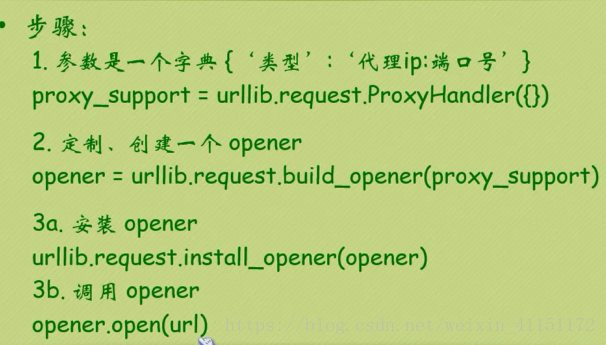

代理

import urllib.request

import random

url = 'http://www.whatismyip.com.tw/'

iplist = ['117.87.177.16:9000','115.193.98.250:9000','117.87.176.140:9000']

proxy_support = urllib.request.ProxyHandler({'http':random.choice(iplist)})

opener = urllib.request.build_opener(proxy_support)

urllib.request.install_opener(opener)

response = urllib.request.urlopen(url)

html = response.read().decode('utf-8')

print(html)